Ill Fares the Land

Table of Contents

CHAPTER ONE - The Way We Live Now

CHAPTER TWO - The World We Have Lost

CHAPTER THREE - The Unbearable Lightness of Politics

CHAPTER FOUR - Goodbye to All That?

CHAPTER FIVE - What Is to Be Done?

CHAPTER SIX - The Shape of Things to Come

ALSO BY TONY JUDT

Reappraisals: Reflections on the Forgotten Twentieth Century

Postwar: A History of Europe Since 1945

The Politics of Retribution in Europe (with Jan Gross and István Deák)

The Burden of Responsibility: Blum, Camus, Aron, and the French Twentieth Century

Language, Nation and State: Identity Politics in a Multilingual Age (edited with Denis Lacorne)

A Grand Illusion?: An Essay on Europe

Past Imperfect: French Intellectuals, 1944-1956

Marxism and the French Left: Studies on Labour and Politics in France 1830-1982

Resistance and Revolution in Mediterranean Europe 1939-1948

Socialism in Provence 1871-1914: A Study in the Origins of the Modern French Left

La reconstruction du Parti Socialiste 1921-1926

THE PENGUIN PRESS

Published by the Penguin Group

Penguin Group (USA) Inc., 375 Hudson Street, New York, New York 10014, U.S.A.

Penguin Group (Canada), 90 Eglinton Avenue East, Suite 700, Toronto, Ontario, Canada M4P 2Y3 (a division of Pearson Penguin Canada Inc.) • Penguin Books Ltd, 80 Strand, London WC2R 0RL, England • Penguin Ireland, 25 St. Stephen’s Green, Dublin 2, Ireland (a division of Penguin Books Ltd) • Penguin Books Australia Ltd, 250 Camberwell Road, Camberwell, Victoria 3124, Australia (a division of Pearson Australia Group Pty Ltd) • Penguin Books India Pvt Ltd, 11 Community Centre, Panchsheel Park, New Delhi - 110 017, India • Penguin Group (NZ), 67 Apollo Drive, Rosedale, North Shore 0632, New Zealand (a division of Pearson New Zealand Ltd) • Penguin Books (South Africa) (Pty) Ltd, 24 Sturdee Avenue, Rosebank, Johannesburg 2196, South Africa

Penguin Books Ltd, Registered Offices: 80 Strand, London WC2R 0RL, England

First published in 2010 by The Penguin Press, a member of Penguin Group (USA) Inc.

Copyright © Tony Judt, 2010

All rights reserved

eISBN: 978-1-101-22370-3

Without limiting the rights under copyright reserved above, no part of this publication may be reproduced, stored in or introduced into a retrieval system, or transmitted, in any form or by any means (electronic, mechanical, photocopying, recording or otherwise), without the prior written permission of both the copyright owner and the above publisher of this book.

The scanning, uploading, and distribution of this book via the Internet or via any other means without the permission of the publisher is illegal and punishable by law. Please purchase only authorized electronic editions and do not participate in or encourage electronic piracy of copyrightable materials. Your support of the author’s rights is appreciated.

For Daniel & Nicholas

Ill fares the land, to hastening ills a prey,

Where wealth accumulates, and men decay.

Oliver Goldsmith, The Deserted Village (1770)

ACKNOWLEDGMENTS

Owing to the unusual circumstances in which this book was written, I have incurred numerous debts which it is a pleasure to record. My former students Zara Burdett and Casey Selwyn served indefatigably as research assistants and transcribers, faithfully recording my thoughts, notes and readings over many months. Clémence Boulouque helped me find and incorporate recent material from the media, and responded untiringly to my inquiries and demands. She was also a superb editor.

But my greatest debt is to Eugene Rusyn. He typed the entire book manuscript in less than eight weeks, taking it down verbatim from my rapid-fire and occasionally indistinct dictation for many hours a day, sometimes working around the clock. He was responsible for locating many of the more arcane citations; but above all, he and I collaborated intimately on the editing of the text—for substance, style and coherence. It is the simple truth that I could not have written this book without him and it is all the better for his contribution.

I am indebted to my friends and staff at the Remarque Institute—Professor Katherine Fleming, Jair Kessler, Jennifer Ren and Maya Jex—who have uncomplainingly adapted to the changes brought about by my deteriorating health. Without their cooperation I would not have had the time or resources to devote to this book. Thanks to my colleagues in the administration of New York University—Chancellor (and former Dean) Richard Foley and Administrative Dean Joe Juliano above all—I have received all the support and encouragement that anyone could hope for.

Not for the first time, I am beholden to Robert Silvers. It was at his suggestion that the lecture I gave on social democracy at NYU in the Fall of 2009 was first transcribed (thanks to the staff of the New York Review) and then published in their pages: giving rise to a wholly unanticipated chorus of demands for its expansion into a little book. Sarah Chalfant and Scott Moyers of The Wylie Agency vigorously encouraged this suggestion and The Penguin Press in New York and London was good enough to welcome the project. I hope that they will all be pleased with the result.

In the writing of this book I have benefited hugely from the kindness of strangers, who have written to offer suggestions and criticisms of my writing on these subjects over the years. I could not possibly thank everyone in person, but I hope that for all its inevitable shortcomings the work itself will stand as a token of appreciation.

But my greatest debt is to my family. The burden that I have placed upon them over the past year appears to me quite intolerable, yet they have borne it so lightly that I was able to set aside my concerns and devote myself these past months almost entirely to the business of thinking and writing. Solipsism is the characteristic failing of the professional writer. But in my own case I am especially conscious of the self-indulgence: Jennifer Homans, my wife, has been completing her manuscript on the history of classical ballet while caring for me. My writing has benefited enormously from her love and generosity, now as in years past. That her own book will be published later this year is a tribute to her remarkable character.

My children—Daniel and Nicholas—lead busy adolescent lives. Nevertheless, they have found time to discuss with me the many themes interwoven into these pages. Indeed, it was thanks to our conversations across the dinner table that I first came fully to appreciate just how much today’s youth care about the world that we have bequeathed them—and how inadequately we have furnished them with the means to improve it. This book is dedicated to them.

New York,

February 2010

INTRODUCTION

A Guide for the Perplexed

“I cannot help fearing that men may reach a point where they look on every new theory as a danger, every innovation as a toilsome trouble, every social advance as a first step toward revolution, and that they may absolutely refuse to move at all.”

—ALEXIS DE TOCQUEVILLE

Something is profoundly wrong with the way we live today. For thirty years we have made a virtue out of the pursuit of material self-interest: indeed, this very pursuit now constitutes whatever remains of our sense of collective purpose. We know what things cost but have no idea what they are worth. We no longer ask of a judicial ruling or a legislative act: is it good? Is it fair? Is it just? Is it right? Will it help bring about a better society or a better world? Those used to be the political questions, even if they invited no easy answers. We must learn once again to pose them.

The materialistic and selfish quality of contemporary life is not inherent in the human condition. Much of what appears ‘natural’ today dates from the 1980s: the obsession with wealth creation, the cult of privatization and the private sector, the growing disparities of rich and poor. And above all, the rhetoric which accompanies these: uncritical admiration for unfettered markets, disdain for the public sector, the delusion of endless growth.

We cannot go on living like this. The little crash of 2008 was a reminder that unregulated capitalism is its own worst enemy: sooner or later it must fall prey to its own excesses and turn again to the state for rescue. But if we do no more than pick up the pieces and carry on as before, we can look forward to greater upheavals in years to come.

And yet we seem unable to conceive of alternatives. This too is something new. Until quite recently, public life in liberal societies was conducted in the shadow of a debate between defenders of ‘capitalism’ and its critics: usually identified with one or another form of ‘socialism’. By the 1970s this debate had lost much of its meaning for both sides; all the same, the ‘Left-Right’ distinction served a useful purpose. It provided a peg on which to hang critical commentary about contemporary affairs.

On the Left, Marxism was attractive to generations of young people if only because it offered a way to take one’s distance from the status quo. Much the same was true of classical conservatism: a well-grounded distaste for over-hasty change gave a home to those reluctant to abandon long-established routines. Today, neither Left nor Right can find their footing.

For thirty years students have been complaining to me that ‘it was easy for you’: your generation had ideals and ideas, you believed in something, you were able to change things. ‘We’ (the children of the ’80s, the ’90s, the ‘aughts’) have nothing. In many respects my students are right. It was easy for us—just as it was easy, at least in this sense, for the generations who came before us. The last time a cohort of young people expressed comparable frustration at the emptiness of their lives and the dispiriting purposelessness of their world was in the 1920s: it is not by chance that historians speak of a ‘lost generation’.

If young people today are at a loss, it is not for want of targets. Any conversation with students or schoolchildren will produce a startling checklist of anxieties. Indeed, the rising generation is acutely worried about the world it is to inherit. But accompanying these fears there is a general sentiment of frustration: ‘we’ know something is wrong and there are many things we don’t like. But what can we believe in? What should we do?

This is an ironic reversal of the attitudes of an earlier age. Back in the era of self-assured radical dogma, young people were far from uncertain. The characteristic tone of the ’60s was that of overweening confidence: we knew just how to fix the world. It was this note of unmerited arrogance that partly accounts for the reactionary backlash that followed; if the Left is to recover its fortunes, some modesty will be in order. All the same, you must be able to name a problem if you wish to solve it.

This book was written for young people on both sides of the Atlantic. American readers may be struck by the frequent references to social democracy. Here in the United States, such references are uncommon. When journalists and commentators advocate public expenditure on social objectives, they are more likely to describe themselves—and be described by their critics—as ‘liberals’. But this is confusing. Liberal is a venerable and respectable label and we should all be proud to wear it. But like a well-designed outer coat, it conceals more than it displays.

A liberal is someone who opposes interference in the affairs of others: who is tolerant of dissenting attitudes and unconventional behavior. Liberals have historically favored keeping other people out of our lives, leaving individuals the maximum space in which to live and flourish as they choose. In their extreme form, such attitudes are associated today with self-styled ‘libertarians’, but the term is largely redundant. Most genuine liberals remain disposed to leave other people alone.

Social democrats, on the other hand, are something of a hybrid. They share with liberals a commitment to cultural and religious tolerance. But in public policy social democrats believe in the possibility and virtue of collective action for the collective good. Like most liberals, social democrats favor progressive taxation in order to pay for public services and other social goods that individuals cannot provide themselves; but whereas many liberals might see such taxation or public provision as a necessary evil, a social democratic vision of the good society entails from the outset a greater role for the state and the public sector.

Understandably, social democracy is a hard sell in the United States. One of my goals is to suggest that government can play an enhanced role in our lives without threatening our liberties—and to argue that, since the state is going to be with us for the foreseeable future, we would do well to think about what sort of a state we want. In any case, much that was best in American legislation and social policy over the course of the 20th century—and that we are now urged to dismantle in the name of efficiency and “less government”—corresponds in practice to what Europeans have called ‘social democracy’. Our problem is not what to do; it is how to talk about it.

The European dilemma is somewhat different. Many European countries have long practiced something resembling social democracy: but they have forgotten how to preach it. Social democrats today are defensive and apologetic. Critics who claim that the European model is too expensive or economically inefficient have been allowed to pass unchallenged. And yet, the welfare state is as popular as ever with its beneficiaries: nowhere in Europe is there a constituency for abolishing public health services, ending free or subsidized education or reducing public provision of transport and other essential services.

I want to challenge conventional wisdom on both sides of the Atlantic. To be sure, the target has softened considerably. In the early years of this century, the ‘Washington consensus’ held the field. Everywhere you went there was an economist or ‘expert’ expounding the virtues of deregulation, the minimal state and low taxation. Anything, it seemed, that the public sector could do private individuals could do better.

The Washington doctrine was everywhere greeted by ideological cheerleaders: from the profiteers of the ‘Irish miracle’ (the property-bubble boom of the ‘Celtic tiger’) to the doctrinaire ultra-capitalists of former Communist Europe. Even ‘old Europeans’ were swept up in the wake. The EU’s free-market project—the so-called ‘Lisbon agenda’; the enthusiastic privatization plans of the French and German governments: all bore witness to what its French critics described as the new ‘pensée unique’.

Today there has been a partial awakening. To avert national bankruptcies and wholesale banking collapse, governments and central bankers have performed remarkable policy reversals, liberally dispersing public money in pursuit of economic stability and taking failed companies into public control without a second thought. A striking number of free market economists, worshippers at the feet of Milton Friedman and his Chicago colleagues, have lined up to don sackcloth and ashes and swear allegiance to the memory of John Maynard Keynes.

This is all very gratifying. But it hardly constitutes an intellectual revolution. Quite the contrary: as the response of the Obama administration suggests, the reversion to Keynesian economics is but a tactical retreat. Much the same may be said of New Labour, as committed as ever to the private sector in general and the London financial markets in particular. To be sure, one effect of the crisis has been to dampen the ardor of continental Europeans for the ‘Anglo-American model’; but the chief beneficiaries have been those same center-right parties once so keen to emulate Washington.

In short, the practical need for strong states and interventionist governments is beyond dispute. But no one is ‘re-thinking’ the state. There remains a marked reluctance to defend the public sector on grounds of collective interest or principle. It is striking that in a series of European elections following the financial meltdown, social democratic parties consistently did badly; notwithstanding the collapse of the market, they proved conspicuously unable to rise to the occasion.

If it is to be taken seriously again, the Left must find its voice. There is much to be angry about: growing inequalities of wealth and opportunity; injustices of class and caste; economic exploitation at home and abroad; corruption and money and privilege occluding the arteries of democracy. But it will no longer suffice to identify the shortcomings of ‘the system’ and then retreat, Pilate-like: indifferent to consequences. The irresponsible rhetorical grandstanding of decades past did not serve the Left well.

We have entered an age of insecurity—economic insecurity, physical insecurity, political insecurity. The fact that we are largely unaware of this is small comfort: few in 1914 predicted the utter collapse of their world and the economic and political catastrophes that followed. Insecurity breeds fear. And fear—fear of change, fear of decline, fear of strangers and an unfamiliar world—is corroding the trust and interdependence on which civil societies rest.

All change is disruptive. We have seen that the specter of terrorism is enough to cast stable democracies into turmoil. Climate change will have even more dramatic consequences. Men and women will be thrown back upon the resources of the state. They will look to their political leaders and representatives to protect them: open societies will once again be urged to close in upon themselves, sacrificing freedom for ‘security’. The choice will no longer be between the state and the market, but between two sorts of state. It is thus incumbent upon us to re-conceive the role of government. If we do not, others will.

The arguments that follow were first outlined in an essay I contributed to the New York Review of Books in December 2009. Following the publication of that essay, I received many interesting comments and suggestions. Among them was a thoughtful critique from a young colleague. “What is most striking”, she wrote, “about what you say is not so much the substance but the form: you speak of being angry at our political quiescence; you write of the need to dissent from our economically-driven way of thinking, the urgency of a return to an ethically informed public conversation. No one talks like this any more.” Hence this book.

CHAPTER ONE

The Way We Live Now

“To see what is in front of one’s nose needs a constant struggle.”

—GEORGE ORWELL

All around us we see a level of individual wealth unequaled since the early years of the 20th century. Conspicuous consumption of redundant consumer goods—houses, jewelry, cars, clothing, tech toys—has greatly expanded over the past generation. In the US, the UK and a handful of other countries, financial transactions have displaced the production of goods or services as the source of private fortunes, distorting the value we place upon different kinds of economic activity. The wealthy, like the poor, have always been with us. But relative to everyone else, they are today wealthier and more conspicuous than at any time in living memory. Private privilege is easy to understand and describe. It is rather harder to convey the depths of public squalor into which we have fallen.

PRIVATE AFFLUENCE, PUBLIC SQUALOR

“No society can surely be flourishing and happy, of which the far greater part of the members are poor and miserable.”

—ADAM SMITH

Poverty is an abstraction, even for the poor. But the symptoms of collective impoverishment are all about us. Broken highways, bankrupt cities, collapsing bridges, failed schools, the unemployed, the underpaid and the uninsured: all suggest a collective failure of will. These shortcomings are so endemic that we no longer know how to talk about what is wrong, much less set about repairing it. And yet something is seriously amiss. Even as the US budgets tens of billions of dollars for a futile military campaign in Afghanistan, we fret nervously at the implications of any increase in public spending on social services or infrastructure.

To understand the depths to which we have fallen, we must first appreciate the scale of the changes that have overtaken us. From the late 19th century until the 1970s, the advanced societies of the West were all becoming less unequal. Thanks to progressive taxation, government subsidies for the poor, the provision of social services and guarantees against acute misfortune, modern democracies were shedding extremes of wealth and poverty.

To be sure, great differences remained. The essentially egalitarian countries of Scandinavia and the considerably more diverse societies of southern Europe remained distinctive; and the English-speaking lands of the Atlantic world and the British Empire continued to reflect long-standing class distinctions. But each in its own way was affected by the growing intolerance of immoderate inequality, initiating public provision to compensate for private inadequacy.

Over the past thirty years we have thrown all this away. To be sure, “we” varies with country. The greatest extremes of private privilege and public indifference have resurfaced in the US and the UK: epicenters of enthusiasm for deregulated market capitalism. Although countries as far apart as New Zealand and Denmark, France and Brazil have expressed periodic interest, none has matched Britain or the United States in their unwavering thirty-year commitment to the unraveling of decades of social legislation and economic oversight.

In 2005, 21.2 percent of US national income accrued to just 1 percent of earners. Contrast 1968, when the CEO of General Motors took home, in pay and benefits, about sixty-six times the amount paid to a typical GM worker. Today the CEO of Wal-Mart earns nine hundred times the wages of his average employee. Indeed, the wealth of the Wal-Mart founders’ family that year was estimated at about the same ($90 billion) as that of the bottom 40 percent of the US population: 120 million people.

The UK too is now more unequal—in incomes, wealth, health, education and life chances—than at any time since the 1920s. There are more poor children in the UK than in any other country of the European Union. Since 1973, inequality in take-home pay increased more in the UK than anywhere except the US. Most of the new jobs created in Britain in the years 1977-2007 were either at the very high or the very low end of the pay scale.

The consequences are clear. There has been a collapse in intergenerational mobility: in contrast to their parents and grandparents, children today in the UK as in the US have very little expectation of improving upon the condition into which they were born. The poor stay poor. Economic disadvantage for the overwhelming majority translates into ill health, missed educational opportunity and—increasingly—the familiar symptoms of depression: alcoholism, obesity, gambling and minor criminality. The unemployed or underemployed lose such skills as they have acquired and become chronically superfluous to the economy. Anxiety and stress, not to mention illness and early death, frequently follow.

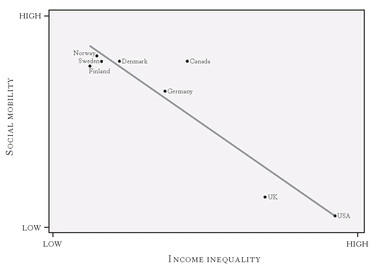

Social Mobility and Inequality.

(From Wilkinson & Pickett, The Spirit Level, Figure 12.1, p. 160)

Income disparity exacerbates the problems. Thus the incidence of mental illness correlates closely to income in the US and the UK, whereas the two indices are quite unrelated in all continental European countries. Even trust, the faith we have in our fellow citizens, corresponds negatively with differences in income: between 1983 and 2001, mistrustfulness increased markedly in the US, the UK and Ireland—three countries in which the dogma of unregulated individual self-interest was most assiduously applied to public policy. In no other country was a comparable increase in mutual mistrust to be found.

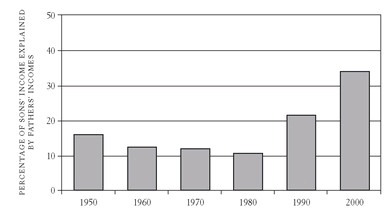

Social Mobility in the USA.

(From Wilkinson & Pickett, The Spirit Level, Figure 12.2, p. 161)

Even within individual countries, inequality plays a crucial role in shaping people’s lives. In the United States, for example, your chances of living a long and healthy life closely track your income: residents of wealthy districts can expect to live longer and better. Young women in poorer states of the US are more likely to become pregnant in their teenage years—and their babies are less likely to survive—than their peers in wealthier states. In the same way, a child from a disfavored district has a higher chance of dropping out of high school than if his parents have a steady mid-range income and live in a prosperous part of the country. As for the children of the poor who remain in school: they will do worse, achieve lower scores and obtain less fulfilling and lower paid employment.

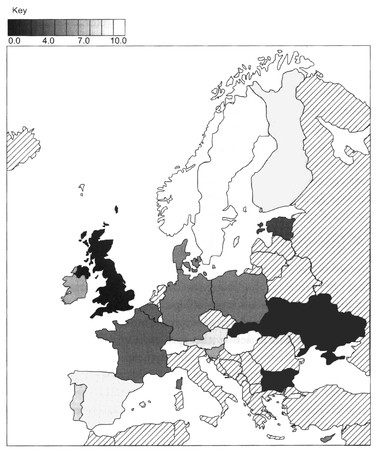

Trust and Belonging in Europe.

(From Tim Jackson, Prosperity Without Growth: Economics for a Finite Planet [London: Earthscan, 2009], Figure 9.1, p. 145)

Inequality, then, is not just unattractive in itself; it clearly corresponds to pathological social problems that we cannot hope to address unless we attend to their underlying cause. There is a reason why infant mortality, life expectancy, criminality, the prison population, mental illness, unemployment, obesity, malnutrition, teenage pregnancy, illegal drug use, economic insecurity, personal indebtedness and anxiety are so much more marked in the US and the UK than they are in continental Europe.

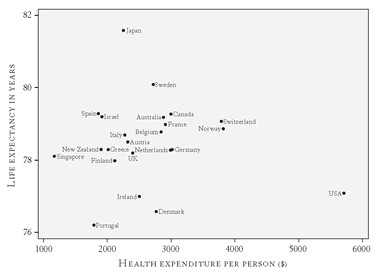

The wider the spread between the wealthy few and the impoverished many, the worse the social problems: a statement which appears to be true for rich and poor countries alike. What matters is not how affluent a country is but how unequal it is. Thus Sweden and Finland, two of the world’s wealthiest countries by per capita income or GDP, have a very narrow gap separating their richest from their poorest citizens—and they consistently lead the world in indices of measurable wellbeing. Conversely, the United States, despite its huge aggregate wealth, always comes low on such measures. We spend vast sums on healthcare, but life expectancy in the US remains below that of Bosnia and just above that of Albania.

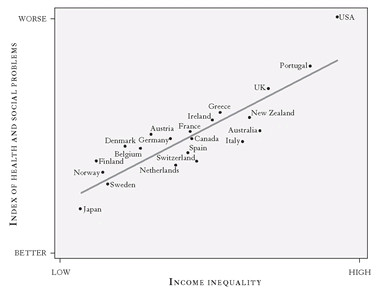

Inequality and Ill Health

(From Jackson, Prosperity Without Growth, Figure 9.2, p. 155)

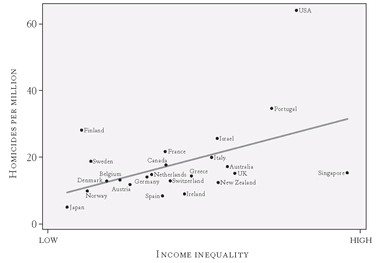

Inequality and Crime.

(From Wilkinson & Pickett, The Spirit Level, Figure 10.2, p. 135)

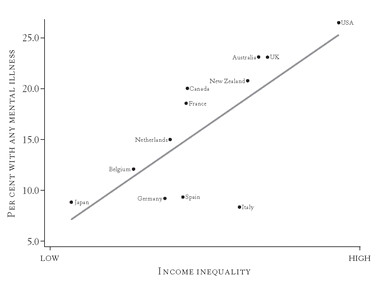

Inequality and Mental Illness.

(From Wilkinson & Pickett, The Spirit Level, Figure 5.1, p. 67)

Health Expenditure and Life Expectancy.

(From Wilkinson & Pickett, The Spirit Level, Figure 6.2, p. 80)

Inequality is corrosive. It rots societies from within. The impact of material differences takes a while to show up: but in due course competition for status and goods increases; people feel a growing sense of superiority (or inferiority) based on their possessions; prejudice towards those on the lower ranks of the social ladder hardens; crime spikes and the pathologies of social disadvantage become ever more marked. The legacy of unregulated wealth creation is bitter indeed.1

CORRUPTED SENTIMENTS

“There are no conditions of life to which a man cannot get accustomed, especially if he sees them accepted by everyone around him.”

—LEV TOLSTOY, ANNA KARENINA

During the long decades of ‘equalization’, the idea that such improvements could be sustained became commonplace. Reductions in inequality are self-confirming: the more equal we get, the more equal we believe it is possible to be. Conversely, thirty years of growing inequality have convinced the English and Americans in particular that this is a natural condition of life about which we can do little.

To the extent that we do speak of alleviating social ills, we suppose economic ‘growth’ to be sufficient: the diffusion of prosperity and privilege will flow naturally from an increase in the cake. Sadly, all the evidence suggests the contrary. Whereas in hard times we are more likely to accept redistribution as both necessary and possible, in an age of affluence economic growth typically privileges the few while accentuating the relative disadvantage of the many.

We are often blind to this: an overall increase in aggregate wealth camouflages distributive disparities. This problem is familiar from the development of backward societies: economic growth benefits everyone but disproportionately serves a tiny minority positioned to exploit it. Contemporary China and India illustrate the point. But that the United States, a fully developed economy, should have a ‘Gini coefficient’ (the conventional measure of the gap separating rich and poor) almost identical to that of China is remarkable.

It is one thing to dwell amongst inequality and its pathologies; it is quite another to revel in them. There is everywhere a striking propensity to admire great wealth and accord it celebrity status (‘Lifestyles of the Rich and Famous’). We have been here before: back in the 18th century, Adam Smith—the founding father of classical economics—observed the same disposition among his contemporaries: “The great mob of mankind are the admirers and worshippers, and, what may seem more extraordinary, most frequently the disinterested admirers and worshippers, of wealth and greatness.”2

For Smith, this uncritical adulation of wealth for its own sake was not merely unattractive. It was also a potentially destructive feature of a modern commercial economy, one that might in the course of time undermine the very qualities which capitalism, in his eyes, needed to sustain and nourish: “The disposition to admire, and almost to worship, the rich and the powerful, and to despise, or, at least, to neglect, persons of poor and mean condition . . . [is] . . . the great and most universal cause of the corruption of our moral sentiments.”3

Our moral sentiments have indeed been corrupted. We have become insensible to the human costs of apparently rational social policies, especially when we are advised that they will contribute to overall prosperity and thus—implicitly—to our separate interests. Consider the 1996 “Personal Responsibility and Work Opportunity Reconciliation Act” (a revealingly Orwellian label), the Clinton-era legislation that sought to gut welfare provision here in the US. The stated purpose of this Act was to shrink the nation’s welfare rolls. This was to be achieved by withholding welfare from anyone who had failed to seek (and, if successful, accept) paid employment. Because an employer could thus hope to attract workers at almost any wage he offered—they could not decline a job, however distasteful, without risking exclusion from welfare benefits—not only were the numbers on welfare considerably reduced but wages and business costs fell too.

Moreover, welfare acquired an explicit stigma. To be a recipient of public aid, whether in the form of child support, food stamps or unemployment benefits, was a mark of Cain: a sign of personal failure, evidence that one had somehow fallen through the cracks of society. In the contemporary United States, at a time of growing unemployment, a jobless man or woman is thus stigmatized: they are not quite a full member of the community. Even in social democratic Norway, the 1991 Social Services Act entitled local authorities to impose comparable work requirements on anyone applying for welfare.

The terms of this legislation should put us in mind of a previous Act, passed in England nearly two hundred years earlier: the New Poor Law of 1834. The provisions of this law are familiar to us, thanks to Charles Dickens’s depiction of its workings in Oliver Twist. When Noah Claypole famously sneers at little Oliver, calling him “Work’us” (“Workhouse”), he is implying, for 1838, precisely what we convey today when we speak disparagingly of “welfare queens”.

The New Poor Law was an outrage. It obliged the indigent and the unemployed to choose between work at any wage, however low, and the humiliation of the workhouse. Here, as in other forms of 19th-century public assistance (still thought of and described as “charity”), the level of aid and support was calibrated so as to be less appealing than the worst available alternative. The Law drew on contemporary economic theories that denied the very possibility of unemployment in an efficient market: if wages fell low enough and there was no attractive alternative to work, everyone would eventually find a job.

For the next 150 years, reformers strove to abolish such demeaning practices. In due course, the New Poor Law and its foreign analogues were succeeded by the public provision of assistance as a matter of right. Workless citizens were no longer deemed any the less deserving for the misfortune of unemployment; they would not be penalized for their condition nor would implicit aspersions be cast upon their good standing as members of society. More than anything else, the welfare states of the mid-20th century established the profound indecency of defining civic status as a function of economic good fortune.

Instead, the ethic of Victorian voluntarism and punitive eligibility criteria was replaced by universal social provision, albeit varying considerably from country to country. The inability to work or to find work, far from being stigmatized, was now treated as a condition of occasional but by no means dishonorable dependence upon one’s fellow citizens. Needs and rights were accorded special respect and the idea that unemployment was the product of bad character or insufficient effort was put to rest.

Today we have reverted to the attitudes of our early Victorian forebears. Once again, we believe exclusively in incentives, “effort” and reward—together with penalties for inadequacy. To hear Bill Clinton or Margaret Thatcher explain it, making welfare universally available to all who need it would be foolish. If workers are not desperate, why should they work? If the state pays people to be idle, what incentive do they have to seek out paid employment? We have reverted to the hard, cold world of Enlightened economic rationality, first and best expressed in The Fable of the Bees, Bernard Mandeville’s 1732 essay on political economy. Workers, in Mandeville’s view, “have nothing to stir them up to be serviceable but their wants, which it is prudence to relieve but folly to cure”. Tony Blair could not have said it better.

Welfare ‘reforms’ have revived the dreaded ‘means test’. As readers of George Orwell will recall, the indigent in Depression-era England could only apply for poor relief once the authorities had established, by means of intrusive inquiry, that they had exhausted their own resources. A similar test was applied to the unemployed in 1930s America. Malcolm X, in his memoirs, recalls the officials who “checked up” on his family: “[T]he monthly Welfare check was their pass. They acted as if they owned us. As much as my mother would have liked to, she couldn’t keep them out. . . . We couldn’t understand why, if the state was willing to give us packages of meat, sacks of potatoes and fruit, and cans of all kinds of things, our mother obviously hated to accept. What I later understood was that my mother was making a desperate effort to preserve her pride, and ours. Pride was just about all we had to preserve, for by 1934, we really began to suffer.”

Contrary to a widespread assumption that has crept back into Anglo-American political jargon, few derive pleasure from handouts: of clothes, shoes, food, rent support or children’s school supplies. It is, quite simply, humiliating. Restoring pride and self-respect to society’s losers was a central platform in the social reforms that marked 20th century progress. Today we have once again turned our back on them.

Although uncritical admiration for the Anglo-Saxon model of “free enterprise”, “the private sector”, “efficiency”, “profits” and “growth” has been widespread in recent years, the model itself has only been applied in all its self-congratulatory rigor in Ireland, the UK and the USA. Of Ireland there is little to say. The so-called “economic miracle” of the ‘plucky little Celtic tiger’ consisted of an unregulated, low-tax regime which predictably attracted inward investment and hot money. The inevitable shortfall in public income was compensated by subsidies from the much-maligned European Union, funded for the most part by the supposedly inept ‘old European’ economies of Germany, France and the Netherlands. When Wall Street’s party crashed, the Irish bubble burst along with it. It will not soon reflate.

The British case is more interesting: it mimics the very worst features of America while failing to open the UK to the social and educational mobility which characterized American progress at its best. On the whole, the British economy since 1979 tracked the decline of its American confrère not only in a cavalier unconcern for its victims but also in a reckless enthusiasm for financial services at the expense of the country’s industrial base. Whereas bank assets as a share of GDP had remained steady at around 70% from the 1880s through the early 1970s, by 2005 they exceeded 500%. As aggregate national wealth grew, so did the poverty of most of the regions outside London and north of the river Trent.

To be sure, even Margaret Thatcher could not altogether dismantle the welfare state, popular with the same lower middle class that so enthusiastically brought her to power. And thus, in contrast to the United States, the growing number of people at the bottom of the British heap still have access to free or cheap medical services, exiguous but guaranteed pensions, residual unemployment relief and a vestigial system of public education. If Britain is “broken”, as some observers have concluded in recent years, at least the constituent fragments get caught in a safety net. For a society trapped in delusions of prosperity and good prospects, with the losers left to fend for themselves, we must—regretfully—look to the USA.

AMERICAN PECULIARITIES

“As one digs deeper into the national character of the Americans, one sees that they have sought the value of everything in this world only in the answer to this single question: how much money will it bring in?”

—ALEXIS DE TOCQUEVILLE

Without knowing anything about OECD charts or unfavorable comparisons with other nations, many Americans are well aware that something is seriously amiss. They do not live as well as they once did. Everyone would like their child to have improved life chances at birth: better education and better job prospects. They would prefer it if their wife or daughter had the same odds of surviving maternity as women in other advanced countries. They would appreciate full medical coverage at lower cost, longer life expectancy, better public services, and less crime. However, when advised that such benefits are available in Western Europe, many Americans respond: “But they have socialism! We do not want the state interfering in our affairs. And above all, we do not wish to pay more taxes.”

This curious cognitive dissonance is an old story. A century ago, the German sociologist Werner Sombart famously asked: Why is there no socialism in America? There are many answers to this question. Some have to do with the sheer size of the country: shared purposes are difficult to organize and sustain on an imperial scale and the US is, for all practical purposes, an inland empire.

Then there are cultural factors, notorious among them the distinctively American suspicion of central government. Whereas certain very large and diverse territorial units—China, for example, or Brazil—depend upon the powers and initiatives of a distant state, the US, in this respect unmistakably a child of 18th century Anglo-Scottish thought, was built on the premise that the power of central authority should be hemmed in on all sides. The presumption in the American Bill of Rights—that whatever is not explicitly accorded to the national government is by default the prerogative of the separate states—has been internalized over the course of the centuries by generations of settlers and immigrants as a license to keep Washington “out of our lives”.

This suspicion of the public authorities, periodically elevated to a cult by Know Nothings, States’ Rightists, anti-tax campaigners and—most recently—the radio talk show demagogues of the Republican Right, is uniquely American. It translates an already distinctive suspicion of taxation (with or without representation) into patriotic dogma. Here in the US, taxes are typically regarded as uncompensated income loss. The idea that they might (also) be a contribution to the provision of collective goods that individuals could never afford in isolation (roads, firemen, policemen, schools, lamp posts, post offices, not to mention soldiers, warships, and weapons) is rarely considered.

In continental Europe as in much of the developed world, the idea that any one person could be completely ‘self-made’ evaporated with the illusions of 19th century individualism. We are all the beneficiaries of those who went before us, as well as those who will care for us in old age or ill health. We all depend upon services whose costs we share with our fellow citizens, however selfishly we conduct our economic lives. But in America, the ideal of the autonomous entrepreneurial individual remains as appealing as ever.

And yet, the United States has not always been at odds with the rest of the modern world. Even if that were the case for the America of Andrew Jackson or Ronald Reagan, it hardly does justice to the far-reaching social reforms of the New Deal or Lyndon Johnson’s Great Society in the 1960s. After visiting Washington in 1934, Maynard Keynes wrote to Felix Frankfurter: “Here, not in Moscow, is the economic laboratory of the world. The young men who are running it are splendid. I am astonished at their competence, intelligence and wisdom. One meets a classical economist here and there who ought to be thrown out of [the] window—but they mostly have been.”

Much the same might have been said of the remarkable ambitions and achievements of the Democratic-led Congresses of the ’60s that created food stamps, Medicare, the Civil Rights Act, Medicaid, Head Start, the National Endowment for the Humanities, the National Endowment for the Arts and the Corporation for Public Broadcasting. If this was America, it bore a curious resemblance to ‘old Europe’.

Moreover, the ‘public sector’ in American life is in some respects more articulated, developed and respected than its European counterparts. The best instance of this is the public provision of first-class institutions of higher education—something that the US has done for longer and better than most European countries. The land grant colleges that became the University of California, the University of Indiana, the University of Michigan and other internationally renowned institutions have no peers outside the US, and the often underestimated community college system is similarly unique.

Similarly, for all their inability to sustain a national railway system, Americans not only networked their country with taxpayer-financed freeways but today also support, in some of their major cities, well-functioning systems of public transport, at the very moment that their English counterparts can think of nothing better to do than dump theirs on the private sector at fire-sale prices. To be sure, the citizens of the US remain unable to furnish themselves with even the minimal decencies of a public health system; but ‘public’ as such was not always a term of opprobrium in the national lexicon.

ECONOMISM AND ITS DISCONTENTS

“Once we allow ourselves to be disobedient to the test of an accountant’s profit, we have begun to change our civilization.”

—JOHN MAYNARD KEYNES

Why do we experience such difficulty even imagining a different sort of society? Why is it beyond us to conceive of a different set of arrangements to our common advantage? Are we doomed indefinitely to lurch between a dysfunctional ‘free market’ and the much-advertised horrors of ‘socialism’?

Our disability is discursive: we simply do not know how to talk about these things any more. For the last thirty years, when asking ourselves whether we support a policy, a proposal or an initiative, we have restricted ourselves to issues of profit and loss—economic questions in the narrowest sense. But this is not an instinctive human condition: it is an acquired taste.

We have been here before. In 1905, the young William Beveridge—whose 1942 report would lay the foundations of the British welfare state—delivered a lecture at Oxford, asking why political philosophy had been obscured in public debates by classical economics. Beveridge’s question applies with equal force today. However, this eclipse of political thought bears no relation to the writings of the great classical economists themselves.

Indeed, the thought that we might restrict public policy considerations to a mere economic calculus was already a source of concern two centuries ago. The Marquis de Condorcet, one of the most perceptive writers on commercial capitalism in its early years, anticipated with distaste the prospect that “liberty will be no more, in the eyes of an avid nation, than the necessary condition for the security of financial operations.” The revolutions of the age risked fostering confusion between the freedom to make money . . . and freedom itself.

We too are confused. Conventional economic reasoning today—ostensibly bloodied but apparently quite unbowed by its inability either to foresee or prevent the banking collapse—describes human behavior in terms of ‘rational choice’. We are all, it asserts, economic beings. We pursue our self-interest (defined as maximized economic advantage) with minimal reference to extraneous criteria such as altruism, self-denial, taste, cultural habit or collective purpose. Supplied with sufficient and correct information about ‘markets’—whether real ones or institutions for the sale and purchase of stocks and bonds—we shall make the best possible choices to our separate and common advantage.

Whether or not these propositions hold any truth is not my concern here. No one today could claim with a straight face that anything remains of the so-called ‘efficient market hypothesis’. An older generation of free market economists used to point out that what is wrong with socialist planning is that it requires the sort of perfect knowledge (of present and future alike) that is never vouchsafed to ordinary mortals. They were right. But it transpires that the same is true for market theorists: they don’t know everything and as a result it turns out that they don’t really know anything.

The ‘false precision’ of which Maynard Keynes accused his economist critics is with us still. Worse: we have smuggled in a misleadingly ‘ethical’ vocabulary to bolster our economic arguments, furnishing us with a self-satisfied gloss upon crassly utilitarian calculations. When imposing welfare cuts on the poor, for example, legislators in the UK and US alike have taken a singular pride in the ‘hard choices’ they have had to make.

The poor vote in much smaller numbers than anyone else. So there is little political risk in penalizing them: just how ‘hard’ are such choices? These days, we take pride in being tough enough to inflict pain on others. If an older usage were still in force, whereby being tough consisted of enduring pain rather than imposing it on others, we should perhaps think twice before so callously valuing efficiency over compassion.3

In that case, how should we talk about the way we choose to run our societies? In the first place, we cannot continue to evaluate our world and the choices we make in a moral vacuum. Even if we could be sure that a sufficiently well-informed and self-aware rational individual would always opt for his own best interests, we would still need to ask just what those interests are. They cannot be inferred from his economic behavior, for in that case the argument would be circular. We need to ask what men and women want for themselves and under what conditions those wants may be addressed.

Clearly we cannot do without trust. If we truly did not trust one another, we would not pay taxes for our mutual support. Nor would we venture very far outdoors for fear of violence or chicanery at the hands of our untrustworthy fellow citizens. Moreover, trust is no abstract virtue. One of the reasons that capitalism today is under siege from so many critics, by no means all of them on the Left, is that markets and free competition also require trust and cooperation. If we cannot trust bankers to behave honestly, or mortgage brokers to tell the truth about their loans, or public regulators to blow the whistle on dishonest traders, then capitalism itself will grind to a halt.

Markets do not automatically generate trust, cooperation or collective action for the common good. Quite the contrary: it is in the nature of economic competition that a participant who breaks the rules will triumph—at least in the short run—over more ethically sensitive competitors. But capitalism could not survive such cynical behavior for very long. So why has this potentially self-destructive system of economic arrangements lasted? Probably because of habits of restraint, honesty and moderation which accompanied its emergence.

However, far from inhering in the nature of capitalism itself, values such as these derived from longstanding religious or communitarian practices. Sustained by traditional restraints and the continuing authority of secular and ecclesiastical elites, capitalism’s ‘invisible hand’ benefited from the flattering illusion that it unerringly corrected for the moral shortcomings of its practitioners.

These happy inaugural conditions no longer obtain. A contract-based market economy cannot generate them from within, which is why both socialist critics and religious commentators (notably the late 19th-century reforming Pope Leo XIII) drew attention to the corrosive threat posed to society by unregulated economic markets and immoderate extremes of wealth and poverty.

As recently as the 1970s, the idea that the point of life was to get rich and that governments existed to facilitate this would have been ridiculed: not only by capitalism’s traditional critics but also by many of its staunchest defenders. Relative indifference to wealth for its own sake was widespread in the postwar decades. In a survey of English schoolboys taken in 1949, it was discovered that the more intelligent the boy the more likely he was to choose an interesting career at a reasonable wage over a job that would merely pay well.4 Today’s schoolchildren and college students can imagine little else but the search for a lucrative job.

How should we begin to make amends for raising a generation obsessed with the pursuit of material wealth and indifferent to so much else? Perhaps we might start by reminding ourselves and our children that it wasn’t always thus. Thinking ‘economistically’, as we have done now for thirty years, is not intrinsic to humans. There was a time when we ordered our lives differently.

CHAPTER TWO

The World We Have Lost

“All of us know by now that from this war there is no way back to a laissez-faire order of society, that war as such is the maker of a silent revolution by preparing the road to a new type of planned order.”

—KARL MANNHEIM, 1943

The past was neither as good nor as bad as we suppose: it was just different. If we tell ourselves nostalgic stories, we shall never engage the problems that face us in the present—and the same is true if we fondly suppose that our own world is better in every way. The past really is another country: we cannot go back. However, there is something worse than idealizing the past—or presenting it to ourselves and our children as a chamber of horrors: forgetting it.

Between the two world wars Americans, Europeans and much of the rest of the world faced a series of unprecedented man-made disasters. The First World War, already the worst and most intensely destructive in recorded memory, was followed in short order by epidemics, revolutions, the failure and breakup of states, currency collapses and unemployment on a scale never conceived by the traditional economists whose policies were still in vogue.

These developments in turn precipitated the fall of most of the world’s democracies into autocratic dictatorships or totalitarian party states of various kinds and tipped the globe into a second World War even more destructive than the first. In Europe, in the Middle East, in east and southeast Asia, the years between 1931 and 1945 saw occupation, destruction, ethnic cleansing, torture, wars of extermination and deliberate genocide on a scale that would have been unimaginable even 30 years earlier.

As late as 1942, it seemed reasonable to fear for freedom. Outside of the English-speaking lands of the north Atlantic and Australasia, democracy was thin on the ground. The only democracies left in continental Europe were the tiny neutral states of Sweden and Switzerland, both dependent on German goodwill. The US had just joined the war. Everything that we take for granted today was not only in jeopardy, but seriously questioned even by its defenders.

Surely, it seemed, the future lay with the dictatorships? Even after the Allies emerged triumphant in 1945, these concerns were not forgotten: depression and fascism remained ever-present in men’s minds. The urgent question was not how to celebrate a magnificent victory and get back to business as usual, but how on earth to ensure that the experience of the years 1914-1945 would never be repeated. More than anyone else, it was Maynard Keynes who devoted himself to addressing this challenge.

THE KEYNESIAN CONSENSUS

“In those years each one of us derived strength from the common upswing of the time and increased his individual confidence out of the collective confidence. Perhaps, thankless as we human beings are, we did not realize then how firmly and surely the wave bore us. But whoever experienced that epoch of world confidence knows that all since has been retrogression and gloom.”

—STEFAN ZWEIG

The great English economist (born in 1883) grew up in a stable, prosperous and powerful Britain: a confident world whose collapse he was privileged to observe—first from an influential perch at the wartime Treasury and then as a participant in the Versailles peace negotiations of 1919. The world of yesterday unraveled, taking with it not just countries, lives and material wealth but all the reassuring certainties of Keynes’s culture and class. How had this happened? Why had no one foreseen it? Why was no one in authority doing anything effective to ensure that it would not happen again?

Understandably, Keynes focused his economic writings upon the problem of uncertainty: in contrast to the confident nostrums of classical and neoclassical economics, he would insist henceforth upon the essential unpredictability of human affairs. To be sure, there were many lessons to be drawn from economic depression, fascist repression and wars of extermination. But more than anything else, as it seemed to Keynes, it was the newfound insecurity in which men and women were forced to live—uncertainty elevated to paroxysms of collective fear—which had corroded the confidence and institutions of liberalism.

What, then, should be done? Like so many others, Keynes was familiar with the attractions of centralized authority and top-down planning to compensate for the inadequacies of the market. Fascism and Communism shared an unabashed enthusiasm for deploying the state. Far from being a shortcoming in the popular eye, this was perhaps their strongest suit: when asked what they thought of Hitler long after his fall, foreigners would sometimes respond that he did at least put the Germans back to work. Whatever his failings, Stalin—it was often said—kept the Soviet Union clear of the Great Depression. And even the joke about Mussolini making Italian trains run on time had a certain edge: what’s wrong with that?

Any attempt to put democracies back on their feet (or to bring democracy and political freedom to countries which had never had them) would have to engage with the record of the authoritarian states. The alternative was to risk popular nostalgia for their achievements, real or imagined. Keynes knew perfectly well that fascist economic policy could never have succeeded in the long run without war, occupation and exploitation. Nonetheless, he was sensitive not just to the need for countercyclical economic policies to head off future depression, but also to the prudential virtues of ‘the social security state’.

The point of such a state was not to revolutionize social relations, much less inaugurate a socialist era. Keynes, like most of the men responsible for the innovative legislation of those years—from Britain’s Clement Attlee through France’s Charles de Gaulle to Franklin Delano Roosevelt himself—was an instinctive conservative. Every western leader in those years—elderly gentlemen all—had been born into the stable world so familiar to Keynes. And all of them had lived through a traumatic upheaval. Like the hero of Giuseppe di Lampedusa’s Leopard, they understood very well that in order to conserve you must change.

Keynes died in 1946, exhausted by his wartime labors. But he had long since demonstrated that neither capitalism nor liberalism would survive very long without one another. And since the experience of the interwar years had clearly revealed the inability of capitalists to protect their own best interests, the liberal state would have to do it for them whether they liked it or not.

It is thus an intriguing paradox that capitalism was to be saved—indeed, was to thrive in the coming decades—thanks to changes identified at the time (and since) with socialism. This, in turn, reminds us just how very desperate circumstances were. Intelligent conservatives—like the many Christian Democrats who found themselves in office after 1945 for the first time—offered little objection to state control of the “commanding heights” of the economy; along with steeply progressive taxation, they welcomed it enthusiastically.

There was a moralized quality to policy debates in those early postwar years. Unemployment (the biggest issue in the UK, the US or Belgium); inflation (the greatest fear in central Europe, where it had ravaged private savings for decades); and agricultural prices so low (in Italy and France) that peasants were driven off the land and into extremist parties out of despair: these were not just economic issues, they were regarded by everyone from priests to secular intellectuals as tests of the ethical coherence of the community.

The consensus was unusually broad. From the New Dealers to West German “social market” theorists, from Britain’s governing Labour Party to the “indicative” economic planning that shaped public policy in France (and Czechoslovakia, until the 1948 Communist coup): everyone believed in the state. In part, this was because almost everyone feared the implications of a return to the terrors of the recent past and was happy to constrain the freedom of the market in the name of the public interest. Just as the world was now to be regulated and protected by a bevy of international institutions and agreements, from the United Nations to the World Bank, so a well-run democracy would likewise maintain consensus around comparable domestic arrangements.

As early as 1940, Evan Durbin (a British Labour pamphleteer) had written that he could not imagine “the least alteration” in the contemporary trend towards collective bargaining, economic planning, progressive taxation and the provision of publicly funded social services. Sixteen years later, the English Labour politician Anthony Crosland could write, with still greater confidence, that there had been a permanent transition from “an uncompromising faith in individualism and self-help to a belief in group action and participation”. He could even assert that “[a]s for the dogma of the ‘invisible hand’ and the belief that private gain must always lead to the public good, these failed entirely to survive the Great Depression; and even Conservatives and businessmen now subscribe to the doctrine of collective government responsible for the state of the economy”.5

Durbin and Crosland were both social democrats and thus interested parties. But they were not wrong. By the mid-’50s English politics had reached such a level of implied consensus around public policy issues that mainstream political argument was dubbed “Butskellism”: blending the ideas of R.A. Butler, a moderate Conservative minister, and Hugh Gaitskell, the centrist leader of the Labour opposition in those years. And Butskellism was universal. Whatever their other differences, French Gaullists, Christian Democrats and Socialists shared a common faith in the activist state, economic planning and large-scale public investment. Much the same was true of the consensus that dominated policy-making in Scandinavia, the Benelux countries, Austria and even ideologically-riven Italy.

In Germany, where social democrats persisted in their Marxist rhetoric (if not Marxist policies) until 1959, there was nevertheless comparatively little separating them from Chancellor Konrad Adenauer’s Christian Democrats. Indeed, it was the (to them) stifling consensus on everything from education to foreign policy to the public provision of recreational facilities—and the interpretation of their country’s troubled past—that drove a younger generation of German radicals into “extra-parliamentary” activity.

Even in the United States, where Republicans were in power throughout the ’50s and aging New Dealers found themselves in the wilderness for the first time in a generation, the transition to conservative administrations—while it had significant consequences for foreign affairs and even free speech—made little difference to domestic policy. Taxation was not a contentious issue and it was a Republican president, Dwight Eisenhower, who authorized the massive, federally-overseen project of the interstate highway system. For all the lip service paid to competition and free markets, the American economy in those years depended heavily upon protection from foreign competition, as well as standardization, regulation, subsidies, price supports, and government guarantees.

The natural inequities of capitalism were softened by the assurance of present well-being and future prosperity. In the mid-’60s, Lyndon Johnson pushed through a series of path-breaking social and cultural changes; he was able to do so in part because of the residual consensus favoring New Deal-style investments, all-inclusive programs and government initiatives. Significantly, it was civil rights and race relations legislation that divided the country, not social policy.

The years 1945–1975 were widely acknowledged as something of a miracle, giving birth to the ‘American way of life’. Two generations of Americans (the men and women who went through WWII and their children who were to celebrate the ’60s) experienced job security and upward social mobility on an unprecedented (and never to be repeated) scale. In Germany, the Wirtschaftswunder (economic miracle) lifted the country in a single generation from humiliating, rubble-strewn defeat into the wealthiest state in Europe. For France, those years were to become famous (with no hint of irony) as “Les Trente Glorieuses”. In England, at the height of the “age of affluence”, the Conservative Prime Minister Harold Macmillan assured his compatriots that “you have never had it so good”. He was right.

In some countries (Scandinavia being the best-known case) the postwar welfare states were the work of social democrats; elsewhere—in Great Britain, for example—the “social security state” amounted in practice to little more than a series of pragmatic policies aimed at alleviating disadvantage and reducing extremes of wealth and indigence. Their common accomplishment was a remarkable success in curbing inequality. If we compare the gap separating rich and poor, whether measured by overall assets or annual income, we find that in every continental European country as well as in Great Britain and the US, the gap shrank dramatically in the generation following 1945.

With greater equality there came other benefits. Over time, the fear of a return to extremist politics abated. The ‘West’ entered a halcyon era of prosperous security: a bubble, perhaps, but a comforting bubble in which most people did far better than they could ever have hoped in the past and had good reason to anticipate the future with confidence.

Moreover, it was social democracy and the welfare state that bound the professional and commercial middle classes to liberal institutions in the wake of World War II. This was a matter of some consequence: it was the fear and disaffection of the middle class which had given rise to fascism. Bonding the middle classes back to the democracies was by far the most important task facing postwar politicians—and by no means an easy one.

In most cases it was achieved by the magic of “universalism”. Instead of having their benefits keyed to income—in which case well-paid professionals or thriving shopkeepers might have complained bitterly at being taxed for social services from which they did not derive much advantage—the educated “middling sort” were offered the same social assistance and public services as the working population and the poor: free education, cheap or free medical treatment, public pensions and unemployment insurance. As a consequence, now that so many of life’s necessities were covered by their taxes, the European middle class found itself by the 1960s with far greater disposable incomes than at any time since 1914.

Interestingly, these decades were characterized by a uniquely successful blend of social innovation and cultural conservatism. Keynes himself exemplifies the point. A man of impeccably elitist tastes and upbringing—though unusually open to new artistic work—he nonetheless grasped the importance of bringing first-class art, performance and writing to the broadest possible audience if British society were to overcome its paralyzing divisions. It was Keynes whose initiatives led to the creation of the Royal Ballet, the Arts Council and much else besides. These were innovative public provisions of uncompromisingly “high” art—much like Lord Reith’s BBC, with its self-assigned obligation to raise popular standards rather than condescend to them.

For Reith or Keynes or the French Culture Minister André Malraux, there was nothing patronizing about this new approach—any more than there was for the young Americans who worked with LBJ on the establishment of a Corporation for Public Broadcasting or the National Endowment for the Humanities. This was “meritocracy”: the opening up of elite institutions to mass applicants at public expense—or at least underwritten by public assistance. It began the process of replacing selection by inheritance or wealth with upward mobility through education. And it produced a few years later a generation for whom all of this seemed self-evident and who thus took it for granted.

There was nothing inevitable about these developments. Wars were typically followed by economic downturns—and the bigger the war the worse the dip. Those who did not fear a resurgence of fascism looked instead anxiously eastwards at the hundreds of divisions of the Red Army and the powerful, popular Communist parties and trade unions of Italy, France and Belgium. When US Secretary of State George Marshall visited Europe in the spring of 1947 he was appalled by what he saw: the Marshall Plan was born of the anxiety that the aftermath of World War II might end up even worse than that of its predecessor.

As for the US, it was deeply divided in those early postwar years by a renascent suspicion of foreigners, radicals and above all communists. McCarthyism may have posed no threat to the Republic, but it was a reminder of just how easily a mediocre demagogue could exploit fear and exaggerate threats. What might he not have done had the economy reverted to its low point of twenty years before? In short, and despite the consensus that was to emerge, it was all more than a little unexpected. So why did it work so well?

THE REGULATED MARKET

“The idea is essentially repulsive, of a society held together only by the relations and feelings arising out of pecuniary interest.”

—JOHN STUART MILL

The short answer is that by 1945 few people believed any longer in the magic of the market. This was an intellectual revolution. Classical economics mandated a tiny role for the state in economic policymaking, and the prevailing liberal ethos of 19th-century Europe and North America favored hands-off social legislation, confined for the most part to regulating only the more egregious inequities and dangers of competitive industrialism and financial speculation.

But two world wars had habituated almost everyone to the inevitability of government intervention in daily life. In the First World War most of the participant states had increased their control (hitherto negligible) of production: not just of military matériel but clothing, transport, communications and almost anything relevant to the conduct of an expensive and desperate war. In most places after 1918 these controls were lifted, but there remained a significant residue of government involvement in the regulation of economic life.

Following a short, illusory era of retreat (marked symptomatically by the ascendancy of Calvin Coolidge in the United States and by comparably negligent types in much of western Europe), the utter devastation of the 1929 slump and the ensuing depression forced all governments to choose between ineffectual reticence and overt intervention. Sooner or later, all would opt for the latter.

Whatever remained of the laissez-faire state was then erased by the experience of total war. With no exception, winners and losers in World War II committed not just the country, the economy and every citizen to the pursuit of war; they also mobilized the state for this purpose in ways which would have been inconceivable just thirty years earlier. Whatever their political colour, the combatant states mobilized, regulated, directed, planned and administered every aspect of life.

Even in the United States, the job you held, the wage you were paid, the things you could buy and the places you might go were all constrained in ways that would have horrified Americans a few short years before. The New Deal, whose agencies and institutions had seemed so shockingly innovatory, could now be seen as a mere prelude to the business of mobilizing the whole country around a collective project.

War, in short, concentrated the mind. It had proven possible to convert a whole country into a war machine around a war economy; why then, people asked, could something similar not be accomplished in pursuit of peace? There was no convincing answer. Without anyone quite intending it, western Europe and North America entered upon a new era.

The most obvious symptom of the change came in the form of ‘planning’. Rather than letting things just happen, economists and bureaucrats concluded, it was better to think them out in advance. Unsurprisingly, planning was most admired and advocated at the political extremes. On the Left it was thought that planning was what the Soviets did so well; on the Right it was (correctly) believed that Hitler, Mussolini and their fascist acolytes were committed to top-down planning and that this accounted for their appeal.

The intellectual case for planning was never very strong. Keynes, as we have seen, regarded economic planning much as he did pure market theory: in order to succeed, both required impossibly perfect data. But he accepted, at least in wartime, the necessity of short-term planning and controls. For the postwar peace, he preferred to minimize direct government intervention and manipulate the economy through fiscal and other incentives. But for this to work, governments needed to know what they wished to achieve and this, in the eyes of its advocates, was what ‘planning’ was all about.

Curiously, the enthusiasm for planning was especially marked in the United States. The Tennessee Valley Authority was nothing if not an exercise in confident economic design: not just of a vital resource but of the economy of a whole region. Observers like Lewis Mumford declared themselves “entitled to a little collective strutting and crowing”: the TVA and similar projects showed that democracies could match the dictatorships when it came to large-scale, long-term, forward-looking schemes. A few years earlier, Rexford Tugwell had gone so far as to eulogize the idea: “I see the great plan already/And the keen joy of the work will be mine . . . /I shall roll up my sleeves—make/America over.”6

The difference between a planned economy and a state-owned economy was still unclear to many. Liberals like Keynes, William Beveridge or Jean Monnet, the founding spirit behind French planning, had no time for nationalization as an objective in its own right, though they were flexible as to its practical advantages in particular cases. The same was true of the Social Democrats of Scandinavia: far more interested in progressive taxation and the provision of all-embracing social services than in state control of major industries—car manufacturing, for example.

Conversely, Britain’s Labourites doted on the idea of public ownership. If the state represented the working population, then surely a state-owned operation was henceforth in the hands and at the disposal of the workers? Whether or not this was true in practice—the history of British Steel suggests that the state can be just as incompetent and inefficient as the worst private entrepreneur—it diverted attention from any sort of planning at all, with detrimental consequences in decades to come. At the other extreme, Communist planning—which amounted to little more than the establishment of fictional targets to be met by fictional output data—would in due course discredit the whole exercise.

In continental Europe, centralized administrations had traditionally played a more active role in the provision of social services and continued to do so on a greatly expanded scale. The market, it was widely held, was inadequate to the task of defining collective ends: the state would have to step in and fill the breach. Even in the USA, where the state—the “Administration”—was always wary of overstepping traditional bounds, everything from the GI Bill to the scientific education of the coming generation was initiated and paid for from Washington.

Here, too, it was simply assumed that there were public goods and goals for which the market was just not suited. In the words of T.H. Marshall, a leading commentator on the British welfare state, the whole point of ‘welfare’ is to “supersede the market by taking goods and services out of it, or in some way to control and modify its operations so as to produce a result it could not have produced itself.”7

Even in West Germany, where there was an understandable reluctance to pursue Nazi-style centralized controls, ‘social market theorists’ compromised. They insisted that the free market was compatible with social goals and welfare legislation: it would actually function best if encouraged to perform with these objectives in mind. Hence the legislation, much of it still in force, requiring banks and public companies to take the long view, listen to the interests of their employees and maintain an awareness of the social consequences of their business even while pursuing profits.

That the state might exceed its remit and damage the market by distorting its operations was not taken very seriously in these years. From the institution of an International Monetary Fund and a World Bank (later a World Trade Organization as well) to international clearing houses, currency controls, wage restrictions and indicative price limits, the emphasis lay rather in the need to compensate for the palpable shortcomings of markets.

For the same reason, high taxation was not regarded in these years as an affront. On the contrary, steep rates of progressive income tax were seen as a consensual device to take excess resources away from the privileged and the useless and place them at the disposal of those who needed them most or could use them best. This too was not a new idea. The income tax had started to bite in most European countries well before the First World War, and had continued to increase between the wars in many places. All the same, as recently as 1925, most middle-class families could still afford one, two or even more servants—often in residence.

By 1950, however, only the aristocracy and the nouveaux riches could hope to maintain such a household: between taxes, inheritance duties and a steady increase in jobs and wages available to the working population, the labor pool of impoverished and subservient domestic employees had all but dried up. Thanks to universal welfare provision, the one benefit of long-term domestic service—the presumptive generosity of the employer for his sick, aged or otherwise indisposed servant—was now redundant.

In the general population there was a widespread belief that a moderate redistribution of wealth, eliminating extremes of rich and poor, was to everyone’s benefit. Condorcet had wisely observed that, “[i]t will always be cheaper for the Treasury to put the poor in a position to buy corn, than to bring the price of corn down to within the reach of the poor.”8 By 1960 this thesis had become de facto government policy throughout the West.