Secure Programming Cookbook for C and C++

John Viega

Matt Messier

Editor

Debby Russell

Copyright © 2009 O'Reilly Media, Inc.

O'Reilly Media

A Note Regarding Supplemental Files

Supplemental files and examples for this book can be found at http://examples.oreilly.com/9780596003944/. Please use a standard desktop web browser to access these files, as they may not be accessible from all ereader devices.

All code files or examples referenced in the book will be available online. For physical books that ship with an accompanying disc, whenever possible, we’ve posted all CD/DVD content. Note that while we provide as much of the media content as we are able via free download, we are sometimes limited by licensing restrictions. Please direct any questions or concerns to [email protected].

Foreword

There is a humorous, computing-related aphorism that goes like this: "There are 10 types of people: those who understand binary, and those who don't." Besides being amusing to people who understand number representation, this saying can be used to group people into four (or 100) categories:

Those who will never quite get the meaning of the statement, even if it is explained to them

Those who need some explanation, but will eventually get the meaning

Those who have the background to grasp the meaning when they read it

Those who have the knowledge and understanding to not only see the statement as obvious, but be able to come up with it independently on their own

There are parallels for these four categories in many different areas of endeavor. You can apply it to art, to cooking, to architecture...or to writing software. I have been teaching aspects of software engineering and security for over 20 years, and I have seen it up close. When it comes to writing reliable software, there are four kinds of programmers:

Those who are constantly writing buggy code, no matter what

Those who can write reasonable code, given coaching and examples

Those who write good code most of the time, but who don't fully realize their limitations

Those who really understand the language, the machine architecture, software engineering, and the application area, and who can write textbook code on a regular basis

The gap between the third category and the fourth may not seem like much to some readers, but there are far fewer people in that last category than you might think. It's also the case that there are lots of people in the third category who would claim they are in the fourth, but really aren't...similar to the 70% of all licensed drivers who say they are in the top 50% of safe drivers. Being an objective judge of one's own abilities is not always possible.

What compounds the problem for us all is that programmers are especially unlikely to realize (or are unwilling to admit) their limits. There are levels and degrees of complexity when working with computers and software that few people completely understand. However, programmers generally hold a world view that they can write correct code all the time, and only occasionally do mistakes occur, when in reality mistakes are commonplace in nearly everyone's code. As with the four categories, or the drivers, or any other domain where skill and training are required, the experts with real ability are fewer in number than those who believe they are expert. The result is software that may be subtly—or catastrophically—incorrect.

A program with serious flaws may compile properly, and work with obvious inputs. This helps reinforce the view that the code is correct. If something later exposes a flaw, many programmers will say that a "bug" somehow "got into the code." Or maybe "it's a computer problem." Neither is candid. Instead, whoever designed and built the system made mistakes. As a profession, we are unwilling to take responsibility when we code things incorrectly. Is it any wonder that a recent NIST study estimated that industry in the United States alone is spending $60 billion a year patching and customizing badly-written software? Is it a surprise that there are thousands of security patches per year for common software platforms? We've seen estimates that go as high as $1.5 trillion in damages per year worldwide for security problems alone, and simple crashes and errors may be more than 10 times as much. These are not rare flaws causing problems. There is a real crisis in producing quality software.

The reality is that if we truly face up to the situation, we might reassess some conventional beliefs. For instance, it is not true that a system is more secure because we can patch the source code when a flaw is discovered. A system is secure or it is not—there is no "more secure." You can't say a car is safer because you can replace the fenders yourself after the brakes give out and it goes over a cliff, either. A system is secure if there are no flaws that lead to a violation of policy. Being able to install the latest patch to the latest bad code doesn't make a system safer. If anything, after we've done it a few times, it should perhaps reduce our confidence in the quality of the software.

An honest view of programming might also cause us to pay more attention to design—to capturing requirements and developing specifications. Too often we end up with code that is put together without understanding the needs—and the pitfalls—of the environment where it will be used. The result is software that misbehaves when someone runs it in a different environment, or with unexpected input. There's a saying that has been attributed to Brian Kernighan, but which appears to have first been written down by W. D. Young, W.E. Boebert, and R.Y. Kain in 1985: "A program that has not been specified cannot be incorrect; it can only be surprising." Most of the security patches issued today are issued to eliminate surprises because there are no specifications for the underlying code. As a profession, we write too much surprising code.

I could go on, but I hope my points are clear: there are some real problems in the way software is being produced, and those problems lead to some serious—and expensive—problems. However, problem-free software and absolute security are almost always beyond our reach in any significant software project, so the next best thing is to identify and reduce the risks. Proven approaches to reduce these risks include using established methods of software engineering, exercising care in design and development, reusing proven software, and thinking about how to handle potential errors. This is the process of assurance—of building trust in our systems. Assurance needs to be built in rather than asserted after the software is finished.

That's why this book is so valuable. It can help people write correct, robust software the first time and avoid many of the surprises. The material in this book can help you provide a network connection with end-to-end security, as well as help you eliminate the need to patch the code because you didn't add enough entropy to key generation, or you failed to change the UID/GID values in the correct order. Using this code you can get the environment set correctly, the signals checked, and the file descriptors the way you need them. And along the way, you can read a clear, cogent description about what needs to be set and why in each case. Add in some good design and careful testing, and a lot of the surprises go away.

Are all the snippets of code in this book correct? Well, correct for what? There are many other things that go into writing reliable code, and they depend on the context. The code in this book will only get you partway to your goal of good code. As with any cookbook, you may need to adjust the portions or add a little extra seasoning to match your overall menu. But before you do that, be sure you understand the implications! The authors of this book have tried to anticipate most of the circumstances where you would use their code, and their instructions can help you avoid the most obvious problems (and many subtle ones). However, you also need to build the rest of the code properly, and run it on a well-administered system. (For that, you might want to check out some of the other O'Reilly books, such as Secure Coding by Mark Graff and Kenneth van Wyk, and Practical Unix and Internet Security by Simson Garfinkel, Gene Spafford, and Alan Schwartz.)

So, let's return to those four categories of programmers. This book isn't likely to help the group of people who are perpetually unclear on the concepts, but it is unlikely to hurt them. It will do a lot to help the people who need guidance and examples, because it contains the text as well as the code. The people who write good software most of the time could learn a lot by reading this book, and using the examples as starting points. And the experts are the ones who will readily adopt this code (with, perhaps, some small adaptions); expert coders know that reuse of trusted components is a key method of avoiding mistakes. Whichever category of programmer you think you are in, you will probably benefit from reading this book and using the code.

Maybe if enough people catch on to what it means to write reliable code, and they start using references such as this book, we can all start saying "There are 10 kinds of computer programmers: those who write code that breaks, and those who read O'Reilly books."

—Gene Spafford, June 2003

Preface

We don't think we need to tell you that writing secure software is incredibly difficult, even for the experts. We're not going to waste any time trying to convince you to start thinking about security—we assume you're already doing that.

Our goal here is to provide you with a rich set of code samples that you can use to help secure the C and C++ programs you write, for both Unix[1] and Windows environments.

There are already several other books out there on the topic of writing secure software. Many of them are quite good, but they universally focus on the fundamentals, not code. That is, they cover basic secure programming principles, and they usually explain how to design for security and perform risk assessments. Nevertheless, none of them show you by example how to do such things as SSL-enable your applications properly, which can be surprisingly difficult.

Fundamental software security skills are important, and everybody should master them. But, in this book, we assume that you already have the basics under your belt. We do talk about design considerations, but we do so compactly, focusing instead on getting the implementation details correct. If you need a more in-depth treatment of basic design principles, there are now several good books on this topic, including Building Secure Software (Addison Wesley). In addition, on this book's web site, we provide links to background resources that are available on the Internet.

More Than Just a Book

There is no way we could cover all the topics we wanted to cover in a reasonable number of pages. In this book, we've had to focus on the recipes and technologies we thought would be most universally applicable. In addition, we've had to focus on the C programming language, with some quick forays into C++ when important, and a bit of assembly when there's no other way.

We hope this book will do well enough that we'll be able to produce versions for other programming languages. Until then, we are going to address both of the aforementioned problems at once with our web site, http://www.secureprogramming.com, which you can also get to from the book's web page on the O'Reilly site (http://oreilly.com/catalog/secureprgckbk/). Not only can you find errata there, but you can also find and submit secure programming recipes that are not in the book. We will post the submitted recipes that we have validated. The goal of the site is to be a living, breathing resource that can evolve as time progresses.

[1] We know Linux is not a true Unix, but we will lump it in there throughout this book for the sake of convenience.

We Can't Do It All

There are plenty of things that people may find to criticize about this book. It's too broad a topic to make a perfect book (that's the motivation for the web site, actually). Although we believe that this book is likely to help you a great deal, we do want to address some specific issues so at least you'll know what you're getting if you buy this book:

This book is implementation-focused.

You're not likely to build secure software if you don't know how to design software to be secure from the get-go. We know that well, and we discuss it at great length in the book Building Secure Software. On the other hand, it's at least as easy to have a good design that results in an insecure implementation, particularly when C is the programming language you're using. Not only do our implementation-level solutions incorporate good design principles, but we also discuss plenty of issues that will affect your designs as well as your implementations. The world needs to know both how to design and how to implement with security in mind. We focus on the implementation so that you'll do a better job of it. Nonetheless, we certainly recommend that you read a book that thoroughly covers design before you read this book.

This book doesn't cover C++ well enough.

C++ programmers may grumble that we don't use any C++ specific idioms. For the most part, the advice we give applies to both languages, but giving all the examples in C makes them more applicable, because practitioners in both languages can still use them. On the rare occasion that there are things to note that are specific to C++, we certainly try to do so; examples include our discussions of buffer overflows and the use of exception handling to prevent leaving programs in an insecure state. Over time, our coverage of C++ will improve on the book's web site, but, until then, C++ programmers should still find this book relevant.

This book doesn't always force you to do the secure thing.

Some people would rather we take the approach of showing you one right way to do the few things you should be doing in your applications. For example, we could simply cover ways to create a secure channel, instead of talking about all the different low-level cryptographic primitives and the many ways to use them. We do provide a lot of high-level solutions that we'd strongly prefer you use. On the other hand, we have consulted on so many real-world systems that we know all too well that some people need to trade off the absolute best security possible for some other requirement. The whole security game is about risk mitigation, and it's up to you to decide what your acceptable levels of risk are. We have tried to accommodate people who may have nonstandard requirements, and to teach those people the risks involved in what they're doing. If we simply provide high-level solutions, many people won't use them, and will continue to build their own ad hoc solutions without adequate guidance.

This book could be friendlier to Windows developers.

In general, we cover the native Win32 API, rather than the variety of other API sets that Microsoft offers, such as ATL and MFC. It would simply be infeasible to cover all of them, so we've opted to cover the one that everything else builds on. We're sorry if you have to go to a lower-level API than you might like if you want to use our code, but at least this way the recipes are more widely applicable.

Much of the code that we present in the book will work on both Unix and Windows with little or no modification. In these cases, we've favored traditional Unix naming conventions. The naming conventions may feel foreign, but the bottom line is that no matter what platform you're writing code for, naming conventions are a matter of personal preference.

If you thumb through the table of contents, you'll quickly find that this book contains a considerable amount of material relating to cryptography. Where it was reasonable to do so, we've covered CryptoAPI for Windows, but on the whole, OpenSSL gets far better coverage. It is our experience that CryptoAPI is not nearly as full-featured as OpenSSL in many respects. Further, some of the built-in Windows APIs for things such as SSL are far more complex than we felt was reasonable to cover. Security is something that is difficult to get right even with a good API to work with; an overly complex and underdocumented API certainly doesn't help the situation.

We've tried our best to give Unix and Windows equivalent coverage. However, for some topic areas, one platform may receive more in-depth attention. Generally, this is because of a specific strength or weakness in the platform. We do believe both Windows and Unix programmers can benefit from the material contained in this book.

There will still be security problems in code despite this book.

We have done our best to give you the tools you need to make your code a lot better. But even security gurus occasionally manage to write code with much bigger risks than anticipated. You should expect that it may happen to you, too, no matter what you know about security. One caveat: you should not use the code in this book as if it were a code library you can simply link against. You really need to read the text and understand the problems our code is built to avoid to make sure that you actually use our code in the way it was intended. This is no different from any other API, where you really should RTFM thoroughly before coding if you want to have a chance of getting things right.

Despite the shortcomings some readers may find, we think this book has a great deal to offer. In addition, we will do the best job we can to supplement this book on the Web in hopes of making the material even better.

Organization of This Book

Because this book is a cookbook, the text is not presented in tutorial style; it is a comprehensive reference, filled with code that meets common security needs. We do not intend for this book to be read straight through. Instead, we expect that you will consult this book when you need it, just to pick out the information and code that you need.

To that end, here is a strategy for getting the most out of this book:

Each recipe is named in some detail. Browse through the table of contents and through the list of supplemental recipes on the book's web site.

Before reading appropriate recipes, take a look at the chapter introduction and the first few recipes in the chapter for fundamental background on the topic.

Sometimes, we offer a general recipe providing an overview of possible solutions to a problem, and then more specific recipes for each solution. For example, we have a generic recipe on buffer overflows that helps you determine which technology is best for your application; then there are recipes covering specific technologies that couldn't have been covered concisely in the overview.

If particular concepts are unclear, look them up in the glossary, which is available on the book's web site.

Throughout each recipe, we detail potential "gotchas" that you should consider, so be sure to read recipes in their entirety.

The book is divided into 13 chapters:

Chapter 1, Safe Initialization, provides recipes for making sure your programs are in a secure state on startup and when calling out to other programs.

Chapter 2, Access Control, shows how to manipulate files and directories in a secure manner. We demonstrate both the Unix permissions model and the Windows access control lists used to protect files and other resources.

Chapter 3, Input Validation, teaches you how to protect your programs from malicious user input. In this chapter, we demonstrate techniques for preventing things like buffer overflow problems, cross-site scripting attacks, format string errors, and SQL-injection attacks.

Chapter 4, Symmetric Cryptography Fundamentals, covers basic encoding and storage issues that are often helpful in traditional encryption.

Chapter 5, Symmetric Encryption, shows how to choose and use symmetric encryption primitives such as AES, the Advanced Encryption Standard.

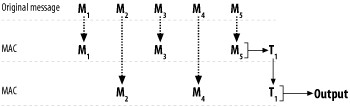

Chapter 6, Hashes and Message Authentication, focuses on ensuring data integrity using message authentication codes.

Chapter 7, Public Key Cryptography, teaches you how to use basic public key algorithms such as RSA.

Chapter 8, Authentication, shows you how to manipulate login credentials. We focus on implementing password-based systems as securely as possible, because this is what most people want to use. Here we also cover a wide variety of technologies, including PAM and Kerberos.

Chapter 9, Networking, provides code for securing your network connections. We discuss SSL and TLS, and also describe more lightweight protocols for when you do not want to set up a public key infrastructure. We strongly encourage you to come here before you go to the cryptography chapters, because it is exceedingly difficult to build a secure network protocol from parts.

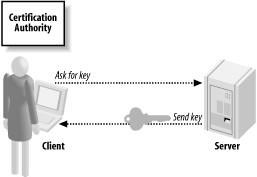

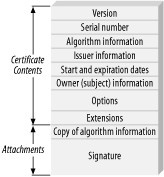

Chapter 10, Public Key Infrastructure, is largely a supplement for Chapter 9 for when you are using a public key infrastructure (PKI), as well as when you are using the SSL/TLS protocol. In this chapter, we demonstrate best practices for using a PKI properly. For example, we show how to determine whether certificates have expired or are otherwise invalid.

Chapter 11, Random Numbers, describes how to get secure random data and turn such data into an efficient and secure stream of pseudo-random numbers.

Chapter 12, Anti-Tampering, gives you the foundations necessary to start protecting your software against reverse engineering. There are no absolute solutions in this area, but if you are willing to put a lot of effort into it, you can make reverse engineering significantly more difficult.

Chapter 13, Other Topics, contains a potpourri of topics that did not fit into other chapters, such as erasing secrets from memory properly, writing a secure signal handler, and preventing common attacks against the Windows messaging system.

In addition, our web site contains a glossary providing a comprehensive listing of the many security-related terms used throughout this book, complete with concise definitions.

Recipe Compatibility

Most of the recipes in this book are written to work on both Unix and Windows platforms. In some cases, however, we have provided different versions for these platforms. In the individual recipes, we've noted any such issues. For convenience, Table P-1 lists those recipes that are specific to one particular platform. Note also that in a few cases, recipes work only on particular variants of Unix.

Table P-1. Platform-specific recipes

| Recipe | System | Recipe | System |
|---|---|---|---|
| 1.1 | Unix | 8.2 | Unix |
| 1.2 | Windows | 8.3 | Windows |
| 1.3 | Unix | 8.6 | Unix |
| 1.4 | Unix | 8.9 | Unix |
| 1.5 | Unix | 8.13 | Unix |
| 1.6 | Unix | 9.5 | Windows |
| 1.7 | Unix | 9.9 | Unix [2] |
| 1.8 | Windows | 10.6 | Windows |
| 1.9 | Unix | 10.11 | Windows |
| 1.5 | Unix | 11.3 | Unix |
| 2.1 | Unix | 11.4 | Windows |
| 2.2 | Windows | 11.7 | Unix |
| 2.3 | Unix | 11.21 | Windows |
| 2.7 | Unix | 12.13 | Unix |
| 2.9 | Unix | 12.14 | Windows |
| 2.10 | Windows | 12.15 | Windows |
| 2.12 | Unix | 12.17 | Unix [3] |
| 2.13 | FreeBSD | 13.5 | Unix |
| 5.25 | Windows | 13.6 | Windows |
| 5.26 | Windows | 13.9 | Unix |
| 5.26 | Windows | 13.10 | Windows |

[2] This recipe works for FreeBSD, Linux, and NetBSD. It does not work for Darwin, OpenBSD, and Solaris.

[3] This recipe works for FreeBSD, Linux, NetBSD, OpenBSD, and Solaris. It does not work for Darwin.

Conventions Used in This Book

The following typographical conventions are used in this book:

Italic

Is used for filenames, directory names, and URLs. It is also used for emphasis and for the first use of a technical term.

Constant width

Is used for code examples. It is also used for functions, arguments, structures, environment variables, data types, and values.

Tip

Indicates a tip, suggestion, or general note.

Warning

Indicates a warning or caution.

Comments and Questions

We have tested and verified the information in this book to the best of our ability, but you may find that we have made mistakes.

If you find problems with the book or have technical questions, please begin by visiting our web site to see whether your concerns are addressed:

http://www.secureprogramming.com

As mentioned earlier, we keep an updated list of known errors in the book on that page, along with new recipes. You can also submit your own recipes or suggestions for new recipes on that page.

If you do not find what you're looking for on our web site, feel free to contact us by sending email to:

| [email protected] |

You may also contact O'Reilly directly with questions or concerns:

O'Reilly & Associates

1005 Gravenstein Highway North

Sebastopol, CA 95472

(800) 998-9938 (in the United States or Canada)

(707) 829-0515 (international/local)

(707) 829-0104 (fax)

To ask technical questions or comment on the book, send email to:

| [email protected] |

The O'Reilly web site for the book lists errata and any plans for future editions. You can access this page at:

http://www.oreilly.com/catalog/secureprgckbk

For information about other books and O'Reilly in general, see the O'Reilly web site:

http://www.oreilly.com

Acknowledgments

This book is all the better for its reviewers, including Seth Arnold, Theo de Raadt, Erik Fichtner, Bob Fleck, Simson Garfinkel, Russ Housley, Mike Howard, Douglas Kilpatrick, Tadayoshi Kohno, John Regehr, Ray Schneider, Alan Schwartz, Fred Tarabay, Rodney Thayer, David Wagner, Scott Walters, and Robert Zigweid. In addition, we would like to single out Tom O'Connor for his Herculean efforts in review and detailed comments.

Zakk Girouard did a lot of background work for us on material in Chapter 1, Chapter 2, Chapter 3, and Chapter 8, and wrote some text for us. We're very grateful, and, dude, we're sorry we didn't make it to your winter solstice party; we tried!

We'd also like to thank the wonderful staff at O'Reilly, particularly our editor, Debby Russell. They were all extraordinarily accommodating, and it was a pleasure working with them. In fact, this project was originally O'Reilly's idea. Sue Miller, our first editor at O'Reilly, initially suggested a Cryptography Cookbook that we were happy to do, and it evolved from there. Thanks for tapping us to write it. Thanks as well to Jon Orwant, who helped in the initial stages of the project.

Many thanks to Gene Spafford for contributing a wonderful foreword to this book and for his many contributions to the field.

Matt Mackall lent us his expertise, helping us to write Recipe 11.19 and providing good feedback on the rest of Chapter 11.

Chapter 12 was written "on the clock," by Secure Software staff, thanks to a contract from the Air Force Research Labs. Martin Stytz and Dawn Ross were responsible for the contract on the Air Force side, and they were a pleasure to work with. Eric Fedel, Zachary Girouard, and Paolo Soto were part of the technical work on this effort, and Kaye Kirsch provided (fantastic) administrative support.

Thanks to everyone at Secure Software for supporting this book, including Admiral Guy Curtis, Kaye Kirsch, and Peter Thimmesch. In addition, we'd like to thank Bill Coleman for being an all-around cool guy, even though he 12:10'd much of our caffeine supply and our stash of late-night snacks.

Finally, we'd like to thank Strong Bad for teaching us how to type up a book while wearing boxing gloves.

John Viega: Thanks to Crispin Cowan, Jeremy Epstein, Eric Fedel, Bob Fleck, Larry Hiller, Russ Housley, Tetsu Iwata, Tadayoshi Kohno, Ben Laurie, David McGrew, Rodney Thayer, David Wagner, Doug Whiting, and Jason Wright for conversations that ended up improving this book, either directly or indirectly. Thanks also to my good friend Paul Wouters for hosting the book's web site. And, as always, thanks to my family for their unflagging support. My daughters Emily and Molly deserve special thanks, because time I spend writing is often time I don't get to spend with them. Of course, if they were given a choice in the matter, this book probably wouldn't exist....

Over the years I've been lucky to have a number of excellent mentors. Thanks to Matt Conway, Russ Housley, Gary McGraw, Paul Reynolds, Greg Stein, and Peter Thimmesch—you were/are all excellent at the role.

I'd also like to thank Matt Messier for the awesome job he did on the book. I'm sorry it was so much more work than it was intended to be!

Finally, I would like to thank sugar-free Red Bull and Diet Dr. Pepper for keeping me awake to write. Narcolepsy is a pain.

Matt Messier: I would like to thank Jim Archer, Mike Bilow, Eric Fedel, Bob Fleck, Brian Gannon, Larry Hiller, Fred Tarabay, Steve Wells, and the Rabble Babble Crew (Ellen, Brad, Gina, and Michael especially) for moral support, and for listening to me ramble about whatever I happened to be writing about at the time, regardless of how much or how little sense I was making. An extra special "thank you" to my parents, without whom I would never be writing these words.

Thanks also to John Viega for pulling me in to work on this book, and for consistently pushing to make it as great as I believe it is. John, it's been a pleasure working with you.

Finally, a big thanks goes out to Red Bull and to Peter's wonderful contribution of the espresso machine in the kitchen that got me going every morning.

Chapter 1. Safe Initialization

Robust initialization of a program is important from a security standpoint, because the more parts of the program's environment that can be validated (e.g., input, privileges, system parameters) before any critical code runs, the better you can minimize the risks of many types of exploits. In addition, setting a variety of operating parameters to a known state will help thwart attackers who run a program in a hostile environment, hoping to exploit some assumption in the program regarding an external resource that the program accesses (either directly or indirectly). This chapter outlines some of these potential problems, and suggests solutions that work towards reducing the associated risks.

1.1. Sanitizing the Environment

Problem

Attackers can often control the value of important environment variables, sometimes even remotely—for example, in CGI scripts, where invocation data is passed through environment variables.

You need to make sure that an attacker does not set environment variables to malicious values.

Solution

Many programs and libraries, including the shared library loader on both Unix and Windows systems, depend on environment variable settings. Because environment variables are inherited from the parent process when a program is executed, an attacker can easily sabotage variables, causing your program to behave in an unexpected and even insecure manner.

Typically, Unix systems are considerably more dependent on environment variables than are Windows systems. In fact, the only dependency common to both Unix and Windows is an environment variable that defines the path the system should search to find an executable or shared library, although the variable names differ between platforms. On Windows, one environment variable controls the search path for finding both executables and shared libraries. On Unix, these are controlled by separate environment variables. Generally, you should not specify a bare filename and then rely on these variables to determine the full path. Instead, you should always use absolute paths to known locations.[1]
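As a minimal sketch of the absolute-path advice above, the following Unix-only fragment refuses to launch anything that is not named by an absolute path, and it calls execve( ) so that no search-path variable is consulted. The helper names is_absolute_path( ) and exec_absolute( ) are hypothetical and are introduced here only for illustration; they are not part of any library.

```c
#include <assert.h>
#include <stddef.h>
#include <unistd.h>

/* Hypothetical helper: returns 1 if path begins with '/', 0 otherwise. */
int is_absolute_path(const char *path) {
    assert(path != NULL);
    return path[0] == '/';
}

/*
 * Hypothetical helper: run a program named by an absolute path, with an
 * explicitly supplied environment. execve() does not search PATH, unlike
 * execvp() or execlp(). Returns -1 if the path is refused or if execve()
 * fails; on success it does not return.
 */
int exec_absolute(const char *path, char *const argv[], char *const envp[]) {
    if (!is_absolute_path(path))
        return -1;              /* refuse names that would need a PATH search */
    return execve(path, argv, envp);
}
```

A caller might invoke exec_absolute("/bin/ls", argv, envp) after fork( ), passing an environment array it has built itself rather than the inherited one. Checking for a leading slash is only a first step; it says nothing about whether the file at that location is trustworthy.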

Certain variables expected to be present in the environment can cause insecure program behavior if they are missing or improperly set. Make sure, therefore, that you never fully purge the environment and leave it empty. Instead, variables that should exist should be forced to sane values or, at the very least, treated as highly suspect and examined closely before they're used. Remove any unknown variables from the environment altogether.
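The idea of forcing some variables to sane values and discarding everything unknown can be sketched roughly as follows. This is an illustration under stated assumptions, not a definitive implementation: the function name build_clean_env( ), the forced values for PATH and IFS, and the choice to preserve only TZ are all assumptions made for the example, and appropriate values differ between systems.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

struct env_pair { const char *name; const char *value; };

/* Assumed values, for illustration only: variables forced to a known state. */
static const struct env_pair forced_vars[] = {
    { "PATH", "/usr/bin:/bin" },
    { "IFS",  " \t\n" },
};
#define NFORCED (sizeof forced_vars / sizeof forced_vars[0])

/* Assumed list, for illustration only: variables kept if they are set. */
static const char *const preserved_names[] = { "TZ" };
#define NPRESERVED (sizeof preserved_names / sizeof preserved_names[0])

/* Build "name=value" in freshly allocated memory; returns NULL on failure. */
static char *make_entry(const char *name, const char *value) {
    size_t len;
    char *entry;

    assert(name != NULL && value != NULL);
    len = strlen(name) + 1 + strlen(value) + 1;
    entry = malloc(len);
    if (entry != NULL)
        snprintf(entry, len, "%s=%s", name, value);
    return entry;
}

/*
 * Return a newly allocated, NULL-terminated environment array holding only
 * the forced and preserved variables, or NULL if allocation fails. Any
 * variable not named in the two lists above is simply left out.
 */
char **build_clean_env(void) {
    size_t i, n = 0;
    char **env = calloc(NFORCED + NPRESERVED + 1, sizeof *env);

    if (env == NULL)
        return NULL;
    for (i = 0; i < NFORCED; i++) {
        env[n] = make_entry(forced_vars[i].name, forced_vars[i].value);
        if (env[n] == NULL)
            goto fail;
        n++;
    }
    for (i = 0; i < NPRESERVED; i++) {
        /* A preserved value is still untrusted input; examine it before use. */
        const char *value = getenv(preserved_names[i]);
        if (value == NULL)
            continue;           /* not set, so there is nothing to preserve */
        env[n] = make_entry(preserved_names[i], value);
        if (env[n] == NULL)
            goto fail;
        n++;
    }
    return env;                 /* calloc() left the terminating NULL in place */

fail:
    while (n > 0)
        free(env[--n]);
    free(env);
    return NULL;
}
```

The returned array can be passed as the environment argument of execve( ) when launching another program. Building a fresh array from a short list of known names, rather than deleting suspicious entries one by one, means that an unexpected variable is dropped by default.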

Discussion

The standard C runtime library defines a global variable,[2] environ, as a NULL-terminated array of strings, where each string in the array is of the form "name=value".

Most systems do not declare the variable in any standard header file, Linux being the notable exception, providing a declaration in unistd.h. You can gain access to the variable by including the following extern statement in your code:

extern char **environ;

Several functions defined in stdlib.h, such as getenv( ) and putenv( ), provide access to environment variables, and they all operate on this variable. You can therefore make changes to the contents of the array or even build a new array and assign it to the variable.
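As a minimal sketch of what operating on the array directly looks like, the helpers below count the entries in environ and locate the entry for a given name. The names spc_env_count( ) and spc_env_find( ) are hypothetical and are not part of this recipe's code; getenv( ) remains the usual interface for lookups.

```c
#include <stddef.h>
#include <string.h>

extern char **environ;

/* Hypothetical helper: number of "name=value" strings in environ. */
size_t spc_env_count(void) {
  size_t n = 0;

  if (!environ) return 0;
  while (environ[n]) n++;  /* the array ends with a NULL pointer */
  return n;
}

/* Hypothetical helper: return the whole "name=value" entry whose name
 * matches exactly, or NULL if there is none. */
const char *spc_env_find(const char *name) {
  size_t i, len = strlen(name);

  if (!environ || !len) return NULL;
  for (i = 0; environ[i]; i++) {
    /* Require the '=' so that "T" does not match "TZ=UTC". */
    if (!strncmp(environ[i], name, len) && environ[i][len] == '=')
      return environ[i];
  }
  return NULL;
}
```

Checking for the '=' immediately after the name matters: a plain prefix comparison would let a lookup for one variable return a different variable whose name merely starts the same way.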

This variable also exists in the standard C runtime library on Windows; however, the C runtime on Windows is not as tightly bound to the operating system as it is on Unix. Directly manipulating the environ variable on Windows will not necessarily produce the same effects as it will on Unix; the majority of Windows programs never use the C runtime at all, instead favoring the Win32 API to perform the same functions as those provided by the C runtime. Because of this, and because of Windows' lack of dependence on environment variables, we do not recommend using the code in this recipe on Windows. It simply does not apply. However, we do recommend that you at least skim the textual content of this recipe so that you're aware of potential pitfalls that could affect you on Windows.

On a Unix system, if you invoke the command printenv at a shell prompt, you'll likely see a sizable list of environment variables as a result. Many of the environment variables you will see are set by whichever shell you're using (e.g., bash or tcsh). You should never use or trust any of the environment variables that are set by the shell. In addition, a malicious user may be able to set other environment variables.

In most cases, the information contained in the environment variables set by the shell can be determined by much more reliable means. For example, most shells set the HOME environment variable, which is intended to be the user's home directory. It's much more reliable to call getuid( ) to determine who the user is, and then call getpwuid( ) to get the user's password file record, which will contain the user's home directory. For example:

#include <sys/types.h>

#include <stdio.h>

#include <string.h>

#include <unistd.h>

#include <pwd.h>

int main(int argc, char *argv[ ]) {

uid_t uid;

struct passwd *pwd;

uid = getuid( );

printf("User's UID is %d.\n", (int)uid);

if (!(pwd = getpwuid(uid))) {

printf("Unable to get user's password file record!\n");

endpwent( );

return 1;

}

printf("User's home directory is %s\n", pwd->pw_dir);

endpwent( );

return 0;

}

Warning

The code above is not thread-safe. Be sure multiple threads do not try to manipulate the password database at the same time.
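Where thread safety matters, one option is the POSIX reentrant counterpart getpwuid_r( ), which writes the record into caller-supplied storage instead of a shared static buffer. The following is a sketch only: spc_home_dir( ) is a hypothetical name, and the 16 KB scratch buffer is an assumed size that is treated as a hard limit rather than grown on demand.

```c
#include <sys/types.h>
#include <pwd.h>
#include <string.h>

/* Hypothetical helper: copy the home directory of uid into out.
 * Returns 0 on success, -1 on any failure (no such user, lookup error,
 * or a buffer that is too small). */
int spc_home_dir(uid_t uid, char *out, size_t outlen) {
  struct passwd pwd, *result = NULL;
  char buf[16384];  /* assumed large enough for one passwd record */
  size_t len;

  if (!out || !outlen) return -1;
  /* getpwuid_r( ) returns 0 and sets result to NULL if no entry exists. */
  if (getpwuid_r(uid, &pwd, buf, sizeof(buf), &result) != 0 || !result)
    return -1;
  len = strlen(result->pw_dir);
  if (len >= outlen) return -1;  /* refuse to truncate a path */
  memcpy(out, result->pw_dir, len + 1);
  return 0;
}
```

The helper fails rather than truncating, because a silently shortened home directory could name a different, attacker-controlled location.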

In many cases, it is reasonably safe to throw away most of the environment variables that your program will inherit from its parent process, but you should make it a point to be aware of any environment variables that will be used by code you're using, including the operating system's dynamic loader and the standard C runtime library. In particular, dynamic loaders on ELF-based Unix systems and most standard implementations of malloc( ) recognize a wide variety of environment variables that control their behavior. (Among the Unix variants we're explicitly supporting in this book, Darwin is the major exception, because it does not use ELF, the Executable and Linking Format, for its executables.)

In most cases, you should never be doing anything in your programs that will make use of the PATH environment variable. Circumstances do exist in which it may be reasonable to do so, but make sure to weigh your options carefully beforehand. Indeed, you should consider carefully whether you should be using any environment variable in your programs. Regardless, if you launch external programs from within your program, you may not have control over what the external programs do, so you should take care to provide any external programs you launch with a sane and secure environment.

In particular, the two environment variables IFS and PATH should always be forced to sane values. The IFS environment variable is somewhat obscure, but it is used by many shells to determine which character separates command-line arguments. Modern Unix shells use a reasonable default value for IFS if it is not already set. Nonetheless, you should defensively assume that the shell does nothing of the sort. Therefore, instead of simply deleting the IFS environment variable, set it to something sane, such as a space, tab, and newline character.

The PATH environment variable is used by the shell and some of the exec*( ) family of standard C functions to locate an executable if a path is not explicitly specified. The search path should never include relative paths, especially the current directory as denoted by a single period. To be safe, you should always force the setting of the PATH environment variable to _PATH_STDPATH, which is defined in paths.h. This value is what the shell normally uses to initialize the variable, but an attacker or naïve user could change it later. The definition of _PATH_STDPATH differs from platform to platform, so using that value rather than a hardcoded string gives you the right standard paths for the system your program is running on.
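If you ever need to audit a search path rather than simply overwrite it, the rule about relative entries can be checked mechanically. The function below is a hypothetical helper, not part of this recipe's code; it assumes a colon-separated Unix search path and treats an empty entry as unsafe because it stands for the current directory.

```c
#include <string.h>

/* Hypothetical check: returns 1 if every colon-separated entry in path
 * is an absolute directory, 0 otherwise. An empty entry is rejected
 * because it is interpreted as the current directory. */
int spc_path_is_absolute_only(const char *path) {
  const char *entry = path, *sep;

  if (!path || !*path) return 0;
  for (;;) {
    if (*entry != '/') return 0;  /* relative, ".", or empty entry */
    if (!(sep = strchr(entry, ':'))) return 1;
    entry = sep + 1;
  }
}
```

Note that a trailing colon, as in "/bin:", also produces an empty final entry and is rejected.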

Finally, the TZ environment variable denotes the time zone that the program should use, when relevant. Because users may not be in the same time zone as the machine (which will use a default whenever the variable is not set), it is a good idea to preserve this variable, if present. Note also that this variable is generally used by the OS, not the application. If you're using it at the application level, make sure to do proper input validation to protect against problems such as buffer overflow.

On many systems, TZ is defined to be the time zone. All systems will use it if it is defined, and while most systems will get along fine without it, some will not function properly unless it is set. Therefore, you should preserve it if it is present.
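If your own code consumes TZ, the input validation mentioned above might look something like the following sketch. The policy here is an assumption made for illustration: the length limit, the set of accepted characters, and the rejection of ".." are not taken from any standard, and spc_tz_is_sane( ) is a hypothetical name.

```c
#include <ctype.h>
#include <string.h>

#define SPC_TZ_MAXLEN 64  /* assumed limit, not a system constant */

/* Hypothetical policy check: returns 1 if tz is short, non-empty, made
 * up only of characters seen in common TZ values, and free of ".."
 * sequences; returns 0 otherwise. */
int spc_tz_is_sane(const char *tz) {
  size_t i, len;

  if (!tz) return 0;
  len = strlen(tz);
  if (!len || len > SPC_TZ_MAXLEN) return 0;
  if (strstr(tz, "..")) return 0;  /* no climbing out of a zone directory */
  for (i = 0; i < len; i++) {
    unsigned char c = (unsigned char)tz[i];
    if (!isalnum(c) && !strchr("+-:,./_<>", c)) return 0;
  }
  return 1;
}
```

Checking the length before anything else means that later code can copy the value into a fixed-size buffer of SPC_TZ_MAXLEN + 1 bytes without risk of overflow.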

Any other environment variables that are defined should be removed unless you know, for some reason, that you need the variable to be set. For any environment variables you preserve, be sure to treat them as untrusted user input. You may be expecting them to be set to reasonable values (and in most cases, they probably will be), but never assume they are. If for some reason you're writing CGI code in C, the list of environment variables passed from the web server to your program can be somewhat large. Because the web server sets these variables itself, they are largely trustworthy unless an attacker somehow manages to wedge another program between the web server and your program. Keep in mind, however, that the values of variables derived from the request (for example, QUERY_STRING, CONTENT_LENGTH, and anything beginning with HTTP_) are supplied by the client and must still be validated.

Of particular interest among environment variables commonly passed from a web server to CGI scripts are any environment variables whose names begin with HTTP_ and those listed in Table 1-1.

Table 1-1. Environment variables commonly passed from web servers to CGI scripts

| Environment variable name | Comments |

|---|---|

| AUTH_TYPE | If authentication was required to make the request, this contains the authentication type that was used, usually "BASIC". |

| CONTENT_LENGTH | The number of bytes of content, as specified by the client. |

| CONTENT_TYPE | The MIME type of the content sent by the client. |

| GATEWAY_INTERFACE | The version of the CGI specification with which the server complies. |

| PATH_INFO | Extra path information from the URL. |

| PATH_TRANSLATED | Extra path information from the URL, translated by the server. |

| QUERY_STRING | The portion of the URL following the question mark. |

| REMOTE_ADDR | The IP address of the remote client in dotted decimal form. |

| REMOTE_HOST | The host name of the remote client. |

| REMOTE_IDENT | If RFC 1413 identification was used, this contains the user name that was retrieved from the remote identification server. |

| REMOTE_USER | If authentication was required to make the request, this contains the user name that was authenticated. |

| REQUEST_METHOD | The method used to make the current request, usually either "GET" or "POST". |

| SCRIPT_NAME | The name of the script that is running, canonicalized to the root of the web site's document tree (e.g., DocumentRoot in Apache). |

| SERVER_NAME | The host name or IP address of the server. |

| SERVER_PORT | The port on which the server is running. |

| SERVER_PROTOCOL | The protocol used to make the request, typically "HTTP/1.0" or "HTTP/1.1". |

| SERVER_SOFTWARE | The name and version of the server. |
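Because several of the values in Table 1-1 originate with the client, they deserve strict parsing. As one illustration, the sketch below parses a CONTENT_LENGTH-style string and rejects anything that is not a plain decimal number within a caller-chosen limit. The helper name spc_parse_content_length( ) and its max parameter are hypothetical.

```c
#include <errno.h>
#include <stdlib.h>

/* Hypothetical helper: parse a CONTENT_LENGTH-style decimal string.
 * Returns 0 and stores the value in *out if s consists only of digits
 * and the value does not exceed max; returns -1 otherwise. */
int spc_parse_content_length(const char *s, unsigned long max,
                             unsigned long *out) {
  char *end;
  unsigned long value;

  if (!s || !out) return -1;
  /* strtoul( ) would accept leading whitespace and a sign, so insist
   * on a digit up front. */
  if (*s < '0' || *s > '9') return -1;
  errno = 0;
  value = strtoul(s, &end, 10);
  if (errno == ERANGE || *end != '\0' || value > max) return -1;
  *out = value;
  return 0;
}
```

A caller would typically pass the result of getenv("CONTENT_LENGTH") as the first argument; a NULL result from getenv( ) is rejected along with malformed values.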

The code presented in this section defines a function called spc_sanitize_environment( ) that will build a new environment with the IFS and PATH environment variables set to sane values, and with the TZ environment variable preserved from the original environment if it is present. You can also specify a list of environment variables to preserve from the original in addition to the TZ environment variable.

The first thing that spc_sanitize_environment( ) does is determine how much memory it will need to allocate to build the new environment. If the memory it needs cannot be allocated, the function will call abort( ) to terminate the program immediately. Otherwise, it will then build the new environment and replace the old environ pointer with a pointer to the newly allocated one. Note that the memory is allocated in one chunk rather than in smaller pieces for the individual strings. While this is not strictly necessary (and it does not provide any specific security benefit), it's faster and places less strain on memory allocation. Note, however, that you should be performing this operation early in your program, so heap fragmentation shouldn't be much of an issue.

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <paths.h>

extern char **environ;

/* These arrays are both NULL-terminated. */

static char *spc_restricted_environ[ ] = {

"IFS= \t\n",

"PATH=" _PATH_STDPATH,

0

};

static char *spc_preserve_environ[ ] = {

"TZ",

0

};

void spc_sanitize_environment(int preservec, char **preservev) {

int i;

char **new_environ, *ptr, *value, *var;

size_t arr_size = 1, arr_ptr = 0, len, new_size = 0;

for (i = 0; (var = spc_restricted_environ[i]) != 0; i++) {

new_size += strlen(var) + 1;

arr_size++;

}

for (i = 0; (var = spc_preserve_environ[i]) != 0; i++) {

if (!(value = getenv(var))) continue;

new_size += strlen(var) + strlen(value) + 2; /* include the '=' */

arr_size++;

}

if (preservec && preservev) {

for (i = 0; i < preservec && (var = preservev[i]) != 0; i++) {

if (!(value = getenv(var))) continue;

new_size += strlen(var) + strlen(value) + 2; /* include the '=' */

arr_size++;

}

}

new_size += (arr_size * sizeof(char *));

if (!(new_environ = (char **)malloc(new_size))) abort( );

new_environ[arr_size - 1] = 0;

ptr = (char *)new_environ + (arr_size * sizeof(char *));

for (i = 0; (var = spc_restricted_environ[i]) != 0; i++) {

new_environ[arr_ptr++] = ptr;

len = strlen(var);

memcpy(ptr, var, len + 1);

ptr += len + 1;

}

for (i = 0; (var = spc_preserve_environ[i]) != 0; i++) {

if (!(value = getenv(var))) continue;

new_environ[arr_ptr++] = ptr;

len = strlen(var);

memcpy(ptr, var, len);

*(ptr + len) = '=';

memcpy(ptr + len + 1, value, strlen(value) + 1);

ptr += len + strlen(value) + 2; /* include the '=' */

}

if (preservec && preservev) {

for (i = 0; i < preservec && (var = preservev[i]) != 0; i++) {

if (!(value = getenv(var))) continue;

new_environ[arr_ptr++] = ptr;

len = strlen(var);

memcpy(ptr, var, len);

*(ptr + len) = '=';

memcpy(ptr + len + 1, value, strlen(value) + 1);

ptr += len + strlen(value) + 2; /* include the '=' */

}

}

environ = new_environ;

}
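The single-allocation layout used by spc_sanitize_environment( ), in which the pointer array comes first and the string bytes follow it in the same block, can be easier to follow in isolation. The sketch below packs an arbitrary list of strings the same way; spc_pack_strings( ) is a hypothetical helper written for illustration, and unlike the recipe's function it returns NULL on allocation failure instead of calling abort( ).

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: copy n strings into one malloc( )'d block laid
 * out as [n + 1 pointers][string bytes]. A single free( ) on the
 * result releases everything. Returns NULL on allocation failure. */
char **spc_pack_strings(const char *const *src, size_t n) {
  size_t i, len, bytes = (n + 1) * sizeof(char *);
  char **vec, *ptr;

  for (i = 0; i < n; i++) bytes += strlen(src[i]) + 1;
  if (!(vec = (char **)malloc(bytes))) return NULL;

  ptr = (char *)vec + (n + 1) * sizeof(char *);  /* strings follow pointers */
  for (i = 0; i < n; i++) {
    len = strlen(src[i]) + 1;                    /* include the NUL */
    memcpy(ptr, src[i], len);
    vec[i] = ptr;
    ptr += len;
  }
  vec[n] = NULL;                                 /* NULL-terminate the array */
  return vec;
}
```

Computing the total size in a first pass and copying in a second pass is what keeps every write inside the block; the two passes must agree on exactly how many bytes each entry needs.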

See Also

[1] Note that the shared library environment variable can be relatively benign on modern Unix-based operating systems, because the environment variable will get ignored when a program that can change permissions (i.e., a setuid program) is invoked. Nonetheless, it is better to be safe than sorry!

[2] The use of the term "variable" can quickly become confusing because C defines variables and the environment defines variables. In this recipe, when we are referring to a C variable, we simply say "variable," and when we are referring to an environment variable, we say "environment variable."

1.2. Restricting Privileges on Windows

Problem

Your Windows program runs with elevated privileges, such as Administrator or Local System, but it does not require all the privileges granted to the user account under which it's running. Your program never needs to perform certain actions that may be dangerous if users with elevated privileges run it and an attacker manages to compromise the program.

Solution

When a user logs into the system or the service control manager starts a service, a token is created that contains information about the user logging in or the user under which the service is running. The token contains a list of all of the groups to which the user belongs (the user and each group in the list is represented by a Security ID or SID), as well as a set of privileges that any thread running with the token has. The set of privileges is initialized from the privileges assigned by the system administrator to the user and the groups to which the user belongs.

Beginning with Windows 2000, it is possible to create a restricted token and force threads to run using that token. Once a restricted token has been applied to a running thread, any restrictions imposed by the restricted token cannot be lifted; however, it is possible to revert the thread back to its original unrestricted token. With restricted tokens, it's possible to remove privileges, restrict the SIDs that are used in access checking, and deny SIDs access. The use of restricted tokens is more useful when combined with the CreateProcessAsUser( ) API to create a new process with a restricted token that cannot be reverted to a more permissive token.

Beginning with Windows .NET Server 2003, it is possible to permanently remove privileges from a process's token. Once the privileges have been removed, they cannot be added back. Any new processes created by a process running with a modified token will inherit the modified token; therefore, the same restrictions imposed upon the parent process are also imposed upon the child process. Note that modifying a token is quite different from creating a restricted token. In particular, only privileges can be removed; SIDs can be neither restricted nor denied.

Discussion

Tokens contain a list of SIDs, composed of the user's SID and one SID for each group of which the user is a member. SIDs are assigned by the system when users and groups are created. In addition to the SIDs, tokens also contain a list of restricted SIDs. When access checks are performed and the token contains a list of restricted SIDs, the intersection of the two lists of SIDs contained in the token is used to perform the access check. Finally, tokens also contain a list of privileges. Privileges define specific access rights. For example, for a process to use the Win32 debugging API, the process's token must contain the SeDebugPrivilege privilege.

The primary list of SIDs contained in a token cannot be modified. The token is created for a particular user, and the token must always contain the user's SID along with the SIDs for each group of which the user is a member. However, each SID in the primary list can be marked with a "deny" attribute, which causes access to be denied when an access control list (ACL) contains a SID that is marked as "deny" in the active token.
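The interaction between "deny" SIDs and restricted SIDs can be pictured with a deliberately simplified model. The code below is not Win32 code and does not reflect how the system implements access checks: SIDs are plain strings, each ACL entry allows or denies everything, and all of the toy_ names are hypothetical. It assumes that an entry marked "deny" still matches deny entries in an ACL but never satisfies an allow entry, and that when restricted SIDs are present, both the primary list and the restricted list must independently grant access.

```c
#include <stddef.h>
#include <string.h>

/* Toy model only: real SIDs are opaque binary objects and real ACEs
 * carry access masks. Here a SID is a string and an ACE either allows
 * or denies everything. */
typedef struct { const char *sid; int deny_only; } toy_sid;
typedef struct { const char *sid; int is_deny;   } toy_ace;

/* Walk the ACL in order against one list of SIDs. */
static int toy_walk_acl(const toy_ace *acl, size_t n_ace,
                        const toy_sid *sids, size_t n_sid) {
  size_t a, s;

  for (a = 0; a < n_ace; a++) {
    for (s = 0; s < n_sid; s++) {
      if (strcmp(acl[a].sid, sids[s].sid) != 0) continue;
      if (acl[a].is_deny) return 0;      /* deny ACEs always apply */
      if (!sids[s].deny_only) return 1;  /* allow ACEs skip deny-only SIDs */
    }
  }
  return 0;  /* nothing granted access */
}

/* Returns 1 if access is granted. With a non-empty restricted list,
 * both the primary list and the restricted list must grant access. */
int toy_access_check(const toy_ace *acl, size_t n_ace,
                     const toy_sid *sids, size_t n_sid,
                     const toy_sid *restricted, size_t n_restricted) {
  if (!toy_walk_acl(acl, n_ace, sids, n_sid)) return 0;
  if (n_restricted == 0) return 1;
  return toy_walk_acl(acl, n_ace, restricted, n_restricted);
}
```

In this model, marking a group SID as "deny" can only take access away, and adding restricted SIDs can only narrow what the primary list already allows.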

Creating restricted tokens

Using the CreateRestrictedToken( ) API, a restricted token can be created from an existing token. The resulting token can then be used to create a new process or to set an impersonation token for a thread. In the former case, the restricted token becomes the newly created process's primary token; in the latter case, the thread can revert back to its primary token, effectively making the restrictions imposed by the restricted token useful for little more than helping to prevent accidents.

CreateRestrictedToken( ) requires a large number of arguments, and it may seem an intimidating function to use, but with some explanation and examples, it's not actually all that difficult. The function has the following signature:

BOOL CreateRestrictedToken(HANDLE ExistingTokenHandle, DWORD Flags,

DWORD DisableSidCount, PSID_AND_ATTRIBUTES SidsToDisable,

DWORD DeletePrivilegeCount, PLUID_AND_ATTRIBUTES PrivilegesToDelete,

DWORD RestrictedSidCount, PSID_AND_ATTRIBUTES SidsToRestrict,

PHANDLE NewTokenHandle);

This function has the following arguments:

ExistingTokenHandle

Handle to an existing token. An existing token handle can be obtained via a call to either OpenProcessToken( ) or OpenThreadToken( ). The token may be either a primary or a restricted token. In the latter case, the token may be obtained from an earlier call to CreateRestrictedToken( ). The existing token handle must have been opened or created with TOKEN_DUPLICATE access.

Flags

May be specified as 0 or as a combination of DISABLE_MAX_PRIVILEGE and SANDBOX_INERT. If DISABLE_MAX_PRIVILEGE is used, all privileges in the new token are disabled, and the two arguments DeletePrivilegeCount and PrivilegesToDelete are ignored. The SANDBOX_INERT flag has no special meaning other than that it is stored in the token and can later be queried using GetTokenInformation( ).

DisableSidCount

Number of elements in the list SidsToDisable. May be specified as 0 if there are no SIDs to be disabled. Disabling a SID is the same as enabling the SID's "deny" attribute.

SidsToDisable

List of SIDs for which the "deny" attribute is to be enabled. May be specified as NULL if no SIDs are to have the "deny" attribute enabled. See below for information on the SID_AND_ATTRIBUTES structure.

DeletePrivilegeCount

Number of elements in the list PrivilegesToDelete. May be specified as 0 if there are no privileges to be deleted.

PrivilegesToDelete

List of privileges to be deleted from the token. May be specified as NULL if no privileges are to be deleted. See below for information on the LUID_AND_ATTRIBUTES structure.

RestrictedSidCount

Number of elements in the list SidsToRestrict. May be specified as 0 if there are no restricted SIDs to be added.

SidsToRestrict

List of SIDs to restrict. If the existing token is a restricted token that already has restricted SIDs, the resulting token will have a list of restricted SIDs that is the intersection of the existing token's list and this list. May be specified as NULL if no restricted SIDs are to be added to the new token.

NewTokenHandle

Pointer to a HANDLE that will receive the handle to the newly created token.

The function OpenProcessToken( ) will obtain a handle to the process's primary token, while OpenThreadToken( ) will obtain a handle to the calling thread's impersonation token. Both functions have a similar signature, though their arguments are treated slightly differently:

BOOL OpenProcessToken(HANDLE hProcess, DWORD dwDesiredAccess, PHANDLE phToken);

BOOL OpenThreadToken(HANDLE hThread, DWORD dwDesiredAccess, BOOL bOpenAsSelf,

PHANDLE phToken);

These functions have the following arguments:

hProcess

Handle to the current process, which is normally obtained via a call to GetCurrentProcess( ).

hThread

Handle to the current thread, which is normally obtained via a call to GetCurrentThread( ).

dwDesiredAccess

Bit mask of the types of access desired for the returned token handle. For creating restricted tokens, this must always include TOKEN_DUPLICATE. If the restricted token being created will be used as a primary token for a new process, you must include TOKEN_ASSIGN_PRIMARY; otherwise, if the restricted token that will be created will be used as an impersonation token for the thread, you must include TOKEN_IMPERSONATE.

bOpenAsSelf

Boolean flag that determines how the access check for retrieving the thread's token is performed. If specified as FALSE, the access check uses the calling thread's permissions. If specified as TRUE, the access check uses the calling process's permissions.

phToken

Pointer to a HANDLE that will receive the handle to the process's primary token or the thread's impersonation token, depending on whether you're calling OpenProcessToken( ) or OpenThreadToken( ).

Creating a new process with a restricted token is done by calling CreateProcessAsUser( ), which works just as CreateProcess( ) does (see Recipe 1.8) except that it requires a token to be used as the new process's primary token. Normally, CreateProcessAsUser( ) requires that the active token have the SeAssignPrimaryTokenPrivilege privilege, but if a restricted token is used, that privilege is not required. The following pseudo-code demonstrates the steps required to create a new process with a restricted primary token:

HANDLE hProcessToken, hRestrictedToken;

/* First get a handle to the current process's primary token */

OpenProcessToken(GetCurrentProcess( ), TOKEN_DUPLICATE | TOKEN_ASSIGN_PRIMARY,

&hProcessToken);

/* Create a restricted token with all privileges removed */

CreateRestrictedToken(hProcessToken, DISABLE_MAX_PRIVILEGE, 0, 0, 0, 0, 0, 0,

&hRestrictedToken);

/* Create a new process using the restricted token */

CreateProcessAsUser(hRestrictedToken, ...);

/* Cleanup */

CloseHandle(hRestrictedToken);

CloseHandle(hProcessToken);

Setting a thread's impersonation token requires a bit more work. Unless the calling thread is impersonating, calling OpenThreadToken( ) will result in an error because the thread does not have an impersonation token and thus is using the process's primary token. Likewise, calling SetThreadToken( ) unless impersonating will also fail because a thread cannot have an impersonation token if it's not impersonating.

If you want to restrict a thread's access rights temporarily, the easiest solution to the problem is to force the thread to impersonate itself. When impersonation begins, the thread is assigned an impersonation token, which can then be obtained via OpenThreadToken( ). A restricted token can be created from the impersonation token, and the thread's impersonation token can then be replaced with the new restricted token by calling SetThreadToken( ).

The following pseudo-code demonstrates the steps required to replace a thread's impersonation token with a restricted one:

HANDLE hRestrictedToken, hThread, hThreadToken;

/* First begin impersonation */

ImpersonateSelf(SecurityImpersonation);

/* Get a handle to the current thread's impersonation token */

hThread = GetCurrentThread( );

OpenThreadToken(hThread, TOKEN_DUPLICATE | TOKEN_IMPERSONATE, TRUE, &hThreadToken);

/* Create a restricted token with all privileges removed */

CreateRestrictedToken(hThreadToken, DISABLE_MAX_PRIVILEGE, 0, 0, 0, 0, 0, 0,

&hRestrictedToken);

/* Set the thread's impersonation token to the new restricted token */

SetThreadToken(&hThread, hRestrictedToken);

/* ... perform work here */

/* Revert the thread's impersonation token back to its original */

SetThreadToken(&hThread, 0);

/* Stop impersonating */

RevertToSelf( );

/* Cleanup */

CloseHandle(hRestrictedToken);

CloseHandle(hThreadToken);

Modifying a process's primary token

Beginning with Windows .NET Server 2003, support for a new flag has been added to the function AdjustTokenPrivileges( ); it allows a privilege to be removed from a token, rather than simply disabled. Once the privilege has been removed, it cannot be added back to the token. In older versions of Windows, privileges could only be enabled or disabled using AdjustTokenPrivileges( ), and there was no way to remove privileges from a token without duplicating it. There is no way to substitute another token for a process's primary token; the best you can do in older versions of Windows is to use restricted impersonation tokens.

BOOL AdjustTokenPrivileges(HANDLE TokenHandle, BOOL DisableAllPrivileges,

PTOKEN_PRIVILEGES NewState, DWORD BufferLength,

PTOKEN_PRIVILEGES PreviousState, PDWORD ReturnLength);

This function has the following arguments:

TokenHandle

Handle to the token that is to have its privileges adjusted. The handle must have been opened with TOKEN_ADJUST_PRIVILEGES access; in addition, if PreviousState is to be filled in, it must have TOKEN_QUERY access.

DisableAllPrivileges

Boolean argument that specifies whether all privileges held by the token are to be disabled. If specified as TRUE, all privileges are disabled, and the NewState argument is ignored. If specified as FALSE, privileges are adjusted according to the information in the NewState argument.

NewState

List of privileges that are to be adjusted, along with the adjustment that is to be made for each. Privileges can be enabled, disabled, and removed. The TOKEN_PRIVILEGES structure contains two fields: PrivilegeCount and Privileges. PrivilegeCount is simply a DWORD that indicates how many elements are in the array that is the Privileges field. The Privileges field is an array of LUID_AND_ATTRIBUTES structures, for which the Attributes field of each element indicates how the privilege is to be adjusted. A value of 0 disables the privilege, SE_PRIVILEGE_ENABLED enables it, and SE_PRIVILEGE_REMOVED removes the privilege. See Section 1.2.3.4 later in this section for more information regarding these structures.

BufferLength

Length in bytes of the PreviousState buffer. May be 0 if PreviousState is NULL.

PreviousState

Buffer into which the state of the token's privileges prior to adjustment is stored. It may be specified as NULL if the information is not required. If the buffer is not specified as NULL, the token must have been opened with TOKEN_QUERY access.

ReturnLength

Pointer to an integer into which the number of bytes written into the PreviousState buffer will be placed. May be specified as NULL if PreviousState is also NULL.

The following example code demonstrates how AdjustTokenPrivileges( ) can be used to remove backup and restore privileges from a token:

#include <windows.h>

BOOL RemoveBackupAndRestorePrivileges(VOID) {

BOOL bResult;

HANDLE hProcess, hProcessToken;

PTOKEN_PRIVILEGES pNewState;

/* Allocate a TOKEN_PRIVILEGES buffer to hold the privilege change information.

* Two privileges will be adjusted, so make sure there is room for two

* LUID_AND_ATTRIBUTES elements in the Privileges field of TOKEN_PRIVILEGES.

*/

pNewState = (PTOKEN_PRIVILEGES)LocalAlloc(LMEM_FIXED, sizeof(TOKEN_PRIVILEGES) +

(sizeof(LUID_AND_ATTRIBUTES) * 2));

if (!pNewState) return FALSE;

/* Add the two privileges that will be removed to the allocated buffer */

pNewState->PrivilegeCount = 2;

if (!LookupPrivilegeValue(0, SE_BACKUP_NAME, &pNewState->Privileges[0].Luid) ||

!LookupPrivilegeValue(0, SE_RESTORE_NAME, &pNewState->Privileges[1].Luid)) {

LocalFree(pNewState);

return FALSE;

}

pNewState->Privileges[0].Attributes = SE_PRIVILEGE_REMOVED;

pNewState->Privileges[1].Attributes = SE_PRIVILEGE_REMOVED;

/* Get a handle to the process's primary token. Request TOKEN_ADJUST_PRIVILEGES

* access so that we can adjust the privileges. No other privileges are req'd

* since we'll be removing the privileges and thus do not care about the previous

* state. TOKEN_QUERY access would be required in order to retrieve the previous

* state information.

*/

hProcess = GetCurrentProcess( );

if (!OpenProcessToken(hProcess, TOKEN_ADJUST_PRIVILEGES, &hProcessToken)) {

LocalFree(pNewState);

return FALSE;

}

/* Adjust the privileges, specifying FALSE for DisableAllPrivileges so that the

* NewState argument will be used instead. Don't request information regarding

* the token's previous state by specifying 0 for the last three arguments.

*/

bResult = AdjustTokenPrivileges(hProcessToken, FALSE, pNewState, 0, 0, 0);

/* Cleanup and return the success or failure of the adjustment */

CloseHandle(hProcessToken);

LocalFree(pNewState);

return bResult;

}

Working with SID_AND_ATTRIBUTES structures

A SID_AND_ATTRIBUTES structure contains two fields: Sid and Attributes. The Sid field is of type PSID, which points to a variable-sized object that should never be directly manipulated by application-level code. The meaning of the Attributes field varies depending on the use of the structure. When a SID_AND_ATTRIBUTES structure is being used for disabling SIDs (enabling the "deny" attribute), the Attributes field is ignored. When a SID_AND_ATTRIBUTES structure is being used for restricting SIDs, the Attributes field should always be set to 0. In both cases, it's best to set the Attributes field to 0.

Initializing the Sid field of a SID_AND_ATTRIBUTES structure can be done in a number of ways, but perhaps one of the most useful ways is to use LookupAccountName( ) to obtain the SID for a specific user or group name. The following code demonstrates how to look up the SID for a name:

#include <windows.h>

PSID SpcLookupSidByName(LPCTSTR lpAccountName, PSID_NAME_USE peUse) {

PSID pSid;

DWORD cbSid, cchReferencedDomainName;

LPTSTR ReferencedDomainName;

SID_NAME_USE eUse;

cbSid = cchReferencedDomainName = 0;

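/* This first call only queries the required buffer sizes, so it is expected

* to fail with ERROR_INSUFFICIENT_BUFFER; any other failure is a real error.

*/

if (!LookupAccountName(0, lpAccountName, 0, &cbSid, 0, &cchReferencedDomainName,

&eUse) && GetLastError( ) != ERROR_INSUFFICIENT_BUFFER) return 0;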

if (!(pSid = LocalAlloc(LMEM_FIXED, cbSid))) return 0;

ReferencedDomainName = LocalAlloc(LMEM_FIXED,

(cchReferencedDomainName + 1) * sizeof(TCHAR));

if (!ReferencedDomainName) {

LocalFree(pSid);

return 0;

}

if (!LookupAccountName(0, lpAccountName, pSid, &cbSid, ReferencedDomainName,

&cchReferencedDomainName, &eUse)) {

LocalFree(ReferencedDomainName);

LocalFree(pSid);

return 0;

}

LocalFree(ReferencedDomainName);

if (peUse) *peUse = eUse;

return pSid;

}

If the requested account name is found, a PSID object allocated via LocalAlloc( ) is returned; otherwise, NULL is returned. If the second argument is specified as non-NULL, it will contain the type of SID that was found. Because Windows uses SIDs for many different things other than simply users and groups, the type could be one of many possibilities. If you're looking for a user, the type should be SidTypeUser. If you're looking for a group, the type should be SidTypeGroup. Other possibilities include SidTypeDomain, SidTypeAlias, SidTypeWellKnownGroup, SidTypeDeletedAccount, SidTypeInvalid, SidTypeUnknown, and SidTypeComputer.

Working with LUID_AND_ATTRIBUTES structures

An LUID_AND_ATTRIBUTES structure contains two fields: Luid and Attributes. The Luid field is of type LUID, which is an object that should never be directly manipulated by application-level code. The meaning of the Attributes field varies depending on the use of the structure. When an LUID_AND_ATTRIBUTES structure is being used for deleting privileges from a restricted token, the Attributes field is ignored and should be set to 0. When an LUID_AND_ATTRIBUTES structure is being used for adjusting privileges in a token, the Attributes field should be set to SE_PRIVILEGE_ENABLED to enable the privilege, SE_PRIVILEGE_REMOVED to remove the privilege, or 0 to disable the privilege. The SE_PRIVILEGE_REMOVED attribute is not valid on Windows NT, Windows 2000, or Windows XP; it is a newly supported flag in Windows .NET Server 2003.

Initializing the Luid field of an LUID_AND_ATTRIBUTES structure is typically done using LookupPrivilegeValue( ), which has the following signature:

BOOL LookupPrivilegeValue(LPCTSTR lpSystemName, LPCTSTR lpName, PLUID lpLuid);

This function has the following arguments:

lpSystemName

Name of the computer on which the privilege value's name is looked up. This is normally specified as NULL, which indicates that only the local system should be searched.

lpName

Name of the privilege to look up. The Windows platform SDK header file winnt.h defines a sizable number of privilege names as macros that expand to literal strings suitable for use here. Each of these macros begins with SE_, which is followed by the name of the privilege. For example, the SeBackupPrivilege privilege has a corresponding macro named SE_BACKUP_NAME.

lpLuid

Pointer to a caller-allocated LUID object that will receive the LUID information if the lookup is successful. LUID objects are a fixed size, so they may be allocated either dynamically or on the stack.

See Also

1.3. Dropping Privileges in setuid Programs

Problem

Your program runs setuid or setgid (see Section 1.3.3 for definitions), thus providing your program with extra privileges when it is executed. After the work requiring the extra privileges is done, those privileges need to be dropped so that an attacker cannot leverage your program during an attack that results in privilege elevation.

Solution

If your program must run setuid or setgid, make sure to use the privileges properly so that an attacker cannot exploit other possible vulnerabilities in your program and gain these additional privileges. You should perform whatever work requires the additional privileges as early in the program as possible, and you should drop the extra privileges immediately after that work is done.

While many programmers may be aware of the need to drop privileges, many more are not. Worse, those who do know to drop privileges rarely know how to do so properly and securely. Dropping privileges is tricky business because the semantics of the system calls to manipulate IDs for setuid/setgid vary from one Unix variant to another—sometimes only slightly, but often just enough to make the code that works on one system fail on another.

On modern Unix systems, the extra privileges resulting from using the setuid or setgid bits on an executable can be dropped either temporarily or permanently. It is best if your program can do what it needs to with elevated privileges, then drop those privileges permanently, but that's not always possible. If you must be able to restore the extra privileges, you will need to be especially careful in your program to do everything possible to prevent an attacker from being able to take control of those privileges. We strongly advise against dropping privileges only temporarily. You should do everything possible to design your program such that it can drop privileges permanently as quickly as possible. We do recognize that it's not always possible to do—the Unix passwd command is a perfect example: the last thing it does is use its extra privileges to write the new password to the password file, and it cannot do it any sooner.

Discussion

Before we can discuss how to drop privileges either temporarily or permanently, it's useful to have at least a basic understanding of how setuid, setgid, and the privilege model in general work on Unix systems. Because of space constraints and the complexity of it all, we're not able to delve very deeply into the inner workings here. If you are interested in a more detailed discussion, we recommend the paper "Setuid Demystified" by Hao Chen, David Wagner, and Drew Dean, which was presented at the 11th USENIX Security Symposium in 2002 and is available at http://www.cs.berkeley.edu/~daw/papers/setuid-usenix02.pdf.

On all Unix systems, each process has an effective user ID, a real user ID, an effective group ID, and a real group ID. In addition, each process on most modern Unix systems also has a saved user ID and a saved group ID.[3] All of the Unix variants that we cover in this book have saved user IDs, so our discussion assumes that the sets of user and group IDs each have an effective ID, a real ID, and a saved ID.

Normally when a process is executed, the effective, real, and saved user and group IDs are all set to the real user and group ID of the process's parent, respectively. However, when the setuid bit is set on an executable, the effective and saved user IDs are set to the user ID that owns the file. Likewise, when the setgid bit is set on an executable, the effective and saved group IDs are set to the group ID that owns the file.
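As a minimal sketch of how these IDs can be inspected, the helper below compares the real and effective IDs of the calling process. The name spc_has_extra_privileges( ) is our own invention for illustration and is not part of any standard API. It relies only on the observation above: without the setuid or setgid bit, the real and effective IDs start out equal.

```c
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: returns 1 if the process appears to be running with
 * extra privileges granted by a setuid or setgid bit (the effective ID differs
 * from the real ID), or 0 otherwise.
 */
int spc_has_extra_privileges(void) {
  return (getuid( ) != geteuid( ) || getgid( ) != getegid( ));
}
```

A program might call this once at startup to decide whether any privilege handling is needed at all. Note that it cannot detect a process started directly by the superuser, because the real and effective IDs are then both 0.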

For the most part, all privilege checks performed by the operating system are done using the effective user or effective group ID. The primary deviations from this rule are some of the system calls used to manipulate a process's user and group IDs. In general, the effective user or group ID for a process may be changed as long as the new ID is the same as either the real or the saved ID.

Taking all this into account, permanently dropping privileges involves ensuring that the effective, real, and saved IDs are all the same value. Temporarily dropping privileges requires that the effective and real IDs are the same value, and that the saved ID is unchanged so that the effective ID can later be restored to the higher privilege. These rules apply to both group and user IDs.
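The two rules above can be written down as simple predicates. The following is only a sketch: the spc_idset_t type and both function names are hypothetical, and the caller is assumed to have obtained the three IDs by some platform-specific means (for example, getresuid( ) where it is available, such as on Linux, FreeBSD, and OpenBSD).

```c
#include <sys/types.h>

/* Hypothetical container for one set of IDs. uid_t is used for illustration;
 * the same checks apply to group IDs.
 */
typedef struct {
  uid_t real;
  uid_t effective;
  uid_t saved;
} spc_idset_t;

/* Returns 1 if all three IDs match, meaning the extra privileges cannot be
 * regained, or 0 otherwise.
 */
int spc_drop_is_permanent(const spc_idset_t *ids) {
  return (ids->real == ids->effective && ids->effective == ids->saved);
}

/* Returns 1 if the effective ID matches the real ID while the saved ID still
 * holds a different, privileged ID that can be restored later.
 */
int spc_drop_is_temporary(const spc_idset_t *ids, uid_t privileged) {
  return (ids->real == ids->effective && ids->saved == privileged &&
          ids->saved != ids->real);
}
```

For example, a process with real, effective, and saved user IDs of 1000, 1000, and 0 has dropped root privileges only temporarily, whereas 1000, 1000, and 1000 indicates a permanent drop.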

One more issue needs to be addressed with regard to dropping privileges. In addition to the effective, real, and saved group IDs of a process, a process also has ancillary groups. Ancillary groups are inherited by a process from its parent process, and they can only be altered by a process with superuser privileges. Therefore, if a process with superuser privileges is dropping these privileges, it must also be sure to drop any ancillary groups it may have. This is achieved by calling setgroups( ) with a single group, which is the real group ID for the process. Because the setgroups( ) system call is guarded by requiring the effective user ID of the process to be that of the superuser, it must be done prior to dropping root privileges. Ancillary groups should be dropped regardless of whether privileges are being dropped permanently or temporarily. In the case of a temporary privilege drop, the process can restore the ancillary groups if necessary when elevated privileges are restored.
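The handling of ancillary groups can be sketched in isolation as follows. Both helper names are hypothetical. We assume that getgroups( ) called with a size of 0 returns the number of ancillary groups without storing anything, as POSIX specifies, and that setgroups( ) is declared in grp.h, as it is on Linux.

```c
#include <sys/types.h>
#include <stddef.h>
#include <grp.h>      /* declares setgroups( ) on Linux */
#include <unistd.h>

/* Hypothetical helper: returns the number of ancillary groups held by the
 * process, or -1 on error.
 */
int spc_count_ancillary_groups(void) {
  return getgroups(0, NULL);
}

/* Hypothetical helper: pares the ancillary groups down to the real group ID.
 * Only a process whose effective user ID is that of the superuser can do this,
 * so other processes are left untouched. Returns 0 on success or if there was
 * nothing to do, -1 if setgroups( ) failed.
 */
int spc_pare_ancillary_groups(void) {
  gid_t gid = getgid( );

  if (geteuid( ) != 0) return 0;
  return setgroups(1, &gid);
}
```

The second helper must be called while the effective user ID is still that of the superuser, that is, before any call that changes the user IDs.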

The first of two functions, spc_drop_privileges( ), drops any extra group or user privileges either permanently or temporarily, depending on the value of its only argument. If a nonzero value is passed, privileges will be dropped permanently; otherwise, the privilege drop is temporary. The second function, spc_restore_privileges( ), restores privileges to what they were at the last call to spc_drop_privileges( ). If either function encounters any problems in attempting to perform its respective task, abort( ) is called, terminating the process immediately. If any manipulation of privileges cannot complete successfully, it's safest to assume that the process is in an unknown state, and you should not allow it to continue.

Recalling our earlier discussion regarding subtle differences in the semantics for changing a process's group and user IDs, you'll notice that spc_drop_privileges( ) is littered with preprocessor conditionals that test for the platform on which the code is being compiled. For the BSD-derived platforms (Darwin, FreeBSD, NetBSD, and OpenBSD), dropping privileges involves a simple call to setegid( ) or seteuid( ), followed by a call to either setgid( ) or setuid( ) if privileges are being permanently dropped. The setgid( ) and setuid( ) system calls adjust the process's saved group and user IDs, respectively, as well as the real group or user ID.

On Linux and Solaris, the setgid( ) and setuid( ) system calls do not alter the process's saved group and user IDs in all cases. (In particular, if the effective ID is not that of the superuser, the saved ID is not altered; otherwise, it is.) That means that these calls can't reliably be used to permanently drop privileges. Instead, setregid( ) and setreuid( ) are used, which actually simplifies the process except that these two system calls have different semantics on the BSD-derived platforms.

Warning

As discussed above, always drop group privileges before dropping user privileges; otherwise, it may not be possible to drop group privileges fully.

#include <sys/param.h>

#include <sys/types.h>

#include <stdlib.h>

#include <unistd.h>

#include <grp.h> /* declares setgroups( ) on Linux */

static int orig_ngroups = -1;

static gid_t orig_gid = -1;

static uid_t orig_uid = -1;

static gid_t orig_groups[NGROUPS_MAX];

void spc_drop_privileges(int permanent) {

gid_t newgid = getgid( ), oldgid = getegid( );

uid_t newuid = getuid( ), olduid = geteuid( );

if (!permanent) {

/* Save information about the privileges that are being dropped so that they

* can be restored later.

*/

orig_gid = oldgid;

orig_uid = olduid;

orig_ngroups = getgroups(NGROUPS_MAX, orig_groups);

}

/* If root privileges are to be dropped, be sure to pare down the ancillary

* groups for the process before doing anything else because the setgroups( )

* system call requires root privileges. Drop ancillary groups regardless of

* whether privileges are being dropped temporarily or permanently.

*/

if (!olduid && setgroups(1, &newgid) == -1) abort( );

if (newgid != oldgid) {

#if !defined(linux)

setegid(newgid);

if (permanent && setgid(newgid) == -1) abort( );

#else

if (setregid((permanent ? newgid : -1), newgid) == -1) abort( );

#endif

}

if (newuid != olduid) {

#if !defined(linux)

seteuid(newuid);

if (permanent && setuid(newuid) == -1) abort( );

#else

if (setreuid((permanent ? newuid : -1), newuid) == -1) abort( );

#endif

}

/* verify that the changes were successful */

if (permanent) {

if (newgid != oldgid && (setegid(oldgid) != -1 || getegid( ) != newgid))

abort( );

if (newuid != olduid && (seteuid(olduid) != -1 || geteuid( ) != newuid))

abort( );

} else {

if (newgid != oldgid && getegid( ) != newgid) abort( );

if (newuid != olduid && geteuid( ) != newuid) abort( );

}

}

void spc_restore_privileges(void) {

if (geteuid( ) != orig_uid)

if (seteuid(orig_uid) == -1 || geteuid( ) != orig_uid) abort( );

if (getegid( ) != orig_gid)

if (setegid(orig_gid) == -1 || getegid( ) != orig_gid) abort( );

/* restoring the ancillary groups requires root; treat failure as fatal */

if (!orig_uid && setgroups(orig_ngroups, orig_groups) == -1) abort( );

}

See Also

"Setuid Demystified" by Hao Chen, David Wagner, and Drew Dean: http://www.cs.berkeley.edu/~daw/papers/setuid-usenix02.pdf

Recipe 2.1

[3] Linux further complicates the already complex privilege model by adding a filesystem user ID and a filesystem group ID, as well as POSIX capabilities. At this time, most systems do not actually make use of POSIX capabilities, and the filesystem IDs are primarily maintained automatically by the kernel. If the filesystem IDs are not explicitly modified by a process, they can be safely ignored, and they will behave properly. We won't discuss them any further here.

1.4. Limiting Risk with Privilege Separation

Problem

Your process runs with extra privileges granted by the setuid or setgid bits on the executable. Because it requires those privileges at various times throughout its lifetime, it can't permanently drop the extra privileges. You would like to limit the risk of those extra privileges being compromised in the event of an attack.

Solution

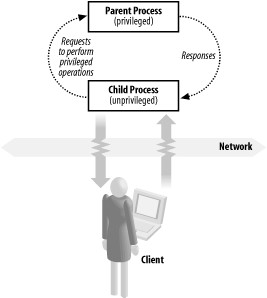

When your program first initializes, create a Unix domain socket pair using socketpair( ), which will create two endpoints of a connected unnamed socket. Fork the process using fork( ), drop the extra privileges in the child process, and keep them in the parent process. Establish communication between the parent and child processes. Whenever the child process needs to perform an operation that requires the extra privileges held by the parent process, defer the operation to the parent.

The result is that the child performs the bulk of the program's work. The parent retains the extra privileges and does nothing except communicate with the child and perform privileged operations on its behalf.
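The overall shape of this arrangement can be sketched as follows. This is an illustration only, not a complete implementation: the one-byte request and reply codes and both function names are hypothetical, and the sketch marks the privilege drop with a comment rather than performing it. A real program would permanently drop privileges in the child at that point, using code such as that in Recipe 1.3.

```c
#include <sys/types.h>
#include <sys/socket.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical one-byte protocol between the child and the parent. */
#define SPC_REQ_PING     'P'
#define SPC_REPLY_OK     'K'
#define SPC_REPLY_DENIED 'D'

/* Runs in the parent, which keeps the extra privileges. It returns when the
 * child closes its end of the socket pair.
 */
static void spc_serve_requests(int fd) {
  char req, reply;

  while (read(fd, &req, 1) == 1) {
    /* Validate every request; the child must not be trusted. */
    reply = (req == SPC_REQ_PING) ? SPC_REPLY_OK : SPC_REPLY_DENIED;
    if (write(fd, &reply, 1) != 1) break;
  }
}

/* Forks a child that sends one request to the parent. Returns the reply byte
 * that the child received (passed back as its exit status), or -1 on error.
 */
int spc_privsep_demo(char request) {
  int   sv[2], status;
  char  reply = 0;
  pid_t pid;

  if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) == -1) return -1;
  if ((pid = fork( )) == -1) {
    close(sv[0]);
    close(sv[1]);
    return -1;
  }

  if (!pid) {
    /* Child: does the bulk of the program's work without extra privileges. */
    close(sv[0]);
    /* A real program would permanently drop privileges here. */
    if (write(sv[1], &request, 1) != 1 || read(sv[1], &reply, 1) != 1)
      _exit(255);
    _exit((unsigned char)reply);
  }

  /* Parent: does nothing except service the child's requests. */
  close(sv[1]);
  spc_serve_requests(sv[0]);
  close(sv[0]);
  if (waitpid(pid, &status, 0) == -1 || !WIFEXITED(status)) return -1;
  return WEXITSTATUS(status);
}
```

The important design point is that the parent decides what it is willing to do for each request. The child can ask for anything, but an attacker who compromises the child gains only the operations that the parent's request handler permits.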

If the privileged process opens files on behalf of the unprivileged process, you will need to use a Unix domain socket, as opposed to an anonymous pipe or some other interprocess communication mechanism. The reason is that only Unix domain sockets provide a means by which file descriptors can be exchanged between the processes after the initial fork( ).
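Descriptor passing over a Unix domain socket is done with sendmsg( ) and recvmsg( ) using an SCM_RIGHTS control message. The following is a minimal sketch of that mechanism; the function names spc_send_fd( ) and spc_recv_fd( ) are hypothetical, and the sketch assumes a platform on which CMSG_SPACE( ) can be used to size an array, as is the case on Linux.

```c
#include <sys/types.h>
#include <sys/socket.h>
#include <sys/uio.h>
#include <string.h>

/* Hypothetical helper: sends the descriptor fd over the Unix domain socket
 * sock along with one byte of ordinary data. Returns 0 on success, -1 on
 * failure.
 */
int spc_send_fd(int sock, int fd) {
  char           byte = 0;
  struct iovec   iov;
  struct msghdr  msg;
  struct cmsghdr *cmsg;
  union {
    struct cmsghdr align;   /* forces correct alignment of the buffer */
    char           buf[CMSG_SPACE(sizeof(int))];
  } ctl;

  memset(&msg, 0, sizeof(msg));
  memset(&ctl, 0, sizeof(ctl));
  iov.iov_base = &byte;
  iov.iov_len = 1;
  msg.msg_iov = &iov;
  msg.msg_iovlen = 1;
  msg.msg_control = ctl.buf;
  msg.msg_controllen = sizeof(ctl.buf);

  cmsg = CMSG_FIRSTHDR(&msg);
  cmsg->cmsg_level = SOL_SOCKET;
  cmsg->cmsg_type = SCM_RIGHTS;
  cmsg->cmsg_len = CMSG_LEN(sizeof(int));
  memcpy(CMSG_DATA(cmsg), &fd, sizeof(int));

  return (sendmsg(sock, &msg, 0) == 1) ? 0 : -1;
}

/* Hypothetical helper: receives a descriptor sent by spc_send_fd( ). Returns
 * the new descriptor on success, -1 on failure.
 */
int spc_recv_fd(int sock) {
  int            fd;
  char           byte;
  struct iovec   iov;
  struct msghdr  msg;
  struct cmsghdr *cmsg;
  union {
    struct cmsghdr align;
    char           buf[CMSG_SPACE(sizeof(int))];
  } ctl;

  memset(&msg, 0, sizeof(msg));
  memset(&ctl, 0, sizeof(ctl));
  iov.iov_base = &byte;
  iov.iov_len = 1;
  msg.msg_iov = &iov;
  msg.msg_iovlen = 1;
  msg.msg_control = ctl.buf;
  msg.msg_controllen = sizeof(ctl.buf);

  if (recvmsg(sock, &msg, 0) != 1) return -1;
  cmsg = CMSG_FIRSTHDR(&msg);
  if (!cmsg || cmsg->cmsg_level != SOL_SOCKET ||
      cmsg->cmsg_type != SCM_RIGHTS || cmsg->cmsg_len != CMSG_LEN(sizeof(int)))
    return -1;
  memcpy(&fd, CMSG_DATA(cmsg), sizeof(int));
  return fd;
}
```

In a privilege-separated program, the parent would open the file that requires extra privileges and pass the resulting descriptor to the child with spc_send_fd( ). The descriptor that the child receives refers to the same open file, but it generally has a different number than it had in the parent.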

Discussion

In Recipe 1.3, we discussed setuid, setgid, and the importance of permanently dropping the extra privileges resulting from their use as quickly as possible to minimize the window of vulnerability to a privilege escalation attack. In many cases, the extra privileges are necessary for performing some initialization or other small amount of work, such as binding a socket to a privileged port. In other cases, however, the work requiring extra privileges cannot always be restricted to the beginning of the program, thus requiring that the extra privileges be dropped only temporarily so that they can later be restored when they're needed. Unfortunately, this means that an attacker who compromises the program can also restore those privileges.

Privilege separation