That Used to Be Us: How America Fell Behind in the World It Invented and How We Can Come Back

Table of Contents

Title Page

Preface: Growing Up in America

PART I - THE DIAGNOSIS

PART II - THE EDUCATION CHALLENGE

PART III - THE WAR ON MATH AND PHYSICS

It makes no sense for China to have better rail systems than us, and Singapore having better airports than us. And we just learned that China now has the fastest supercomputer on Earth—that used to be us.

—President Barack Obama, November 3, 2010

A reader might ask why two people who have devoted their careers to writing about foreign affairs—one of us as a foreign correspondent and columnist at The New York Times and the other as a professor of American foreign policy at The Johns Hopkins University School of Advanced International Studies—have collaborated on a book about the American condition today. The answer is simple. We have been friends for more than twenty years, and in that time hardly a week has gone by without our discussing some aspect of international relations and American foreign policy. But in the last couple of years, we started to notice something: Every conversation would begin with foreign policy but end with domestic policy—what was happening, or not happening, in the United States. Try as we might to redirect them, the conversations kept coming back to America and our seeming inability today to rise to our greatest challenges.

This situation, of course, has enormous foreign policy implications. America plays a huge and, more often than not, constructive role in the world today. But that role depends on the country’s social, political, and economic health. And America today is not healthy—economically or politically. This book is our effort to explain how we got into that state and how we get out of it.

We beg the reader’s indulgence with one style issue. At times, we include stories, anecdotes, and interviews that involve only one of us. To make clear who is involved, we must, in effect, quote ourselves: “As Tom recalled …” “As Michael wrote …” You can’t simply say “I said” or “I saw” when you have a co-authored book with a lot of reporting in it.

Readers familiar with our work know us mainly as authors and commentators, but we are also both, well, Americans. That is important, because that identity drives the book as much as our policy interests do. So here are just a few words of introduction from each of us—not as experts but as citizens.

Tom: I was born in Minneapolis, Minnesota, and was raised in a small suburb called St. Louis Park—made famous by the brothers Ethan and Joel Coen in their movie A Serious Man, which was set in our neighborhood. Senator Al Franken, the Coen brothers, the Harvard political philosopher Michael J. Sandel, the political scientist Norman Ornstein, the longtime NFL football coach Marc Trestman, and I all grew up in and around that little suburb within a few years of one another, and it surely had a big impact on all of us. In my case, it bred a deep optimism about America and the notion that we really can act collectively for the common good.

In 1971, the year I graduated from high school, Time magazine had a cover featuring then Minnesota governor Wendell Anderson holding up a fish he had just caught, under the headline “The Good Life in Minnesota.” It was all about “the state that works.” When the senators from your childhood were the Democrats Hubert Humphrey, Walter Mondale, and Eugene McCarthy, your congressmen were the moderate Republicans Clark MacGregor and Bill Frenzel, and the leading corporations in your state—Dayton’s, Target, General Mills, and 3M—were pioneers in corporate social responsibility and believed that it was part of their mission to help build things like the Tyrone Guthrie Theater, you wound up with a deep conviction that politics really can work and that there is a viable political center in American life.

I attended public school with the same group of kids from K through 12. In those days in Minnesota, private schools were for kids in trouble. Private school was pretty much unheard of for middle-class St. Louis Park kids, and pretty much everyone was middle-class. My mom enlisted in the U.S. Navy in World War II, and my parents actually bought our home thanks to the loan she got through the GI Bill. My dad, who never went to college, was vice president of a company that sold ball bearings. My wife, Ann Bucksbaum, was born in Marshalltown, Iowa, and was raised in Des Moines. To this day, my best friends are still those kids I grew up with in St. Louis Park, and I still carry around a mental image—no doubt idealized—of Minnesota that anchors and informs a lot of my political choices. No matter where I go—London, Beirut, Jerusalem, Washington, Beijing, or Bangalore—I’m always looking to rediscover that land of ten thousand lakes where politics actually worked to make people’s lives better, not pull them apart. That used to be us. In fact, it used to be my neighborhood.

Michael: While Tom and his wife come from the middle of the country, my wife, Anne Mandelbaum, and I grew up on the two coasts—she in Manhattan and I in Berkeley, California. My father was a professor of anthropology at the University of California, and my mother, after my two siblings and I reached high school age, became a public school teacher and then joined the education faculty at the university that we called, simply, Cal.

Although Berkeley has a reputation for political radicalism, during my childhood in the 1950s it had more in common with Tom’s Minneapolis than with the Berkeley the world has come to know. It was more a slice of Middle America than a hotbed of revolution. As amazing as it may seem today, for part of my boyhood it had a Republican mayor and was represented by a Republican congressman.

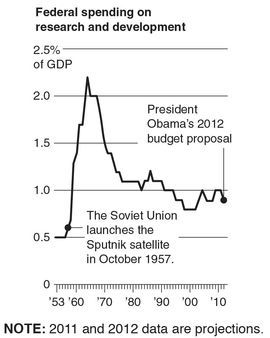

One episode from those years is particularly relevant to this book. It occurred in the wake of the Soviet Union’s 1957 launching of Sputnik, the first Earth-orbiting satellite. The event was a shock to the United States, and the shock waves reached Garfield Junior High School (since renamed after Martin Luther King Jr.), where I was in seventh grade. The entire student body was summoned to an assembly at which the principal solemnly informed us that in the future we all would have to study harder, and that mathematics and science would be crucial.

Given my parents’ commitment to education, I did not need to be told that school and studying were important. But I was impressed by the gravity of the moment. I understood that the United States faced a national challenge and that everyone would have to contribute to meeting it. I did not doubt that America, and Americans, would meet it. There is no going back to the 1950s, and there are many reasons to be glad that that is so, but the kind of seriousness the country was capable of then is just as necessary now.

We now live and work in the nation’s capital, where we have seen firsthand the government’s failure to come to terms with the major challenges the country faces. But although this book’s perspective on the present is gloomy, its hopes and expectations for the future are high. We know that America can meet its challenges. After all, that’s the America where we grew up.

Thomas L. Friedman

Michael Mandelbaum

Bethesda, Maryland, June 2011

If You See Something, Say Something

This is a book about America that begins in China.

In September 2010, Tom attended the World Economic Forum’s summer conference in Tianjin, China. Five years earlier, getting to Tianjin had involved a three-and-a-half-hour car ride from Beijing to a polluted, crowded Chinese version of Detroit, but things had changed. Now, to get to Tianjin, you head to the Beijing South Railway Station—an ultramodern flying saucer of a building with glass walls and an oval roof covered with 3,246 solar panels—buy a ticket from an electronic kiosk offering choices in Chinese and English, and board a world-class high-speed train that goes right to another roomy, modern train station in downtown Tianjin. Said to be the fastest in the world when it began operating in 2008, the Chinese bullet train covers 115 kilometers, or 72 miles, in a mere twenty-nine minutes.

The conference itself took place at the Tianjin Meijiang Convention and Exhibition Center—a massive, beautifully appointed structure, the like of which exists in few American cities. As if the convention center wasn’t impressive enough, the conference’s co-sponsors in Tianjin gave some facts and figures about it (www.tj-summerdavos.cn). They noted that it contained a total floor area of 230,000 square meters (almost 2.5 million square feet) and that “construction of the Meijiang Convention Center started on September 15, 2009, and was completed in May, 2010.” Reading that line, Tom started counting on his fingers: Let’s see—September, October, November, December, January …

Eight months.

Returning home to Maryland from that trip, Tom was describing the Tianjin complex and how quickly it was built to Michael and his wife, Anne. At one point Anne asked: “Excuse me, Tom. Have you been to our subway stop lately?” We all live in Bethesda and often use the Washington Metrorail subway to get to work in downtown Washington, D.C. Tom had just been at the Bethesda station and knew exactly what Anne was talking about: The two short escalators had been under repair for nearly six months. While the one being fixed was closed, the other had to be shut off and converted into a two-way staircase. At rush hour, this was creating a huge mess. Everyone trying to get on or off the platform had to squeeze single file up and down one frozen escalator. It sometimes took ten minutes just to get out of the station. A sign on the closed escalator said that its repairs were part of a massive escalator “modernization” project.

What was taking this “modernization” project so long? We investigated. Cathy Asato, a spokeswoman for the Washington Metropolitan Area Transit Authority, had told the Maryland Community News (October 20, 2010) that “the repairs were scheduled to take about six months and are on schedule. Mechanics need 10 to 12 weeks to fix each escalator.”

A simple comparison made a startling point: It took China’s Teda Construction Group thirty-two weeks to build a world-class convention center from the ground up—including giant escalators in every corner—and it was taking the Washington Metro crew twenty-four weeks to repair two tiny escalators of twenty-one steps each. We searched a little further and found that WTOP, a local news radio station, had interviewed the Metro interim general manager, Richard Sarles, on July 20, 2010. Sure, these escalators are old, he said, but “they have not been kept in a state of good repair. We’re behind the curve on that, so we have to catch up … Just last week, smoke began pouring out of the escalators at the Dupont Circle station during rush hour.”

On November 14, 2010, The Washington Post ran a letter to the editor from Mark Thompson of Kensington, Maryland, who wrote:

I have noted with interest your reporting on the $225,000 study that Metro hired Vertical Transportation Excellence to conduct into the sorry state of the system’s escalators and elevators … I am sure that the study has merit. But as someone who has ridden Metro for more than 30 years, I can think of an easier way to assess the health of the escalators. For decades they ran silently and efficiently. But over the past several years—when the escalators are running—aging or ill-fitting parts have generated horrific noises that sound to me like a Tyrannosaurus Rex trapped in a tar pit screeching its dying screams.

The quote we found most disturbing, though, came from a Maryland Community News story about the long lines at rush hour caused by the seemingly endless Metro repairs: “‘My impression, standing on line there, is people have sort of gotten used to it,’ said Benjamin Ross, who lives in Bethesda and commutes every day from the downtown station.”

People have sort of gotten used to it. Indeed, that sense of resignation, that sense that, well, this is just how things are in America today, that sense that America’s best days are behind it and China’s best days are ahead of it, have become the subject of watercooler, dinner-party, grocery-line, and classroom conversations all across America today. We hear the doubts from children, who haven’t been to China. Tom took part in the September 2010 Council of Educational Facility Planners International (CEFPI) meeting in San Jose, California. As part of the program, there was a “School of the Future Design Competition,” which called for junior high school students to design their own ideal green school. He met with the finalists on the last morning of the convention, and they talked about global trends. At one point, Tom asked them what they thought about China. A young blond-haired junior high school student, Isabelle Foster, from Old Lyme Middle School in Connecticut, remarked, “It seems like they have more ambition and will than we do.” Tom asked her, “Where did you get that thought?” She couldn’t really explain it, she said. She had never visited China. But it was just how she felt. It’s in the air.

We heard the doubts about America from Pennsylvania governor Ed Rendell, in his angry reaction after the National Football League postponed for two days a game scheduled in Philadelphia between the Philadelphia Eagles and the Minnesota Vikings—because of a severe snowstorm. The NFL ordered the game postponed because it didn’t want fans driving on icy, snow-covered roads. But Rendell saw it as an indicator of something more troubling—that Americans had gone soft. “It goes against everything that football is all about,” Rendell said in an interview with the sports radio station 97.5 The Fanatic in Philadelphia (December 27, 2010). “We’ve become a nation of wusses. The Chinese are kicking our butt in everything. If this was in China, do you think the Chinese would have called off the game? People would have been marching down to the stadium, they would have walked, and they would have been doing calculus on the way down.”

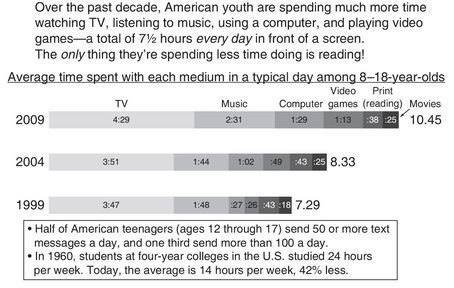

We read the doubts in letters to the editor, such as this impassioned post by Eric R. on The New York Times comments page under a column Tom wrote about China (December 1, 2010):

We are nearly complete in our evolution from Lewis and Clark into Elmer Fudd and Yosemite Sam. We used to embrace challenges, endure privation, throttle our fear and strike out into the (unknown) wilderness. In this mode we rallied to span the continent with railroads, construct a national highway system, defeated monstrous dictators, cured polio and landed men on the moon. Now we text and put on makeup as we drive, spend more on video games than books, forswear exercise, demonize hunting, and are rapidly succumbing to obesity and diabetes. So much for the pioneering spirit that made us (once) the greatest nation on earth, one that others looked up to and called “exceptional.”

Sometimes the doubts hit us where we least expect them. A few weeks after returning from China, Tom went to the White House to conduct an interview. He passed through the Secret Service checkpoint on Pennsylvania Avenue, and after putting his bags through the X-ray machine and collecting them, he grabbed the metal door handle to enter the White House driveway. The handle came off in his hand. “Oh, it does that sometimes,” the Secret Service agent at the door said nonchalantly, as Tom tried to fit the wobbly handle back into the socket.

And often now we hear those doubts from visitors here—as when a neighbor in Bethesda mentions that over the years he has hired several young women from Germany to help with his child care, and they always remark on two things: how many squirrels there are in Washington, and how rutted the streets are. They just can’t believe that America’s capital would have such potholed streets.

So, do we buy the idea, increasingly popular in some circles, that Britain owned the nineteenth century, America dominated the twentieth century, and China will inevitably reign supreme in the twenty-first century—and that all you have to do is fly from Tianjin or Shanghai to Washington, D.C., and take the subway to know that?

No, we do not. And we have written this book to explain why no American, young or old, should resign himself or herself to that view either. The two of us are not pessimists when it comes to America and its future. We are optimists, but we are also frustrated. We are frustrated optimists. In our view, the two attitudes go together. We are optimists because American society, with its freewheeling spirit, its diversity of opinions and talents, its flexible economy, its work ethic and penchant for innovation, is in fact ideally suited to thrive in the tremendously challenging world we are living in. We are optimists because the American political and economic systems, when functioning properly, can harness the nation’s talents and energy to meet the challenges the country faces. We are optimists because Americans have plenty of experience in doing big, hard things together. And we are optimists because our track record of national achievement gives ample grounds for believing we can overcome our present difficulties.

But that’s also why we’re frustrated. Optimism or pessimism about America’s future cannot simply be a function of our capacity to do great things or our history of having done great things. It also has to be a function of our will actually to do those things again. So many Americans are doing great things today, but on a small scale. Philanthropy, volunteerism, individual initiative: they’re all impressive, but what the country needs most is collective action on a large scale.

We cannot be pessimists about America when we know that it is home to so many creative, talented, hardworking people, but we cannot help but be frustrated when we discover how many of those people feel that our country is not educating the workforce they need, or admitting the energetic immigrants they seek, or investing in the infrastructure they require, or funding the research they envision, or putting in place the intelligent tax laws and incentives that our competitors have installed.

Hence the title of this opening chapter: “If you see something, say something.” That is the mantra that the Department of Homeland Security plays over and over on loudspeakers in airports and railroad stations around the country. Well, we have seen and heard something, and millions of Americans have, too. What we’ve seen is not a suspicious package left under a stairwell. What we’ve seen is hiding in plain sight. We’ve seen something that poses a greater threat to our national security and well-being than al-Qaeda does. We’ve seen a country with enormous potential falling into disrepair, political disarray, and palpable discomfort about its present condition and future prospects.

This book is our way of saying something—about what is wrong, why things have gone wrong, and what we can and must do to make them right.

Why say it now, though, and why the urgency?

“Why now?” is easy to answer: because our country is in a slow decline, just slow enough for us to be able to pretend—or believe—that a decline is not taking place. As the ever-optimistic Timothy Shriver, chairman of the Special Olympics, son of Peace Corps founder Sargent Shriver, and nephew of President John F. Kennedy, responded when we told him about our book: “It’s as though we just slip a little each year and shrug it off to circumstances beyond our control—an economic downturn here, a social problem there, the political mess this year. We’re losing a step a day and no one’s saying, Stop!” No doubt, Shriver added, most Americans “would still love to be the country of great ideals and achievements, but no one seems willing to pay the price.” Or, as Jeffrey Immelt, the CEO of General Electric, put it to us: “What we lack in the U.S. today is the confidence that is generated by solving one big, hard problem—together.” It has been a long time now since we did something big and hard together.

We will argue that this slow-motion decline has four broad causes. First, since the end of the Cold War, we, and especially our political leaders, have stopped starting each day by asking the two questions that are crucial for determining public policy: What world are we living in, and what exactly do we need to do to thrive in this world? The U.S. Air Force has a strategic doctrine originally designed by one of its officers, John Boyd, called the OODA loop. It stands for “observe, orient, decide, act.” Boyd argued that when you are a fighter pilot, if your OODA loop is faster than the other guy’s, you will always win the dogfight. Today, America’s OODA loop is far too slow and often discombobulated. In American political discourse today, there is far too little observing, orienting, deciding, and acting and far too much shouting, asserting, dividing, and postponing. When the world gets really fast, the speed with which a country can effectively observe, orient, decide, and act matters more than ever.

Second, over the last twenty years, we as a country have failed to address some of our biggest problems—particularly education, deficits and debt, and energy and climate change—and now they have all worsened to a point where they cannot be ignored but they also cannot be effectively addressed without collective action and collective sacrifice. Third, to make matters worse, we have stopped investing in our country’s traditional formula for greatness, a formula that goes back to the founding of the country. Fourth, as we will explain, we have not been able to fix our problems or reinvest in our strengths because our political system has become paralyzed and our system of values has suffered serious erosion. But finally, being optimists, we will offer our own strategy for overcoming these problems.

“Why the urgency?” is also easy to answer. In part the urgency stems from the fact that as a country we do not have the resources or the time to waste that we had twenty years ago, when our budget deficit was under control and all of our biggest challenges seemed at least manageable. In the last decade especially, we have spent so much of our time and energy—and the next generation’s money—fighting terrorism and indulging ourselves with tax cuts and cheap credit that we now have no reserves. We are driving now without a bumper, without a spare tire, and with the gas gauge nearing empty. Should the market or Mother Nature make a sudden disruptive move in the wrong direction, we would not have the resources to shield ourselves from the worst effects, as we had in the past. Winston Churchill was fond of saying that “America will always do the right thing, but only after exhausting all other options.” America simply doesn’t have time anymore for exhausting any options other than the right ones.

Our sense of urgency also derives from the fact that our political system is not properly framing, let alone addressing, our ultimate challenge. Our goal should not be merely to solve America’s debt and deficit problems. That is far too narrow. Coping with these problems is important—indeed necessary and urgent—but it is only a means to an end. The goal is for America to remain a great country. This means that while reducing our deficits, we must also invest in education, infrastructure, and research and development, as well as open our society more widely to talented immigrants and fix the regulations that govern our economy. Immigration, education, and sensible regulation are traditional ingredients of the American formula for greatness. They are more vital than ever if we hope to realize the full potential of the American people in the coming decades, to generate the resources to sustain our prosperity, and to remain the global leader that we have been and that the world needs us to be. We, the authors of this book, don’t want simply to restore American solvency. We want to maintain American greatness. We are not green-eyeshade guys. We’re Fourth of July guys.

And to maintain American greatness, the right option for us is not to become more like China. It is to become more like ourselves. Certainly, China has made extraordinary strides in lifting tens of millions of its people out of poverty and in modernizing its infrastructure—from convention centers, to highways, to airports, to housing. China’s relentless focus on economic development and its willingness to search the world for the best practices, experiment with them, and then scale those that work is truly impressive.

But the Chinese still suffer from large and potentially debilitating problems: a lack of freedom, rampant corruption, horrible pollution, and an education system that historically has stifled creativity. China does not have better political or economic systems than the United States. In order to sustain its remarkable economic progress, we believe, China will ultimately have to adopt more features of the American system, particularly the political and economic liberty that are fundamental to our success. China cannot go on relying heavily on its ability to mobilize cheap labor and cheap capital and on copying and assembling the innovations of others.

Still, right now, we believe that China is getting 90 percent of the potential benefits from its second-rate political system. It is getting the most out of its authoritarianism. But here is the shortcoming that Americans should be focused on: We are getting only 50 percent of the potential benefits from our first-rate system. We are getting so much less than we can, should, and must get out of our democracy.

In short, our biggest problem is not that we’re failing to keep up with China’s best practices but that we’ve strayed so far from our own best practices. America’s future depends not on our adopting features of the Chinese system, but on our making our own democratic system work with the kind of focus, moral authority, seriousness, collective action, and stick-to-itiveness that China has managed to generate by authoritarian means for the last several decades.

In our view, all of the comparisons between China and the United States that you hear around American watercoolers these days aren’t about China at all. They are about us. China is just a mirror. We’re really talking about ourselves and our own loss of self-confidence. We see in the Chinese some character traits that we once had—that once defined us as a nation—but that we seem to have lost.

Orville Schell heads up the Asia Society’s Center on U.S.-China Relations in New York City. He is one of America’s most experienced China-watchers. He also attended the Tianjin conference, and one afternoon, after a particularly powerful presentation there about China’s latest economic leap forward, Tom asked Schell why he thought China’s rise has come to unnerve and obsess Americans.

“Because we have recently begun to find ourselves so unable to get things done, we tend to look with a certain over-idealistic yearning when it comes to China,” Schell answered. “We see what they have done and project onto them something we miss, fearfully miss, in ourselves”—that “can-do, get-it-done, everyone-pull-together, whatever-it-takes” attitude that built our highways and dams and put a man on the moon. “These were hallmarks of our childhood culture,” said Schell. “But now we view our country turning into the opposite, even as we see China becoming animated by these same kinds of energies … China desperately wants to prove itself to the world, while at the same time America seems to be losing its hunger to demonstrate its excellence.” The Chinese are motivated, Schell continued, by a “deep yearning to restore China to greatness, and, sadly, one all too often feels that we are losing that very motor force in America.”

The two of us do feel that, but we do not advocate policies and practices to sustain American greatness out of arrogance or a spirit of chauvinism. We do it out of a love for our country and a powerful belief in what a force for good America can be—for its own citizens and for the world—at its best. We are well aware of America’s imperfections, past and present. We know that every week in America a politician takes a bribe; someone gets convicted of a crime he or she did not commit; public money gets wasted that should have gone for a new bridge, a new school, or pathbreaking research; many young people drop out of school; young women get pregnant without committed fathers; and people unfairly lose their jobs or their houses. The cynic says, “Look at the gap between our ideals and our reality. Any talk of American greatness is a lie.” The partisan says, “Ignore the gap. We’re still ‘exceptional.’” Our view is that the gaps do matter, and this book will have a lot to say about them. But America is not defined by its gaps. Our greatness as a country—what truly defines us—is and always has been our never-ending effort to close these gaps, our constant struggle to form a more perfect union. The gaps simply show us the work we still have to do.

To repeat: Our problem is not China, and our solution is not China. Our problem is us—what we are doing and not doing, how our political system is functioning and not functioning, which values we are and are not living by. And our solution is us—the people, the society, and the government that we used to be, and can be again. That is why this book is meant as both a wake-up call and a pep talk—unstinting in its critique of where we are and unwavering in its optimism about what we can achieve if we act together.

Ignoring Our Problems

It is not the strongest of the species that survives, nor the most intelligent that survives. It is the one that is the most adaptable to change.

—Evolutionary theory

We are going to do a terrible thing to you. We are going to deprive you of an enemy.

—Georgi Arbatov, Soviet expert on the United States, speaking at the end of the Cold War

It all seems so obvious now, but on the historic day when the Berlin Wall was cracked open—November 9, 1989—no one would have guessed that America was about to make the most dangerous mistake a country can make: We were about to misread our environment. We should have remembered Oscar Wilde’s admonition: “In this world there are only two tragedies. One is not getting what one wants, and the other is getting it.” America was about to experience the second tragedy. We had achieved a long-sought goal: the end of the Cold War on Western terms. But that very achievement ushered in a new world, with unprecedented challenges to the United States. No one warned us—neither Oscar Wilde nor someone like the statesman who had done precisely that for America four decades earlier: George Kennan.

On the evening of February 22, 1946, Kennan, then the forty-two-year-old deputy chief of mission at the U.S. embassy in Moscow, dispatched an 8,000-word cable to the State Department in Washington. The “Long Telegram,” as it was later known, became the most famous diplomatic communication in the history of the United States. A condensed version, which ran under the byline “X” in Foreign Affairs the next year, became perhaps the most influential journal article in American history.

Kennan’s cable earned its renown because it served as the charter for American foreign policy during the Cold War. It called for the “containment” of the military power of the Soviet Union and political resistance to its communist ideology. It led to the Marshall Plan for aid to war-torn Europe; to NATO—the first peacetime military alliance in American history—and the stationing of an American army in Europe; to America’s wars in Korea and Vietnam; to the nuclear arms race; to a dangerous brush with nuclear war over Cuba; and to a political rivalry waged in every corner of the world through military assistance, espionage, public relations, and economic aid.

The Cold War came to an end with the overthrow of the communist regimes of Eastern Europe in 1989 and the collapse of the Soviet Union in 1991. But the broad message of the Long Telegram is one we need to hear today: “Wake up! Pay attention! The world you are living in has fundamentally changed. It is not the world you think it is. You need to adapt, because the health, security, and future of the country depend upon it.”

It is hard to realize today what a shock that message was to many Americans. The world Kennan’s cable described was not the one in which most Americans believed they were living or wanted to live. Most of them assumed that, with the end of World War II, the United States could look forward to good relations with its wartime Soviet ally and the end of the kind of huge national exertion that winning the war had required. The message of the Long Telegram was that both of these happy assumptions were wrong. The nation’s leaders eventually accepted Kennan’s analysis and adopted his prescription. Before long the American people knew they had to be vigilant, creative, and united. They knew they had to foster economic growth, technological innovation, and social mobility in order to avoid losing the global geopolitical competition with their great rival. The Cold War had its ugly excesses and its fiascos—Vietnam and the Bay of Pigs, for example—but it also set certain limits on American politics and society. We just had to look across at the Iron Curtain and the evil empire behind it—or take part in one of those nuclear bomb drills in the basements of our elementary schools—to know that we were living in a world defined by the struggle for supremacy between two nuclear-armed superpowers. That fact shaped both the content of our politics and the prevailing attitude of our leaders and citizens, which was one of constant vigilance. We didn’t always read the world correctly, but we paid close attention to every major trend beyond our borders.

Americans had just seen totalitarian powers conquer large swaths of the world, threatening free societies with a return to the Dark Ages. The nation had had to sacrifice mightily to reverse these conquests. The Cold War that followed imposed its own special form of discipline. If we flinched, we risked being overwhelmed by communism; if we became trigger-happy, we risked a nuclear war. For all these reasons, it was a serious, sober time.

Then that wall in Berlin came down. And like flowers in spring, up sprouted a garden full of rosy American assumptions about the future. Is it any wonder? The outcome of the global conflict eliminated what had loomed for two generations as by far the most menacing challenge the country had faced: the economic, political, and military threat from the Soviet Union and international communism. Though no formal ceremony of surrender took place and there was no joyous ticker-tape parade for returning servicemen and women as after World War II, it felt like a huge military victory for the United States and its allies. In some ways, it was. Like Germany after the two world wars of the twentieth century, the losing power, the Soviet Union, gave up territory and changed its form of government to bring it in line with the governments of the victors. So, watching on CNN as people in the formerly communist states toppled statues of Lenin, it was natural for us to relax, to be less serious, and to assume that the need for urgent and sustained collective action had passed.

We could have used another Long Telegram. While the end of the Cold War was certainly a victory, it also presented us with a huge new challenge. But at the time we just didn’t see it.

By helping to destroy communism, we helped open the way for two billion more people to live like us: two billion more people with their own versions of the American dream, two billion more people practicing capitalism, two billion more people with half a century of pent-up aspirations to live like Americans and work like Americans and drive like Americans and consume like Americans. The rest of the world looked at the victors in the Cold War and said, “We want to live the way they do.” In this sense, the world we are now living in is a world that we invented.

The end of communism dramatically accelerated the process of globalization, which removed many of the barriers to economic competition. Globalization would turn out to be a blessing for international stability and global growth. But it enabled so many more of those “new Americans” to compete for capital and jobs with the Americans living in America. In economic terms, this meant that Americans had to run even faster—that is, work harder—just to stay in place. At the end of the Cold War, America resembled a cross-country runner who had won his national championship year after year, but this time the judge handed him the trophy and said, “Congratulations. You will never compete in our national championship again. From now on you will have to race in the Olympics, against the best in the world—every day, forever.”

We didn’t fully grasp what was happening, so we did not respond appropriately. Over time we relaxed, underinvested, and lived in the moment just when we needed to study harder, save more, rebuild our infrastructure, and make our country more open and attractive to foreign talent. Losing one’s primary competitor can be problematic. What would the New York Yankees be without the Boston Red Sox, or Alabama without Auburn? When the West won the Cold War, America lost the rival that had kept us sharp, outwardly focused, and serious about nation-building at home—because offering a successful alternative to communism for the whole world to see was crucial to our Cold War strategy.

In coastal China, India, and Brazil, meanwhile, the economic barriers had begun coming down a decade earlier. The Chinese were not like citizens of the old Soviet Union, where, as the saying went, the people pretended to work and the government pretended to pay them. No, they were like us. They had a powerful work ethic and huge pent-up aspirations for prosperity—like a champagne bottle that had been shaken for fifty years and now was about to have its cork removed. You didn’t want to be in the way of that cork. Moreover, in parallel with the end of the Cold War, technology was flattening the global economic playing field, reducing the advantages of the people in developed countries such as the United States, while empowering those in the developing ones. The pace of global change accelerated to a speed faster than any we had seen before. It took us Americans some time to appreciate that while many of our new competitors were low-wage, low-skilled workers, for the first time a growing number, particularly those in Asia, were low-wage, high-skilled workers. We knew all about cheap labor, but we had never had to deal with cheap genius—at scale. Our historical reference point had always been Europe. The failure to understand that we were living in a new world and to adapt to it was a colossal and costly American mistake.

To be sure, the two decades following the Cold War were an extraordinarily productive period for some Americans and some sectors of the American economy. This was the era of the revolution in information technology, which began in the United States and spread around the world. It made some Americans wealthy and gave all Americans greater access to information, entertainment, and one another—and to the rest of the world as well—than ever before. It really was revolutionary. But it posed a formidable challenge to Americans and contributed to our failure as a country to cope effectively with its consequences. That failure had its roots in what we can now see as American overconfidence.

“It was a totally lethal combination of cockiness and complacency,” Secretary of Education Arne Duncan told us. “We were the king of the world. But we lost our way. We rested on our laurels … we kept telling ourselves all about what we did yesterday and living in the past. We have been slumbering and living off our reputation. We are like the forty-year-old who keeps talking about what a great high school football player he was.” It is this dangerous complacency that produced the potholes, loose door handles, and protracted escalator outages of twenty-first-century America. Unfortunately, America’s difficulties with infrastructure are the least of our problems.

And that brings us to the core argument of this book. The end of the Cold War, in fact, ushered in a new era that poses four major challenges for America. These are: how to adapt to globalization, how to adjust to the information technology (IT) revolution, how to cope with the large and soaring budget deficits stemming from the growing demands on government at every level, and how to manage a world of both rising energy consumption and rising climate threats. These four challenges, and how we meet them, will define America’s future.

The essence of globalization is the free movement of people, goods, services, and capital across national borders. It expanded dramatically because of the remarkable economic success of the free-market economies of the West, states that traded and invested heavily among themselves. Other countries, observing this success, decided to follow the Western pattern. China, other countries in East and Southeast Asia, India, Latin America, and formerly communist Europe all entered the globalized economy. Americans did not fully grasp the implications of globalization becoming—if we can put it this way—even more global, in part because we thought we had seen it all before.

All the talk about China is likely to give any American over the age of forty a sense of déjà vu. After all, we faced a similar challenge from Japan in the 1980s. It ended with America still rising and Japan declining. It is tempting to believe that China today is just a big Japan.

Unfortunately for us, China and the expansion of globalization, to which its remarkable growth is partly due, are far more disruptive than that. Japan threatened one American city, Detroit, and two American industries: cars and consumer electronics. China—and globalization more broadly—challenges every town in America and every industry. China, India, Brazil, Israel, Singapore, Vietnam, Taiwan, Korea, Chile, and Switzerland (and the list could go on and on) pose a huge challenge to America because of the integration of computing, telecommunications, the World Wide Web, and free markets. Japan was a tornado that blew through during the Cold War. China and globalization are a category-5 hurricane that will never move out to sea in the post–Cold War world.

Charles Vest, the former president of MIT, observed that back in the 1970s and 1980s, once we realized the formidable challenge posed by Japan, “we took the painful steps that were required to get back in the game. We analyzed, repositioned, persevered, and emerged stronger. We did it. In that case, the ‘we’ who achieved this was U.S. industry.” But now something much more comprehensive is required.

“This time around,” said Vest, “it requires a public awakening, establishment of political will, resetting of priorities, sacrifice for the future, and an alliance of governments, businesses, and citizens. It requires truth-telling, sensible investment, a rebirth of civility, and a cessation by both political and corporate leaders of pandering to our baser instincts. Engineering, education, science, and technology are clearly within the core of what has to be done. After all, this is the knowledge age. The United States cannot prosper based on low wages, geographic isolation, or military might. We can prosper only based on brainpower: properly prepared and properly applied brainpower.”

If globalization has put virtually every American job under pressure, the IT revolution has changed the composition of work—as computers, cell phones, the Internet, and all their social-media offshoots have spread. It has eliminated old jobs and spawned new ones—and whole new industries—faster than ever. Moreover, by making almost all work more complex and more demanding of critical-thinking skills, it requires every American to be better educated than ever to secure and keep a well-paying job. The days when you could go directly from high school to a job that supported a middle-class lifestyle, the era memorably depicted in two of the most popular of all American situation comedies—The Honeymooners of the 1950s, with Jackie Gleason as the bus driver Ralph Kramden, and All in the Family of the 1970s, starring Carroll O’Connor as Archie Bunker, the colorful denizen of Queens, New York—are long gone. The days when you could graduate from college and do the same job, with the same skills, for four decades before sliding into a comfortable retirement are disappearing as well. The IT revolution poses an educational challenge—to expand the analytical and innovative skills of Americans—that is no less profound than those created by the transition from plow horses to tractors or from sailing ships to steamships.

The third great challenge for America’s future is the rising national debt and annual deficits, which have both expanded to dangerous levels since the Cold War through our habit of not raising enough money through taxation to pay for what the federal government spends, and then borrowing to bridge the gap. The American government has been able to borrow several trillion dollars—a good chunk of it from China and other countries—because of confidence in the American economy and because of the special international role of the dollar, a role that dates from the days of American global economic supremacy.

In effect, America has its own version of oil wealth: dollar wealth. Because its currency became the world’s de facto currency after World War II, the United States can print money and issue debt to a degree that no other country can. Countries that are rich in oil tend to be fiscally undisciplined; a country that can essentially print its own dollar-denominated wealth can fall into the same trap. Sure enough, since the end of the Cold War, and particularly since 2001, America has suffered a greater loss of fiscal discipline than ever before in its history. And it has come at exactly the wrong time: just when the baby boomer generation is about to retire and draw on its promised entitlements of Social Security and Medicare.

The accumulation of annual deficits is the national debt, and here the widely cited numbers, hair-raising though they are, actually understate what is likely to be the extent of American taxpayers’ obligations. The figures do not take account of the huge and in some cases probably unpayable debts of states and cities. By one estimate, states have $3 trillion in unfunded pension-related obligations. The gaps between what New York, Illinois, and California in particular will owe in the coming years and the taxes their governments can reasonably expect to collect are very large indeed.

Vallejo, California, a city of about 117,000 people, which declared bankruptcy in May 2008, was devoting about 80 percent of its budget to salaries and benefits for its unionized policemen, firefighters, and other public safety officials. Tracy, California, made news when it announced in 2010 that citizens were henceforth being asked to pay for 911 emergency services—$48 per household per year, $36 for low-income households. The fee rises automatically to $300 if the household actually calls 911 and the first responder administers medical treatment. The federal government will surely be called upon to take responsibility for some of these obligations. It will also come under pressure to rescue some of the private pension plans that are essentially bankrupt. And most estimates assume that the country will have to pay only modest interest costs for the borrowing it undertakes to finance its budget deficits. Doubts about the U.S. government’s creditworthiness could, however, raise the interest rates the Treasury Department has to offer in order to find enough purchasers for its securities. This could increase the total debt significantly—depending on how high future interest rates are. In short, our overall fiscal condition is even worse than we think. There is a website that tracks the “Outstanding Public Debt of the United States,” and as of June 15, 2011, the national debt was $14,344,566,636,826.26. (Maybe China will forgive us the 26 cents?)

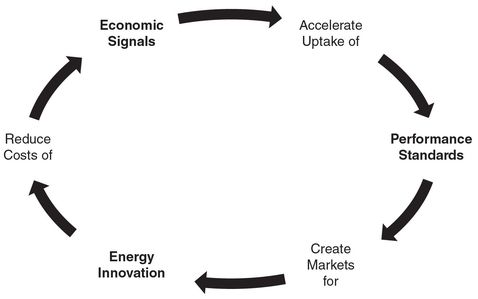

As for the fourth challenge, the threat of fossil fuels to the planet’s biosphere, it is a direct result of the surge in energy consumption, which, in turn, is a direct result of the growth that has come about through globalization and the adoption (especially in Asia) of free-market economics. If we do not find a new source of abundant, cheap, clean, and reliable energy to power the future of all these “new Americans,” we run the risk of burning up, choking up, heating up, and smoking up our planet far faster than even Al Gore predicted.

This means, however, that the technologies that can supply abundant, cheap, clean, and reliable energy will be the next new global industry. Energy technology—ET—will be the new IT. A country with a thriving ET industry will enjoy energy security, will enhance its own national security, and will contribute to global environmental security. It also will be home to innovative companies, because companies cannot make products greener without inventing smarter materials, smarter software, and smarter designs. It is hard to imagine how America will be able to sustain a rising standard of living if it does not have a leading role in this next great global industry.

What all four of these challenges have in common is that they require a collective response. They are too big to be addressed by one party alone, or by one segment of the public. Each is a national challenge; only the nation as a whole can deal adequately with it. Of course, a successful response in each case depends on individuals doing the right things. Workers must equip themselves with the skills to win the well-paying jobs, and entrepreneurs must create these jobs. Americans must spend less and save more and accept higher taxes. Individuals, firms, and industries must use less fossil fuel. But to produce the appropriate individual behavior in each case, we need to put in place the incentives, regulations, and institutions that will encourage it, and putting them in place is a collective task.

Because these are challenges that the nation as a whole must address, because addressing them will require exertion and sacrifice, and because they have an international dimension, it seems natural to discuss them in the language of international competition and conflict. The challenge that Kennan identified in his Long Telegram really was a war of sorts. The four major challenges the country confronts today, however, have to be understood in a different framework. It seems to us that the appropriate framework is provided by the great engine of change in the natural world, evolution. The driving force of evolution is adaptation. Where Kennan was urging Americans to oppose a new enemy, we are calling on Americans to adapt to a new environment.

Over hundreds of millions of years, many thousands of species (plants and animals, including humans) have survived when their biological features have allowed them to adapt to their environment—that is, allowed them to reproduce successfully and so perpetuate their genes. If gray-colored herons are better disguised from their predators than white ones, more and more grays and fewer and fewer whites will survive and reproduce in every generation until all herons are gray. (The phrase “survival of the fittest” that is often used to describe evolution means survival of the best adapted.)

Adaptation becomes particularly urgent when a species’ environment changes. Birds may fly to an island far from their previous habitat. Whether these birds survive will depend on how well adapted they happen to be to their new home, and whether the species as a whole survives there will depend on how successfully those adaptations are passed down to subsequent generations.

Sixty-five million years ago, scientists believe, a large meteor or a series of them struck the Earth, igniting firestorms and shrouding the planet in a cloud of dust. This caused the extinction of three-quarters of all then existing species, including the creatures that at the time dominated the Earth, the dinosaurs.

The end of the Cold War and the challenges that followed brought on a fundamental change in our environment. Only the individuals, the companies, and the nations that adapt to the new global environment will thrive in the coming decades. The end of the Cold War should have been an occasion not for relaxation and self-congratulation but for collective efforts to adapt to the new world that we invented.

We thought of ourselves as the lion that, having just vanquished the leader of the competing pride of lions on the savanna, reigns as the undisputed king of all he surveys. Instead we were, and are, running the risk of becoming dinosaurs.

The analogy between the effects of evolution on particular species and the impact of social, economic, and political change on sovereign states breaks down in a couple of crucial ways, though. For one, adaptation in biology takes place across hundreds of generations, while the adaptation we are talking about will have to happen within a few years. And whether or not a species is well adapted to its environment is the product of uncontrollable genetic coding. Individuals, groups, and nations, by contrast, can understand their circumstances and deliberately make the adjustments necessary to flourish in them. The dinosaurs could do nothing to avoid extinction. The United States can choose to meet the challenges it faces and adopt the appropriate policies for doing so.

The country is not facing extinction, but the stakes involved are very high indeed.

Our success in meeting the four challenges will determine the rate and the shape of U.S. economic growth, and how widely the benefits of such growth are shared. For most of its history the United States achieved impressive annual increases in GDP, which lifted the incomes of most of its citizens. That economic growth served as the foundation for almost everything we associate with America: its politics, its social life, its role in the world, and its national character. Fifty-five years ago the historian David Potter, in People of Plenty, argued that affluence has shaped the American character. The performance of the U.S. economy has generally made it possible for most Americans who worked hard to enjoy at least a modest rise in their material circumstances during their lifetimes—and enabled them to be confident that their children would do the same. Economic growth created opportunity for each generation of Americans, and over time most Americans came to expect that the future would be better than the past, that hard work would be rewarded, and that each generation would be wealthier than the previous one. That expectation came to have a name: “the American dream.” The American dream depends on sustained, robust economic growth, which now depends on the country meeting the four major challenges it faces.

As Senator Lindsey Graham, a South Carolina Republican, put it to us: “America needs to think long term just at a time when long-term thinking has never been more difficult to achieve. I hope it is just more difficult, not impossible.” He added: “Those who do not think the American dream is being jeopardized are living in a dark corner somewhere … It is my hope that the Tea Party, Wall Street, labor unions, and soccer moms will all rally around the idea that ‘I don’t want to lose the American dream on my watch.’”

More and more Americans, though, fear that the American dream is slipping away. A poll published by Rasmussen Reports (November 19, 2010) found that while 37 percent of the Americans polled believed that the country’s best days lay ahead, many more, 47 percent of those polled, thought that the country’s best days had already passed. Failing to take collective action to solve the problems that globalization, IT, debt, and energy and global warming have created risks proving the pessimists right.

“Lots of things in life are more important than money,” goes the old saying, “and they all cost money.” In his 2005 book, The Moral Consequences of Economic Growth, the Harvard economist Benjamin M. Friedman shows how periods of economic prosperity were also periods of social, political, and religious tolerance that saw the expansion of rights and liberties and were marked by broad social harmony. By contrast, when the American economy did poorly, as after the crash of 1929, conflict of all kinds increased. The American dream is the glue that has held together a diverse, highly competitive, and often fractious society.

The manager of the Liverpool, England, soccer team once observed of his sport, which everyone except Americans calls football: “Some people say that football is a matter of life and death. They are wrong. It’s much more important than that.” Similarly, while America’s success or failure in mastering the challenges of globalization, IT, debt, energy, and global warming will define the country’s future, more than the American future is at stake. Because America plays such a vital role in world affairs, the way things turn out in the United States will have effects on the people of the next generations all over the world.

As Michael argued in his 2006 book, The Case for Goliath: How America Acts as the World’s Government in the Twenty-first Century, since 1945, and especially since the end of the Cold War, the United States has provided to the world many of the services that governments generally furnish to the societies they govern. World leaders appreciate this role even when they do not publicly acknowledge it. America has acted as the architect, policeman, and banker of the international institutions and practices it established after World War II and in which the whole world now participates. While maintaining the world’s major currency, the dollar, it has served as a market for the exports that have fueled remarkable economic growth in Asia and elsewhere. America’s navy safeguards the sea-lanes along which much of the world’s trade passes, and its military deployments in Europe and East Asia underwrite security in those regions. Our military also guarantees the world’s access to the oil of the Persian Gulf, on which so much of the global economy depends. American intelligence assets, diplomatic muscle, and occasionally military force do most to resist the most dangerous trend in modern international politics—the proliferation of nuclear weapons.

Over and above all of this, there is America’s visible demonstration of the connections between freedom, economic growth, and human fulfillment. The power of example is a hugely potent social force, and the American example, with its remarkable record of economic success, has had a particularly strong global impact. Of course, other countries are democratic, prosperous, powerful, and influential. The political and economic principles on which the United States is based originated in the British Isles. After the collapse of communism, the countries of Central and Eastern Europe were inspired to follow the capitalist and democratic paths by the democratic, capitalist example of Western Europe. The increasingly prosperous countries of Asia adopted their versions of free-market economies from Japan.

Still, it was the United States that helped to establish and protect democracy and free-market economies in Western Europe and Japan after World War II, and it is the United States that has been, over the past hundred years, the most consistently democratic, prosperous, and powerful—and therefore the most influential—country in the world. It is the American example that deserves the most credit for the global spread of democratic politics and free-market economies. In this sense, too, the world of today is a world that we invented.

Alas, no country is prepared to step in to replace the United States as the world’s government, the way we stepped in when Great Britain went into decline. Nor will our economically pressed allies in Europe and Asia shoulder the costs of these global services. Therefore, a weaker America would leave the world a nastier, poorer, more dangerous place.

In sum, America’s future—and the future of the world beyond America—depends on how well we deal with all four of our challenges. Because they are so important to the United States, and because the United States is so important to the rest of the world, it is not an exaggeration to say that the course of the rest of the century depends on how we respond collectively to them.

There is every reason to think that the United States can rise to meet them. Our optimism rests on our country’s history of rising to great challenges. America is a nation that won its independence through a daring, violent break with the richest country and the greatest maritime power in the world. Americans then settled a vast and wild continent and waged a bloody civil war from which they recovered so rapidly that they built the largest economy on the planet within a few decades. Our armed forces tipped the balance in Europe in the first great war of the twentieth century; and our tanks, ships, and airplanes, as well as the efforts of our fighting men and women, were central to the defeat of Germany and Japan in the second one.

Winston Churchill once said to his British compatriots that “we have not journeyed across the centuries, across the oceans, across the mountains, across the prairies, because we are made of sugar candy.” The same could be said of Americans. Yet faced with era-defining challenges, the country has responded with all the vigor and determination of a lollipop. It has no concerted, serious, well-designed, and broadly supported policies to prepare Americans for the jobs of the future, or to put the nation’s fiscal affairs in order, or to hedge against dangerous changes in the planet’s climate. How to explain our failure of will? Our political system has gone awry, and so cannot produce the big, ambitious policies the country needs. And the American people have not demanded that our leaders tackle the challenges we face because they still have not fully understood the world we are living in.

What world are we living in? What do we need to do to thrive in this world? Do we have the requisite policies and are we carrying them out effectively? How do we adjust them to work better?

In Singapore, the political and business leaders ask these questions obsessively, as Tom saw during a visit to the country in the winter of 2011. The Singaporean economist Tan Kong Yam pinpointed the reason: Because of its small size and big neighbors, Singapore, he said, is “like someone living in a hut without any insulation. We feel every change in the wind or the temperature and have to adapt. You Americans are still living in a brick house with central heating and don’t have to be so responsive.”

Tom saw the result of Singapore’s obsessive attention to what it must do in order to thrive when he visited a fifth-grade science class in an elementary school in a middle-class neighborhood. All the eleven-year-old boys and girls were wearing white lab coats with their names monogrammed on them. Outside in the hall, yellow police tape had blocked off a “crime scene.” Lying on the floor, bloodied, was a fake body that had been “murdered.” The class was learning about DNA through the use of fingerprints and evidence-collecting, and their science teacher had turned each one into a junior CSI investigator. All of them had to collect fingerprints and other evidence from the scene and then analyze them. Asked whether this was part of the national curriculum, the school’s principal said that it was not. But she had a very capable science teacher interested in DNA, the principal explained, and the principal was also aware that Singapore was making a big push to expand its biotech industries, so she thought it would be a good idea to expose her students to the subject.

A couple of them took Tom’s fingerprints. He was innocent—but impressed.

Curtis Carlson, the CEO of SRI International, a Silicon Valley innovation laboratory, has worked with both General Motors and Singapore’s government, and he had this to say: “Being inside General Motors, this huge company, it felt a lot like being inside America today. Adaptation is the key to survival, and the people and companies who adapt the best, survive the best. When you are the biggest company in the world, you become arrogant, and your mind-set is such that no one can convince you that Toyota has anything to teach you. You are focused inward and not outward; you are focused on the politics inside your company rather than on the outside and what your competition is doing. If you become arrogant, you become blind. That was General Motors, and that is, unfortunately, America today … You cannot adapt unless you are constantly monitoring what is happening in your environment. Countries that do that well, such as Singapore, are all about looking out.”

Singapore has no natural resources, and even has to import sand for building. But today its per capita income is just below U.S. levels, built entirely with advanced manufacturing, services, and exports. The country’s economy grew in 2010 at 14.7 percent, led by exports of pharmaceuticals and biomedical equipment. The United States is not Singapore, and it is certainly not about to adopt the more authoritarian features of Singapore’s political system. Nor, like Singapore, are we likely to link the pay of high-level bureaucrats and cabinet ministers to the top private-sector wages (all top government officials in Singapore make more than $1 million a year) or give them annual bonuses tied to the country’s annual GDP growth rate. But we do have something to learn from the seriousness and creativity the Singaporeans bring to elementary education and economic development, and from their attention to the requirements for success in the post–Cold War world. Carlson told us he once met with a senior Singaporean economic minister whom he complimented on the country’s educational and economic achievements. Carlson said the minister “would not accept the compliment.” “Rather, he said, ‘We are not good enough. We must never think we are good enough. We must continuously improve.’ Exactly right—there is no alternative: adapt or die.”

To be sure, countries don’t compete directly with one another in economic terms. When Singapore or China gets richer, America does not become poorer. To the contrary, Asia’s surging economic growth has made Americans better off. But individuals do compete against one another for good jobs, and those with the best skills will get the highest-paying ones. In today’s world, more and more people around the world are able to compete with Americans in this way.

Another reason that Americans have not recognized the magnitude of the challenges they face is that these challenges are all the products of American success. For years, the United States was the world’s most vigorous champion of free trade and investment—the essence of globalization. Globalization spread due to the remarkable productivity of the free-market economies of the West, which traded and invested heavily among themselves. By contrast, the communist countries were discredited by their dismal record in achieving economic growth. So they embraced free markets and globalization.

Likewise, the IT revolution was started in the United States. The transistor, communications satellites, the personal computer, the cell phone, and the Internet, not to mention the PalmPilot, the iPad, the iPhone, and the Kindle, were all invented in the United States and then were brought to the world market by American-based companies. That gave more people than ever the tool kit to compete with us and remove the barriers erected by their own governments.

Similarly, the American government has been able to borrow several trillion dollars because of confidence, both at home and abroad, in the American economy and because of the special international role of the dollar, which dates from the days of American global economic supremacy. And the global population uses so much fossil fuel that it threatens to disrupt the climate precisely because economic growth, which is expanding rapidly, goes hand in hand with a rise in fuel consumption. The surge in growth over the last two decades has come about because of the expansion of American-sponsored globalization and the adoption, especially in Asia, of the American and Western system of free-market economics.

In sum, the world to which the United States must adapt is, to a very great extent, “Made in America.” But in this case familiarity and pride of authorship have bred complacency. We are dangerously complacent about this new world precisely because it is a world that we invented.

Another feature of the four challenges America confronts is the fact that they will require sacrifice, which makes generating collective action much more difficult. This is most obvious in the case of the federal deficits. Americans will have to pay more in taxes and accept less in benefits. Paying more for less is the reverse of what most people want out of life, so it is no wonder that deficits have grown so large. Similarly, Americans won’t begin to use less fossil fuel and industry won’t invest in nonfossil sources of energy unless the prices of coal, oil, and natural gas rise significantly to reflect the true cost to society of our use of them. Higher American fuel bills will ultimately be good for the country and for the planet because they will stimulate the development of renewable energy sources, but they will be hard on household budgets in the short term. To meet the challenge of globalization and the IT revolution and to achieve the steadily rising standard of living U.S. citizens have come to expect, Americans will have to save more, consume less, study longer, and work harder than they have become accustomed to doing in recent decades.

Ours is “no longer a question of sacrificing or not sacrificing—we gave up that choice a long time ago,” notes Michael Maniates, a professor of political science and environmental science at Allegheny College in Pennsylvania, who writes on this theme. We cannot choose whether or not Americans will sacrifice, but only who will bear the brunt of it. The more the present generation shrinks from the nation’s challenges now, the longer sacrifice is deferred, the higher will be the cost to the next generation of the decline in America’s power and Americans’ wealth.

Fifty years ago, at his inauguration, John F. Kennedy urged his fellow citizens, “Ask not what your country can do for you. Ask what you can do for your country.” That idea resonated with most of the Americans to whom he spoke because they had personal memories of an era of supreme and successful sacrifice, which earned them the name “the Greatest Generation.”

Unfortunately, today’s challenges differ in an important way from those of the last century. The problems the Greatest Generation faced were inescapable, immediate, and existential: the Great Depression, German and Japanese fascism, and Soviet communism. The enemies they had to confront were terrifying, tangible, and obvious: long unemployment lines, soup kitchens, heartless bankers evicting families from their homes, the twisted wreckage of Pearl Harbor, the maniacal countenance and braying voice of Adolf Hitler, the ballistic missiles decorating the May Day parades in Red Square in Moscow—missiles that one day were dispatched to Cuba, just ninety miles from America’s shores. When the Soviets weren’t putting up a wall topped with barbed wire, slicing through the heart of Berlin like a jagged knife, they were invading Hungary and Czechoslovakia to stomp out a few wildflowers of freedom that had broken through the asphalt layer of communism the Soviet Union had laid down on those two countries. These challenges were impossible to ignore.

Whether or not the public and the politicians all agreed on the strategies for dealing with those challenges—and often they didn’t—they recognized that decisions had to be made, endless wrangling had to stop, and denying the existence of such threats or postponing dealing with them was unthinkable. Most Americans understood the world they were living in. They understood, too, that in confronting these problems they had to pull together—they had to act collectively—in a unified, serious, and determined way. Confronting those challenges meant bringing to bear the full weight and power of the American people. It also meant that leaders could not avoid asking for sacrifice. Kennedy also summoned his countrymen to “pay any price, bear any burden, meet any hardship, support any friend, oppose any foe.” Everyone had to contribute something—time, money, energy, and, in many cases, lives. Losing was not an option, nor were delay, denial, dithering, or despair.

Today’s major challenges are different. All four—globalization, the IT revolution, out-of-control deficits and debt, and rising energy demand and climate change—are occurring incrementally. Some of their most troubling features are difficult to detect, at least until they have reached crisis proportions. Save for the occasional category-5 hurricane or major oil spill, these challenges offer up no Hitler or Pearl Harbor to shock the nation into action. They provide no Berlin Wall to symbolize the threat to America and the world, no Sputnik circling the Earth proclaiming with every cricket-like chirp of its orbiting signal that we are falling behind in a crucial arena of geopolitical competition. We don’t see the rushing river of dollars we send abroad every month—about $28 billion—to sustain our oil addiction. The carbon dioxide that mankind has been pumping into the atmosphere since the Industrial Revolution, and at rising rates over the past two decades, is a gas that cannot be seen, touched, or smelled.

To be sure, the four great challenges have scarcely gone unnoticed. But in the last few years the country was distracted, indeed preoccupied, by the worst economic crisis in eight decades. It is no wonder that Americans became fixated on their immediate economic circumstances. Those circumstances were grim: American households lost an estimated $10 trillion in the crisis. For more than a year the American economy contracted. Unemployment rose to 9 percent (and if people too discouraged to seek jobs were included, the figure would be significantly higher).

There is an important difference between the challenge of the economic crisis and the four long-term challenges that America faces. The deep recession of late 2007 to 2009 was what economists call a “cyclical event.” A recession reduces economic growth to a point below its potential level. But the economy, and the country, eventually recover. By contrast, the four great challenges will determine our overall economic potential. A recession is like an illness, which can ruin a person’s week, month, or even year. The four challenges we face are more like chronic conditions. Ultimately, these determine the length and the quality of a person’s life. Similarly, how we respond to these major challenges will determine the quality of American life for decades to come. And it is later than we think.

A few years ago, fans of the Chicago Cubs baseball team, which last won the World Series in 1908, took to wearing T-shirts bearing the slogan “Any team can have a bad century.” Countries, too, can have bad centuries. China had three of them between 1644 and 1980. If we do not master the four major challenges we now face, we risk a bad twenty-first century.

Failure is by no means inevitable. Coming back, thriving in this century, preserving the American dream and the American role in the world, will require adopting policies appropriate to confronting the four great challenges. For this there are two preconditions. One is recognizing those challenges—which we have clearly been slow to do. The other is remembering how we developed the strength to face similar challenges in the past. As Bill Gates put it to us: “What was all that good stuff we had that other people copied?” That is the subject of the next chapter.

Ignoring Our History

On January 5, 2011, the opening day of the 112th Congress, the House of Representatives began its activities with a reading of the Constitution of the United States. The idea originated with the Tea Party, a grassroots movement whose support for Republican candidates in the 2010 elections had helped sweep them to victory, giving the GOP control of the House. The members of the new majority wanted to drive home the point that they had come to Washington to enforce limits on both the spending and the general powers of the federal government—which they believed had gone far beyond the powers granted to it in the Constitution. Historians said it was the first time the Constitution, completed in 1787, was read in its entirety on the House floor.

The Constitution has served as the framework of American political and economic life for nearly 225 years, a span of time in which the United States has grown from a series of small cities, towns, villages, and farms along the eastern seaboard to a superpower of continental dimensions with the largest economy in the world. For America’s remarkable history, the Constitution deserves a large share of the credit.

But even reverence for the Constitution can be taken too far. Former congressman Bob Inglis, a conservative Republican from South Carolina who lost his party’s 2010 primary to a Tea Party–sponsored opponent, told us about an experience he had speaking to members of that group at the main branch of the Greenville, South Carolina, county library several weeks before the primary. “About halfway into the hour and a half program, a middle-aged fellow stood up to ask his question,” Inglis said. “He identified himself as a night watchman/security guard. Pulling a copy of the Constitution out of his shirt pocket and waving it in the air, he asked me, ‘yes or no,’ if I would vote to eliminate all case law and go back to just ‘this’—the Constitution.”