And Then There’s This


VIKING

Published by the Penguin Group

Penguin Group (USA) Inc., 375 Hudson Street, New York, New York 10014, U.S.A. • Penguin Group (Canada), 90 Eglinton Avenue East, Suite 700, Toronto, Ontario, Canada M4P 2Y3 (a division of Pearson Penguin Canada Inc.) • Penguin Books Ltd, 80 Strand, London WC2R 0RL, England • Penguin Ireland, 25 St Stephen’s Green, Dublin 2, Ireland (a division of Penguin Books Ltd) • Penguin Books Australia Ltd, 250 Camberwell Road, Camberwell, Victoria 3124, Australia (a division of Pearson Australia Group Pty Ltd) • Penguin Books India Pvt Ltd, 11 Community Centre, Panchsheel Park, New Delhi - 110 017, India • Penguin Group (NZ), 67 Apollo Drive, Rosedale, North Shore 0632, New Zealand (a division of Pearson New Zealand Ltd) • Penguin Books (South Africa) (Pty) Ltd, 24 Sturdee Avenue, Rosebank, Johannesburg 2196, South Africa

Penguin Books Ltd, Registered Offices: 80 Strand, London WC2R 0RL, England

First published in 2009 by Viking Penguin, a member of Penguin Group (USA) Inc.

Copyright © Bill Wasik, 2009

All rights reserved

Portions of this book first appeared in different form as “My Crowd” in Harper’s Magazine and “Hype Machine” in The Oxford American. “Hype Machine” was later published as “Annuals: A North Carolina Band Navigates the Ephemeral Blogosphere” in Best Music Writing 2008, edited by Nelson George and Daphne Carr (Da Capo Press).

Graphs by the author

Library of Congress Cataloging-in-Publication Data

Wasik, Bill.

And then there’s this : how stories live and die in viral culture / Bill Wasik.

p. cm.

Includes bibliographical references and index.

eISBN: 978-1-101-05770-4

1. Internet—Social aspects. 2. Information society—Psychological aspects.

3. Internet users—Psychology. 4. Blogs—Social aspects. I. Title.

HM851.W38 2009

303.48’33—dc22 2009004100

Without limiting the rights under copyright reserved above, no part of this publication may be reproduced, stored in or introduced into a retrieval system, or transmitted, in any form or by any means (electronic, mechanical, photocopying, recording, or otherwise), without the prior written permission of both the copyright owner and the above publisher of this book.

The scanning, uploading, and distribution of this book via the Internet or via any other means without the permission of the publisher is illegal and punishable by law. Please purchase only authorized electronic editions and do not participate in or encourage electronic piracy of copyrightable materials. Your support of the author’s rights is appreciated.

INTRODUCTION:

KEY CONCEPTS

THE NANOSTORY

I begin with a plea to the future historians, those eminent and tenured minds of a generation as yet unconceived, who will sift through our benighted present, compiling its wreckage into wiki-books while lounging about in silvery bodysuits: to these learned men and women I ask only that in telling the story of this waning decade, the first of the twenty-first century, they will spare a thought for the fate of a girl named Blair Hornstine. Period photographs will record that Hornstine, as a high-school senior in the spring of 2003, was a small-framed girl with a full face, a sweep of dark, curly hair, and a broad, laconic smile. She was an outstanding student—the top of her high-school class in Moorestown, New Jersey, in fact, boasting a grade-point average of 4.689—and was slated to attend Harvard. But as her graduation neared, the district superintendent ruled that the second-place finisher, a boy whose almost-equal average (4.634) was lower due only to a technicality, should be allowed to share the valedictory honors.

At this point, the young Hornstine made a decision that even our future historians, no doubt more impervious than we to notions of the timeless or tragic, must rate almost as Sophoclean in the fatality of its folly. After learning of the superintendent’s intention, Hornstine filed suit in U.S. federal court, demanding that she not only be named the sole valedictorian but also awarded punitive damages of $2.7 million. Incredibly, a U.S. district judge ruled in Hornstine’s favor, giving her the top spot by fiat (though damages were later whittled down to only sixty grand). Meanwhile, however, a local paper for which she had written discovered that she was a serial plagiarist, having stolen sentences wholesale from such inadvisable marks as a think-tank report on arms control and a public address by President Bill Clinton. Harvard quickly rescinded its acceptance of Hornstine, who thereafter slunk away to a fate unknown.

I stoop to shovel up the remains of Blair Hornstine’s reputation not to draw any moral from her misdeeds. Of the morals that might be drawn, roughly all were offered up at one point or another during the initial weeks after her lawsuit. She was “just another member of a hyper-accomplished generation for whom getting good grades and doing good deeds has become a way of life,” wrote the Los Angeles Times. The Philadelphia Daily News eschewed such sociology, instead citing more structural causes: “With college costs skyrocketing, scholarship dollars limited and competition fierce for admissions, the road to the Ivy League has become a bloody battleground.” MSNBC’s Joe Scarborough declared that “across the country, parents are suing when the ball doesn’t bounce their child’s way,” while CNN’s Jeffrey Toobin went somewhat more Freudian, branding her judge father as another example of “lawyers. . . inflicting it on [their] children.” Nonprofessional Internet pundits, meanwhile, were every bit as incisive. “The girl’s father is a judge,” wrote one proto-Toobin on MetaFilter, a popular community blog. “Guess he’s taught her too much about the legal system.” Echoed another commenter: “Behind every over-achieving student there are usually a pair of over-achieving parents who want their child to live up to their ridiculous expectations.”

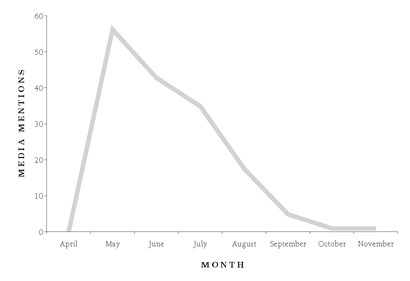

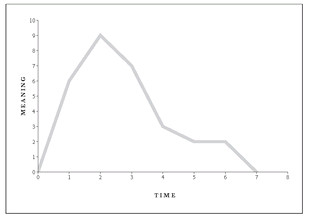

No, I offer up Blair Hornstine to history simply for the trajectory of her short-lived fame, the rapidity with which she was gobbled up into the mechanical maw of the national conversation, masticated thoroughly, and spat out:

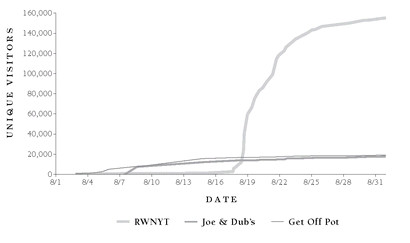

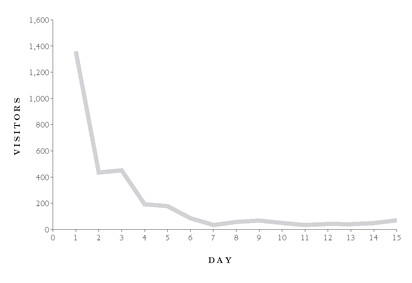

FIG. 0-1—BLAIR HORNSTINE (THE SPIKE)

This telltale spike, this ascent to sudden heights followed by a decline nearly as precipitous—it is a pattern that will recur throughout this book, and it is one that even the casual consumer of mass media will surely recognize. To keep up with current affairs today is to suffer under a terrible bombardment of Blair Hornstines, these media pile-ons that surge and die off within a matter of months, days, even hours. Consider just one of the weeks (May 25 through June 1) that Blair Hornstine was being dragged through the streets behind the media jeep; tied up beside her were a host of other persons or products or things, in various states of uptake or castoff—in the realm of politics, Pfc. Jessica Lynch (on her way down, as her rescue tale was found to have been exaggerated) and Howard Dean (on his way up in the race for president, having excited the Internet); in fashion, the trucker hat (on its way down) and Friendster.com (on its way up); in music, an indie-rock band called the Yeah Yeah Yeahs (down) and a new subgenre of “emo” called “screamo” (up); all these and more were experiencing their intense but ephemeral media moment during one week in the early summer of 2003.

We do not have an easy word to describe these transient bursts of attention, in part because we often categorize them differently based on their object. When this sort of fleeting attention attaches to things, we tend to call them “fads”; but this term, I think, conjures up too much the media-unsavvy consumer of an earlier era, while underestimating the extent to which our enthusiasms today are entirely knowing, postironic, aware. If there is one attribute of today’s consumers, whether of products or of media, that differentiates them from their forebears of even twenty years ago, it is this: they are so acutely aware of how media narratives themselves operate, and of how their own behavior fits into these narratives, that their awareness feeds back almost immediately into their consumption itself.

Likewise, when this sort of transient attention falls on people, we tend to describe it as someone’s “fifteen minutes of fame.” But is celebrity really what is at work here? The majority of the tens of millions of people who pondered the story of Blair Hornstine never knew what she looked like, or cared. What they knew, instead, was how she fit handily into one or more of the various meanings imposed on her: the ambition-addled generation, the lawsuit-drunk society. Most people who remember Blair Hornstine today will recall her not by name or face but simply by role—as “that girl,” perhaps, “who sued to become valedictorian.” No name need even be invoked for her to do her conversational work.

In keeping with the entrepreneurial wordsmithery of the times, I would like to propose a new term to encompass all these miniature spikes, these vertiginous rises and falls: the nanostory. We allow ourselves to believe that a narrative is larger than itself, that it holds some portent for the long-term future; but soon enough we come to our senses, and the story, which cannot bear the weight of what we have heaped upon it, dies almost as suddenly as it was born. The gift we so graciously gave Blair Hornstine in 2003 was her fifteen minutes not of fame but of meaning.

VIRAL CULTURE

On May 27, 2003, during the same fitful weeks, I made up my mind to create a nanostory of my own. To sixty-three friends and acquaintances, I sent an e-mail that began as follows:

You are invited to take part in MOB, the project that creates an inexplicable mob of people in New York City for ten minutes or less. Please forward this to other people you know who might like to join.

More precisely, I forwarded them this message, which, in order to conceal my identity as its original author, I had sent myself earlier that day from an anonymous webmail account. As further explanation, the e-mail offered a “frequently asked questions” (FAQ) section, which consisted of only one question:

Q. Why would I want to join an inexplicable mob?

A. Tons of other people are doing it.

Watches were to be synchronized against the U.S. government’s atomic clocks, and the e-mail gave instructions for doing so. In order that the mob not form until the appointed time, participants were asked to approach the site from all four cardinal directions, based on birth month: January or July, up Broadway from the south; February or August, down Broadway from the north; etc. At 7:24 p.m. the following Tuesday—June 3—the mob was to converge upon Claire’s Accessories, a small chain store near Astor Place that sold barrettes, scrunchies, and such. The gathering was to last for precisely seven minutes, until 7:31, at which time all would disperse. “NO ONE,” the e-mail cautioned, “SHOULD REMAIN AT THE SITE AFTER 7:33.”

My reason for sending this e-mail was simple: I was bored, by which I mean the world at that moment seemed adequate for neither my entertainment nor my sense of self. Something had to be done, and quickly. It was out of the question to undertake a project that might last, some new institution or some great work of art, for these would take time, exact cost, require risk, even as their odds for success hovered at nearly zero. Meanwhile, the odds of creating a short-lived sensation, of attracting incredible attention for a very brief period of time, were far more promising indeed. New York culture, like the national culture, was nothing but a shimmering cloud of nanostories, a churning constellation of “important” new bands and ideas and fashions that literally hundreds if not thousands of writers, in print and online, devoted themselves to building up and then dismantling with alacrity. I wanted my new project to be what someone would call “The X of the Summer,” before I even contemplated exactly what X might be.

In the Internet, moreover, I had been handed a set of tools that allowed sensations to be created by anyone for almost no cost. Some of the successes had been epic, as in the case of The Blair Witch Project, which cost roughly $22,000 to make but earned, after a brilliant and dirt-cheap Internet marketing campaign, more than $248 million in gross—an anecdote that launched a thousand business books. (The sequel cost $15 million and flopped.) Scores of other viral victories had been considerably more modest financially but eye-opening nonetheless. A gregarious Turk named Mahir Çagri lured literally millions of visitors to his slapdash personal home page, which professed his love for beautiful women and popularized, for most of a year, the greeting “I kiss you!” A twentysomething New Yorker named Jonah Peretti (who figures in chapter 3 of this book) sent to his friends an e-mail exchange he had had with Nike, and weeks later found that it had been disseminated to literally millions of people; he later went on to create more such “contagious media” projects and even taught a class on the subject at NYU. These sorts of links passed from person to person, from in-box to in-box, arriving once a week or even daily. Sometimes they were essentially found objects (like Mahir’s home page), culled from the dark corners of the web in the service of semi-ironic hipster sport; but as the years went on, increasingly the forwarded e-mails linked to content that was consciously made to spread. The “viral,” whether e-mail or website or song or video, was gradually emerging as a new genre of communication, even of art.

A marginal genre only a few years ago, the intentional viral has become central as this decade malingers on. Each day, hundreds of millions of videos are viewed on YouTube, and hundreds of thousands are uploaded. The viral currents charging through MySpace, with its millions of members and tens of thousands of bands, can literally create a number-one band—cf. the Arctic Monkeys—while the network of indie-rock music blogs, chief among them Pitchfork Media, is arguably the most important influence on which smaller bands prosper and which languish. Corporations are funneling million-dollar budgets into “word-of-mouth” marketing, with its emphasis on quick-hit pass-along ads, short-lived web “experiences,” promotional blogs, and endless Internet tie-ins to the companies’ more traditional campaigns. In national politics, the Internet has emerged as the crucial location for volunteer recruitment, fund-raising, and, above all, “conversation”—if one can accept that as a euphemism for the churning mess of interconnected blogs, most of them partisan, that every day and night are looking for opportunities to score political points against their enemies. The traditional media, unsure of their own business models, have moved to adapt, scrutinizing their most e-mailed lists for clues to the zeitgeist and then sending their reporters to chase the sorts of contagious stories that the Internet audience craves. All of this money and energy is aimed at creating hyperquick blowups, the kind of miniature crazes whose success is measured in hits and whose life span is measured in months if not weeks or days.

Sudden success has ever been the truest American dream, in 1800 and in 2000 and, God willing, in 2200, among those who survive the terror attacks and sea swells and oil wars. But what this particular decade, the first of the twenty-first century, has built, I would argue, is a new viral culture based on a new type of sudden success—a success with four key attributes. The first is outright speed: viral culture confers success with incredible rapidity, in a few weeks at the outside. The second is shamelessness: it is a success defined entirely by attention, and whether that attention is positive or negative matters hardly one bit. The third is duration: it is a success generally assumed to be ephemeral even by those caught up in it. These first three attributes, it should be noted, are simply more extreme versions of the overnight success afforded by television.

The fourth attribute, however, is new and somewhat surprising. It is what one might call sophistication: where TV success was a passive thing, success in viral culture is interactive, born of mass participation, defined by an awareness of the conditions of its creation. Viral culture is built, that is, upon what one might call the media mind.

THE MEDIA MIND

In the waning days of 2006, Time magazine blundered into ridicule by choosing “You” as its Person of the Year. On a CNN special, Time editor Richard Stengel announced the decision while brandishing a copy of the issue, whose cover’s reflective Mylar panel served as a murky sort of mirror.

STENGEL: Time’s 2006 person of the year is—you!

SOLEDAD O’BRIEN: Literally me?

STENGEL: Yes, you! Me! Everyone!

Just four weeks later the New York Observer would describe the choice as “a public belly flop, an instant punch line among readers and commentators”; and indeed, in their shared derision for Time, American pundits had come together in an almost touching moment of fellow feeling. From the right, Jonah Goldberg groused, “You are Person of the Year because the editors of Time want to live in a Feel-Good Age where everyone is empowered.” From the left, Frank Rich bemoaned what You were ignoring: “In the Iraq era, the dropout nostrums of choice are not the drugs and drug culture of the Vietnam era but the equally self-gratifying and narcissistic (if less psychedelic) pastimes of the Internet.” Time and its critics seemed strangely united, in fact, in their interpretation of the choice, and of the underlying online reality it was supposed to celebrate. “You” were supposed to have taken control of the culture—“the many wresting power from the few”—simply by enacting, each of you together, your naïve, untutored yearnings for community and self-expression. As NBC anchor Brian Williams put it, in a (cautionary) piece in Time’s package:

The larger dynamic at work is the celebration of self. The implied message is that if it has to do with you, or your life, it’s important enough to tell someone. Publish it, record it . . . but for goodness’ sake, share it—get it out there so that others can enjoy it.

Let us set aside, for a moment, the irony of Brian Williams accusing others of the “celebration of self,” and let us instead pose a question: does this vision of Internet culture—as a playground for unsophisticated navel-gazers—actually square with reality? I would argue that it is a deep misunderstanding, and one that dates from an earlier era. Before the Internet, only professionals could attract audiences that ranged far beyond their own circle of acquaintance. But today, we have an era of truly popular culture that is not professionally created: people can now attract tremendous followings for their writing or art (or music, etc.) while making their livelihoods elsewhere. For this reason, debates about the Internet’s effect on culture tend necessarily to boil down to debates over the merits of amateurism. Web 2.0 boosters celebrate the power of the collaborative many, who are breaking the grip of the elites (and corporations) over the creation and distribution of culture. Detractors fret that the rise of the amateur portends a decline in quality, that a culture without professionals is a culture without professionalism—a world of journalism without regard for fact, art without regard for craft, language without regard for grammar, and so on.

Mostly, though, both sides miss the point, because both lean, like Williams, on a homespun notion of the “amateur” that simply doesn’t reflect how amateurs act when they get an audience online. The majority of bloggers may well be writing tedious personal journals, but the readership of that kind of blog is usually not intended to stray, nor does it stray in practice, beyond the bounds of the blogger’s own acquaintance. Much the same can be said of YouTube or Flickr: the content is purely personal and pedestrian precisely to the extent that its poster cares nothing for attracting an audience. With rare exceptions, purely personal content posted on the Internet simply doesn’t matter to anyone its creator doesn’t know, any more than would the diary on your own nightstand. Surely Time would never have named “You” the person of the year if all blogs were diaries, if all YouTube videos were bedroom soliloquies.

Bloggers, mashup artists, YouTube videographers, political “hacktivists”—these people aren’t sitting in their bedrooms spinning out moony personal diaries, hoping that someone will come along and recognize them. Aware they’re always being watched, they act accordingly, tailoring their posts to draw traffic, stirring up controversy, watching their stats to see what works and what doesn’t. They develop a meta-understanding of the conversation they’re in and how that conversation works, and they try to figure out where it’s going so they can get there first. Often these web amateurs have learned these rules just by observing—by lurking on other blogs before starting their own, for example. But just as often they learn from on-the-job experience of sorts, by having scores of ideas fail while one succeeds against all expectation. Either way, countless Internet “amateurs” come to think about their projects with a hard-nosed sophistication that rivals anything to be found inside the conference rooms of our corporate culturemasters.

In fact, the relevant actors of so-called consumer-generated media—the tens if not hundreds of thousands of content creators who are attracting fans beyond their own circles of friends—are every bit as savvy, as ambitious, and as calculating as aspiring culture-makers have ever been. Time’s own examples bore this out: the amateurs who won a following that year invariably had done so cannily. S. R. Sidarth, the young man responsible for Sen. George Allen’s infamous “macaca” moment on YouTube, was an ambitious young volunteer for the opposing campaign who was trailing Allen specifically to document his missteps. The Silence Xperiment, a pseudonymous “bedroom producer” who created a wildly popular mashup album combining the raps of 50 Cent with the music of Queen, had previously DJed clubs, and also had his own radio show (albeit at a college station). Lane Hudson, the blogger whose “Stop Sex Predators” blog posted the e-mails and chats that brought down Rep. Mark Foley, himself worked for a number of political campaigns, including John Kerry’s for president. Tila Tequila, the model/singer who became the first MySpace phenomenon, sent a mass e-mail to “30,000 to 50,000” people inviting them to join up and add her as a friend. These were people who wanted a piece of mass culture; and so they watched the culture, learned from the culture, and then used what they learned to get what they wanted.

And so it goes every year, with the hordes of supposed naïfs out there writing their blogs, producing their videos, posting their songs. Bands trawl other bands’ MySpace pages, raiding their friend lists to try to find potential fans for themselves; every month, thousands more buy a software program called Easy Adder, which (for $24.95) allows them to automate the friend-request process, sending friend requests to hundreds or even thousands of potential friends at a time. On YouTube, video-makers create their own “channels” with customizable promotional backdrops, and they pimp their home page URLs at the ends of videos. Bloggers follow their stats and trackbacks; they add other bloggers to their “blogrolls” in hopes they will reciprocate. Having been sold culture for so many years, in so many sophisticated ways, consumers have now been handed the tools to sell themselves and they are doing so with great gusto.

All this is why I, for one, had no quibble with Time’s choice of “You” as the person of the year. Indeed, I will happily put “You” forward as the defining person of this whole random decade, which our hordes of cultural critics have redefined so often and so variously that it lacks an identity or even a name (the Zeros? the Oughties?). But make no mistake: I am onto You. You are no more starry-eyed about community and collaboration and “people power” and self-expression than is Brian Williams or Jonah Goldberg or Frank Rich; no more, for that matter, than am I. You blog and photograph and record precisely so you can be read and heard and seen by others. You monitor and you scheme and you promote, just like the hit-addled corporate culture has been teaching you for years. Because when your words or actions or art are available not only to your friends but to potentially thousands or even millions of strangers, it changes what you say, how you act, how you see yourself. You become aware of yourself as a character on a stage, as a public figure with a meaning. You develop, that is, the media mind. You know exactly what you are doing.

THE EXPERIMENTAL METHOD

“If you can measure it, you can manage it,” I heard a man behind the podium at a marketing conference say. He attributed this remark to the late-nineteenth-century scientist and engineer Lord Kelvin—whom he called “a pretty big data geek himself, back in the day”—but added, “The quote is actually much more Old English than that.” The full quote, as it happens, takes as its subject not management but science itself, and it is much beloved of scientists, appearing on some fifteen thousand different websites in its original not-quite-Old-English, which reads as follows:

I often say that when you can measure what you are speaking about, and express it in numbers, you know something about it; but when you cannot measure it, when you cannot express it in numbers, your knowledge is of a meagre and unsatisfactory kind; it may be the beginning of knowledge, but you have scarcely in your thoughts advanced to the state of Science, whatever the matter may be.

Kelvin’s point is that data is a necessary undergirding of science—indeed, data engenders science. And this is a crucial insight to understanding viral culture. For the better part of a century, corporations have seen culture through a lens of numbers, from 1926, when Maxwell Sackheim invented the Book-of-the-Month Club and began the hardening of the art of direct marketing into a statistical science; to 1936, when George Gallup used a scientifically selected random sample to correctly call the presidential election for FDR; to 1941, when the sociologist Robert Merton convened the first “focus group,” a tool that marketers would quickly use to wed the wisdom of polling to the qualitative insights of small-group conversations; and so on into the rest of the century, with its Nielsen ratings and demographic profiling and direct marketing. Corporate America has had the numbers and has used them to conduct its profit-seeking experiments on all of us.

But the Internet is revolutionary in how it has democratized not just culture-making but culture monitoring, giving individual creators a profusion of data with which to identify trends surrounding their own work and that of others. On YouTube, a video’s poster—or anyone else who cares to check—is privy to the kind of internals that TV executives can only dream of: not just real-time rankings of how often a video has been viewed, but which other videos a fan is likely to enjoy as well, based on the behavior of other users. For music, the story is the same: on MySpace, a band can track which of its songs are downloaded more and how this compares to other bands. This shift has seeped into the old media as well—writers for print newspapers or magazines, through their site’s “most e-mailed” lists, can see precisely which of their articles attain the heights of ubiquity and which fall into oblivion. If one’s built-in numbers do not provide enough market intelligence, there are countless other sites to consult. How many bloggers have linked to your site? The search engine Technorati will tell you, and also show you what those bloggers have to say. How is your site doing versus the competition? At Alexa.com you can see not only your site’s rank in its category but also plot it against other sites you choose.

This glut of data, combined with the ability to assume anonymity or pseudonymity—i.e., to act without one’s identity influencing the results, which is another crucial prerequisite of science—is establishing the Internet as an unprecedented medium for cultural experimentation. As more and more Americans become aware of the patterns and forces that shape culture, they begin to develop their own hypotheses about what will spread and what won’t. Online, with minimal cost or risk, they can test these theories, tweaking different versions of their would-be viral projects and monitoring the results, which in turn feed back into how future projects are made. In viral culture, we are all driven by the ratings, the numbers, the Internet equivalent of the box-office gross.

It is with trembling anticipation that I welcome our new generation of cultural scientists, to whom I dedicate this book and for whom I hope it might serve as both primer and cautionary tale. In its pages I survey the various precincts of viral culture, from politics and literature to marketing and music, in locales throughout the virtual world (blogs, chat rooms, MySpace, YouTube) and the real one (New York, Washington, Minneapolis, Santa Monica). In each chapter, I also detail the results of one of my own admittedly modest experiments in viral culture. But before those nanostories can be told, I need to return to that e-mail of May 27, 2003, and reveal what it portended for Claire’s Accessories, and for me.

1.

MY CROWD

EXPERIMENT: THE MOB PROJECT

BOREDOM

I started the Mob Project because I was bored, I wrote above, and perhaps that explanation seems simple enough. But before we proceed to the rise and fall of my peculiar social experiment, I think that boredom is worth interrogating in some detail. The first recorded use of the term, per the OED, did not take place until 1852, in Charles Dickens’s Bleak House, chapter 12. It is deployed by way of describing Lady Dedlock, a woman with whose habits of mind I often find myself in sympathy:

Concert, assembly, opera, theatre, drive, nothing is new to my Lady, under the worn-out heavens. Only last Sunday, when poor wretches were gay—. . . encompassing Paris with dancing, love-making, wine-drinking, tobacco-smoking, tomb-visiting, billiard, card and domino playing, quack-doctoring, and much murderous refuse, animate and inanimate—only last Sunday, my Lady, in the desolation of Boredom and the clutch of Giant Despair, almost hated her own maid for being in spirits.

Psychologists have been trying to elucidate the nature of boredom since at least Sigmund Freud, who identified a “lassitude” in young women that he attributed to penis envy. In 1951, Otto Fenichel described boredom as a state where the “drive-tension” is present but the “drive-aim” is absent, a state of mind that he said could be “schematically formulated” in this enigmatic but undeniably evocative way: “I am excited. If I allow this excitation to continue I shall get anxious. Therefore I tell myself, I am not at all excited, I don’t want to do anything. Simultaneously, however, I feel I do want to do something; but I have forgotten my original goal and do not know what I want to do. The external world must do something to relieve me of my tension without making me anxious.”

In the 1970s, researchers developed various tests designed, in part, to assess the boredom-plagued, from the Imaginal Processes Inventory (Singer and Antrobus, 1970) to the Sensation Seeking Scale (Zuckerman, Eysenck & Eysenck, 1978). But it was not until 1986, with the unveiling of the Boredom Proneness Scale (BPS), that the propensity toward boredom in the individual could be comprehensively measured and reckoned with. The test was created by Richard Farmer and Norman Sundberg, both of the University of Oregon, and it has allowed researchers in the two decades since to tally up boredom’s grim wages. The boredom-prone, we have discovered, display higher rates of procrastination, inattention, narcissism, poor work performance, and “solitary sexual behaviors” including both onanism and the resort to pornography.

The BPS test consists of twenty-eight statements to which respondents answer true or false, with a point assessed for each boredom-aligned choice, e.g.:

1. It is easy for me to concentrate on my activities. [+1 for False.]

5. I am often trapped in situations where I have to do meaningless things. [+1 for True.]

25. Unless I am doing something exciting, even dangerous, I feel half-dead and dull. [+1 for True.]
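The scoring rule described above is simple enough to state as a short procedure. The Python sketch below assumes only what the text gives: true/false answers and one point for each boredom-aligned choice. Only items 1, 5, and 25 have their keyed answers shown here, so the key is deliberately partial. The names `KNOWN_KEY` and `bps_score` are illustrative and are not part of the published scale.

```python
# A minimal sketch of Boredom Proneness Scale (BPS) scoring, assuming only
# what the text states: true/false answers, one point per boredom-aligned answer.
# The full 28-item key is not reproduced here, so KNOWN_KEY is deliberately
# partial: it holds only the three items whose keyed answers are quoted above.

# Item number -> the answer that earns a point.
KNOWN_KEY = {
    1: False,   # "It is easy for me to concentrate on my activities."
    5: True,    # "I am often trapped in situations where I have to do meaningless things."
    25: True,   # "Unless I am doing something exciting, even dangerous, I feel half-dead and dull."
}


def bps_score(answers, key=KNOWN_KEY):
    """Count one point for each item whose answer matches the keyed direction.

    answers: dict mapping item number -> True/False response.
    key: dict mapping item number -> keyed (boredom-aligned) answer.
    Items missing from either dict contribute nothing.
    """
    return sum(1 for item, keyed in key.items()
               if item in answers and answers[item] == keyed)


if __name__ == "__main__":
    # Hypothetical respondent: finds it hard to concentrate (False on #1),
    # feels trapped (True on #5), but does not feel half-dead (False on #25).
    respondent = {1: False, 5: True, 25: False}
    print(bps_score(respondent))  # 2
```

Supplied with the full twenty-eight-item key as `key`, the same function would produce totals anywhere from 0 to 28.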

Farmer and Sundberg found the average score to be roughly 10. My own score is 16, and indeed when I peruse the list of correct answers I feel as if I am scanning a psychological diagnosis of myself. I do find it hard to concentrate; I do find myself constantly trapped doing meaningless things; and half-dead or dull does not begin to describe how I feel when I lack a project that is adequately transgressive or, worse, find myself exiled somewhere among the slow-witted. I worry about other matters while I work (#2), and I find that time passes slowly (#3). I am bad at waiting patiently (#15), and in fact when I am forced to wait in line, I get restless (#17). Even for those questions to which I give the allegedly nonbored answer, I tend to feel I am forfeiting the points on some technicality. Yes, I do have “projects in mind, all the time” (#7)—so many that few of them ever get acted upon, precisely because my desperate craving for variety means that I am rarely satisfied for very long with even projects that are hypothetical. Yes, others do tend to say that I am a “creative or imaginative person” (#22), but honestly I think this is due to declining standards.

And no, I do not often find myself “at loose ends” (#4), sitting around doing nothing (#14), with “time on my hands” (#16), without “something to do or see to keep me interested” (#13). But such has been true for me only since the start of this viral decade, when my idle stretches have been erased by the grace of the Internet, with its soothingly fast and infinitely available distractions, engaging me for hours on end without assuaging my fundamental boredom in any way. In fact, I would advance the prediction that answers to these latter four questions have become meaningless in recent years, when all of our interactive technologies—video games and mobile devices as well as the web—have kept those of us most boredom-prone from generally thinking, as we might while watching TV, that we are “doing nothing,” even if in every practical sense we are doing precisely that. I fear I am ahead of the science, however, because I have been unable to find any study that either supports or undercuts this conjecture. I put the question to Richard Farmer, the lead author of the Boredom Proneness Scale, only to find that he had not done boredom research in many years. He had moved on to other topics. I did not ask him why, though I have the glimmer of a notion.

EXPERIMENT: THE MOB PROJECT

That May my boredom was especially acute, but none of the projects crowding around in my mind seemed feasible. I wanted to use e-mail to get people to come to some sort of show, where something surprising would happen, perhaps involving a fight; or maybe to invent an entire fake arts scene, some tight-knit band of fictitious young artists and writers who all lived together in a loft, and we would reel in journalists and would-be admirers eager to congratulate themselves for having discovered the next big thing. Both of these ideas seemed far too difficult. It was while ruminating on them, in the shower, that I realized I needed to make my idea lazier. I could use e-mail to gather an audience for a show, yes, but the point of the show should be no show at all: the e-mail would be straightforward about exactly what people would see, namely nothing but themselves, coming together for no reason at all. Such a project would work, I reflected, because it was meta, i.e., it was a self-conscious idea for a self-conscious culture, a promise to create something out of nothing. It was the perfect project for me.

During the week between the first MOB e-mail and the appointed day, I found myself anxious, not knowing what to expect. MOB’s only goal was to attract a crowd, but as an event it had none of the typical draws: no name of any artist or performer, no endorsement by any noted tastemaker. All it had was its own ironically wild, unsupportable claims—that “tons” of people would be there, that they would constitute a “mob.” The subject heading of the e-mail had read MOB #1, so as to imply that it was the first in what would be an ongoing series of gatherings. (In fact, I was unsure whether there would be a MOB #2.) As I was gathering my things to head north the seven blocks from my office to the mob site, I received a call from my friend Eugene, a stand-up comedian whose attitude toward daily living I have long admired. Once, on a slow day while he was working at an ice-cream store, he slid a shovel through the inside handles of the store’s plate-glass front doors, along with a note that read CLOSED DUE TO SHOVEL.

“Is the mob supposed to be at Claire’s Accessories?” Eugene asked.

Yes, I said.

“There’s six cops standing guard in front of it,” he said. “And a paddywagon.”

This was not the mob I had been anticipating. If anyone was to land in that paddywagon, I thought, it ought to be me, and so I hastened to the site. The cops, thankfully, did not seem to be in an arresting mood. But they would not allow anyone to enter the store, even when we told them (not unpersuasively, I thought) that we were desperate accessories shoppers. I scanned the faces of passersby, hoping to divine how many had come to mob—quite a few, I judged, based on their excited yet wry expressions, but seeing the police they understandably hurried past. Still others lingered around, filming with handheld video cameras or snapping digital pictures. A radio crew lurked with a boom mike. Despite the police, my single e-mail had generated enough steam to power a respectable spectacle.

The underlying science of the Mob Project seemed sound, and so I readied plans for MOB #2, which would be held two weeks later, on June 17. I found four ill-frequented bars near the intended site and had the participants gather at those beforehand, again split by the month of their birth. Ten minutes before the appointed time of 7:27 p.m., slips of paper bearing the final destination were distributed at the bars. The site was the Macy’s rugs department, which in that tremendous store is a mysterious and inaccessible kingdom, the farthest reach of the ninth and uppermost floor, accessed by a seemingly endless series of ancient escalators that grind past women’s apparel and outerwear and furs and fine china and the in-store Starbucks and Au Bon Pain. By quarter past seven waves of mobbers were sweeping through the dimly illuminated furniture department, glancing sidelong toward the rugs room as they pretended to shop for loveseats and bureaus; but all at once, in a giant rush, two hundred people wandered over to the carpet in the back left corner and, as instructed, informed clerks that they all lived together in a Long Island City commune and were looking for a “love rug.”

E-MAIL MOB TAKES MANHATTAN read the headline two days later on Wired News, an online technology-news site. More media took note, and interview requests began to filter in to my anonymous webmail account: on June 18, from New York Magazine; on June 20, from the New York Observer, NPR's All Things Considered, the BBC World Service, the Italian daily Corriere della Sera. By MOB #3, which was held fifteen days after the previous one, I had gotten fifteen requests in total. Would-be mobbers in other cities had heard the call as well, and soon I received e-mails from San Francisco, Minneapolis, Boston, Austin, announcing their own local chapters. Some asked for advice, which I very gladly gave. ("Before you send out the instructions, visit the spot at the same time and on the same day of the week, and figure out how long it will take people to get to the mob spot, etc.," I wrote to Minneapolis.)

Perhaps most important, the Mob Project was almost immediately taken up by blogs. A blog called Cheesebikini, run by a thirty-one-year-old graduate student in Berkeley named Sean Savage, gave the concept its name—“flash mobs”—as an homage to a 1973 science-fiction short story called “Flash Crowd,” by Larry Niven. The story is a warning about the unexpected downside of cheap teleportation technology: packs of thrill seekers can beam themselves in whenever something exciting is going down. The protagonist, Jerryberry Jensen, is a TV journalist who broadcasts a fight in a shopping mall, which soon, thanks to teleportation booths, grows into a multiday riot, with miscreants beaming in from around the world. Jensen is blamed, and his bosses threaten to fire him, but eventually he clears his name by showing how the technology was to blame. Since the mid-1990s, the term “flash crowd” had been invoked from time to time as a metaphor for the sudden and debilitating traffic surges that can occur when a small website is linked to by a very popular one. This is more commonly known as the “Slashdot effect,” after the popular tech-head site Slashdot.org, which was—and still is—known to choke the sites on which it bestows links.

With its meteorological resonance, its evocation of a “flash flood” of people mobbing a place or a site or a thing all at once and then dispersing, the term “flash mob” was utterly perfect. The phenomenon it described, now properly named, could venture out into the universe and begin swiftly, stylishly, assuredly to multiply.

HYPOTHESIS

The logic behind the Mob Project ran, roughly, as follows.

1. At any given time in New York—or in any other city where culture is actively made—the vast majority of events (concerts, plays, readings, comedy nights, and gallery shows, but also protests, charities, association meetings) are summarily ignored, while a small subset of events attracts enormous audiences and, soon, media attention.

2. For most of these latter events, the beneficiaries of that ineffable boon known as buzz, one can, after the fact, point out nominal reasons for their sudden popularity: high quality, for example; or perception of high quality due to general acclamation, or at least an assumption of general acclamation; or the participation of some well-liked figure, or the presence, or rumored presence, of same; etc.

3. But: so often does popularity, even among the highest of brow, bear no relationship to merit, that an experiment might be devised to determine just how far one might take the former while neglecting the latter entirely; that is, how much buzz one could create about an event whose only point was buzz, a show whose audience was itself the only show. Given that all culture in New York was demonstrably commingled with scenesterism, my thinking ran, it should theoretically be possible to create an art project consisting of pure scene, meaning the scene would be the entire point of the work, and indeed would itself constitute the work.

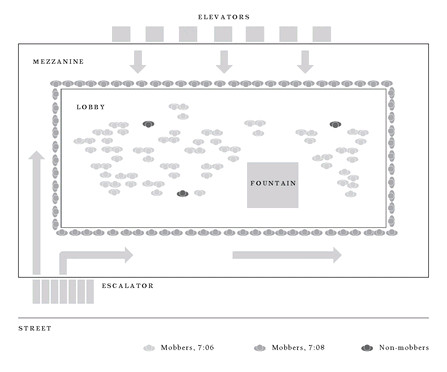

At its best, the Mob Project brought to this task a sort of formal unity, as can be illustrated in MOB #3, which took place fifteen days after #2. To get the slips with the destination, invitees were required to roam the downstairs food court of Grand Central Station, looking for Mob Project representatives reading the New York Review of Books. The secret location was a hotel adjacent to the station on Forty-second Street, the Grand Hyatt, which has a block-long lobby with fixtures in the high ’80s style: gold-chrome railings and sepia-mirror walls and a fountain in marblish stone and a mezzanine overhead, ringed around. Mob time was set for 7:07 p.m., the tail end of the evening rush hour; the train station next door was thick with commuters and so (visible through the hotel’s tinted-glass facade) was the sidewalk outside, but the lobby was nearly empty: only a few besuited types, guests presumably, sunk here and there into armchairs.

Starting five minutes beforehand the mob members slipped in, in twos and threes and tens, milling around in the lobby and making stylish small talk. Then all at once, they rode the elevators and escalators up to the mezzanine and wordlessly lined the banister, like so:

FIG. 1-1: SCHEMATIC, MOB #3 IN GRAND HYATT HOTEL

The handful of hotel guests were still there, alone again, except now they were confronted with a hundreds-strong armada of mobbers overhead, arrayed shoulder to shoulder, staring silently down. Intimidation was not the point; we were staring down at where we had just been, and also across at one another, two hundred artist-spectators commandeering an atrium on Forty-second Street as a coliseum-style theater of self-regard. After five minutes of staring, the ring erupted into precisely fifteen seconds of tumultuous applause—for itself—after which it scattered back downstairs and out the door, just as the police cruisers were rolling up, flashers on.

THE MEME, SUPREME

From the moment flash mobs first began to spread, there was a term applied to them by both boosters and detractors, and that term was meme. “The Flash Mob meme is #1 with a bullet,” wrote the author Howard Rheingold on his blog. Three days later, on MetaFilter, one commenter wrote, “I was going to take the time to savage this wretched warmed-over meme, but am delighted to see that so many of you have already done so”; countered the next commenter, “I happen to think the meme is a bit silly, but the backlash even more so.” In September, when the comic strip Doonesbury had one of its characters create flash mobs for Howard Dean, one blogger named Eric enthused, “Trust Gary [sic] Trudeau to combine the hottest memes of the summer,” adding as an aside: “Yes, I fully realize that [I] just called Dr Dean a meme.”

Readers will be excused for their ignorance of this term, though in 1998 it did enter the Merriam-Webster dictionary, which defines it as “an idea, behavior, style, or usage that spreads from person to person within a culture.” The operative word here is “spreads,” for this simple monosyllabic noun has buried within it a particular vision of culture and how it evolves. In a meme’s-eye view of the world, any idea—from a religious belief or a political affiliation to a new style of jeans or a catchy tune—can be seen as a sort of independent agent loosed into the population, where it travels from mind to mind, burrowing into each, colonizing all as widely and ruthlessly as it can. Some brains are more susceptible than others to certain memes, but by and large memes spread by virtue of their own inherent contagiousness. The meme idea, that is, sees cultural entities as being similar to genes, or better, to viruses, and in fact the term “viral” is often used to express the same idea.

If we consider the meme idea itself as a meme, we see that its virulence in the Internet era has been impressive. The term was coined in 1976 by the biologist Richard Dawkins in his first book, The Selfish Gene, in which he persuasively argues that genes are the operative subjects of evolutionary selection: that is, individuals struggle first and foremost to perpetuate their genes, and insofar as evolution is driven by the "survival of the fittest," it is the fitness of our genes, not of us, that is the relevant factor. He puts forward a unified vision of history in which replication is king: in Dawkins's view, from that very first day when, somewhere in the murk, there emerged the first genes (molecules with the ability to create copies of themselves), these selfish replicators have been orchestrating the whole shebang. After extending this argument to explain the evolution of species, Dawkins turns to the question of human culture. If replication, over long periods of time, explains why we exist, then might it not serve also to explain what inhabits our minds? Dawkins writes, with characteristic flourish,

I think that a new kind of replicator has recently emerged on this very planet. It is staring us in the face. It is still in its infancy, still drifting clumsily about in its primeval soup. . . . The new soup is the soup of human culture. We need a name for the new replicator, a noun that conveys the idea of a unit of cultural transmission, or a unit of imitation. “Mimeme” [Greek for “that which is imitated”] comes from a suitable Greek root, but I want a monosyllable that sounds a bit like gene. I hope my classicist friends will forgive me if I abbreviate mimeme to meme.

Although Dawkins clearly intends the meme to be analogous first and foremost to the gene, which spreads itself only through successive generations, through the reproduction of its host, on the very same page he invokes the more apt biological metaphor of the virus. "When you plant a fertile meme in my mind you literally parasitize my brain," he writes, "turning it into a vehicle for the meme's propagation in just the way that a virus may parasitize the genetic mechanism of a host cell."

Why has the meme meme spread? Why has the viral become so viral? The Selfish Gene was a bestselling book thirty years ago, but it was not until the mid-1990s that the meme and viral ideas became epidemics of their own. I would hazard two reasons for this chronology, one psychological and one technological. The psychological reason is the rise of market consciousness, in a culture where stock ownership increased during the 1990s from just under a quarter to more than half. After all, the meme vision of culture—where ideas compete for brain space, unburdened by history or context—really resembles nothing so much as an economist’s dream of the free market. We are asked to admire the marvelous theoretical efficiencies (no barriers to entry, unfettered competition, persistence of the fittest) but to ignore the factual inequalities: the fact, for example, that so many of our most persistent memes succeed only through elaborate sponsorship (what would be a genetic analogy here? factory-farm breeding, perhaps?), while other, fitter memes wither.

The other, technological reason for the rise of the meme/viral idea is perhaps more obvious: the Internet. But it is worth teasing out just what about the Internet has conjured up these memes all around us. Yes, the Internet allows us to communicate instantaneously with others around the world, but that has been possible since the telegraph. Yes, the Internet allows us to find others with similar interests and chat among ourselves; but this is just an online analogue of what we always have been able to do in person, even if perhaps not on such a large scale. What the Internet has done to change culture—to create a new, viral culture—is to archive trillions of our communications, to make them linkable, trackable, searchable, quantifiable, so they can serve as ready grist for yet more conversation. In an offline age, we might have had a vague notion that a slang phrase or a song or a perception of a product or an enthusiasm for a candidate was spreading through social groups; but lacking any hard data about how it was spreading, why would any of us (aside from marketers and sundry social scientists) really care? Today, though, in the Internet, we have a trillion-terabyte answer that in turn has influenced our questions. We can see how we are embedded in numerical currents, how we precede or lag curves, how we are enmeshed in so-called social networks, and how our networks compare to the networks of others. The Internet has given us not just new ways of communicating but new ways of measuring ourselves.

PROPAGATION

To spread the Mob Project, I endeavored to devise a media strategy on the project's own terms. The mob was all about the herd instinct, I reasoned, about the desire not to be left out of the latest fad; logically, then, it should grow as quickly as possible and eventually (this seemed obvious) buckle under the weight of its own popularity. I developed a simple maxim for myself, as custodian of the mob: "Anything that grows the mob is pro-mob." And in accordance with this principle, I gave interviews to all reporters who asked. In the six weeks following MOB #3 I did perhaps thirty different interviews, not only with local newspapers (the Post and the Daily News, though not yet the Times—more on that later) but also with Time, Time Out New York, the Christian Science Monitor, the San Francisco Chronicle, the Chicago Tribune, the Associated Press, Reuters, Agence France-Presse, and countless websites.

There was also the matter of how I would be identified. My original preference had been to remain entirely anonymous, but I had only half succeeded; at the first, aborted mob, a radio reporter had discovered my first name and broadcast it, and so I was forced to be Bill—or, more often, “Bill”—in my dealings with the media thereafter. “[L]ike Cher and Madonna, prefers to use only his first name,” wrote the Chicago Daily Herald. (To those who asked my occupation, I replied simply that I worked in the “culture industry.”) Usually a flash-mob story would invoke me roughly three quarters of the way down, as the “shadowy figure” at the center of the project. There were dark questions as to my intentions. “Bill, who denies he is on a power-trip, declined to be identified,” intoned Britain’s Daily Mirror. Here is an exchange from Fox News’s On the Record with Greta Van Susteren:

ANCHOR: Now, the guy who came up with the Mob Project is a mystery man named Bill. Do either of you know who he is?

MOBBER ONE: Nope.

MOBBER TWO: Well, I've—I've e-mailed him. That's about it.

MOBBER ONE: Oh, you have ... ?

ANCHOR: What—what—who is this Bill? Do you know anything about him?

MOBBER TWO: Well, from what I've read, he's a—he works in the culture industry, and that's—that's about as specific as we've gotten with him.

As the media frenzy over the mobs grew, so did the mobs themselves. For MOB #4, I sent the mob to a shoe store in SoHo, a spacious plate-glassed corner boutique whose high ceilings and walls made of undulating mosaic gave it an almost aquatic feel, and I was astonished to see the mob assemble: as I marched with one strand streaming down Lafayette, we saw another mounting a pincers movement around Prince Street from the east, pouring in through the glass doors past the agape mouths of the attendants, perhaps three hundred bodies, packing the space and then, once no one else could enter, crowding around the sidewalk, everyone gawking, taking pictures with cameras, calling friends on cell phones (as the instructions for this mob had ordered), each pretending to be a tourist, all feigning awe—an awe I myself truly felt—to be not merely in New York but so close to the center of something so big.

Outside New York, too, the mob was multiplying in dizzying ways, far past the point that I could correspond with the leaders or even keep up with all the different new cities: not only all across the United States but London, Vienna, twenty-one different municipalities in Germany. In July, soon after New York’s MOB #4, Rome held its first two flash mobs; in the first, three hundred mobbers strode into a bookstore and demanded nonexistent titles (e.g., Pinocchio 2: The Revenge), while the second (which, based on descriptions I read later, is perhaps my favorite flash mob of any ever assembled) was held right in the Piazza dell’Esquilino, in a crosswalk just in front of the glorious Basilica di Santa Maria Maggiore, where the crowd broke into two and balletically crossed back and forth and met each other multiple times, first hugging, then fighting, and then asking for the time: “CHE ORE SONO?” yelled one semi-mob, and the other replied, “LE SETTE E QUARANTA”—“It’s 7:40”—which, in fact, it was exactly.

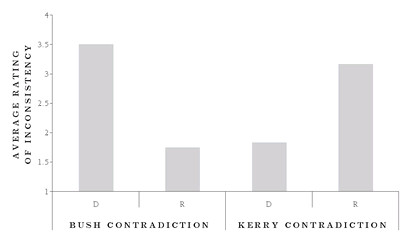

UP: THE BANDWAGON EFFECT

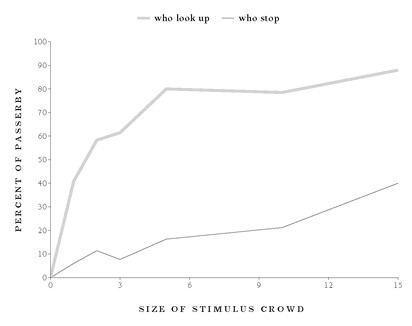

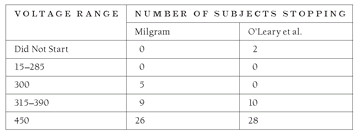

When a British art magazine asked me who, among artists past or present, had most influenced the flash-mob project, I named Stanley Milgram, the social psychologist best known for his authority experiments in which he induced average Americans to give seemingly fatal shocks to strangers. As it happens, I later discovered that Milgram also did a project much like a flash mob, in which a "stimulus crowd" of his confederates, varying in number from one to fifteen, stopped on a busy Manhattan sidewalk and all at once looked up to the same sixth-floor window. The results, in a chart from his paper "Note on the Drawing Power of Crowds of Different Size":

FIG. 1-2—MILGRAM’S CROWD EXPERIMENT

In this single chart, Milgram elegantly documented the essence of herd behavior, what economists call a “bandwagon effect”: the instinctive tendency of the human animal to rely on the actions of others in choosing its own course of action. We get interested in the things we see others getting interested in. Homo sapiens has been jumping on bandwagons, as the expression goes, since long before the vogue for such vehicles in the late nineteenth century (when less popular American politicians would clamor to be seen aboard the bandwagon of a more popular candidate for another office, hence the meaning we have today); since long before, even, the invention of the wheel.

The bandwagon effect is especially pronounced in Internet culture-making, however, because popularity can immediately be factored into how choices are presented to us. If in our nonwired lives we jump on a bandwagon once a day, in our postwired existence we hop whole fleets of them—often without even knowing it. Start, for example, where most of us start online, with Google: when we search for a phrase, Google’s sophisticated engine delivers our results in an order determined (at least in part) by how many searchers before us eventually clicked on the pages in question. Whenever we click on a top-ten Google result, we are jumping on an invisible bandwagon. Similarly with retailers such as Amazon, whose front-page offerings, tailored to each customer, are based on what other customers have bought: another invisible bandwagon. Then there are the countless visible bandwagons, in the form of the ubiquitous lists: most bought, most downloaded, most e-mailed, most linked-to. Even the august New York Times, through its “most e-mailed” list, has turned the news into a popularity contest, whose winners in turn get more attention among Internet readers who have only a limited amount of attention to give. Thus do the attention-rich invariably get richer.

In some cases, our bandwagons—online or off—do us a service, by weeding out extraneous or undesirable information through the “wisdom of crowds” (as a bestselling book by James Surowiecki put it). Moreover, in the case of culture, what is already popular in some respect has more intrinsic value: we read news, or watch television, or listen to music in part so we can have discussions with other people who have done the same. But it is a severe mistake to assume, as the economically minded often do, that the bandwagon effect manages to select for intrinsic worth, or even to give individuals what they actually want. This was demonstrated ingeniously a few years ago in an experiment run by Matthew Salganik, a sociologist who was then at Columbia University. Fourteen thousand participants were invited to join a music network where they could listen to songs, rate them, and, if they wanted, download the songs for free. A fifth of the volunteers—the “independent” group—were left entirely to their own tastes, unable to see what their fellows were downloading. The other four-fifths were split up into eight different networks—the “influence” groups—all of which saw the same songs, but listed in rank order based on how often they had been downloaded in that particular group. The songs were by almost entirely unknown bands, to avoid having songs get popular based on preexisting associations with the band’s name.

What Salganik and his colleagues found was that the “independent” group chose songs that differed significantly from those chosen in the “influence” groups, which in turn differed significantly from one another. In their paper, the sociologists call the eight different networks “worlds,” and they mean this in the philosophical sense of possible worlds: each network began from the same starting point with the same universe of songs, but each of these independent evolutionary environments yielded very different outcomes. A band called 52metro, for example, was #1 in one world but didn’t even rate the top ten in five of the other seven worlds. Similarly, a band called Silent Film topped one chart but was #3, #4, #7, or #8 (twice) on five of the charts, and was entirely left off the other two. “Quality”—if we define that to mean the independent group’s choices—did not seem to be entirely irrelevant: the top-rated song on their list, by a group called Parker Theory, made all eight of the “influence” lists and topped five of them. But of the other nine acts on the independent list, one was entirely shut out of the influence lists, and three made only two lists. On average, the top ten bands for the independent group made only four of the eight influence lists. Apparently they were drowned out, in the other worlds, by noise from the bandwagons.

The Mob Project was a self-conscious bandwagon—it advertised itself as a bandwagon, as a joke about conformity, and it lampooned bandwagons in doing so. But curiously, this seemed not to diminish its actual bandwagonesque properties. Indeed, if anything, the self-consciousness made it even more viral. The e-mails poured in: “I WANT IN.” “Request to mob, sir.” “Girls can keep secrets!” “Want to get my mob on.” In Boston’s first flash mob, entitled “Ode to Bill,” hundreds packed the greeting-card aisles of a Harvard Square department store, telling bystanders who inquired that they were looking for a card for their “friend Bill in New York.” By making a halfhearted, jesting attempt to elevate me to celebrity status, Boston had given the flash-mob genre an appropriately sly turn.

PEAK AND BACKLASH

The best attended of all the New York gatherings was MOB #6, which for a few beautiful minutes stifled what has to be the most ostentatious chain store in the entire city: the Times Square Toys “R” Us, whose excesses are too many to catalog here but include, in the store’s foyer, an actual operational Ferris wheel some sixty feet in diameter. Up until the appointed time of 7:18 p.m. the mobbers loitered on the upper level, among the GI Joes and the Nintendos and up inside the glittering pink of the two-floor Barbie palace. But then all at once the mob, five hundred strong, crowded around the floor’s centerpiece, a life-size animatronic Tyrannosaurus rex that growls and feints with a Hollywood-class lifelikeness. “Fill in all around it,” the mob slip had instructed. “It is like a terrible god to you.”

Two minutes later, the mob dropped to its knees, moaning and cowering at the beast behind outstretched hands; in doing so we repaid this spectacle, which clearly was the product of not only untold expenditure but many man-months of imagineering, with an en masse enactment of the very emotions—visceral fright and infantile fealty—that it obviously had been designed to evoke. MOB #6 was, as many bloggers pointed out pejoratively, “cute,” but the cuteness had been massed, refracted, and focused to such a bright point that it became a physical menace. For six minutes the upper level was paralyzed; the cash registers were cocooned behind the moaning, kneeling bodies pressed together; customers were trapped; business could not be done. The terror-stricken personnel tried in vain to force the crowd out. “Is anyone making a purchase?” one was heard to call out weakly. As the mob dispersed down the escalators and out into the street, the police were downstairs, telling us to leave, but we had already accomplished the task, had delivered what was in effect a warning.

Almost unanimously, though, the bloggers panned MOB #6. “Another Mob Botched” was the verdict on the blog Fancy Robot: “[I]nstead of setting the Flash Mob out in public on Times Square itself, as everyone had hoped, The Flash Master decided to set it in Toys ‘R’ Us, with apparently dismal results.” SatansLaundromat.com (a photo-blog that contains the most complete visual record of the New York project) concurred—“not public enough,” the blogger wrote, without enough “spectators to bewilder”—as did Chris from the CCE Blog: “I think the common feeling among these blogger reviews is: where does the idea go from here? . . . After seeing hundreds of people show up for no good reason, it’s obvious that there’s some kind of potential for artistic or political expression here.”

The idea seemed to be that flash mobs could be made to convey a message, but for a number of reasons this dream was destined to run aground. First, as outlined above, flash mobs were gatherings of insiders, and as such could hardly communicate to those who did not already belong. They were intramural play; they drew their energies not from impressing outsiders or freaking them out but from showing them utter disregard, from using the outside world as merely a terrain for private games. Second, in terms of time, flash mobs were by definition transitory, ten minutes or fewer, and thereby not exactly suited to standing their ground and testifying. Third, in terms of physical space, flash mobs relied on constraints to create an illusion of superior strength. I never held mobs in the open, the bloggers complained, in view of enough onlookers, but this was entirely purposeful on my part, for like Colin Powell I hewed to a doctrine of overwhelming force. Only in enclosed spaces could the mob generate the necessary self-awe; to allow the mob to feel small would have been to destroy it.

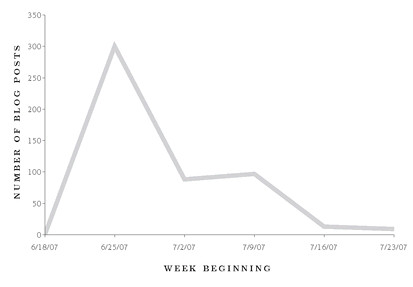

The following week, the interview request from the New York Times finally arrived. On the phone the reporter, Amy Harmon, made it clear to me that the Times knew it was behind on the story. The paper would be remedying this, she told me, by running a prominent piece on flash mobs in its Sunday Week in Review section. What the Times did, in fascinating fashion, was not just to run the backlash story (which I had been expecting in three to five more weeks) but to do so preemptively—i.e., before there was actually a backlash. Harmon’s piece bore the headline GUESS SOME PEOPLE DON’T HAVE ANYTHING BETTER TO DO, and its nut sentence ran: “[T]he flash mob juggernaut has now run into a flash mob backlash that may be spreading faster than the fad itself.” As evidence, she mustered the following:

E-mail lists like “antimob” and “slashmob” have sprung up, as did a Web site warning that “flashmuggers” are bound to show up “wherever there’s groups of young, naïve, wealthy, bored fashionistas to be found.” And a new definition was circulated last week on several Web sites: “flash mob, noun: An impromptu gathering, organized by means of electronic communication, of the unemployed.”

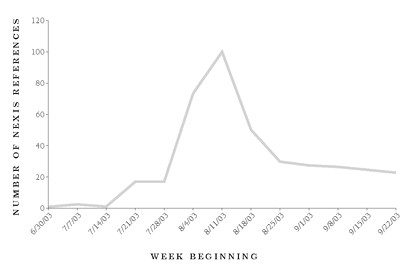

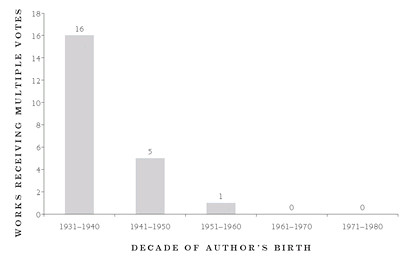

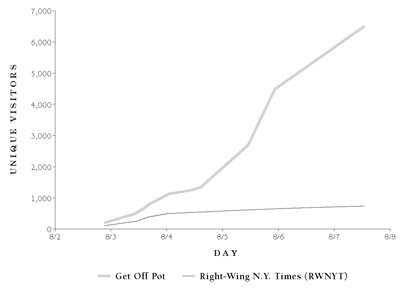

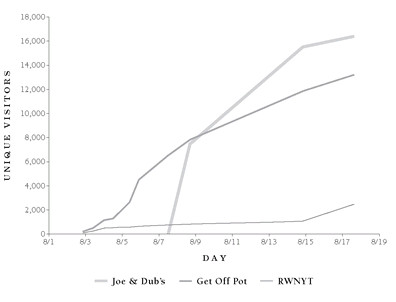

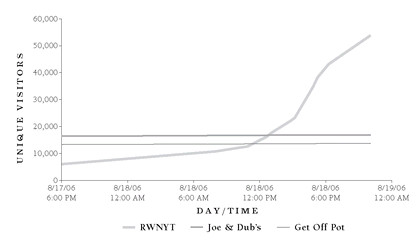

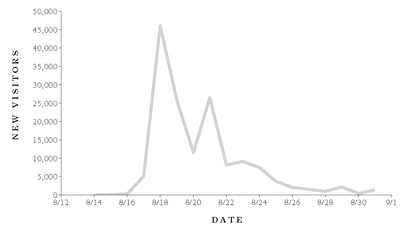

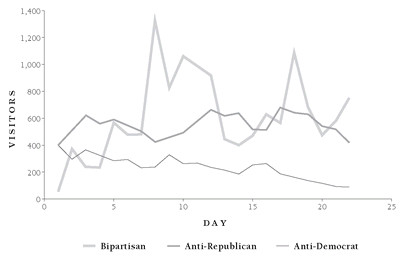

Two e-mail lists, a website, and a forwarded definition hardly constituted a “backlash” against this still-growing, intercontinental fad, but what I think Harmon and the Times rightly understood was that a backlash was the only avenue by which they could advance the story, i.e., find a new narrative. Whether through direct causation or mere journalistic intuition, the Times timed its backlash story (8/17/03) with remarkable accuracy:

FIG. 1-3—MEDIA REFERENCES TO FLASH MOBS, BY WEEK

PERMUTATIONS

Whether the backlash was real or not, it almost didn’t matter: there was no way for the flash mob to keep growing, and since the entire logic of the mob was to grow, the mob was destined to die. It couldn’t become political, or even make any statement at all, within the prescribed confines of a ten-minute burst. After six mobs, even conceiving of new enough crowd permutations started to feel like a challenge. We had done consumers collaborating (MOB #2) and consumers not collaborating (MOB #4). We had done an ecstatic, moaning throng (MOB #6) and a more sedate, absurdist one (MOB #5, in which the mob hid in some Central Park woods and simulated animal noises). In MOB #3, we had formed an inward-facing ring, and the idea of another geometrical mob appealed to me. I decided that MOB #7, set for late August, should be a queue.

Scouting locations had become in many ways the most pleasant task of the experiment. Roughly a week before each appointed day, having chosen the neighborhood in advance, I would stroll nonchalantly around the streets, looking at parks and stairways and stores, imagining a few hundred people descending at once upon each: How would such a mob arrive? How would it array itself? What would it do? Could it be beautiful? For MOB #7 I had picked Midtown, the commercial heart of the city, and so one Thursday in the late afternoon I took the subway uptown from my office to envision a crowd. I started on Fiftieth Street at Eighth Avenue and walked east, slipping through the milling Broadway tourists: too much real crowd for a fake crowd to stand out successfully. At Sixth Avenue I eyed Radio City Music Hall—an inviting target, to be sure, but the sidewalk in front was too narrow, too trafficked, for a mob comfortably to do its work. At Rockefeller Center, I eyed the Christmas tree spot, which was empty that August; but there was far too much security lurking about.

When I arrived at Fifth Avenue, though, and looked up at the spires of Saint Patrick’s Cathedral, I realized I had found just the place. A paramount Manhattan landmark with not much milling out front (since tourists were generally allowed inside); a sacred site, moreover, all the better for profaning with a disposable crowd. Crossing Fifth, I started around the structure from the north, following it to Madison and coming around the south side back to Fifth Avenue. I wanted a line, but it would be too risky to have it start at the front doors, since the cathedral staff that stood near the door might disperse it before it could fully form. But on each side of the cathedral, I noticed, was a small wooden door, not open to the public. If a line began at one of these doors, it could wind around the grand structure yet keep staff confused long enough for it to last the allotted five minutes. I stood at the corner of Fiftieth and Fifth, pondering what should happen after that. Flash mobbers were mostly people like me, downtown and Brooklyn kids, a demographic seldom seen in either Midtown or church. I loved the idea that Saint Patrick’s might somehow have inexplicably become cool, a place where if one lined up at the side door, one might stand a chance of getting in for—what? Tickets to see some stupidly cool band, I thought; and in the summer of 2003 the right band to make fun of was the Strokes, a band that had clearly been manufactured, Monkees-like, precisely for our delectation. It was settled, then: the mob would line up from the small door on the north side, wind around the front and down the south side. When passersby asked why they were lining up, mobbers were to say they “heard they’re selling Strokes tickets.”

Having settled the script that Thursday afternoon, I started back down Fiftieth, only to see a man walk out of the side door. He was a short man with curly hair tight to his head, and he wore a knit blue tie over a white patterned button-down shirt. Without acknowledging me in any way, he walked to the curb and then stood still, staring up at the sky. Back at Madison, I saw that since I last was at the corner, the stop-light had gone dark: an uncommon city sight. As I headed south down Madison, weaving through accumulating traffic crowding crosswalks, more people began to trickle out of the office buildings, looking dazed, rubbing their eyes in the sunlight. A woman dressed as Nefertiti was fanning herself. Customers lined up at bodegas to buy bottles of water. I thought I would walk south until I got out of the blackout zone; by Union Square I realized that the blackout zone was very large indeed, though it was not until I got back to work, walking the eleven flights up, that I was told the zone was not just all of New York City but most of the northeastern United States. Having spent my last half hour envisioning my own absurdist mob, I had suddenly stepped into a citywide flash mob planned by no one, born not of a will to metaspectacle but of basic human need. The power returned within the week, and MOB #7 went on exactly as planned; but to gather a crowd on New York’s streets never felt quite the same, and I knew my days making mobs were dwindling fast.

DOWN: BOREDOM REDUX

I wrote of boredom as inspiring the mob’s birth; but I suspect that boredom helped to hasten its death, as well—the boredom, that is, of the constantly distracted mind. This paradoxical relationship between boredom and distraction was demonstrated elegantly in 1989, by psychologists at Clark University in Worcester, Massachusetts. Ninety-one undergraduates, broken up into small groups, were played a tape-recorded reading of fifteen minutes in length, but during the playback for some of the students a modest distraction was introduced: the sound of a TV soap opera, playing at a “just noticeable” level. Afterward, when the students were asked if their minds had wandered during the recording, the results were as one might expect. Seventy percent of the intentionally distracted listeners said their minds had wandered, as opposed to 55 percent of the control group. What was far more surprising, however, were the reasons they gave: among those whose minds wandered, 76 percent of the distracted listeners said this was because the tape was “boring,” versus only 41 percent of their non-distracted counterparts. We tend to think of boredom as a response to having too few external stimuli, but here boredom was perceived more keenly at the precise time that more stimuli were present.

The experiment’s authors, Robin Damrad-Frye and James Laird, argue that the results make sense in the context of “self-perception theory”: the notion that we determine our internal states in large part by observing our own behavior. Other studies have supported this general idea. Subjects asked to mimic a certain emotion will then report feeling that emotion. Subjects made to argue in favor of a statement, either through a speech or an essay, will afterward attest to believing it. Subjects forced to gaze into each other’s eyes will later profess sexual attraction for each other. In the case of boredom, the authors write, “The reason people know they are bored is, at least in part, that they find they cannot keep their attention focused where it should be.” That is, they ascribe their own inattention to a deficiency not in themselves, or in their surroundings, but in that to which they are supposed to be attending.

Today, in the advanced stages of our information age, with our e-mail in-boxes and phones and instant messages all chirping for our attention, it is as if we are conducting Damrad-Frye and Laird’s experiment on a society-wide scale. Gloria Mark, a professor at the University of California at Irvine, has found that white-collar office workers can work for only eleven minutes, on average, without being interrupted. Among the young, permanent distraction is a way of life: the majority of seventh- to twelfth-graders in the United States say that they “multitask”—using other media—some or even most of the time they are reading or using the computer. The writer and software expert Linda Stone has called this lifestyle one of “continuous partial attention,” an elegant phrase to describe the endless wave of electronic distraction that so many of us ride. There is ample evidence that all this distraction impairs our ability to do things well: a psychologist at the University of Michigan found that multitasking subjects made more errors in each task and took from 50 to 100 percent more time to finish. But far more intriguing, I think, is how our constant distraction may be feeding back into our perception of the world—the effect observed among those Clark undergraduates, writ large; the sense, that is, that nothing we attend to is adequate, precisely because nothing can escape the roiling scorn of our distraction.

This, finally, is what kills nanostories, I think, and what surely killed the flash mob, not only in the media but in my own mind: this always-encroaching boredom, this need to tell ever new stories about our society and ourselves, even when there are no new stories to be told. This impulse itself is far from new, of course; it is a species of what Neil Postman meant in 1984 when he decried the culture of news as entertainment, and indeed of what Daniel Boorstin meant in 1961 when he railed against our “extravagant expectations” for the world of human events. What viral culture adds is, in part, just pure acceleration—the speed born of more data sources, more frequent updates, more churn—but far more crucially it adds interactivity, and with it a perverse kind of market democracy. As more of us take on the media mantle ourselves, telling our own stories rather than allowing others to tell them for us, it is we who can act to assuage our own boredom, our inadequacy, our despair by projecting them out through how we describe the world.

DISPERSAL

I announced that MOB #8, in early September, would be the last. The invitation e-mail’s subject was MOB #8: The end, and the text began with a FAQ:

Q. The end?

A. Yes.

Q. Why?

A. It can’t be explained. Like the individual mobs, the Mob Project appeared for no reason, and like the mobs it must disperse.

Q. Will the Mob Project ever reappear?

A. It might. But don’t expect it.

The site was a concrete alcove right on Forty-second Street, just across from the Condé Nast building. Participants had been told to follow the instructions blaring from a cheap boom box I had set up beforehand atop a brick ledge. I had prerecorded a tape of myself, barking out commands. I envisioned my hundreds of mobbers, following the dictates of what was effectively a loudspeaker on a pole. It was hard to get more straightforward than that, I thought.