Defending Your Digital Assets Against Hackers, Crackers, Spies, and Thieves (RSA Press)

Copyright © 2000 by The McGraw-Hill Companies, Inc. All rights reserved. Manufactured in the United States of America. Except as permitted under the United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written permission of the publisher.

0-07-212880-1

The material in this eBook also appears in the print version of this title: 0-07-212285-4.

All trademarks are trademarks of their respective owners. Rather than put a trademark symbol after every occurrence of a trademarked name, we use names in an editorial fashion only, and to the benefit of the trademark owner, with no intention of infringement of the trademark. Where such designations appear in this book, they have been printed with initial caps.

McGraw-Hill eBooks are available at special quantity discounts to use as premiums and sales promotions, or for use in corporate training programs. For more information, please contact George Hoare, Special Sales, at [email protected] or (212) 904-4069.

TERMS OF USE

This is a copyrighted work and The McGraw-Hill Companies, Inc. (“McGraw-Hill”) and its licensors reserve all rights in and to the work. Use of this work is subject to these terms. Except as permitted under the Copyright Act of 1976 and the right to store and retrieve one copy of the work, you may not decompile, disassemble, reverse engineer, reproduce, modify, create derivative works based upon, transmit, distribute, disseminate, sell, publish or sublicense the work or any part of it without McGraw-Hill’s prior consent. You may use the work for your own noncommercial and personal use; any other use of the work is strictly prohibited. Your right to use the work may be terminated if you fail to comply with these terms.

THE WORK IS PROVIDED “AS IS”. McGRAW-HILL AND ITS LICENSORS MAKE NO GUARANTEES OR WARRANTIES AS TO THE ACCURACY, ADEQUACY OR COMPLETENESS OF OR RESULTS TO BE OBTAINED FROM USING THE WORK, INCLUDING ANY INFORMATION THAT CAN BE ACCESSED THROUGH THE WORK VIA HYPERLINK OR OTHERWISE, AND EXPRESSLY DISCLAIM ANY WARRANTY, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO IMPLIED WARRANTIES OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. McGraw-Hill and its licensors do not warrant or guarantee that the functions contained in the work will meet your requirements or that its operation will be uninterrupted or error free. Neither McGraw-Hill nor its licensors shall be liable to you or anyone else for any inaccuracy, error or omission, regardless of cause, in the work or for any damages resulting therefrom. McGraw-Hill has no responsibility for the content of any information accessed through the work. Under no circumstances shall McGraw-Hill and/or its licensors be liable for any indirect, incidental, special, punitive, consequential or similar damages that result from the use of or inability to use the work, even if any of them has been advised of the possibility of such damages. This limitation of liability shall apply to any claim or cause whatsoever whether such claim or cause arises in contract, tort or otherwise.

DOI: 10.1036/0072128801

From Randy Nichols

To: Montine, Kent, Robin, Phillip, Diana and my pumpkin Michelle—the Bear is back!

To: Gladys and Chuck—I thought you guys retired.

To: My Grandkids—Ryan, Kara and Brandon, take the future in your hands.

To: WITZEND and Waldo T. Boyd, two of the best editors in the country.

From Dan and Julie Ryan

To: Mom & Dad; R, C & G; Chris, Alleen, & Jake; Andy; Jon & Sandy.

Contents

Preface

Information Trends

Three information trends make this book necessary: 1) a huge amount of valuable information is being created, stored, processed, and communicated using computers and computer-based systems and networks; 2) computers are increasingly interconnected, creating new pathways to valuable information assets; and 3) threats to information assets are becoming more widespread and more sophisticated.

These three trends have influenced significant developments in three diverse computer security areas: 1) Infocrimes and Digital Espionage (DE), 2) Information Security (INFOSEC) and 3) Information Warfare (IW). This book studies the interrelationships and technologies associated with each area.

Infocrimes and Digital Espionage

Infocrime refers to any crime in which the criminal’s target is information. Digital Espionage (DE) is the specific-intent infocrime of attacking, by computer means, personal, commercial, or government information systems and assets for the purpose of theft, misappropriation, destruction, and disinformation for personal or political gain. This crime has become an enormous problem with the growth of the Internet. The authors’ definition of Digital Espionage covers a range of activities: DE is really a family of specific-intent infocrimes. Some examples of DE cases prosecuted under the 1996 computer crime statute, 18 U.S.C. § 1030, include:

- Eugene E. Kashpureff for unleashing software on the Internet that interrupted service for tens of thousands of Internet users worldwide.

- An Israeli citizen for hacking United States (including Whitehouse.gov) and Israeli computers.

- A chief computer network program designer for a $10 million extortion scheme based on a computer bomb.

- A juvenile hacker who cut off communications at the FAA tower at a regional airport.

- Reuters’s indictment for digital espionage of rival Bloomberg’s operating code, worth millions of dollars.

According to 1999 FBI crime statistics, the DOD and other government offices that use computers are attacked up to a hundred times a day. One particularly irritating mode of DE is the use of viruses and other malicious code. One virus researcher has catalogued over 53,000 virus signatures by “families” and their variants. He has shown how a search of the Internet would yield at least four different free “automatic” virus-making kits that require little brainpower to activate and unleash for malicious purposes. Commercial sites are “easy pickings” for the experienced computer criminal.

The Players

Computers are everywhere and they affect everything we do. As long as there have been computers, there have been hackers. Until recently, “hacker” was not considered a dirty word. Now hackers are mild peppers compared to crackers: hackers break in for the thrill of it, and crackers break in for the profit of it. In the 21st century, we can expect that all fraud against property will be perpetrated by computer means. Thieves do not need to enter our homes to steal. Everything we own, invest, bank, or hold title to is referenced on our personal computers or in the computer databanks of the organizations with which we do business. And then we have traditional spies. Their targets may be any of a variety of personal, commercial, or government treasures.

No matter what we call them—hackers, crackers, spies, or thieves—they are breaking the law and violating your property and rights. It is your responsibility and duty to stop them! It is your right to protect your personal data, your computers, or your organization’s information. We trust this book will provide tools to assist you in meeting these security goals.

INFOSEC Countermeasures

Digital Espionage can be thwarted by the appropriate and effective use of INFOSEC technologies. INFOSEC is a maturing science that has broad ranging applications in business and government. Every commercial venture that either markets its products internationally or uses computer networks for global communications and customer services must be concerned with protecting its assets and its customers’ information from a wide variety of attacks. This concern is increased when the Internet is the primary communications medium.

At one time the sole province of government and military concerns, INFOSEC technologies have expanded in many directions and dimensions: recreational, scientific, biometric, educational, tactical, strategic, and global. The well-informed company (and government) recognizes the value that INFOSEC adds to the security of its computer information assets. Unfortunately, only a minority of corporate managers has deployed INFOSEC tools to protect their company computer communications and databases. This trend seems to be reversing dramatically as businesses expand into global markets and the cost of installing appropriate computer security systems decreases.

Information Warfare

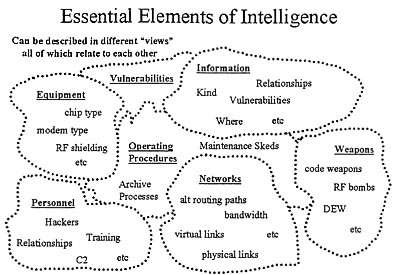

Information warfare (IW) operations consist of those actions intended to protect, destroy, exploit, corrupt, or deny information or information resources to achieve a significant advantage over an enemy. IW is based on the value of a target and the cost to destroy or disable it. IW involves much more than computer and computer networks. It encompasses information in any form and transmitted over any media. IW includes operations against information, support systems, hardware, software and people. It is arguably more damaging than conventional warfare.

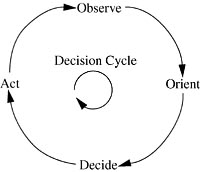

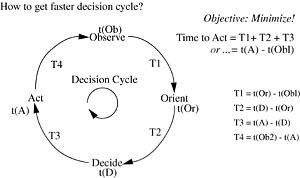

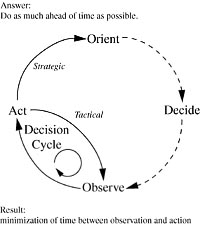

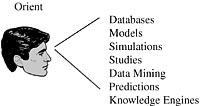

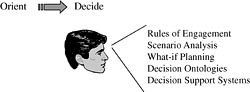

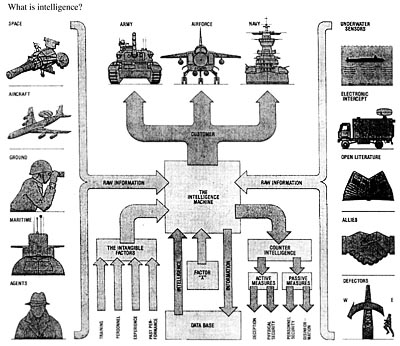

The purview of IW is very large. The concepts of information security, INFOSEC weapons, intelligence, countermeasures, and policy need to be applied to the more global view of national security. A systems methodology is required to analyze the effects of various security contributions and to understand information warfare in terms of the enemy’s decision cycle. Information targets have value, as do the countermeasures indicated to the defender, and their risk assessment may be politically, technologically, or behaviorally based. The book also studies a model for viewing the overlapping technologies of the OSI framework, practical implementations, and available services in the cyberspace battlefield.

The Rationale for this Book

Productivity, and hence competitiveness, is inextricably tied to computer connectivity, so we are increasingly vulnerable to the corruption, destruction, or exploitation of our valuable information assets and systems. Electronic access to vast amounts of data and critical infrastructure control is now possible from almost anywhere in the world. We are past the point of knowing the identity of everyone to whom our systems are connected. The sheer volume of data in our information systems makes these systems increasingly vulnerable and potentially lucrative targets for disgruntled employees, foreign intelligence services, hackers and terrorists, and competing commercial interests. We are only in the early stages of applying and understanding the new information technologies across our society and many questions remain unanswered. Neither the ethics that define acceptable behavior on-line for an Internet society nor the legal structures that would punish misbehavior have been fully developed.

The threat to our information and systems continues to grow, and it is no longer limited to high school computer geeks. Criminals use systems and networks to steal valuable information to sell, or they eliminate the middlemen, illicitly enter the computers of banks and other financial institutions, and steal the money directly. Criminal hackers have transferred millions of dollars using bogus wire transfers to offshore banks, where the money can be retrieved with less risk.

Today, information technology is evolving at a faster rate than information security technology. Technological advances in optical communications, for example, have led to unprecedented improvements in communications. Hair-thin strands of silica glass have spawned a communications revolution. A similar picture can be drawn for the computer industry where personal computer and workstation-based technology is reported to roll over every eighteen months. In fact, the technology is so fast paced that system designers can barely complete system design calculations before the manufacturer wants to update certain specifications. Databases, operating environments, and even operating systems are being distributed across networks.

These technological transformations have resulted in improved network services, performance, reliability, and availability as well as significantly reduced operating costs due to the more efficient utilization of network resources. And—most important to this discussion—interoperability of dissimilar computers in multi-vendor environments is paving the way for transparent information sharing capabilities and a globally integrated information infrastructure.

Unfortunately, testing and validation of the security of large systems can take years. The technologies and architectures that were advancing the state of the art when existing security policies were written are now obsolete. Methods carefully crafted to secure computers that stood alone have been shown to be wholly inadequate when computers are networked.

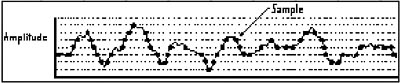

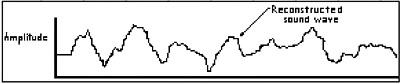

In addition to the natural evolution of information technology, we are also undergoing a revolution that is creating unprecedented security challenges for information systems. For example, the development and operation of massively parallel processing and neural networks, artificial intelligence systems, and multimedia environments present problems beyond any that formed our current information systems security experience base. Policies and standards applying to data formats and data labeling must be reviewed and adjusted as needed to incorporate the necessary information systems security. Standards for security labeling of voice and video notes and files are needed. Doctrines for manipulating and combining formats have yet to be developed.

There is also a natural opposition between security management and network management. Network managers need to know who is using the system and how they are using it. They need to know where data is coming from and where it is going, and at what rates, in order to maximize the efficiency of their networks. Security managers—recognizing the value of such information to those who would corrupt, exploit or destroy our information assets and systems—prefer these things to be confidential. These requirements are difficult to reconcile.

High technology is readily available on the open market and so is appearing in the hands of narcotics traffickers and terrorists. The technology needed by these miscreants is not very expensive, and the understanding needed to use the technology in support of illicit goals is available at the undergraduate levels of our universities. Even governments are getting into the field, using the capabilities developed during the cold war to obtain information from business travelers to pass to local competitors. Developing the capability to commit infocrime or infoterrorism or to wage infowar is much less expensive than developing nuclear, chemical or biological weapons of mass destruction. These new threats have considerable disposable income behind them, which can be applied to anything from co-opting our authorized users to gain access to our confidential information to attacking our technology-based national infrastructure.

Fortunately, we are not helpless. We have powerful INFOSEC tools, such as cryptography, to help us protect information that is being stored on our computers or which is being transferred across our networks. For those times when we cannot use cryptography to protect our information assets—mostly when we are creating or working with them on-line—we have computer science to help us build and operate systems and networks. These systems deny illicit access to those who would misuse or destroy our data, and help us detect intrusions by outsiders or abuses by those who have been granted authorized access to some information or systems. Further, that same science can facilitate corrective action and recovery from attacks.

Target Audience

Defending Your Digital Assets Against Hackers, Crackers, Spies and Thieves is aimed at the manager and policy maker, not the specialist. It was written for the benefit of commercial and government managers and policy-level executives who must exercise due diligence in protecting the valuable information assets and systems upon which their organizations depend. Aimed at the information-technology practitioner, it is valuable to CIOs, operations managers, technology managers, agency directors, military commanders, network managers, database managers, programmers, analysts, EDI planners and other professionals charged with applying appropriate INFOSEC countermeasures to stop all forms of Digital Espionage. This book is suitable for a first-year graduate course in computer security, for computers-in-business courses, and for Engineering/MBA programs. There are plenty of resources in the bibliography, URL references, and textual leads to further reading.

Benefits to the Reader

Defending Your Digital Assets Against Hackers, Crackers, Spies and Thieves will provide the reader with an appreciation of the nature and extent of the threat to information assets and systems and an understanding of the:

- Nature and scope of the problems that arise because of our increasing dependence on automated on-line information systems

- Steps that can and should be taken to abate the risks encountered when on-line operations are adopted as part of an organization’s technology infrastructure

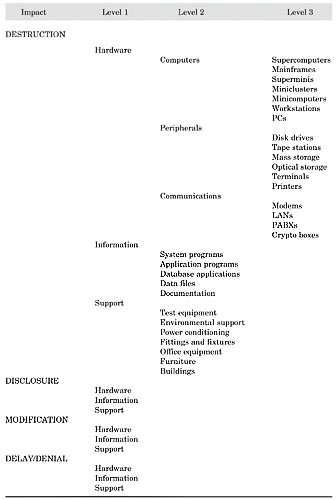

- Processes used to protect information assets from disclosure, misuse, alteration, or destruction

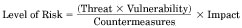

- Application of risk management to making key decisions about the application of scarce resources to information assurance

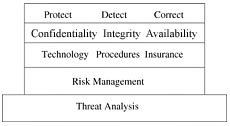

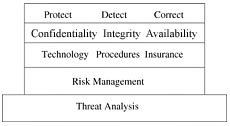

- Security architectures that can be used in designing and implementing systems that protect the confidentiality, integrity, and availability of valuable information assets

- Issues associated with managing and operating systems designed to protect trade secrets and confidential business information from industrial spies, organized criminals, and infoterrorists

- Practical countermeasures that can be used to protect information assets from digital attacks

Defending Your Digital Assets Against Hackers, Crackers, Spies and Thieves covers INFOSEC, security policy, risk management, enterprise continuity planning processes, cryptography, protocols, key management, public key infrastructure (PKI), virtual private networks, certificate authorities and digital signatures, technologies for identification, authentication and authorization on the World Wide Web, secure electronic commerce, biometrics, and digital warfare.

The book provides resources for applying INFOSEC to protect private, commercial and government computer systems.

Plan of the Book

There is a calculated momentum to this book. PART 1 introduces the surprising range and level of Digital Espionage against commercial and government computer systems. PART 2 presents the theoretical foundations of INFOSEC technology and shows its relevance to the protection of economic and military operations. Among the topics presented are risk management, security policy, privacy and security verification of computer systems and networks. PART 3 should be of special interest to managers who have the authority to make the vital security decisions required to protect their organizations. PART 3 is the core group of chapters defining INFOSEC practical countermeasures. It discusses cryptography, public key systems, access control, digital signatures and certificate authorities, procedures for permission management, identification, authentication and authorization on the World Wide Web, virtual private networks (VPN), and biometrics. PART 4 discusses the enterprise management process before, during and after a digital attack. Special attention is devoted to protecting the web server. PART 5 expands the concept of DE and INFOSEC principles to the global arena and information warfare (IW) operations. It discusses the requirements of information warfare, weapons and methods of employment. The process of building trust on-line through a Public Key Infrastructure (PKI) is reviewed in depth. Finally, as an expansion to Chapter 7 privacy issues, the authors look at politics of cryptography, one of the technology pillars of INFOSEC. The Appendices cover information technology, legal statutes and political issues, digital signatures, certificate authorities, Executive Orders, U.S. Title 18 Code, and compare virtual private networks (VPNs).

PART 1: Digital Espionage, Warfare, and Information Security (INFOSEC)

PART 1 introduces the family of infocrimes that constitute Digital Espionage and their relationship to the restricted implementation of information security (INFOSEC) technologies. This is covered in two chapters:

1. Introduction to Digital Espionage: This chapter introduces key topics: digital espionage (DE), scope of computer crime, infocrime, problems in jurisdiction and identity, digital warfare, anatomy of the computer crime and criminal factors relating to computer crime and countermeasures thereof, prosecution tools and encryption.

2. Foundations of Information Security (INFOSEC)—Chapter 2 develops the accepted theoretical foundation of information security, including confidentiality, integrity, and availability; the relevance of information security to economic and military operations; and the consequences of failure to maintain INFOSEC goals. Donn Parker’s brilliant extensions to the traditional INFOSEC theory are reviewed.

PART 2: INFOSEC Concepts

PART 2 presents the INFOSEC concepts of risk management, information security policy, privacy, intellectual capital and multilevel secure systems.

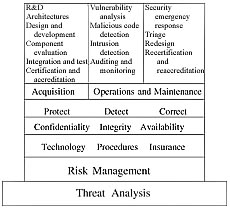

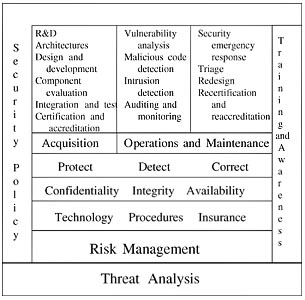

3. Risk Management and the Architecture of Information Security (INFOSEC)—Chapter 3 discusses risk management, threats and reducing vulnerability of information systems.

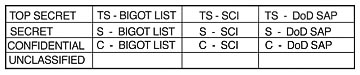

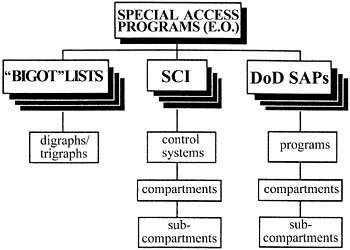

4. Information Security Policy—Chapter 4 argues for establishing an effective security policy for the nation and organizations. It discusses information classification management and the requirement for standards of due diligence.

5. Privacy in a Knowledge-Based Economy—Chapter 5 explores the tradeoffs of information security with our notions of privacy.

6. Security Verification of Systems and Networks—Chapter 6 presents the cost/benefits and requirements for accreditation, evaluation and certification for multi-level secure systems.

PART 3: Practical Countermeasures

INFOSEC is a family of technologies used as countermeasures against digital invasions and espionage. The six chapters of PART 3 present practical countermeasures:

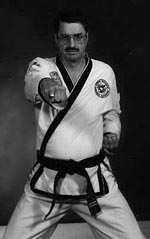

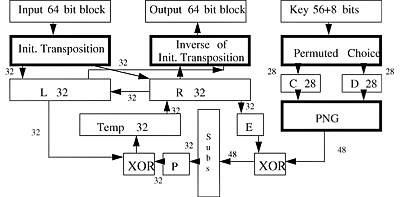

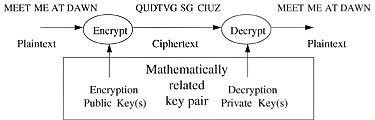

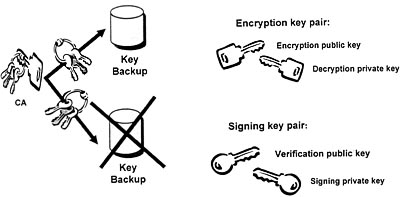

7. Cryptography—Chapter 7 presents modern cryptography concepts, key management, Public Key Infrastructure (PKI), and comparison of the well-known RSA and elliptic curve cryptography (ECC) public key technologies. Hardware and software implementation issues are discussed.

8. Access Control—Two Views—Chapter 8 reviews the access control mechanisms normally used to limit access to systems and networks and to audit the people who use them.

9. Digital Signatures and Certification Authorities—Technology, Policy, and Legal Issues—Chapter 9 covers the complex topic of digital signatures and certificate authorities used for authentication of computer systems and users.

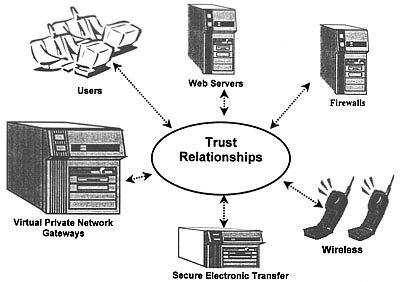

10. Permissions Management: Identification, Authentication, and Authorization (PMIAA)—Chapter 10 is dedicated to permissions management and identification, authentication and authorization for secure commerce. It discusses protection systems and secure electronic commerce on the World Wide Web. Web site vulnerability, Internet and on-line systems, attacks, counter-measures, deployment, transaction security and technologies are explored.

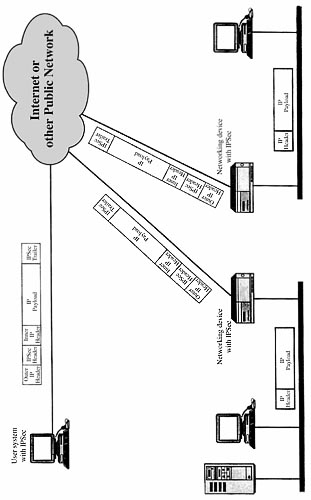

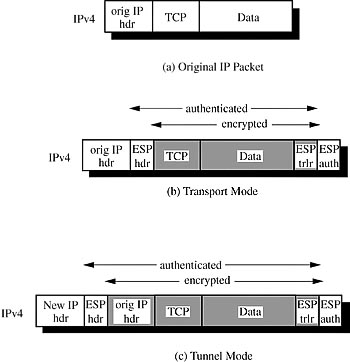

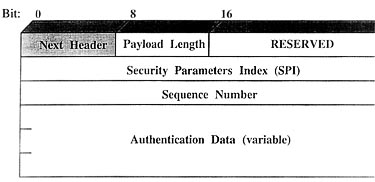

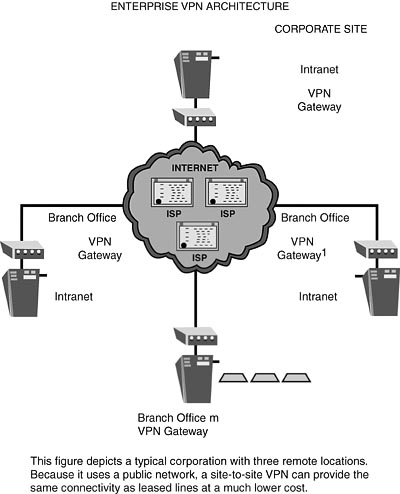

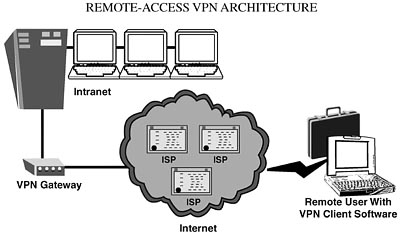

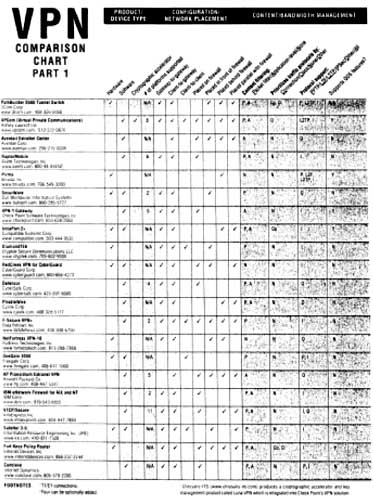

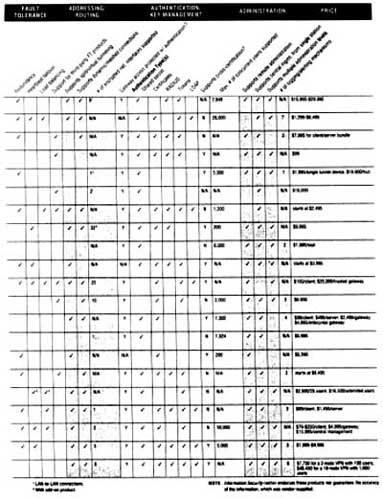

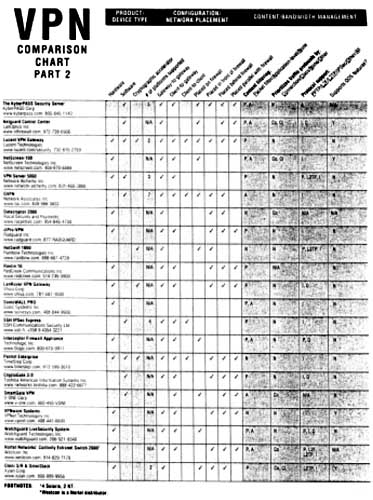

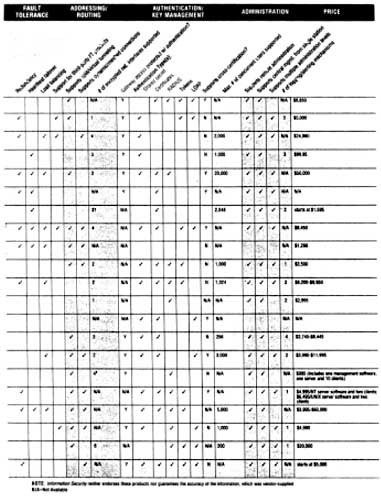

11. Virtual Private Networks—This chapter discusses the use, standards, and security of VPN technologies for secure point-to-point and remote-to-host communications. It covers the VPN implementation hurdles of performance, authentication/key management, fault tolerance, reliable transport, network placement, addressing/routing, administrative management, and interoperability.

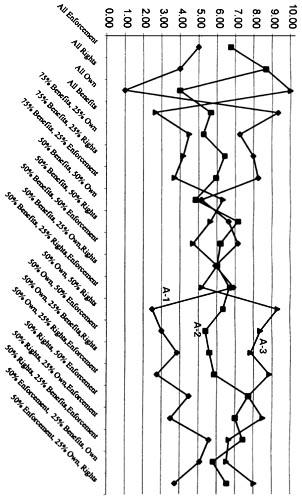

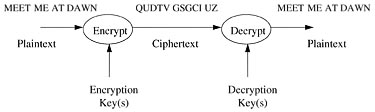

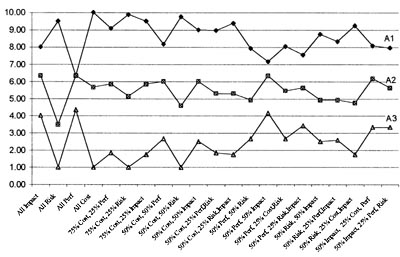

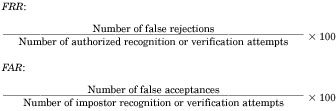

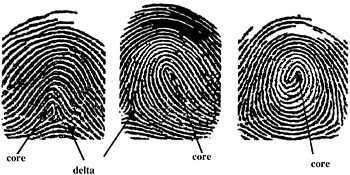

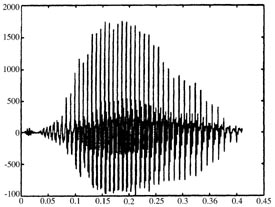

12. Biometric Countermeasures—Chapter 12 compares eleven different biometric technologies and their use as secondary counter-measures.

PART 4: Enterprise Continuity Planning

PART 4 acknowledges that Digital Espionage against commercial and government computer information assets is prevalent. It discusses how to manage the defense before, during, and after an attack.

13. Before the Attack: Protect and Detect—Chapter 13 covers the process of vulnerability assessment, countermeasures, backups and beta sites, anti-viral measures, training and awareness, auditing and monitoring information transactions, system and network security management, encryption, and liaison with law enforcement and intelligence communities.

14. During and After the Attack; Special Consideration—The Web Server—Chapter 14 looks at the manager in the heat of the attack. It discusses system and network triage and reconstituting capability at a backup location. Protection of the web server is critical because it is a prime target.

PART 5: Order of Battle

PART 5 scales the DE/INFOSEC process to the level of war—INFOWAR. It adds the dimension of building trust online via a public key infrastructure (PKI) and assesses the politics of implementing and exporting encryption systems and the impact of export law on U.S. industry.

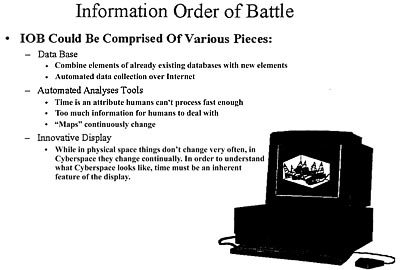

15. The Big Picture—Chapter 15 combines the concepts of information security, INFOSEC countermeasures and policy. It applies them to the more global view of national security. A systems methodology to analyze the effects of various security contributions is presented.

16. Information Warfare—Chapter 16 discusses IW, the cyberspace environment, and their implications for conventional war strategies. It examines information warfare in terms of the enemy’s decision cycle and looks at the way value is assigned to information targets and the resulting countermeasures indicated to the defender. Risk assessment may be politically, technologically or behaviorally based.

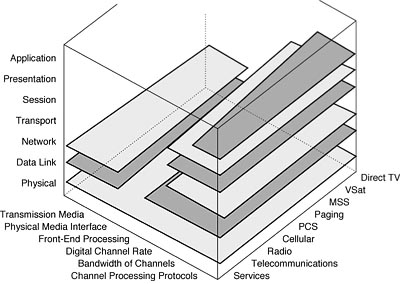

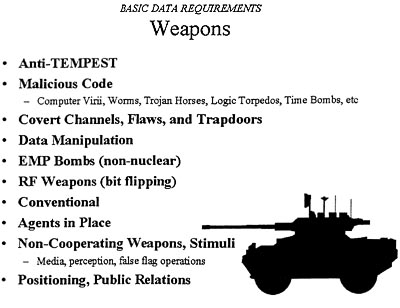

17. Information Warfare Weapons, Intelligence and the Cyberspace Environment—Chapter 17 is a fascinating look at the weapons, intelligence and cyberspace environment for IW. A multi-dimensional model of the services (telecommunications, radio, cellular communications, personal communications systems, paging, mobile satellite services, very small aperture terminals and direct TV) is overlaid with the theoretical OSI framework and practical hardware implementations.

18. Methods of Employment—Chapter 18 continues the discussion by adding planning, targeting, and deployment issues for effective IW. The focus is on the unique features of IW. It looks at deployment issues such as targeting, the Cyberwar Integrated Operations Plan (CIOP), candidate targets, intelligence preparation, comprehensive strategic information, weapons capabilities, metrics for success, possible enemies, phases of conflict, and the uniqueness of IW.

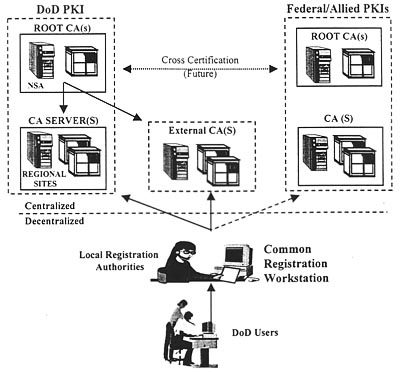

19. Public Key Infrastructure: Building Trust Online—Chapter 19 investigates the application, management and policy considerations for building trust online using a Public Key Infrastructure (PKI). It then turns to the government-wide effort to deploy a functional PKI by 2001. There is a serious effort to build trust online; however, the challenges of workable standards and interoperable equipment have not been fully solved.

20. Cryptographic Politics: Encryption Export Law and Its Impact on U.S. Industry—Chapter 20 recognizes the role that cryptography plays in both government and commercial computer countermeasures. Cryptography exists in a particularly active political environment. On 16 September 1999, President Clinton ordered the export controls on cryptography to be relaxed [after 3 years of tightening efforts]. A review of the laws governing cryptography and their impact on U.S. industry is presented.

The Appendices cover INFOSEC technology, Executive Orders, electronic signatures, certificate authorities, criminal statutes, and offer a comparison of commercial VPN offerings.

Internet Mailing List

An Internet mailing list has been set up through COMSEC Solutions, LLC so that managers who want to keep current or instructors using this book can exchange information, suggestions, and questions with each other and the authors. To subscribe, send an e-mail message to [email protected] with a message body of “subscribe Digital-Espionage.” Further instructions will be returned for posting messages and receiving updates.

Acknowledgments

Books such as this are the products of contributions by many people, not just the musings of the authors. Defending Your Digital Assets Against Hackers, Crackers, Spies, and Thieves has benefited from review by numerous experts in the field, who gave generously of their time and expertise. The following people reviewed all or part of the manuscript: William E. Baugh, Jr., Vice President and General Manager, Advanced Network Technologies and Security for SAIC; Dorothy Denning, Professor of INFOSEC Technology at Georgetown University; Jules M. Price (WITZ END), an expert on cipher technology; Charles M. Thatcher, Distinguished Professor of Chemical Engineering, University of Arkansas; Scott Inch, Associate Professor of Advanced Mathematics, Bloomsburg University; Jim Lewis, Director of Cryptographic Export Policy for the Bureau of Export Administration; Philip Zimmermann, internationally known cryptographer and inventor of PGP; Cindy Cohn, attorney for Professor Daniel J. Bernstein; Waldo T. Boyd, senior editor, owner of Creative Writing, Pty. and senior cryptographer; Donn B. Parker, author of Fighting Computer Crime; Mark Zaid, Attorney at Law for the James Madison Project; Arthur W. Coviello, Jr., President of RSA Security, Inc.; Sandra Toms Lapedis, Director of Communications for RSA; Andrew Brown, Director of Marketing at RSA; Elizabeth M. Nichols, CFO for COMSEC Solutions; Kevin V. Shannon, Executive Vice President and Damian Fozard, CEO of InvisiMail; and Penny Linskey, supervising editor; Jennifer Perillo, managing editor, and Steven Elliot, senior executive editor for McGraw Hill Professional Books.

Several of Professor Nichols’ students from his 1999 graduate course in Cryptographic Systems: Application, Management and Policy at The George Washington University assisted with Chapters 19 and 20 and Appendix 16. We are grateful for the dedicated and enthusiastic assistance from Charlene Fareira, Mike Ross, Jim Luczka, Cornellis Ramey, Shafat Khan, Bill Kimbrough, Lynn Kinch, John Allen, Sami Mousa, Terry May, Harry Watson, Alexx Bahouri, Joan L. Bowers, Rebecca Catey, Chad Smith, and Joseph Wojsznski.

Among the many we have to thank, special recognition is due Nina Stewart, Tony Oettinger, Bob Morris, Devolyn Arnold, and Tom Rona who provided much of the inspiration that fostered our thinking. Mike McChesney, Steve Kent, and Steve Walker helped shape our understanding of the technologies and the business implications of their use. William Perry, as Secretary of Defense, and R. James Woolsey, as the Director of Central Intelligence, had the foresight and courage to charter the Joint DoD-DCI Security Commission, which explored the depths and breadths of the subject matter. Bill Stasior, Larry Wright, Fred Demech, and Larry Clarke provided opportunities and encouragement to us. Jim Morgan has been a friend, colleague, mentor, supporter and constructive critic for years. Finally, Montine Nichols deserves a commendation for her help on the Glossary and References and patience during the writing of this book. To these and many others we may have failed to give appropriate credit, we are grateful for their relevant ideas, advice and counsel. Any mistakes or errors are, of course, our own. Please advise the authors of errors by e-mail to [email protected] and we will do our best to correct the errors and publish an errata list on the www.COMSEC-Solutions.com listserver.

Best to all,

Randy Nichols

Professor, The George Washington University

and

President

COMSEC Solutions, LLC

Cryptographic / Anti-Virus / Biometric Countermeasures

Email: [email protected]

Website: http://www.comsec-solutions.com

Carlisle, Pennsylvania

December 1999

Dan Ryan, Esq.

E-mail: [email protected]

Website: http://www.danryan.com

Annapolis, Maryland

December 1999

Julie J.C.H. Ryan

President

Wyndrose Technical Group

E-mail: [email protected]

Website: http://www.julieryan.com

Annapolis, Maryland

December 1999

List of Contributors

The authors express their gratitude to the talented review team who contributed so much of their time and expertise to make this book a success. With deep respect, we present the qualifications of our teammates.

Authors

Randall K. Nichols

Randy Nichols (Supervising Author/Editor) formed COMSEC Solutions, LLC in 1997 [www.comsec-solutions.com]. He has 35 years of experience in a variety of leadership roles in cryptography and computer applications in the engineering, consulting, construction, and chemicals industries. COMSEC Solutions provides INFOSEC support to more than 200 commercial, educational and U.S. government clients. In addition to CEO duties for COMSEC Solutions, Nichols serves as Series Editor for Encryption and INFOSEC for McGraw Hill Professional Books and lectures at the prestigious The George Washington University in Washington, DC.

Defending Your Digital Assets Against Hackers, Crackers, Spies and Thieves is Nichols’ fourth book on the subjects of cryptography and INFOSEC countermeasures. Nichols’ previous books, The ICSA Guide to Cryptography (McGraw-Hill Professional Books, 1998, ISBN 0-07-913759-8) and Classical Cryptography Course, Volumes I and II (Aegean Park Press, 1995, ISBN 0-89412-263-0 and 1996, ISBN 0-89412-264-9, respectively) have gained recognition and industry respect for both Nichols and COMSEC Solutions.

Professor Nichols teaches graduate level Cryptography and Systems Applications Management and Policy at The George Washington University in Washington, D.C. He has taught cryptography at the FBI National Academy in Quantico, VA. Mr. Nichols is a professional speaker and regularly presents material at professional conferences, national technology meetings, schools and client in-house locations on INFOCRIME/INFOSEC/INFOWAR.

Nichols previously served as Technology Director of Cryptography and Biometrics for the International Computer Security Association (ICSA). Nichols (a.k.a. LANAKI) has served as President and Vice President of the American Cryptogram Association (ACA). He has been the Aristocrats’ Department Editor for the ACA’s bimonthly publication “The Cryptogram,” since 1985.

Nichols holds BSChE and MSChE degrees from Tulane University and Texas A & M University, respectively, and an MBA from University of Houston. In 1995, Nichols was awarded a 2nd DAN (Black Belt) by the American and Korean Tae Kwon Do Moo Duk Kwan Associations.

Daniel J. Ryan

Daniel J. Ryan is a lawyer, businessman, and educator. Prior to entering private practice, he served as Corporate Vice President of Science Applications International Corporation with responsibility for information security for Government customers and commercial clients who operate worldwide and must create, store, process, and communicate sensitive information and engage in electronic commerce. At SAIC he developed and provided security products and services for use in assessing security capabilities and limitations of client systems and networks, designing or re-engineering client systems and networks to ensure security, enhancing protection through a balanced mix of security technologies, detecting intrusions or abuses, and reacting effectively to attacks to prevent or limit damage.

Prior to assuming his current position, Mr. Ryan served as Executive Assistant to the Director of Central Intelligence with primary responsibility as Executive Secretary and Staff Director for the Joint DoD-DCI Security Commission. Earlier, he was Director of Information Systems Security for the Office of the Secretary of Defense serving as the principal technical advisor to the Secretary of Defense, the ASD (C3I) and the DASD (CI & SCM) for all aspects of information security. He developed information security policy for the Department of Defense and managed the creation, operation and maintenance of secure computers, systems and networks. His specific areas of responsibility spanned information systems security (INFOSEC), including classification management, communications security (COMSEC) and cryptology, computer security (COMPUSEC) and transmission security (TRANSEC), as well as TEMPEST, technical security countermeasures (TSCM), operational security (OPSEC), port security, overflight security and counterimagery.

In private industry, he was a Principal at Booz•Allen & Hamilton where he served as a consultant in the areas of strategic and program planning, system operations and coordination, data processing and telecommunications. At Bolt Beranek & Newman, he supplied secure wide-area telecommunications networks to Government and commercial Division of TRW in Washington, D.C., and he was Director of Electronic Warfare Advanced Programs at Litton’s AMECOM Division. He headed a systems engineering section at Hughes Aircraft Company where he was responsible for the design, development and implementation of data processing systems. He began his career at the National Security Agency.

Mr. Ryan received his Bachelor of Science degree in Mathematics from Tulane University in New Orleans, Louisiana, a Master of Arts degree in Mathematics from the University of Maryland, a Master of Business Administration degree from California State University and the degree of Juris Doctor from the University of Maryland. He is admitted to the Bar in the State of Maryland and the District of Columbia, and has been admitted to practice in the United States District Court, the United States Tax Court, and the Supreme Court of the United States. He has been Certified by the United States Government as a Professional in the fields of Data Systems Analysis, Mathematics and Cryptologic Mathematics.

Julie J. C. H. Ryan

Julie J. C. H. Ryan began her career as an intelligence officer after graduating from the Air Force Academy in 1982. She transitioned from active duty Air Force to civilian service with the Defense Intelligence Agency, where she served as an all-source intelligence analyst studying the capabilities, intentions and expertise of Soviet Union and Warsaw Pact military forces in the areas of C3, EW, and C3 countermeasures (C3CM). Her work at DIA included supporting the early attempts to understand how to develop multi-level secure computer systems, including directing and monitoring the design, development, and delivery of secure data bases. Her responsibilities spanned budget and program management techniques, system design and engineering practices, data base design, intelligence data requirements, information security concepts, user capabilities and knowledge base, and human engineering. From that experience, Julie developed an abiding interest in enterprise-wide security solutions, creating and deploying an appropriate mixture of technologies, tools, and procedures to address the security requirements of information assets and systems.

Since leaving government service, Julie Ryan has continued working in security engineering. At Sterling Federal Systems she performed comparative functional, technical and cost feasibility analyses of possible alternative system implementations for the Intelligence Community and the military services. At Booz•Allen & Hamilton, she served as a special consultant to highly classified Government programs, performing technical analyses, test and system engineering, and long-range strategic planning. At Welkin Associates, she assisted in advanced systems design and development for national- and theater-level requirements satisfaction, including architecture studies and investigations of new and unique uses for emerging technologies in secure information management, data processing and product distribution. For TRW, Julie served as an Information Warfare specialist, coordinating Information Warfare-related activities horizontally across the lines of businesses, networking applicable capabilities and competencies, and participating in strategic planning processes. She prepared white papers, briefings and publications on Information Warfare for both internal educational and strategic planning purposes as well as for customer briefings and work proposals. These included an Information Order of Battle concept briefing and a concept exploration of intelligence support to information warfare.

Julie participated as a Member of the Naval Studies Board of the National Academy of Sciences in studying technology implications to warfare and national security applications. She also supports the Assistant Secretary of Defense (C3I) in Information Warfare studies, evaluations and planning.

Julie currently serves as President of the Wyndrose Technical Group, Inc., a company providing information technology and security consulting services. She specializes in security systems engineering, creating solutions for specific operational environments based on realistic assessments of the organization’s security requirements. Having received a Masters Degree from Eastern Michigan University’s College of Technology, she is now pursuing a Doctor of Science from the Engineering Management and Systems Engineering Department of the School of Engineering and Applied Sciences (SEAS), George Washington University. Julie teaches graduate-level classes in engineering and technology for GWU and is researching information security management practices for her dissertation.

Randy Nichols

Dan Ryan

Julie J.C.H. Ryan

COMSEC Solutions Review Team

Waldo T. Boyd

Waldo was not always President of Creative Writing, Inc. [[email protected]] or vigorously editing Defending Your Digital Assets Against Hackers, Crackers, Spies and Thieves. The U.S. Navy lopped off Waldo’s blond curls at age 18 and converted his 80 wpm typing into radio operating in 1936. After attending technical and engineering schools, he was assigned to Sydney University (Australia) ECM labs (electronic counter-measures) for research in crypto, underwater, aircraft, ship and sub ECM. Since his Secret clearance required line officer or else, he left the ranks to become a Warrant Radar Electrician.

Waldo resigned his commission in 1947, and became tech writer and teacher of ECM to Air Force officers as a civilian, Berlin Airlift. He spent nine years at Aerojet-General Rocket-Engine plants as manager technical manuals dept, produced over 100 missile tech manuals on Titan and other systems. Edited magnetic air and undersea detection manuals under contract for U.S. Air Force, computer texts, wrote six books for Simon & Schuster, H. W. Sams, etc., general and youth readership. Wrote weekly science articles for two syndicates. “Retired” at age 62—he continues to write and edit selectively to the present day.

His publication credits include seven titles:

Your Career in the Aerospace Industry, Julian Messner Div., Simon & Schuster, 1966 (Jr. Lit. Guild Selection).

Your Career in Oceanology, Simon & Schuster, 1969

Oceanologia (Spanish Edition) Brazil, 1969

The World of Cryogenics, G.P. Putnam’s Sons, N.Y., 1970

The World of Energy Storage, Ibid, 1977

Fiber Optics with Experiments, Howard W. Sams, Ind., 1982

Fiber Optics (Japanese Edition), Tokyo, 1984

Cryptology and the Personal Computer, Aegean Park Press, L.A., 1986

Computer Cryptology: Beyond Decoder Rings, Prentice-Hall, 1988

And 2000 articles in Popular Science Monthly; Popular Electronics; Popular Mechanics; Think (IBM); Western Fruit Grower; Harvest Years; London Times; The Foreman’s Letter; Supervisor’s Letter; Business Management; Cable TV Magazine; Ham Radio; 73 Magazine; 80-Micro; 80-US; Hot Coco; Advanced Computing; Fate; OfficeSystems96; Weekly Syndicated Science Column; and many others.

Jules M. Price

Jules M. Price (WITZ END) has been a Senior Cryptographer with the ACA since 1950 and has solved over 10,000 cryptograms. Only a few people in the world, since 1929, have obtained that ranking, which signals expertise in nearly 75 different classical cipher systems. In his other life, he is a Professional Engineer, and as Vice President, Chief Engineer and Project Manager, he has been directly involved in the construction of multi-million dollar projects ranging from highways and bridges to colleges, schools, hospitals, and Shea Stadium (home of the New York Mets). WITZ END was the chief reviewer for the successful ICSA Guide To Cryptography, published by McGraw Hill in 1998. His long experience with building plans and specifications has given him a razor sharp eye for details. For this talent, he was chosen as a primary reviewer for this book.

Charles M. Thatcher

Chuck Thatcher joined COMSEC Solutions in 1998 as Vice President in charge of Research and Development. He has more than 33 years of experience in mathematical modeling, systems analysis, computer simulation, optimization and applications programming.

Dr. Thatcher is the principal author of Digital Computer Programming: Logic and Language by Addison-Wesley Publishing Co., 1967, and Fundamentals of Chemical Engineering by Charles E. Merrill Books, Inc., 1962. Numerous papers, technical reports, patents, and commercial computer programs are credited to Dr. Thatcher.

Dr. Thatcher was the Distinguished Professor of Chemical Engineering and Alcoa Aluminum Company Chair at the University of Arkansas, 1970–1992. He was awarded his Ph.D. (ChE) from the University of Michigan in 1955.

Scott Inch

Scott Inch earned a B.S. in Mathematics in 1986 from Bloomsburg University, an M.S. in Mathematics in 1988 and a Ph.D. in Applied Mathematics in 1992 from Virginia Polytechnic Institute and State University. His areas of specialty are control theory, integro-partial differential equations, and energy decay in viscoelastic and thermo-viscoelastic rods. Dr. Inch is currently an Associate Professor in the Department of Mathematics, Computer Science and Statistics at Bloomsburg University. He has always been interested in cryptology and now gets to teach it.

Mark Zaid

Mark S. Zaid is a solo practitioner in Washington, D.C., specializing in litigation and lobbying on matters relating to international transactions, torts and crimes, national security, foreign sovereign and diplomatic immunity, defamation (plaintiff) and the Freedom of Information/Privacy Acts.

Through his practice Mr. Zaid often represents former and current federal employees, intelligence officers, whistleblowers and others who have grievances or have been wronged by agencies of the United States Government or foreign governments.

Mr. Zaid is the Executive Director of the James Madison Project, a Washington, D.C.-based non-profit, with the primary purpose of educating the public on issues relating to intelligence gathering and operations, secrecy policies, national security and government wrongdoing.

In connection with his legal practice on international and national security matters, Mr. Zaid has testified before, or provided testimony to, a variety of governmental bodies including the Department of Energy and subcommittees of the Senate Judiciary Committee, the Senate Governmental Affairs Committee, the House Judiciary Committee, and the House Government Reform Committee.

A 1992 graduate of Albany Law School of Union University in New York, where he served as an Associate Editor of the Albany Law Review, he completed his undergraduate education (cum laude) in 1989 at the University of Rochester, New York with honors in Political Science and high honors in History. Mr. Zaid is a member of the Bars of New York State, Connecticut, the District of Columbia, and several federal courts.

Elizabeth M. Nichols

Elizabeth M. Nichols joined COMSEC Solutions in 1998 as General Manager in charge of Finance, Accounting and Public Relations. In 1999, she was promoted to the post of CFO, adding to her duties Auditing, Taxes, Capital Expenditures, and Human Relations.

Mrs. Nichols came to COMSEC Solutions with 23 years of management and field experience in “stat” laboratory and hospital administration. She has developed policies and security procedures for major hospitals and schools such as Humana Hospital, LabCorp and University of Arkansas Medical School at Little Rock.

The Glossary, Appendices and final copy edit for Defending Your Digital Assets Against Hackers, Crackers, Spies and Thieves were developed, inspected, and tenaciously prepared by Mrs. Nichols.

Mrs. Nichols was granted a BSMT from University of Arkansas in 1976.

Christopher R. Ryan

Christopher Ryan is an information security expert with Ernst & Young, specializing in the evaluation and deployment of public key infrastructures. Prior to joining Ernst & Young, he was an information engineer at Science Applications International Corporation. He has a B.S. in Computer Science from the University of Maryland.

Foreword

The authors’ broad backgrounds of practice and academia allowed them to seamlessly present the most current state of information protection and vulnerabilities in an easy to understand, but documented treatise. The cases presented are real, topical and flow with the theme of the subject matter presented. They tell you the risks, examine the cases where people and systems have fallen victim and tell you the remedy.

The work begins with a discussion of the foundation and structure of information protection and reviews the consequences of failure to protect information. An interesting discussion of the tools that are available to protect against cyberthreats completes this area of discussion.

Countermeasures and protections are presented and include relevant tools such as encryption, access control, authentication, and digital signatures. The authors discuss private networks and the challenges inherent in end-to-end security of communications. Public key infrastructure and management are treated well and in detail.

Contingency planning, recovery, and practical directions are provided for those who have been attacked and damaged by hackers or malicious code insertion. Readers will understand in much greater detail what has happened and what to do. Then, in scholarly but interesting detail, Information Warfare and the Politics of the subject are analyzed and observed. These weapons, their use, and potential are explained and range from viruses and malicious code to RF weapons that destroy circuits and components.

Readers will find the approach the authors have taken is one of best business practices, combined with a systems approach to the problem of information security. The book is designed for readers who operate at the executive, policy or managerial level and who have some responsibility for protection of the organization’s information assets, intellectual property and systems. Content presented is equally valuable to both commercial and government managers.

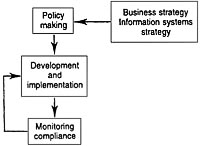

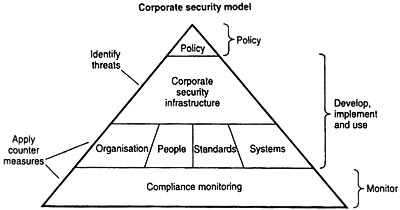

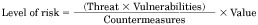

This work provides a reasoned approach to making sound decisions about how to expend resources, often scarce, to achieve a balanced multidisciplinary approach. The search is for the security required at an affordable price to the organization. Three basic processes are used in defining and developing a program of protection: protection, detection and correction. Risk management, rather than risk avoidance, provides the methodology for applying cost-benefit and return-on-investment analyses.

The extensive appendices provide source information on information technology, legal and political issues now in cyberspace.

Defending Your Digital Assets: Against Hackers, Crackers, Spies, and Thieves is a joint effort by three experienced information security professionals.

Randall K. Nichols is President of COMSEC Solutions and author of three previous titles on cryptography. He recently served as Technology Director of Cryptography and Biometrics for the International Computer Security Association (ICSA). Nichols is considered an expert on cryptanalysis and INFOSEC counter-measures. Nichols lectures on cryptography policy and systems management at the prestigious The George Washington University.

Daniel J. Ryan is an attorney, businessman, and educator who has served in private industry, including as Corporate VP of SAIC, and in the public sector, as Executive Assistant to the DCI. He is an Adjunct Professor of Information Security and Internet Law for The George Washington University and James Madison University.

Julie J. C. H. Ryan is President of Wyndrose Technical Group, providing security engineering and knowledge management consulting to commercial and government clients. She is an Adjunct Professor with The George Washington University in the Engineering Management and Systems Engineering Department of the School of Engineering and Applied Sciences.

WILLIAM E. BAUGH, JR.

MCLEAN, VA. USA

16 NOVEMBER 1999

About William E. Baugh, Jr.

Mr. Baugh is a senior executive in a Fortune 500 company, an Attorney, and a Director or Advisor to companies involved in Information Security and Intellectual Property Protection. He is active in both National and International Telecommunications fraud remediation, signal theft, piracy, and network security. He has published a number of articles on Information Security, Encryption and Crime in Cyberspace. He has testified before the United States Congress as an invited expert on encryption and information protection and periodically comments to the National Media. Before entering private industry, he served as Assistant Director of the Federal Bureau of Investigation, responsible for Information Resources and Technology, retiring after 26 years of service. Mr. Baugh has offices in McLean, Virginia, and Savannah, Georgia.

Foreword

Welcome to the inaugural book from RSA Press! From our participation in technical and legislative policy to hosting the world’s foremost security conference, RSA Security has continually sought to educate the world on e-security issues, technologies and futures. Now, we are pleased to extend this same tradition through our new line of RSA Press books. We believe that the industry as a whole can advance more quickly with an open exchange of information and through education on the security issues that are shaping today’s online marketplace.

RSA Security’s nearly two decades of hands-on experience in the e-security realm make us uniquely suited to be a trusted source for information on the latest e-security trends and issues. With the proliferation of the Internet and revolutionary e-business practices, there has never been a more critical need for sophisticated security technologies and solutions - and for trustworthy information about e-security. RSA Press books can help your company move safely into the new and rewarding arena of electronic business, by providing relevant and unbiased information to guide you around the potential dangers of doing business in the networked world. Whether you are an enterprise IT manager, an application developer, a CEO, CIO or an end user of computer technologies, titles from RSA Press will be available to answer your questions and address your concerns about reaping the benefits of e-commerce while minimizing the risks involved with doing so.

As our first published title, Defending Your Digital Assets Against Hackers, Crackers, Spies and Thieves provides a solid foundation for information security professionals to learn about the methods of protecting their organizations’ most precious assets: data. Network data has become increasingly valuable in today’s public and private sectors, and most information that’s accessible from a network is not as secure as it should be. Defending Your Digital Assets Against Hackers, Crackers, Spies and Thieves discusses the growing vulnerabilities associated with doing business online and offers detailed explanations and advice on how to prevent future attacks, detect attacks in progress, and quickly recover business operations.

We hope you enjoy this inaugural work, and welcome your comments, criticism and ideas for future RSA Press books. Please visit our Web site at http://www.rsasecurity.com for more information about RSA Security, or find us at http://www.rsasecurity.com/rsapress/ to learn more about RSA Press.

ARTHUR W. COVIELLO, JR.

PRESIDENT

RSA SECURITY INC.

PART 1

Digital Espionage, Warfare, and Information Security (INFOSEC)

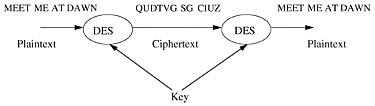

In Chapter 1, the interesting concepts of Digital Espionage and Digital Warfare are introduced. The family of computer security countermeasures known as INFOSEC is discussed. All computer information is vulnerable to a variety of attacks. A primary theme of this book is that information security (INFOSEC) countermeasures are reasonable and prudent technologies to thwart Digital Espionage and Infocrimes perpetrated by a host of bad guys we encounter: Hackers, Crackers, Spies, and Thieves. As a corollary, poorly implemented INFOSEC technologies provide fertile ground for the practice of Digital Espionage in corporate and military theaters. We attempt to profile the computer criminal and present some primary tools that investigators have at their disposal to prosecute them. Since encryption is a primary weapon in the INFOSEC arsenal, it is introduced at this juncture.

In Chapter 2, traditional INFOSEC goals are presented. We review some of the serious consequences that occur when INFOSEC technologies are inappropriately applied. Finally, Donn Parker’s brilliant extensions to the traditional INFOSEC theory are reviewed.

CHAPTER 1

Introduction to Digital Espionage

Digital Espionage (DE)—What It Is and What It Represents

Infocrime refers to any crime where the criminal target is information. Digital espionage (DE) is the specific-intent infocrime of attacking, by computer means, personal, commercial, or government information systems and assets for the purpose of theft, misappropriation, destruction, and disinformation for personal or political gain. This crime has become an enormous problem with the growth of the Internet. The authors’ definition of digital espionage represents a compendium of activities. DE is really a family of specific-intent infocrimes. The logical questions are as follows: how big is the family, what really is a computer crime, why and how does it occur, and most importantly, how does one prevent a computer crime from occurring? How important is it that the main thrust of the attack is against information assets?

The advent and growth of the Internet has made digital espionage a real and potential danger of enormous magnitude. The average computer user is still blissfully unaware of this danger, but digital espionage has already begun to affect the general public, as well as corporations and government agencies. Corporate management has also been slow to recognize and react to the specter of digital espionage. Most existing security systems reflect a concern for short-term profit or reaction to a specific breach of security in the past. The need to educate employees about the protection of intellectual property is rarely seen as a high-priority item. Many security initiatives are delayed until the manager is dealing with crisis rather than appropriate planning and security policies. Each new security crisis usually induces a similar limited reaction, without any consideration of the more general problem within which any one incident is only an immediate example.

Scope of Computer Crime (Infocrime)

The scope of computer crime is difficult to quantify. Public reports have estimated that computer crime costs us between $500 million and $10 billion per year.1 A survey performed jointly by the Computer Security Institute (CSI) and the Federal Bureau of Investigation’s Computer Crime Division found that nearly half of the 5000 companies, federal institutions, and universities polled experienced computer security breaches within the past 12 months. These attacks ranged from unauthorized access by employees to break-ins by unknown intruders. The study found that:2

- The problem is growing.

- The greatest problem is insider attacks.

- Identified computer crime accounted for over $100 million in losses in 1996.

In addition, a WarRoom Research, Inc. survey of 236 respondents showed major underreporting of security incidents related to computers:3

- 6.8 percent always reported intrusion

- 30.2 percent only report if anonymous

- 21.7 percent only report if everyone else did

- 37.4 percent only report if required by law

- 3.9 percent only report for “other reasons, including protect self”

According to a recent U.S. Department of Justice presentation,4 some examples of systems and facilities that were seriously hit are as follows:

- U.S. Marshals system—Alaska

- U.S. District Court system—Seattle

- NASA attack—Houston

- Military systems—Gulf War

- Organ Transplant Hospital—Italy

- Power companies

- 911 systems

Furthermore, in 1996 the National Security Agency (NSA) reported over 250 intrusions into DoD systems. The consequences from these computer attacks were labeled as devastating.

Research by Barbara D. Ritchey at the University of Houston presents another view: computer crimes account for losses of more than $1 billion annually and those computer criminals manifest themselves in many forms, including coworkers, competitors, and “crackers.”5 The Computer Security Institute of San Francisco also surveyed 242 separate Fortune 500 companies concerning Internet security and found that in 1995 only 12 percent of the companies reported losses as a result of system penetration totaling losses of $50 million. In terms of dollar value, the average theft costs a company $450,000 for each incident.

In 1996, Information Week magazine conducted its third annual survey in conjunction with Ernst and Young. The survey queried 1290 respondents, almost one-half of which said they suffered a financial loss related to information security in the last two years. At least 20 percent of the 1290 respondents stated their information security losses came to more than $1 million each. Additional loss information acquired from the Ernst and Young survey is that one in four U.S. companies has been a victim of computer crime, with losses ranging from $1 billion to $15 billion.6

From 1990 through 1995, the number of computers in the world increased tenfold, from 10 million to 100 million. In 1990, 15 percent of the computers were networked, and by 1995, 50 percent, or almost 50 million computers, were hooked together. From 1995 to 1999 the number of connected computers is estimated to have grown to over 250 million.

Theft of trade secrets is one of the most serious threats facing business today. The latest CSI/FBI Computer Crime and Security Study, released in March 1999, found that of the 12 types of computer crime and misuse, theft of proprietary information had the greatest reported financial losses for the period 1997 to 1999. According to the survey, more than $42 million worth of trade secrets were stolen from 64 organizations that were able to quantify their losses from this type of breach.7 Reported losses in 1998 ranged from $500 to $500,000. Penetration attacks by “outside” sources were reported by 18 percent of the organizations responding in 1997 and 21 percent of those responding in 1998.8

The Federal Computer Incident Response Center reported 244 incidents involving government sites from October 1996 through October 1997. Of those, 92 (38 percent) were intrusion incidents, 83 (34 percent) were probes, 37 (15 percent) were computer viruses, 22 (9 percent) were e-mail incidents, 4 (2 percent) were denial of service incidents, 2 (1 percent) were malicious code incidents, 2 were misuse incidents, and 2 were scams. One particularly sensitive intrusion ran over several months and involved more than 10,000 hosts. Hackers gained root access in several of the incidents.9
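The incident tallies quoted above can be cross-checked with a few lines of arithmetic. The following Python sketch sums the categories and recomputes each category's share of the total, rounded to the nearest whole percent. The dictionary name and category labels are illustrative assumptions, not anything published by the Center.

```python
# Incident counts as quoted in the text (October 1996 through October 1997).
# The names and labels below are illustrative assumptions.
incidents = {
    "intrusion": 92,
    "probe": 83,
    "virus": 37,
    "e-mail": 22,
    "denial of service": 4,
    "malicious code": 2,
    "misuse": 2,
    "scam": 2,
}

total = sum(incidents.values())

# Share of each category, rounded to the nearest whole percent.
shares = {name: round(100 * count / total) for name, count in incidents.items()}

for name, count in incidents.items():
    print(f"{name:18s} {count:4d}  {shares[name]:3d}%")
print(f"{'total':18s} {total:4d}")
```

The categories sum to the reported 244 incidents, and the rounded shares agree with the percentages given in the text. Each of the three two-incident categories works out to roughly 1 percent.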

The National Police Agency of Japan received reports of 946 cases involving hacking during the first six months of 1997. This was a 25 percent increase over the first six months in 1996. The Australian Computer Emergency Response Team reported a 220 percent increase in hacker attacks from 1996 to 1997.10

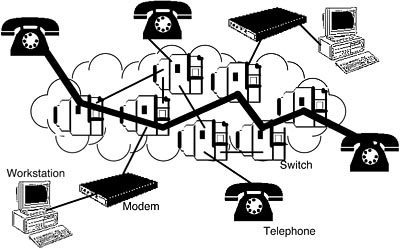

Networking has helped computing spread at an exponential pace, and with that change the need for heightened security has grown as well. A computer criminal formerly was able to attack systems at only one location, giving administrators the advantage of protecting one site. In today’s client/server environment, network administrators are fighting a very different battle. They are subject to attacks at every access point on their network, from a modem port to a laptop on an airplane headed for Paris. The Internet poses its own share of unique problems in that never before have so many computers been hooked together.11

The Criminal Playground

Computer crimes take several forms including sabotage, revenge, vandalism, theft, eavesdropping, and even “data diddling,” or the unauthorized altering of data before, during, or after it is input into a computer system. Computers can be used to commit such crimes as credit card fraud, counterfeiting, bank embezzlement, and theft of secret documents. The physical theft of a disk storing 2.8 MB of intellectual data is considered data theft. Logging into a computer account with restricted access and being caught there or purposely leaving evidence in the form of a message with an explanation of what has been done are examples of data diddling. A traveling employee who leaves his or her computer unattended on an airplane, only to return to an empty drive slot and the loss of billing information, marketing plans, or customer data, can be considered inattentive, but this type of incident is steadily increasing.

Another type of computer crime involves electronic funds transfer or embezzlement. The first person convicted under the Computer Fraud and Abuse Act was Robert T. Morris Jr., who, as a Cornell graduate student, introduced a “worm” into the Internet. These “worms” float freely through the computer environment, attacking programs in a manner similar to viruses. Some would consider this an act of vandalism. By multiplying, the worm interfered with approximately 6200 computers. Morris was sentenced to three years’ probation, ordered to pay a $10,000 fine, required to perform 400 hours of community service, and required to pay $91 per month to cover his probation supervision.

Computers can play three different roles in criminal activity. First, computers can be targets of an offense; for example, a hacker tries to steal information from or damage a computer or computer network. Other examples of this behavior include vandalism of Web sites and the introduction of viruses into computers.

Second, computers can be tools in the commission of a traditional offense, for instance, to create and transmit child pornography. COMSEC Solutions composed an interesting list wherein the computer was used as a tool to facilitate the following crimes:12

- Drug trade

- Illegal telemarketing

- Fraud, especially false invoices

- Intellectual property theft

- “True face” or ID theft and misrepresentation

- Espionage, both industrial and national

- Conventional terrorism and crimes

- Electronic terrorism and crime

- Electronic stalking

- Electronic harassment of ex-spouses

- Inventory of child pornography

- Bookmaking

- Contract repudiation on the Internet

- Cannabis smuggling

- Date rape

- Gang crimes, especially weapons violations

- Organized crime

- Armed robbery simulation

- Copycat crimes

- Pyramid schemes

- DoS (denial of service) attacks

- Exposure or blackmail schemes

- Revenge and solicitation to murder of spouses

- Hate crimes

- Web site defacement (automated)

Third, computers can be incidental to the offense, but still significant for law enforcement purposes. For example, many drug dealers now store their records on computers, which raises difficult forensic and evidentiary issues that are different from paper records.

In addition, a single computer could be used in all three ways. For example, a hacker might use his or her computer to gain unauthorized access to an Internet service provider (“target”) such as America Online, and then use that access to illegally distribute (“tool”) copyrighted software stored on the ISP’s computer-server hard drive (“incidental”). COMSEC Solutions composed another interesting list where the computer was an incidental part of computer crime. These included hacking; data theft, diddling, alteration, and destruction, especially involving financial or medical records; spreading viruses or malicious code; misuse of credit and business information; theft of services; and, finally, denial of service.

Internet service providers (ISPs) and large financial institutions are not the only organizations that should be concerned about computer crime. Hackers can affect individual citizens directly or through the person’s ISP by compromising the confidentiality and integrity of personal and financial information. In one case, a hacker from Germany gained complete control of an ISP server in Miami and captured all the credit card information maintained about the service’s subscribers. The hacker then threatened to destroy the system and distribute all the credit card numbers unless the ISP paid a ransom. German authorities arrested the hacker when he tried to collect the money. Had he been quiet, he could have used the stolen credit card numbers to defraud thousands of consumers.13

Government records, like any other records, can be susceptible to a network attack if they are stored on a networked computer system without proper protections. In Seattle, two hackers pleaded guilty to penetrating the U.S. District Court system, an intrusion that gave them access to confidential and even sealed information. In carrying out their attack, they used supercomputers at the Seattle-based Boeing Computer Center to crack the courthouse system’s password file. If Boeing had not reported the intrusion to law enforcement, the district court system administrator would not have known the system was compromised.14

The computer can also be a powerful tool for consumer fraud. The Internet can provide a con artist with an unprecedented ability to reach millions of potential victims. As far back as December 1994, the Justice Department indicted two individuals for fraud on the Internet. Among other things, these persons had placed advertisements on the Internet promising victims valuable goods upon payment of money. But the defendants never had access to the goods and never intended to deliver them to their victims. Both pleaded guilty to wire fraud.15

Personal computers can be used to engage in new and unique kinds of consumer fraud never before possible. In one interesting case, two hackers in Los Angeles pleaded guilty to computer crimes committed to ensure they would win prizes given away by local radio stations. When the stations announced that they would award prizes to a particular caller—for example, the ninth caller—the hackers manipulated the local telephone switching network to ensure that the winning call was their own. Their prizes included two Porsche automobiles and $30,000 in cash. Both miscreants received substantial jail terms.16

In another interesting case that raises novel issues, a federal court in New York granted the Federal Trade Commission’s request for a temporary restraining order to shut down an alleged scam on the World Wide Web. According to the FTC’s complaint, people who visited pornographic Web sites were told they had to download a special computer program to view the sites. Unknown to them, the program secretly rerouted their phone calls from their own local Internet provider to a phone number in Moldova, a former Soviet republic, for which a charge of more than $2 a minute could be billed. According to the FTC, more than 800,000 minutes of calling time were billed to U.S. customers.17
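The two figures quoted from the FTC complaint imply a floor on the total amount billed. The following is a rough sketch in Python, not a figure from the complaint itself; it assumes every billed minute was charged at no less than the quoted rate, and the variable names are illustrative.

```python
# Figures quoted in the text; both are stated there as lower bounds.
billed_minutes = 800_000       # "more than 800,000 minutes"
rate_per_minute_usd = 2        # "more than $2 a minute"

# Assumption: each billed minute was charged at least the quoted rate.
minimum_total_usd = billed_minutes * rate_per_minute_usd

print(f"Implied minimum billing: ${minimum_total_usd:,}")
```

Under that assumption, the charges to U.S. customers would have exceeded $1.6 million.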

Internet crimes can be addressed proactively and reactively. Fraudulent activity over the Internet, like other kinds of crimes, can be prevented to some extent by increased consumer education. People must bring the same common sense to bear on their decisions in cyberspace as they do in the physical world. They should realize that a World Wide Web site can be created at relatively low cost and can look completely reputable even if it is not. Users should invest time and energy to investigate the legitimacy of parties with whom they interact over the Web. Just as with other consumer transactions, they should be careful about where and to whom they provide their credit card numbers. The legal maxim caveat emptor (“let the buyer beware”), which dates back to the early sixteenth century, applies with full force in the computer age.

The public can also be protected by vigorous law enforcement. Many consumer-oriented Internet crimes, such as fraud or harassment, can be prosecuted using traditional statutory tools, such as wire fraud. Congress substantially strengthened the laws against computer crime in the National Information Infrastructure Protection Act of 1996. The law contains 11 separate provisions designed to protect the confidentiality, integrity, and availability of data and systems.

Novel Challenges: Jurisdiction and Identity

The Internet presents novel challenges for law enforcement. Two particularly difficult issues for law enforcement are identification and jurisdiction.

One of the benefits of the global Internet is its ability to bring people together, regardless of where in the world they are located. Boundaries are virtual, not real. This can sometimes have a subtle impact on law enforcement. For example, to buy a book, you used to drive to the local bookstore and have a face-to-face transaction; if the bookseller cheated you, you went to the local police. But the Internet can make it easier and cheaper for a consumer to make purchases, without even leaving his or her home, from a distributor based in a different state or even a different country. And if the consumer pays by credit card or, in the future, electronic cash, and then the book never arrives, this simple transaction may become a matter for the federal or even international law enforcement community, rather than a local matter. There are issues of trust that concern both the merchant and the customer.

The Internet makes interstate and international crime significantly easier in a number of respects. For example, a fraudulent telemarketing scheme might be extremely difficult to execute on a global basis because of the cost of international telephone calls, the difficulty of identifying suitable international victims, and the more mundane problem of planning calls across numerous time zones.18 But the Internet enables scam artists to victimize consumers all over the world in simple and inexpensive ways. An offshore World Wide Web site offering the sale of fictitious goods may attract U.S. consumers who can “shop” at the site without incurring international phone charges, who can be contacted through e-mail messages, and who may not even know that the supposed merchant is overseas. The Moldova phone scam demonstrates the relative ease with which more-complex international crimes may be perpetrated. In such a global environment, not only are international crimes more likely, but some consumer fraud cases traditionally handled by state and local authorities may require federal action.

Another fundamental issue facing law enforcement involves proving a criminal’s identity in a networked environment. In all crimes—especially information-based infocrimes—the defendant’s guilt must be proved beyond a reasonable doubt, but global networks lack effective identification mechanisms. Individuals on the Internet can be anonymous, and even those individuals who identify themselves can adopt false identities by providing inaccurate biographical information and misleading screen names. Even if a criminal does not intentionally use anonymity as a shield, it is easy to see how difficult it could be for law enforcement to prove who was actually sitting at the keyboard and committing the illegal act. This is particularly true because identifiable physical attributes such as fingerprints, voices, or faces are absent from cyberspace, and there are few mechanisms for proving identity in an electronic environment.

A related problem arises with the identity of the victim. With increasing frequency, policymakers are appropriately seeking to protect certain classes of citizens, most notably minors, from unsuitable materials. But if individuals requesting information can remain anonymous or identify themselves as adults, how can the flow of materials be restricted? Similarly, if adults can self-identify as children and lure real children into dangerous situations, how can these victims be protected? In 1996, Congress, in response to this problem, enacted the Communications Decency Act. The act did not survive its first federal court challenge; the court found it to be exceedingly vague.

One area that raises both identification and jurisdictional issues is Internet gambling. The Internet offers several advantages for gambling businesses. First, electronic communications, such as electronic mail, allow for simple record keeping. Second, the Internet is far cheaper than long-distance and international telephone service. Third, many software packages make it easy to operate consumer businesses over the Internet. Use of the Internet for gambling—as well as for other illegal activities such as money laundering—could increase substantially as the use of “electronic cash” becomes more commonplace.19