ALL IN ONE

CompTIA Security+™

EXAM GUIDE

Second Edition

Gregory White

Wm. Arthur Conklin

Dwayne Williams

Roger Davis

Chuck Cothren

Copyright © 2009 by The McGraw-Hill Companies. All rights reserved. Except as permitted under the United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written permission of the publisher.

ISBN: 978-0-07-164384-9

MHID: 0-07-164384-2

The material in this eBook also appears in the print version of this title: ISBN: 978-0-07-160127-6, MHID: 0-07-160127-9.

All trademarks are trademarks of their respective owners. Rather than put a trademark symbol after every occurrence of a trademarked name, we use names in an editorial fashion only, and to the benefit of the trademark owner, with no intention of infringement of the trademark. Where such designations appear in this book, they have been printed with initial caps.

McGraw-Hill eBooks are available at special quantity discounts to use as premiums and sales promotions, or for use in corporate training programs. To contact a representative please visit the Contact Us page at www.mhprofessional.com.

TERMS OF USE

This is a copyrighted work and The McGraw-Hill Companies, Inc. ("McGraw-Hill") and its licensors reserve all rights in and to the work. Use of this work is subject to these terms. Except as permitted under the Copyright Act of 1976 and the right to store and retrieve one copy of the work, you may not decompile, disassemble, reverse engineer, reproduce, modify, create derivative works based upon, transmit, distribute, disseminate, sell, publish or sublicense the work or any part of it without McGraw-Hill’s prior consent. You may use the work for your own noncommercial and personal use; any other use of the work is strictly prohibited. Your right to use the work may be terminated if you fail to comply with these terms.

THE WORK IS PROVIDED “AS IS.” McGRAW-HILL AND ITS LICENSORS MAKE NO GUARANTEES OR WARRANTIES AS TO THE ACCURACY, ADEQUACY OR COMPLETENESS OF OR RESULTS TO BE OBTAINED FROM USING THE WORK, INCLUDING ANY INFORMATION THAT CAN BE ACCESSED THROUGH THE WORK VIA HYPERLINK OR OTHERWISE, AND EXPRESSLY DISCLAIM ANY WARRANTY, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO IMPLIED WARRANTIES OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. McGraw-Hill and its licensors do not warrant or guarantee that the functions contained in the work will meet your requirements or that its operation will be uninterrupted or error free. Neither McGraw-Hill nor its licensors shall be liable to you or anyone else for any inaccuracy, error or omission, regardless of cause, in the work or for any damages resulting therefrom. McGraw-Hill has no responsibility for the content of any information accessed through the work. Under no circumstances shall McGraw-Hill and/or its licensors be liable for any indirect, incidental, special, punitive, consequential or similar damages that result from the use of or inability to use the work, even if any of them has been advised of the possibility of such damages. This limitation of liability shall apply to any claim or cause whatsoever whether such claim or cause arises in contract, tort or otherwise.

CompTIA Authorized Quality Curriculum

The logo of the CompTIA Authorized Quality Curriculum (CAQC) program and the status of this or other training material as “Authorized” under the CompTIA Authorized Quality Curriculum program signifies that, in CompTIA’s opinion, such training material covers the content of CompTIA’s related certification exam.

The contents of this training material were created for the CompTIA Security+ exam covering CompTIA certification objectives that were current as of 2008.

CompTIA has not reviewed or approved the accuracy of the contents of this training material and specifically disclaims any warranties of merchantability or fitness for a particular purpose.

CompTIA makes no guarantee concerning the success of persons using any such “Authorized” or other training material in order to prepare for any CompTIA certification exam.

How to become CompTIA certified:

This training material can help you prepare for and pass a related CompTIA certification exam or exams. In order to achieve CompTIA certification, you must register for and pass a CompTIA certification exam or exams.

In order to become CompTIA certified, you must

- 1. Select a certification exam provider. For more information please visit http://www.comptia.org/certification/general_information/exam_locations.aspx.

- 2. Register for and schedule a time to take the CompTIA certification exam(s) at a convenient location.

- 3. Read and sign the Candidate Agreement, which will be presented at the time of the exam(s). The text of the Candidate Agreement can be found at http://www.comptia.org/certification/general_information/candidate_agreement.aspx.

- 4. Take and pass the CompTIA certification exam(s).

For more information about CompTIA’s certifications, such as its industry acceptance, benefits, or program news, please visit www.comptia.org/certification.

CompTIA is a not-for-profit information technology (IT) trade association. CompTIA’s certifications are designed by subject matter experts from across the IT industry. Each CompTIA certification is vendor-neutral, covers multiple technologies, and requires demonstration of skills and knowledge widely sought after by the IT industry.

To contact CompTIA with any questions or comments, please call (1) (630) 678 8300 or email [email protected].

This book is dedicated to the many security professionals who daily work to

ensure the safety of our nation’s critical infrastructures.

We want to recognize the thousands of dedicated individuals who strive to

protect our national assets but who seldom receive praise and often are only

noticed when an incident occurs.

To you, we say thank you for a job well done!

ABOUT THE AUTHORS

Dr. Gregory White has been involved in computer and network security since 1986. He spent 19 years on active duty with the United States Air Force and is currently in the Air Force Reserves assigned to the Air Force Information Warfare Center. He obtained his Ph.D. in computer science from Texas A&M University in 1995. His dissertation topic was in the area of computer network intrusion detection, and he continues to conduct research in this area today. He is currently the Director for the Center for Infrastructure Assurance and Security (CIAS) and is an associate professor of information systems at the University of Texas at San Antonio (UTSA). Dr. White has written and presented numerous articles and conference papers on security. He is also the coauthor for three textbooks on computer and network security and has written chapters for two other security books. Dr. White continues to be active in security research. His current research initiatives include efforts in high-speed intrusion detection, infrastructure protection, and methods to calculate a return on investment and the total cost of ownership from security products.

Dr. Wm. Arthur Conklin is an assistant professor in the College of Technology at the University of Houston. Dr. Conklin’s research interests lie in software assurance and the application of systems theory to security issues. His dissertation was on the motivating factors for home users in adopting security on their own PCs. He has coauthored four books on information security and has written and presented numerous conference and academic journal papers. A former U.S. Navy officer, he was also previously the Technical Director at the Center for Infrastructure Assurance and Security at the University of Texas at San Antonio.

Chuck Cothren, CISSP, is the president of Globex Security, Inc., and applies a wide array of network security experience to consulting and training. This includes performing controlled penetration testing and working with network security policies, network intrusion detection systems, firewall configuration and management, and wireless security assessments. He has analyzed security methodologies for Voice over Internet Protocol (VoIP) systems and supervisory control and data acquisition (SCADA) systems. Mr. Cothren was previously employed at The University of Texas Center for Infrastructure Assurance and Security. He has also worked as a consulting department manager, performing vulnerability assessments and other security services for Fortune 100 clients. He is coauthor of the books Voice and Data Security and Principles of Computer Security. Mr. Cothren holds a B.S. in Industrial Distribution from Texas A&M University.

Roger L. Davis, CISSP, CISM, CISA, is Program Manager of ERP systems at the Church of Jesus Christ of Latter-day Saints, managing the Church’s global financial system in over 140 countries. He has served as president of the Utah chapter of the Information Systems Security Association (ISSA) and in various board positions for the Utah chapter of the Information Systems Audit and Control Association (ISACA). He is a retired Air Force lieutenant colonel with 30 years of military and information systems/security experience. Mr. Davis served on the faculty of Brigham Young University and the Air Force Institute of Technology. He coauthored McGraw-Hill’s Principles of Computer Security and Voice and Data Security. He holds a master’s degree in computer science from George Washington University and a bachelor’s degree in computer science from Brigham Young University, and he performed post-graduate studies in electrical engineering and computer science at the University of Colorado.

Dwayne Williams is Associate Director, Special Projects, for the Center for Infrastructure Assurance and Security at the University of Texas at San Antonio and has over 18 years of experience in information systems and network security. Mr. Williams’s experience includes six years of commissioned military service as a Communications-Computer Information Systems Officer in the United States Air Force, specializing in network security, corporate information protection, intrusion detection systems, incident response, and VPN technology. Prior to joining the CIAS, he served as Director of Consulting for SecureLogix Corporation, where he directed and provided security assessment and integration services to Fortune 100, government, public utility, oil and gas, financial, and technology clients. Mr. Williams graduated in 1993 from Baylor University with a Bachelor of Arts in Computer Science. Mr. Williams is a Certified Information Systems Security Professional (CISSP) and coauthor of Voice and Data Security, Security+ Certification, and Principles of Computer Security.

About the Technical Editor

Glen E. Clarke, MCSE/MCSD/MCDBA/MCT/CEH/SCNP/CIWSA/A+/Security+, is an independent trainer and consultant, focusing on network security assessments and educating IT professionals on hacking countermeasures. Mr. Clarke spends most of his time delivering certified courses on Windows Server 2003, SQL Server, Exchange Server, Visual Basic .NET, ASP.NET, Ethical Hacking, and Security Analysis. He has authored and technically edited a number of certification titles including The Network+ Certification Study Guide, 4th Edition. You can visit Mr. Clarke online at http://www.gleneclarke.com or contact him at [email protected].

CONTENTS AT A GLANCE

Chapter 1 General Security Concepts

Chapter 2 Operational Organizational Security

Chapter 3 Legal Issues, Privacy, and Ethics

Part II Cryptography and Applications

Chapter 5 Public Key Infrastructure

Chapter 6 Standards and Protocols

Part III Security in the Infrastructure

Chapter 8 Infrastructure Security

Chapter 9 Authentication and Remote Access

Part IV Security in Transmissions

Chapter 11 Intrusion Detection Systems

Chapter 13 Types of Attacks and Malicious Software

Chapter 14 E-Mail and Instant Messaging

Chapter 16 Disaster Recovery and Business Continuity

Chapter 19 Privilege Management

Appendix B OSI Model and Internet Protocols

CONTENTS

Chapter 1 General Security Concepts

Chapter 2 Operational Organizational Security

Policies, Standards, Guidelines, and Procedures

Organizational Policies and Procedures

Chapter 3 Legal Issues, Privacy, and Ethics

Payment Card Industry Data Security Standards (PCI DSS)

Import/Export Encryption Restrictions

SANS Institute IT Code of Ethics

Part II Cryptography and Applications

Chapter 5 Public Key Infrastructure

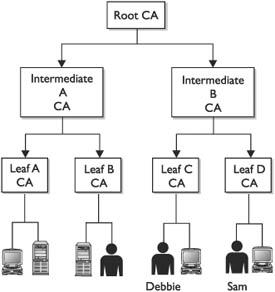

The Basics of Public Key Infrastructures

Local Registration Authorities

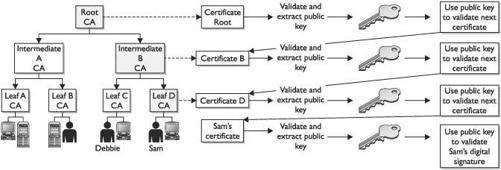

Trust and Certificate Verification

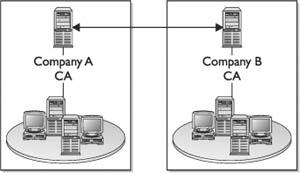

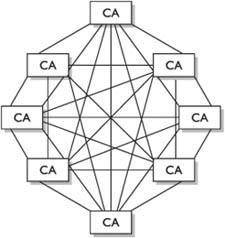

Centralized or Decentralized Infrastructures

Public Certificate Authorities

In-house Certificate Authorities

Outsourced Certificate Authorities

Chapter 6 Standards and Protocols

ISO/IEC 27002 (Formerly ISO 17799)

Part III Security in the Infrastructure

Access Controls and Monitoring

Chapter 8 Infrastructure Security

Security Concerns for Transmission Media

Chapter 9 Authentication and Remote Access

Part IV Security in Transmissions

Chapter 11 Intrusion Detection Systems

History of Intrusion Detection Systems

Resurgence and Advancement of HIDSs

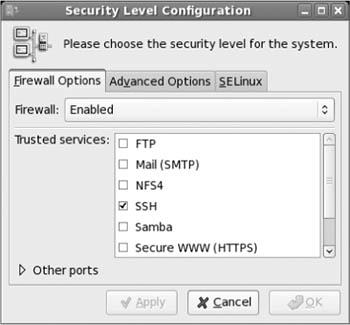

Operating System and Network Operating System Hardening

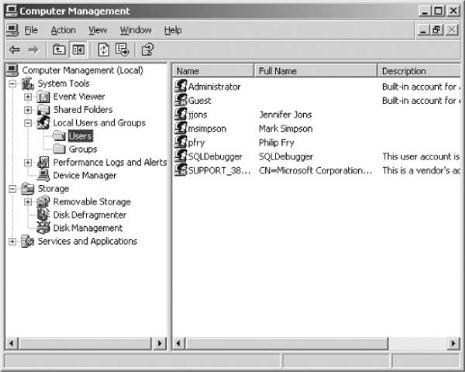

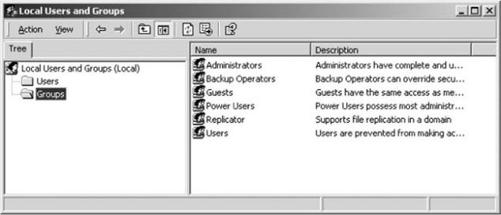

Hardening Microsoft Operating Systems

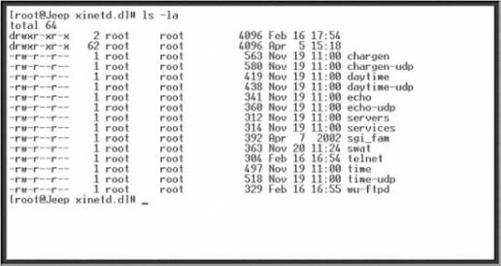

Hardening UNIX- or Linux-Based Operating Systems

Chapter 13 Types of Attacks and Malicious Software

Minimizing Possible Avenues of Attack

Attacking Computer Systems and Networks

Chapter 14 E-Mail and Instant Messaging

Unsolicited Commercial E-Mail (Spam)

Current Web Components and Concerns

Directory Services (DAP and LDAP)

Open Vulnerability and Assessment Language (OVAL)

Chapter 16 Disaster Recovery and Business Continuity

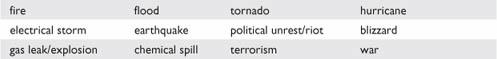

Disaster Recovery Plans/Process

High Availability and Fault Tolerance

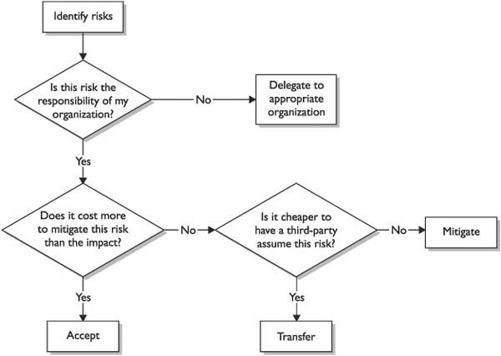

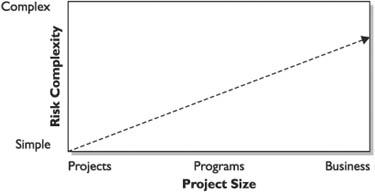

An Overview of Risk Management

Example of Risk Management at the International Banking Level

Key Terms for Understanding Risk Management

Software Engineering Institute Model

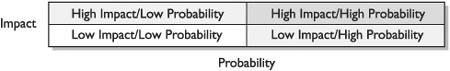

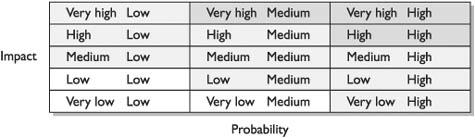

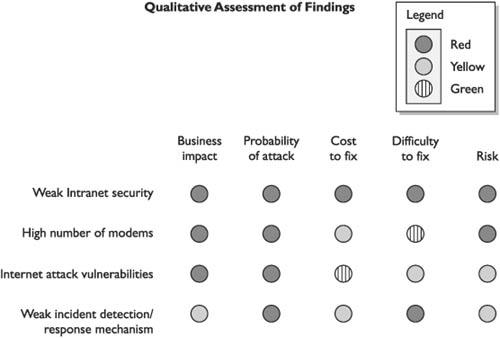

Qualitative vs. Quantitative Risk Assessment

The Key Concept: Separation (Segregation) of Duties

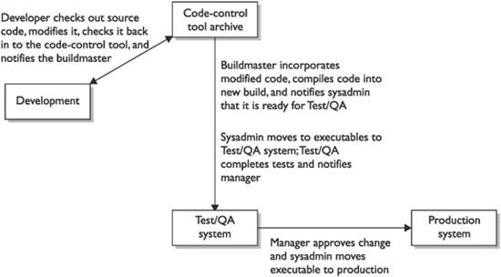

Implementing Change Management

The Purpose of a Change Control Board

The Capability Maturity Model Integration

Chapter 19 Privilege Management

User, Group, and Role Management

Centralized vs. Decentralized Management

The Decentralized, Centralized Model

Auditing (Privilege, Usage, and Escalation)

Logging and Auditing of Log Files

Periodic Audits of Security Settings

Handling Access Control (MAC, DAC, and RBAC)

Mandatory Access Control (MAC)

Discretionary Access Control (DAC)

Role-based Access Control (RBAC)

Rule-based Access Control (RBAC)

Permissions and Rights in Windows Operating Systems

Three Rules Regarding Evidence

Installing and Running MasterExam

Appendix B OSI Model and Internet Protocols

Networking Frameworks and Protocols

ACKNOWLEDGMENTS

We, the authors of CompTIA Security+ Certification All-in-One Exam Guide, have many individuals whom we need to acknowledge, individuals without whom this effort would not have been successful.

The list needs to start with those folks at McGraw-Hill who worked tirelessly with the project’s multiple authors and contributors and led us successfully through the minefield that is a book schedule and who took our rough chapters and drawings and turned them into a final, professional product we can be proud of. We thank all the good people from the Acquisitions team, Tim Green, Jennifer Housh, and Carly Stapleton; from the Editorial Services team, Jody McKenzie; and from the Illustration and Production team, George Anderson, Peter Hancik, and Lyssa Wald. We also thank the technical editor Glen Clarke; the project editor, LeeAnn Pickrell; the copyeditor, Lisa Theobald; the proofreader, Susie Elkind; and the indexer, Karin Arrigoni for all their attention to detail that made this a finer work after they finished with it.

We also need to acknowledge our current employers who, to our great delight, have seen fit to pay us to work in a career field that we all find exciting and rewarding. There is never a dull moment in security because it is constantly changing.

We would like to thank Art Conklin for herding the cats on this one.

Finally, we would each like to individually thank those people who—on a personal basis—have provided the core support for us individually. Without these special people in our lives, none of us could have put this work together.

I would like to thank my wife, Charlan, for the tremendous support she has always given me. It doesn’t matter how many times I have sworn that I’ll never get involved with another book project only to return within months to yet another one; through it all, she has remained supportive.

I would also like to publicly thank the United States Air Force, which provided me numerous opportunities since 1986 to learn more about security than I ever knew existed.

To whoever it was who decided to send me as a young captain—fresh from completing my master’s degree in artificial intelligence—to my first assignment in computer security: thank you, it has been a great adventure!

—Gregory B. White, Ph.D.

To Susan, my muse and love, for all the time you suffered as I worked on books.

—Art Conklin

Special thanks to Josie for all her support.

—Chuck Cothren

Geena, thanks for being my best friend and my greatest support. Anything I am is because of you. Love to my kids and grandkids!

—Roger L. Davis

To my wife and best friend Leah for your love, energy, and support—thank you for always being there. Here’s to many more years together.

—Dwayne Williams

PREFACE

Information and computer security has moved from the confines of academia to mainstream America in the last decade. The CodeRed, Nimda, and Slammer attacks were heavily covered in the media and broadcast into the average American’s home. It has become increasingly obvious to everybody that something needs to be done in order to secure not only our nation’s critical infrastructure but also the businesses we deal with on a daily basis. The question is, “Where do we begin?” What can the average information technology professional do to secure the systems that he or she is hired to maintain? One immediate answer is education and training. If we want to secure our computer systems and networks, we need to know how to do this and what security entails.

Complacency is not an option in today’s hostile network environment. While we once considered the insider to be the major threat to corporate networks, and the “script kiddie” to be the standard external threat (often thought of as only a nuisance), the highly interconnected networked world of today is a much different place. The U.S. government identified eight critical infrastructures a few years ago that were thought to be so crucial to the nation’s daily operation that if one were to be lost, it would have a catastrophic impact on the nation. To this original set of eight sectors, more have recently been added. A common thread throughout all of these, however, is technology—especially technology related to computers and communication. Thus, if an individual, organization, or nation wanted to cause damage to this nation, it could attack not just with traditional weapons but also with computers through the Internet. It is not surprising to hear that among the other information seized in raids on terrorist organizations, computers and Internet information are usually seized as well. While the insider can certainly still do tremendous damage to an organization, the external threat is again becoming the chief concern among many.

So, where do you, the IT professional seeking more knowledge on security, start your studies? The IT world is overflowing with certifications that can be obtained by those attempting to learn more about their chosen profession. The security sector is no different, and the CompTIA Security+ exam offers a basic level of certification for security. In the pages of this exam guide, you will find not only material that can help you prepare for taking the CompTIA Security+ examination but also the basic information that you will need in order to understand the issues involved in securing your computer systems and networks today. In no way is this exam guide the final source for learning all about protecting your organization’s systems, but it serves as a point from which to launch your security studies and career.

One thing is certainly true about this field of study: it never gets boring. It constantly changes as technology itself advances. Something else you will find as you progress in your security studies is that no matter how much technology advances and no matter how many new security devices are developed, at its most basic level, the human is still the weak link in the security chain. If you are looking for an exciting area to delve into, then you have certainly chosen wisely. Security offers a challenging blend of technology and people issues. We, the authors of this exam guide, wish you luck as you embark on an exciting and challenging career path.

—Gregory B. White, Ph.D.

INTRODUCTION

Computer security is becoming increasingly important today as the number of security incidents steadily climbs. Many corporations now spend significant portions of their budget on security hardware, software, services, and personnel. They are spending this money not because it increases sales or enhances the product they provide, but because of the possible consequences should they not take protective actions.

Why Focus on Security?

Security is not something that we want to have to pay for; it would be nice if we didn’t have to worry about protecting our data from disclosure, modification, or destruction from unauthorized individuals, but that is not the environment we find ourselves in today. Instead, we have seen the cost of recovering from security incidents steadily rise along with the number of incidents themselves. Since September 11, 2001, this has taken on an even greater sense of urgency as we now face securing our systems not just from attack by disgruntled employees, juvenile hackers, organized crime, or competitors; we now also have to consider the possibility of attacks on our systems from terrorist organizations. If nothing else, the events of September 11, 2001, showed that anybody is a potential target. You do not have to be part of the government or a government contractor; being an American is sufficient reason to make you a target to some, and with the global nature of the Internet, collateral damage from cyber attacks on one organization could have a worldwide impact.

A Growing Need for Security Specialists

In order to protect our computer systems and networks, we will need a significant number of new security professionals trained in the many aspects of computer and network security. This is not an easy task as the systems connected to the Internet become increasingly complex with software whose lines of code number in the millions. Understanding why this is such a difficult problem to solve is not hard if you consider just how many errors might be present in a piece of software that is several million lines long. When you add the additional factor of how fast software is being developed (from necessity, as the market is constantly changing), understanding how errors occur is easy.

Not every “bug” in the software will result in a security hole, but it doesn’t take many to have a drastic effect on the Internet community. We can’t just blame the vendors for this situation because they are reacting to the demands of government and industry. Most vendors are fairly adept at developing patches for flaws found in their software, and patches are constantly being issued to protect systems from bugs that may introduce security problems. This introduces a whole new problem for managers and administrators: patch management. How important this has become is easily illustrated by how many of the most recent security events have occurred as a result of a security bug that was discovered months prior to the security incident and for which a patch was available, but the community had not correctly installed the patch, thus making the incident possible. One of the reasons this happens is that many of the individuals responsible for installing the patches are not trained to understand the security implications surrounding the hole or the ramifications of not installing the patch. Many of these individuals simply lack the necessary training.

Because of the need for an increasing number of security professionals who are trained to some minimum level of understanding, certifications such as the Security+ have been developed. Prospective employers want to know that the individual they are considering hiring knows what to do in terms of security. The prospective employee, in turn, wants to have a way to demonstrate his or her level of understanding, which can enhance the candidate’s chances of being hired. The community as a whole simply wants more trained security professionals.

Preparing Yourself for the Security+ Exam

CompTIA Security+ Certification All-in-One Exam Guide is designed to help prepare you to take the CompTIA Security+ certification exam. When you pass it, you will demonstrate you have that basic understanding of security that employers are looking for. Passing this certification exam will not be an easy task, for you will need to learn many things to acquire that basic understanding of computer and network security.

How This Book Is Organized

The book is divided into sections and chapters to correspond with the objectives of the exam itself. Some of the chapters are more technical than others, reflecting the nature of the security environment where you will be forced to deal with not only technical details but also other issues such as security policies and procedures as well as training and education. Although many individuals involved in computer and network security have advanced degrees in math, computer science, information systems, or computer or electrical engineering, you do not need this technical background to address security effectively in your organization. For example, you do not need to develop your own cryptographic algorithm; you simply need to be able to understand how cryptography is used along with its strengths and weaknesses. As you progress in your studies, you will learn that many security problems are caused by the human element. The best technology in the world still ends up being placed in an environment where humans have the opportunity to foul things up, and all too often do.

Part I: Security Concepts The book begins with an introduction of some of the basic elements of security.

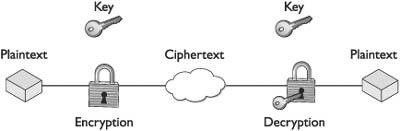

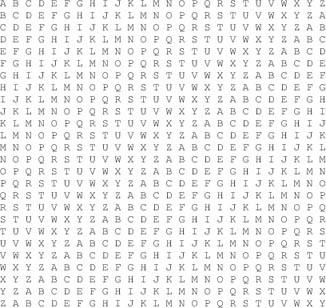

Part II: Cryptography and Applications Cryptography is an important part of security, and this part covers this topic in detail. The purpose is not to make cryptographers out of readers but to instead provide a basic understanding of how cryptography works and what goes into a basic cryptographic scheme. An important subject in cryptography, and one that is essential for the reader to understand, is the creation of public key infrastructures, and this topic is covered as well.

Part III: Security in the Infrastructure The next part concerns infrastructure issues. In this case, we are not referring to the critical infrastructures identified by the White House several years ago (identifying sectors such as telecommunications, banking and finance, oil and gas, and so forth) but instead the various components that form the backbone of an organization’s security structure.

Part IV: Security in Transmissions This part discusses communications security. This is an important aspect of security because, for years now, we have connected our computers together into a vast array of networks. This part also discusses the various protocols in use today that the security practitioner needs to be aware of.

Part V: Operational Security This part addresses operational and organizational issues. This is where we depart from a discussion of technology again and will instead discuss how security is accomplished in an organization. Because we know that we will not be absolutely successful in our security efforts—attackers are always finding new holes and ways around our security defenses—one of the most important topics we will address is the subject of security incident response and recovery. Also included is a discussion of change management (addressing the subject we alluded to earlier when addressing the problems with patch management), security awareness and training, incident response, and forensics.

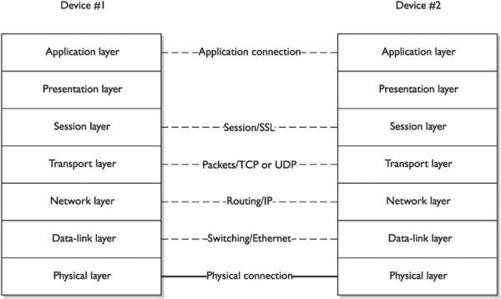

Part VI: Appendixes There are two appendixes in CompTIA Security+ Certification All-in-One Exam Guide. Appendix A explains how best to use the CD-ROM included with this book, and Appendix B provides an additional in-depth explanation of the OSI model and Internet protocols, should this information be new to you.

Glossary Located just before the Index, you will find a useful glossary of security terminology, including many related acronyms and their meaning. We hope that you use the Glossary frequently and find it to be a useful study aid as you work your way through the various topics in this exam guide.

Special Features of the All-in-One Certification Series

To make our exam guides more useful and a pleasure to read, we have designed the All-in-One Certification series to include several conventions.

Icons

To alert you to an important bit of advice, a shortcut, or a pitfall, you’ll occasionally see Notes, Tips, Cautions, and Exam Tips peppered throughout the text.

NOTE Notes offer nuggets of especially helpful background explanations and information, and occasionally define terms.

TIP Tips provide suggestions and nuances to help you learn to finesse your job. Take a tip from us and read the Tips carefully.

CAUTION When you see a Caution, pay special attention. Cautions appear when you have to make a crucial choice or when you are about to undertake something that may have ramifications you might not immediately anticipate. Read them now so you don’t have regrets later.

EXAM TIP Exam Tips give you special advice or may provide information specifically related to preparing for the exam itself.

End-of-Chapter Reviews and Chapter Tests

An important part of this book comes at the end of each chapter where you will find a brief review of the high points along with a series of questions followed by the answers to those questions. Each question is in multiple-choice format. The answers provided also include a small discussion explaining why the correct answer actually is the correct answer.

The questions are provided as a study aid to you, the reader and prospective Security+ exam taker. We obviously can’t guarantee that if you answer all of our questions correctly you will absolutely pass the certification exam. Instead, what we can guarantee is that the questions will provide you with an idea about how ready you are for the exam.

The CD-ROM

CompTIA Security+ Certification All-in-One Exam Guide also provides you with a CD-ROM of even more test questions and their answers to help you prepare for the certification exam. Read more about the companion CD-ROM in Appendix A.

Onward and Upward

At this point, we hope that you are now excited about the topic of security, even if you weren’t in the first place. We wish you luck in your endeavors and welcome you to the exciting field of computer and network security.

PART I

Security Concepts

Chapter 1 General Security Concepts

Chapter 2 Operational Organizational Security

Chapter 3 Legal Issues, Privacy, and Ethics

CHAPTER 1

General Security Concepts

- Learn about the Security+ exam

- Learn basic terminology associated with computer and information security

- Discover the basic approaches to computer and information security

- Discover various methods of implementing access controls

- Determine methods used to verify the identity and authenticity of an individual

Why should you be concerned with taking the Security+ exam? The goal of taking the Computing Technology Industry Association (CompTIA) Security+ exam is to prove that you’ve mastered the worldwide standards for foundation-level security practitioners. With a growing need for trained security professionals, the CompTIA Security+ exam gives you a perfect opportunity to validate your knowledge and understanding of the computer security field. The exam is an appropriate mechanism for many different individuals, including network and system administrators, analysts, programmers, web designers, application developers, and database specialists to show proof of professional achievement in security. The exam’s objectives were developed with input and assistance from industry and government agencies, including such notable examples as the Federal Bureau of Investigation (FBI), the National Institute of Standards and Technology (NIST), the U.S. Secret Service, the Information Systems Security Association (ISSA), the Information Systems Audit and Control Association (ISACA), Microsoft Corporation, RSA Security, Motorola, Novell, Sun Microsystems, VeriSign, and Entrust.

The Security+ Exam

The Security+ exam is designed to cover a wide range of security topics that a security practitioner would be expected to know. The test includes information from six knowledge domains:

| Knowledge Domain | Percent of Exam |
| --- | --- |
| Systems Security | 21% |
| Network Infrastructure | 20% |
| Access Control | 17% |
| Assessments & Audits | 15% |
| Cryptography | 15% |
| Organizational Security | 12% |

The Systems Security knowledge domain covers the security threats to computer systems and the mechanisms that systems use to counter these threats. A major portion of this domain concerns the factors that go into hardening the operating system as well as the hardware and peripherals. The Network Infrastructure domain examines the security threats introduced when computers are connected in local networks and with the Internet. It is also concerned with the various elements of a network as well as the tools and mechanisms put in place to protect networks. Since a major security goal is to prevent unauthorized access to computer systems and the data they process, the third domain, Access Control, examines the many ways that we attempt to control who can access our systems and data. Since security is a difficult goal to attain, we must constantly examine the ever-changing environment in which our systems operate. The fourth domain, Assessments & Audits, covers what individuals can do to check that the security mechanisms that have been implemented are adequate and sufficiently protect critical data and resources. Cryptography has long been part of the basic security foundation of any organization, and an entire domain is devoted to its various aspects. The last domain, Organizational Security, takes a look at what an organization should be doing after all the other security mechanisms are in place. This domain covers incident response and disaster recovery, in addition to topics more appropriately addressed at the organizational level.

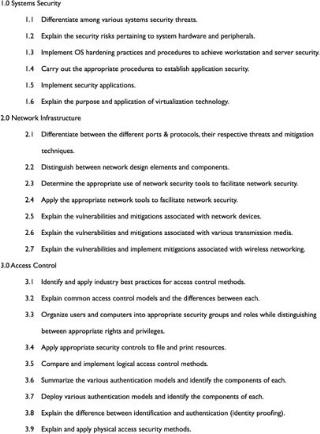

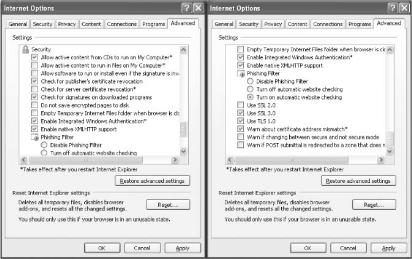

The exam consists of a series of questions, each designed to have a single best answer or response. The other available choices are designed to provide options that an individual might choose if he or she had an incomplete knowledge or understanding of the security topic represented by the question. The exam questions are chosen from the more detailed objectives listed in the outline shown in Figure 1-1, an excerpt from the 2008 objectives document obtainable from the CompTIA web site at http://certification.comptia.org/resources/objectives.aspx.

The Security+ exam is designed for individuals who have at least two years of networking experience and who have a thorough understanding of TCP/IP with a focus on security. Originally administered only in English, the exam is now offered in testing centers around the world in the English, Japanese, Korean, and German languages. Consult the CompTIA web site at www.comptia.org to determine a location near you.

The exam consists of 100 questions to be completed in 90 minutes. The minimum passing score is 764 out of a possible 900 points. Results are available immediately after you complete the exam. An individual who fails the exam the first time must pay the exam fee again to retake it, but no waiting period is required before the second attempt. If the individual fails again, a minimum waiting period of 30 days is required before each subsequent retake. For more information on retaking exams, consult CompTIA’s retake policy, which can be found on its web site.

This All-in-One Security+ Certification Exam Guide is designed to assist you in preparing for the Security+ exam. It is organized around the same objectives as the exam and attempts to cover the major areas the exam includes. Using this guide in no way guarantees that you will pass the exam, but it will greatly assist you in preparing to meet the challenges posed by the Security+ exam.

Figure 1-1 The CompTIA Security+ objectives

Basic Security Terminology

The term hacking is used frequently in the media. A hacker was once considered an individual who understood the technical aspects of computer operating systems and networks. Hackers were individuals you turned to when you had a problem and needed extreme technical expertise. Today, as a result of media usage, the term more often refers to individuals who attempt to gain unauthorized access to computer systems or networks. While some would prefer to use the terms cracker and cracking when referring to this nefarious type of activity, the terminology generally accepted by the public is that of hacker and hacking. A related term that is sometimes used is phreaking, which refers to the “hacking” of computers and systems used by the telephone company.

Security Basics

Computer security is a term that has many meanings and is associated with many related terms. It entails the methods used to ensure that a system is secure. In broad terms, computer security must address the ability to control who has access to a computer system and its data and what they can do with those resources.

Seldom in today’s world are computers not connected to other computers in networks. This then introduces the term network security to refer to the protection of the multiple computers and other devices that are connected together in a network. Related to these two terms are two others, information security and information assurance, which place the focus of the security process not on the hardware and software being used but on the data that is processed by them. Assurance also introduces another concept, that of the availability of the systems and information when users want them.

Since the late 1990s, much has been published about specific lapses in security that have resulted in the penetration of a computer network or in denying access to or the use of the network. Over the last few years, the general public has become increasingly aware of its dependence on computers and networks and consequently has also become interested in their security.

As a result of this increased attention by the public, several new terms have become commonplace in conversations and print. Terms such as hacking, virus, TCP/IP, encryption, and firewalls now frequently appear in mainstream news publications and have found their way into casual conversations. What was once the purview of scientists and engineers is now part of our everyday life.

With our increased daily dependence on computers and networks to conduct everything from making purchases at our local grocery store to driving our children to school (any new car these days probably uses a small computer to obtain peak engine performance), ensuring that computers and networks are secure has become of paramount importance. Medical information about each of us is probably stored in a computer somewhere. So are financial information and data relating to the types of purchases we make and our store preferences (assuming we have and use a credit card to make purchases). Making sure that this information remains private is a growing concern to the general public, and it is one of the jobs of security to help with the protection of our privacy. Simply stated, computer and network security is essential for us to function effectively and safely in today’s highly automated environment.

The “CIA” of Security

Almost from its inception, the goals of computer security have been threefold: confidentiality, integrity, and availability—the “CIA” of security. Confidentiality ensures that only those individuals who have the authority to view a piece of information may do so. No unauthorized individual should ever be able to view data to which they are not entitled. Integrity is a related concept but deals with the modification of data. Only authorized individuals should be able to change or delete information. The goal of availability is to ensure that the data, or the system itself, is available for use when the authorized user wants it.

As a result of the increased use of networks for commerce, two additional security goals have been added to the original three in the CIA of security. Authentication deals with ensuring that an individual is who he or she claims to be. The need for authentication in an online banking transaction, for example, is obvious. Related to this is nonrepudiation, which deals with the ability to verify that a message has been sent and received so that the sender (or receiver) cannot deny sending (or receiving) the information.

EXAM TIP Expect questions on these concepts as they are basic to the understanding of what we hope to guarantee in securing our computer systems and networks.

The Operational Model of Security

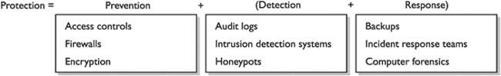

For many years, the focus of security was on prevention. If you could prevent somebody from gaining access to your computer systems and networks, you assumed that they were secure. Protection was thus equated with prevention. While this basic premise was true, it failed to acknowledge the realities of the networked environment of which our systems are a part. No matter how well you think you can provide prevention, somebody always seems to find a way around the safeguards. When this happens, the system is left unprotected. What is needed is multiple prevention techniques and also technology to alert you when prevention has failed and to provide ways to address the problem. This results in a modification to the original security equation with the addition of two new elements—detection and response. The security equation thus becomes

Protection = Prevention + (Detection + Response)

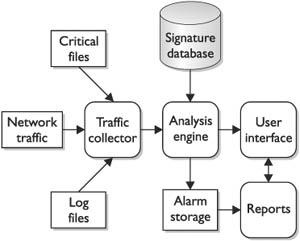

This is known as the operational model of computer security. Every security technique and technology falls into at least one of the three elements of the equation. Examples of the types of technology and techniques that represent each are depicted in Figure 1-2.

Security Principles

An organization can choose to address the protection of its networks in three ways: ignore security issues, provide host security, and approach security at a network level. The last two, host and network security, have prevention as well as detection and response components.

Figure 1-2 Sample technologies in the operational model of computer security

If an organization decides to ignore security, it has chosen to utilize the minimal amount of security that is provided with its workstations, servers, and devices. No additional security measures will be implemented. Each “out-of-the-box” system has certain security settings that can be configured, and they should be. To protect an entire network, however, requires work in addition to the few protection mechanisms that come with systems by default.

Host Security

Host security takes a granular view of security by focusing on protecting each computer and device individually instead of addressing protection of the network as a whole. When host security is implemented, each computer is expected to protect itself. If an organization decides to implement only host security and does not include network security, it will likely introduce or overlook vulnerabilities. Many environments involve different operating systems (Windows, UNIX, Linux, Macintosh), different versions of those operating systems, and different types of installed applications. Each operating system has security configurations that differ from other systems, and different versions of the same operating system can in fact have variations among them. Trying to ensure that every computer is “locked down” to the same degree as every other system in the environment can be overwhelming and often results in an unsuccessful and frustrating effort.

Host security is important and should always be addressed. Security, however, should not stop there, as host security is a complementary process to be combined with network security. If individual host computers have vulnerabilities embodied within them, network security can provide another layer of protection that will hopefully stop intruders from getting that far into the environment. Topics covered in this book dealing with host security include bastion hosts, host-based intrusion detection systems (devices designed to determine whether an intruder has penetrated a computer system or network), antivirus software (programs designed to prevent damage caused by various types of malicious software), and hardening of operating systems (methods used to strengthen operating systems and to eliminate possible avenues through which attacks can be launched).

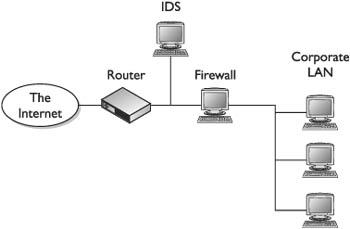

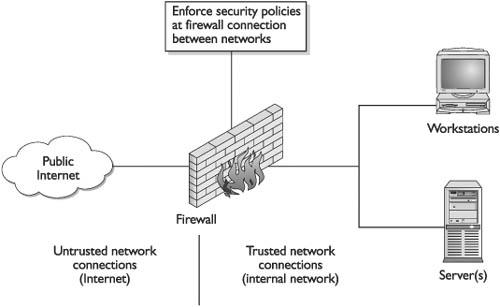

Network Security

In some smaller environments, host security alone might be a viable option, but as systems become connected into networks, security should include the actual network itself. In network security, an emphasis is placed on controlling access to internal computers from external entities. This control can be through devices such as routers, firewalls, authentication hardware and software, encryption, and intrusion detection systems (IDSs).

Network environments have a tendency to be unique entities because usually no two networks have exactly the same number of computers, the same applications installed, the same number of users, the exact same configurations, or the same available servers. They will not perform the same functions or have the same overall architecture. Because networks have so many differences, they can be protected and configured in many different ways. This chapter covers some foundational approaches to network and host security. Each approach can be implemented in myriad ways.

Least Privilege

One of the most fundamental approaches to security is least privilege. This concept is applicable to many physical environments as well as network and host security. Least privilege means that an object (such as a user, application, or process) should have only the rights and privileges necessary to perform its task, with no additional permissions. Limiting an object’s privileges limits the amount of harm that can be caused, thus limiting an organization’s exposure to damage. Users may have access to the files on their workstations and a select set of files on a file server, but they have no access to critical data that is held within the database. This rule helps an organization protect its most sensitive resources and helps ensure that whoever is interacting with these resources has a valid reason to do so.

Different operating systems and applications have different ways of implementing rights, permissions, and privileges. Before operating systems are actually configured, an overall plan should be devised and standardized methods developed to ensure that a solid security baseline is implemented. For example, a company might want all of the accounting department employees, but no one else, to be able to access employee payroll and profit margin spreadsheets stored on a server. The easiest way to implement this is to develop an Accounting group, put all accounting employees in this group, and assign rights to the group instead of each individual user.
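The group-based approach described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the user names, file names, and the `is_allowed` helper are hypothetical and do not correspond to any particular operating system's permission model.

```python
# Hypothetical data: group membership and the rights held by each group.
GROUP_MEMBERS = {
    "Accounting": {"alice", "bob"},
}

# Rights are assigned to the group, never to individual users.
GROUP_RIGHTS = {
    "Accounting": {("payroll.xls", "read"), ("profit_margins.xls", "read")},
}


def is_allowed(user: str, resource: str, action: str) -> bool:
    """Return True only if one of the user's groups holds this exact right."""
    for group, members in GROUP_MEMBERS.items():
        if user in members and (resource, action) in GROUP_RIGHTS.get(group, set()):
            return True
    # No group grants the right, so the user gets nothing extra.
    return False
```

With this layout, adding a new accounting employee means adding one name to the group rather than editing rights file by file, and a user outside the group (or an action the group was never granted, such as "write") is refused.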

As another example, a company could require implementing a hierarchy of administrators that perform different functions and require specific types of rights. Two people could be tasked with performing backups of individual workstations and servers; thus they do not need administrative permissions with full access to all resources. Three people could be in charge of setting up new user accounts and password management, which means they do not need full, or perhaps any, access to the company’s routers and switches. Once these baselines are delineated, indicating what subjects require which rights and permissions, it is much easier to configure settings to provide the least privileges for different subjects.

The concept of least privilege applies to more network security issues than just providing users with specific rights and permissions. When trust relationships are created, they should not be implemented in such a way that everyone trusts each other simply because it is easier to set it up that way. One domain should trust another for very specific reasons, and the implementers should have a full understanding of what the trust relationship allows between two domains. If one domain trusts another, do all of the users automatically become trusted, and can they thus easily access any and all resources on the other domain? Is this a good idea? Can a more secure method provide the same functionality? If a trusted relationship is implemented such that users in one group can access a plotter or printer that is available on only one domain, for example, it might make sense to purchase another plotter so that other more valuable or sensitive resources are not accessible by the entire group.

Another issue that falls under the least privilege concept is the security context in which an application runs. All applications, scripts, and batch files run in the security context of a specific user on an operating system. These objects will execute with specific permissions as if they were a user. The application could be Microsoft Word and be run in the space of a regular user, or it could be a diagnostic program that needs access to more sensitive system files and so must run under an administrative user account, or it could be a program that performs backups and so should operate within the security context of a backup operator. The crux of this issue is that programs should execute only in the security context that is needed for that program to perform its duties successfully. In many environments, people do not really understand how to make programs run under different security contexts, or it just seems easier to have them all run under the administrator account. If attackers can compromise a program or service running under the administrative account, they have effectively elevated their access level and have much more control over the system and many more possibilities to cause damage.

EXAM TIP The concept of least privilege is fundamental to many aspects of security. Remember the basic idea is to give people access only to the data and programs that they need to do their job. Anything beyond that can lead to a potential security problem.

Separation of Duties

Another fundamental approach to security is separation of duties. This concept is applicable to physical environments as well as network and host security. Separation of duties ensures that for any given task, more than one individual needs to be involved. The task is broken into different duties, each of which is accomplished by a separate individual. By implementing a task in this manner, no single individual can abuse the system for his or her own gain. This principle has been implemented in the business world, especially financial institutions, for many years. A simple example is a system in which one individual is required to place an order and a separate person is needed to authorize the purchase.
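The order-and-authorize example can be expressed as a small Python sketch. The `PurchaseOrder` class and the names used with it are hypothetical, chosen only to show the rule that the person who places an order may not be the one who approves it.

```python
class PurchaseOrder:
    """A purchase that needs two different people: a requester and an approver."""

    def __init__(self, item: str, placed_by: str):
        self.item = item
        self.placed_by = placed_by
        self.approved_by = None  # nobody has authorized the purchase yet

    def approve(self, approver: str) -> None:
        # Separation of duties: the requester cannot approve his or her own order.
        if approver == self.placed_by:
            raise PermissionError("requester cannot approve own order")
        self.approved_by = approver

    @property
    def is_authorized(self) -> bool:
        return self.approved_by is not None
```

For instance, an order placed by "alice" raises `PermissionError` if "alice" tries to approve it, and becomes authorized only after a different person, say "bob", approves it.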

While separation of duties provides a certain level of checks and balances, it is not without its own drawbacks. Chief among these is the cost required to accomplish the task. This cost is manifested in both time and money. More than one individual is required when a single person could accomplish the task, thus potentially increasing the cost of the task. In addition, with more than one individual involved, a certain delay can be expected as the task must proceed through its various steps.

Implicit Deny

What has become the Internet was originally designed as a friendly environment where everybody agreed to abide by the rules implemented in the various protocols. Today, the Internet is no longer the friendly playground of researchers that it once was. This has resulted in different approaches that might at first seem less than friendly but that are required for security purposes. One of these approaches is implicit deny.

Frequently in the network world, decisions concerning access must be made. Often a series of rules will be used to determine whether or not to allow access. If a particular situation is not covered by any of the other rules, the implicit deny approach states that access should not be granted. In other words, if no rule would allow access, then access should not be granted. Implicit deny applies to situations involving both authorization and access.
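A minimal Python sketch of rule evaluation with implicit deny follows. The `Rule` type, the sample rule list, and the `evaluate` function are assumptions made for illustration; real firewalls and access control systems use their own rule formats.

```python
from typing import List, NamedTuple, Optional


class Rule(NamedTuple):
    protocol: str
    port: Optional[int]  # None means the rule matches any port
    allow: bool


# Hypothetical rule set: two explicit allows and one explicit deny.
RULES = [
    Rule("tcp", 25, True),
    Rule("tcp", 80, True),
    Rule("tcp", 23, False),
]


def evaluate(rules: List[Rule], protocol: str, port: int) -> bool:
    """Return the decision of the first matching rule, or deny if none matches."""
    for rule in rules:
        if rule.protocol == protocol and rule.port in (None, port):
            return rule.allow
    # Implicit deny: no rule covered this situation, so access is not granted.
    return False
```

Here TCP port 80 is allowed by an explicit rule, TCP port 23 is refused by an explicit rule, and UDP port 161 is refused simply because no rule mentions it.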

The alternative to implicit deny is to allow access unless a specific rule forbids it. Another example of these two approaches is in programs that monitor and block access to certain web sites. One approach is to provide a list of specific sites that a user is not allowed to access. Access to any site not on the list would be implicitly allowed. The opposite approach (the implicit deny approach) would block all access to sites that are not specifically identified as authorized. As you can imagine, depending on the specific application, one or the other approach would be appropriate. Which approach you choose depends on the security objectives and policies of your organization.
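The two web-filtering approaches differ only in what happens to a site that appears on no list, as this short Python sketch shows. The site names and function names are hypothetical.

```python
# Hypothetical lists maintained by an administrator.
BLOCKED_SITES = {"gambling.example"}
AUTHORIZED_SITES = {"intranet.example", "vendor.example"}


def allow_unless_blocked(site: str) -> bool:
    """Implicit allow: any site not on the blocked list is permitted."""
    return site not in BLOCKED_SITES


def deny_unless_authorized(site: str) -> bool:
    """Implicit deny: only sites on the authorized list are permitted."""
    return site in AUTHORIZED_SITES
```

A site that appears on neither list, such as "news.example", is permitted by the first function and refused by the second.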

EXAM TIP Implicit deny is another fundamental principle of security, and students need to be sure they understand it. Similar to least privilege, this principle states that if you haven’t specifically been allowed access, then access should be denied.

Job Rotation

An interesting approach to enhancing security that is gaining increasing attention is job rotation. The benefits of rotating individuals through various jobs in an organization’s IT department have been discussed for a while. By rotating through jobs, individuals gain a better perspective of how the various parts of IT can enhance (or hinder) the business. Since security is often a misunderstood aspect of IT, rotating individuals through security positions can result in a much wider understanding of the security problems throughout the organization. It also can have the side benefit of not relying on any one individual too heavily for security expertise. When all security tasks are the domain of one employee, and if that individual were to leave suddenly, security at the organization could suffer. On the other hand, if security tasks were understood by many different individuals, the loss of any one individual would have less of an impact on the organization.

One significant drawback to job rotation is relying on it too heavily. The IT world is very technical and often expertise in any single aspect takes years to develop. This is especially true in the security environment. In addition, the rapidly changing threat environment with new vulnerabilities and exploits routinely being discovered requires a level of understanding that takes considerable time to acquire and maintain.

Layered Security

A bank does not protect the money that it stores only by placing it in a vault. It uses one or more security guards as a first defense to watch for suspicious activities and to secure the facility when the bank is closed. It probably uses monitoring systems to watch various activities that take place in the bank, whether involving customers or employees. The vault is usually located in the center of the facility, and layers of rooms or walls also protect access to the vault. Access control ensures that the people who want to enter the vault have been granted the appropriate authorization before they are allowed access. Alarm systems, including manual switches, are connected directly to the police station in case a determined bank robber successfully penetrates any one of these layers of protection.

Networks should utilize the same type of layered security architecture. No system is 100 percent secure and nothing is foolproof, so no single specific protection mechanism should ever be trusted alone. Every piece of software and every device can be compromised in some way, and every encryption algorithm can be broken by someone with enough time and resources. The goal of security is to make the effort of actually accomplishing a compromise more costly in time and effort than it is worth to a potential attacker.

Consider, for example, the steps an intruder has to take to access critical data held within a company’s back-end database. The intruder will first need to penetrate the firewall and use packets and methods that will not be identified and detected by the IDS (more on these devices in Chapter 11). The attacker will have to circumvent an internal router performing packet filtering and possibly penetrate another firewall that is used to separate one internal network from another. From here, the intruder must break the access controls on the database, which means performing a dictionary or brute-force attack to be able to authenticate to the database software. Once the intruder has gotten this far, he still needs to locate the data within the database. This can in turn be complicated by the use of access control lists (ACLs) outlining who can actually view or modify the data. That’s a lot of work.

This example illustrates the different layers of security many environments employ. It is important that several different layers are implemented, because if intruders succeed at one layer, you want to be able to stop them at the next. Redundant layers of protection ensure that the failure of any single mechanism does not by itself breach the network’s security. If a network used only a firewall to protect its assets, an attacker successfully able to penetrate this device would find the rest of the network open and vulnerable. Or, because a firewall usually does not protect against viruses attached to e-mail, a second layer of defense is needed, perhaps in the form of an antivirus program.
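The idea that a request must pass every layer in turn can be sketched as a chain of independent checks in Python. Everything here is a simplified assumption: the request is a plain dictionary, and each hypothetical check stands in for a far more complex real device or control.

```python
# Each layer is a function that returns True if the request may continue.
def firewall(request: dict) -> bool:
    return request["port"] in {80, 443}


def ids(request: dict) -> bool:
    return "attack-signature" not in request["payload"]


def database_auth(request: dict) -> bool:
    return request.get("credentials") == "valid"


# Layers are ordered from the network perimeter inward toward the data.
LAYERS = [("firewall", firewall), ("ids", ids), ("database_auth", database_auth)]


def first_blocking_layer(request: dict):
    """Return the name of the first layer that stops the request, or None."""
    for name, check in LAYERS:
        if not check(request):
            return name
    return None
```

A request that slips past the firewall on port 80 but carries a known attack signature is still stopped by the next layer, which is the point of layering.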

Every network environment must have multiple layers of security. These layers can employ a variety of methods such as routers, firewalls, network segments, IDSs, encryption, authentication software, physical security, and traffic control. The layers need to work together in a coordinated manner so that one does not impede another’s functionality and introduce a security hole. Security at each layer can be very complex, and putting different layers together can increase the complexity exponentially.

Although having layers of protection in place is very important, it is also important to understand how these different layers interact either by working together or in some cases by working against each other. One example of how different security methods can work against each other occurs when firewalls encounter encrypted network traffic. An organization can use encryption so that an outside customer communicating with a specific web server is assured that sensitive data being exchanged is protected. If this encrypted data is encapsulated within Secure Sockets Layer (SSL) packets and is then sent through a firewall, the firewall will not be able to read the payload information in the individual packets. This could enable the customer, or an outside attacker, to send undetected malicious code or instructions through the SSL connection. Other mechanisms can be introduced in similar situations, such as designing web pages to accept information only in certain formats and having the web server parse through the data for malicious activity. The important piece is to understand the level of protection that each layer provides and how each layer can be affected by activities that occur in other layers.

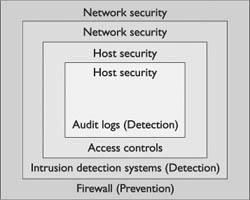

These layers are usually depicted starting at the top, with more general types of protection, and progress downward through each layer, with increasing granularity at each layer as you get closer to the actual resource, as you can see in Figure 1-3. The top-layer protection mechanism is responsible for looking at an enormous amount of traffic, and it would be overwhelming and cause too much of a performance degradation if each aspect of the packet were inspected here. Instead, each layer usually digs deeper into the packet and looks for specific items. Layers that are closer to the resource have to deal with only a fraction of the traffic that the top-layer security mechanism considers, and thus looking deeper and at more granular aspects of the traffic will not cause as much of a performance hit.

Diversity of Defense

Diversity of defense is a concept that complements the idea of various layers of security; layers are made dissimilar so that even if an attacker knows how to get through a system making up one layer, she might not know how to get through a different type of layer that employs a different system for security.

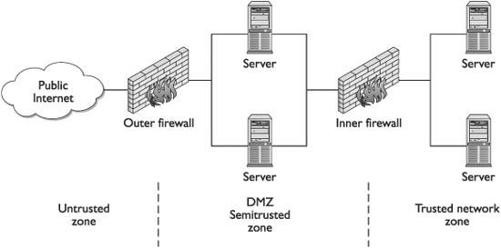

Figure 1-3 Various layers of security

If, for example, an environment has two firewalls that form a demilitarized zone (a DMZ is the area between the two firewalls that provides an environment where activities can be more closely monitored), one firewall can be placed at the perimeter between the Internet and the DMZ. This firewall will analyze traffic that passes through that specific access point and enforce certain types of restrictions. The other firewall can be placed between the DMZ and the internal network. When applying the diversity of defense concept, you should set up these two firewalls to filter for different types of traffic and provide different types of restrictions. The first firewall, for example, can make sure that no File Transfer Protocol (FTP), Simple Network Management Protocol (SNMP), or Telnet traffic enters the network, but allow Simple Mail Transfer Protocol (SMTP), Secure Shell (SSH), Hypertext Transfer Protocol (HTTP), and SSL traffic through. The second firewall may not allow SSL or SSH through and can interrogate SMTP and HTTP traffic to make sure that certain types of attacks are not part of that traffic.
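The two-firewall example above can be modeled as two different allow lists in Python. This sketch captures only which protocols each firewall passes; the deeper inspection of SMTP and HTTP traffic by the second firewall is not modeled, and the function names are hypothetical.

```python
# Protocols the perimeter firewall lets into the DMZ (FTP, SNMP, Telnet are absent).
OUTER_ALLOWED = {"SMTP", "SSH", "HTTP", "SSL"}

# Protocols the second firewall lets from the DMZ into the internal network.
INNER_ALLOWED = {"SMTP", "HTTP"}


def reaches_dmz(protocol: str) -> bool:
    """True if the perimeter firewall passes this protocol."""
    return protocol in OUTER_ALLOWED


def reaches_internal(protocol: str) -> bool:
    """True only if both firewalls pass this protocol."""
    return reaches_dmz(protocol) and protocol in INNER_ALLOWED
```

Under these assumptions SSH traffic reaches the DMZ but not the internal network, FTP is stopped at the perimeter, and HTTP passes both filters (subject to the inspection that this sketch leaves out).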

Another type of diversity of defense is to use products from different vendors. Every product has its own security vulnerabilities that are usually known to experienced attackers in the community. A Check Point firewall, for example, has different security issues and settings than a Sidewinder firewall; thus, different exploits can be used to crash or compromise them in some fashion. Combining this type of diversity with the preceding example, you might use the Check Point firewall as the first line of defense. If attackers are able to penetrate it, they are less likely to get through the next firewall if it is a Cisco PIX or Sidewinder firewall (or another maker’s firewall).

You should consider an obvious trade-off before implementing diversity of security using different vendors’ products. This setup usually increases operational complexity, and security and complexity are seldom a good mix. When implementing products from more than one vendor, security staff must know how to configure two different systems whose configuration settings will be totally different. Upgrades and patches will be released at different times and contain different changes, and the overall complexity of maintaining these systems can cause more headaches than security itself. This does not mean that you should not implement diversity of defense by installing products from different vendors, but you should know the implications of this decision.

Security Through Obscurity

With security through obscurity, security is considered effective if the environment and protection mechanisms are confusing or supposedly not generally known. Security through obscurity uses the approach of protecting something by hiding it—out of sight, out of mind. Noncomputer examples of this concept include hiding your briefcase or purse if you leave it in the car so that it is not in plain view, hiding a house key under a ceramic frog on your porch, or pushing your favorite ice cream to the back of the freezer so that nobody else will see it. This approach, however, does not provide actual protection of the object. Someone can still steal the purse by breaking into the car, lift the ceramic frog and find the key, or dig through the items in the freezer to find the ice cream. Security through obscurity may make someone work a little harder to accomplish a task, but it does not prevent anyone from eventually succeeding.

Similar approaches occur in computer and network security when attempting to hide certain objects. A network administrator can, for instance, move a service from its default port to a different port so that others will not know how to access it as easily, or a firewall can be configured to hide specific information about the internal network in the hope that potential attackers will not obtain the information for use in an attack on the network.

In most security circles, security through obscurity is considered a poor approach, especially if it is the organization’s only approach to security. An organization can use security through obscurity measures to try to hide critical assets, but other security measures should also be employed to provide a higher level of protection. For example, if an administrator moves a service from its default port to a more obscure port, an attacker can still find this service; thus a firewall should be used to restrict access to the service.
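To see why moving a service to an unusual port offers little protection, consider the following minimal sketch. It opens a throwaway listener on an operating-system-chosen port on the local machine, standing in for a relocated service, and then finds it by simply trying to connect to a small window of ports. The helper name `open_ports` and the window size are assumptions made for illustration; everything runs against 127.0.0.1 only.

```python
import socket

# Start a throwaway listener on an OS-chosen, non-default port on localhost.
# This stands in for a service that has been moved to an "obscure" port.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(5)
hidden_port = server.getsockname()[1]


def open_ports(host, ports):
    """Return the ports in `ports` that accept a TCP connection on `host`."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
            probe.settimeout(0.5)
            if probe.connect_ex((host, port)) == 0:   # 0 means the connect succeeded
                found.append(port)
    return found


# Probe a small window of ports around the listener.
window = range(max(1, hidden_port - 5), min(65535, hidden_port + 5) + 1)
discovered = open_ports("127.0.0.1", window)
server.close()

print("service found on its non-default port:", hidden_port in discovered)
```

The relocated service answers a connection attempt just as it would on its default port, which is why the text recommends restricting access with a firewall rather than relying on the port number alone.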

Keep It Simple

The terms security and complexity are often at odds with each other, because the more complex something is, the more difficult it is to understand, and you cannot truly secure something if you do not understand it. Another reason complexity is a problem within security is that it usually allows too many opportunities for something to go wrong. An application with 4000 lines of code has far fewer places for buffer overflows, for example, than an application with 2 million lines of code.

As with any other type of technology, when something goes wrong with security mechanisms, a troubleshooting process is used to identify the problem. If the mechanism is overly complex, identifying the root of the problem can be overwhelming if not impossible. Security is already a very complex issue because many variables are involved, many types of attacks and vulnerabilities are possible, many different types of resources must be secure, and many different ways can be used to secure them. You want your security processes and tools to be as simple and elegant as possible. They should be simple to troubleshoot, simple to use, and simple to administer.

Another application of the principle of keeping things simple concerns the number of services that you allow your system to run. Default installations of computer operating systems often leave many services running. The keep-it-simple principle tells us to eliminate those services that we don’t need. This is also a good idea from a security standpoint because it results in fewer applications that can be exploited and fewer services that the administrator is responsible for securing. The general rule of thumb should be to eliminate all nonessential services and protocols. This of course leads to the question, how do you determine whether a service or protocol is essential or not? Ideally, you should know for what your computer system or network is being used, and thus you should be able to identify those elements that are essential and activate only them. For a variety of reasons, this is not as easy as it sounds. Alternatively, a stringent security approach that you can take is to assume that no service is necessary (which is obviously absurd) and activate services and ports only as they are requested. Whatever approach you take, it’s a never-ending struggle to try to strike a balance between providing functionality and maintaining security.
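The rule of thumb above amounts to comparing what is running against what is actually needed. A minimal sketch, assuming a hypothetical inventory of running services and a hypothetical allow-list of essential ones (neither list comes from a real system):

```python
# Hypothetical inventory: services found running after a default installation.
running_services = {"sshd", "httpd", "telnetd", "ftpd", "cupsd", "snmpd"}

# Hypothetical allow-list: what this machine is actually meant to provide.
essential_services = {"sshd", "httpd"}

# Anything running that is not on the allow-list is a candidate for removal.
to_disable = sorted(running_services - essential_services)

# Anything essential that is not running signals a functionality gap.
missing = sorted(essential_services - running_services)

print("disable:", to_disable)
print("missing:", missing)
```

The hard part in practice is producing the allow-list, which is the question the paragraph above raises; the comparison itself is trivial once that list exists.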

Access Control

The term access control describes a variety of protection schemes. It sometimes refers to all security features used to prevent unauthorized access to a computer system or network. In this sense, it may be confused with authentication. More properly, access is the ability of a subject (such as an individual or a process running on a computer system) to interact with an object (such as a file or hardware device). Authentication, on the other hand, deals with verifying the identity of a subject.

To understand the difference, consider the example of an individual attempting to log in to a computer system or network. Authentication is the process used to verify to the computer system or network that the individual is who he claims to be. The most common method to do this is through the use of a user ID and password. Once the individual has verified his identity, access controls regulate what the individual can actually do on the system—just because a person is granted entry to the system does not mean that he should have access to all data the system contains.

Consider another example. When you go to your bank to make a withdrawal, the teller at the window will verify that you are indeed who you claim to be by asking you to provide some form of identification with your picture on it, such as your driver’s license. You might also have to provide your bank account number. Once the teller verifies your identity, you will have proved that you are a valid (authorized) customer of this bank. This does not, however, mean that you have the ability to view all information that the bank protects, such as your neighbor’s account balance. The teller will control what information, and funds, you can access and will grant you access only to information that you are authorized to see. In this example, your identification and bank account number serve as your method of authentication and the teller serves as the access control mechanism.
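The distinction between the two steps can be sketched in code. In this minimal example, `authenticate` plays the role of checking identification and `authorized` plays the role of the teller. The user store, permission table, user names, and iteration count are all hypothetical and chosen only for illustration.

```python
import hashlib
import hmac
import os


def hash_password(password, salt):
    """Derive a password hash with PBKDF2-HMAC-SHA256 (iteration count is illustrative)."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


# Hypothetical user store and permission table.
_salt = os.urandom(16)
USERS = {"alice": (_salt, hash_password("correct horse", _salt))}
PERMISSIONS = {"alice": {"alice_account"}}   # objects each user may view


def authenticate(user, password):
    """Authentication: is this subject who they claim to be?"""
    record = USERS.get(user)
    if record is None:
        return False
    salt, stored = record
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(stored, hash_password(password, salt))


def authorized(user, obj):
    """Access control: may this already-identified subject view this object?"""
    return obj in PERMISSIONS.get(user, set())


if authenticate("alice", "correct horse"):
    print("own account:", authorized("alice", "alice_account"))
    print("neighbor's account:", authorized("alice", "neighbor_account"))
```

A successful login says nothing about the neighbor's account; that decision is made separately, on every request, by the access control check.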

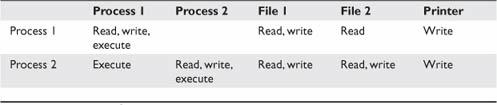

In computer systems and networks, access controls can be implemented in several ways. An access control matrix provides the simplest framework for illustrating the process and is shown in Table 1-1. In this matrix, the system is keeping track of two processes, two files, and one hardware device. Process 1 can read both File 1 and File 2 but can write only to File 1. Process 1 cannot access Process 2, but Process 2 can execute Process 1. Both processes have the ability to write to the printer.
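A matrix like this maps naturally onto a nested dictionary. The sketch below fills in only the entries stated in the paragraph above; every other cell is left empty, which is an assumption and may not match the full table. The function name `allowed` is hypothetical.

```python
# Rows are subjects, columns are objects, and each cell is a set of rights.
# Only the entries stated in the text are filled in; all other cells are
# treated as empty here, which may differ from the complete matrix.
MATRIX = {
    "Process 1": {
        "Process 2": set(),                 # Process 1 cannot access Process 2
        "File 1": {"read", "write"},
        "File 2": {"read"},
        "Printer": {"write"},
    },
    "Process 2": {
        "Process 1": {"execute"},
        "Printer": {"write"},
    },
}


def allowed(subject, obj, right):
    """Look up one cell of the matrix; a missing cell means no access."""
    return right in MATRIX.get(subject, {}).get(obj, set())


print(allowed("Process 1", "File 2", "read"))    # stated in the text
print(allowed("Process 1", "File 2", "write"))   # not granted
```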

While simple to understand, the access control matrix is seldom used in computer systems because it is extremely costly in terms of storage space and processing. Imagine the size of an access control matrix for a large network with hundreds of users and thousands of files. The actual mechanics of how access controls are implemented in a system vary, though access control lists (ACLs) are common. An ACL is nothing more than a list that contains the subjects that have access rights to a particular object. The list identifies not only the subject but the specific access granted to the subject for the object. Typical types of access include read, write, and execute as indicated in the example access control matrix.
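As a rough illustration, an ACL can be stored per object, listing only the subjects that actually hold rights. The structure and the `check_access` helper below are hypothetical, and the user and file counts in the size estimate are invented to match the "hundreds of users and thousands of files" scenario.

```python
# One ACL per object: each entry names a subject and the rights it holds.
ACLS = {
    "File 1": [("Process 1", {"read", "write"})],
    "File 2": [("Process 1", {"read"})],
    "Printer": [("Process 1", {"write"}), ("Process 2", {"write"})],
}


def check_access(subject, obj, right):
    """Scan the object's ACL for the subject; absence from the list means no access."""
    for entry_subject, rights in ACLS.get(obj, []):
        if entry_subject == subject:
            return right in rights
    return False


# Size comparison with invented figures: a full matrix needs one cell for
# every subject/object pair, whether or not any access is granted.
users, files = 500, 5_000
matrix_cells = users * files
acl_entries = sum(len(entries) for entries in ACLS.values())

print("matrix cells for the large network:", matrix_cells)
print("ACL entries in this small example:", acl_entries)
```

An ACL grows only with the number of grants, whereas the matrix grows with every subject and object added, granted or not.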

No matter what specific mechanism is used to implement access controls in a computer system or network, the controls should be based on a specific model of access. Several different models are discussed in security literature, including discretionary access control (DAC), mandatory access control (MAC), role-based access control (RBAC), and rule-based access control (also RBAC).

Table 1-1 An Access Control Matrix

Discretionary Access Control

Both discretionary access control and mandatory access control are terms originally used by the military to describe two different approaches to controlling an individual’s access to a system. As defined by the “Orange Book,” a Department of Defense document that at one time was the standard for describing what constituted a trusted computing system, DACs are “a means of restricting access to objects based on the identity of subjects and/or groups to which they belong. The controls are discretionary in the sense that a subject with a certain access permission is capable of passing that permission (perhaps indirectly) on to any other subject.” While this might appear to be confusing “government-speak,” the principle is rather simple. In systems that employ DACs, the owner of an object can decide which other subjects can have access to the object and what specific access they can have. One common method to accomplish this is the permission bits used in UNIX-based systems. The owner of a file can specify what permissions (read/write/execute) members in the same group can have and also what permissions all others can have. ACLs are also a common mechanism used to implement DAC.
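The UNIX permission bits mentioned above can be inspected and set from Python's standard `os` and `stat` modules. The sketch below assumes a POSIX system (the bits behave differently on Windows) and uses a scratch file so that the current user is the owner making the decision.

```python
import os
import stat
import tempfile

# Create a scratch file that the current user owns.
fd, path = tempfile.mkstemp()
os.close(fd)

# As owner, grant read/write to self, read-only to the group, nothing to others.
os.chmod(path, stat.S_IRUSR | stat.S_IWUSR | stat.S_IRGRP)   # equivalent to 0o640

mode = os.stat(path).st_mode
permission_string = stat.filemode(mode)       # same form that `ls -l` displays
group_can_read = bool(mode & stat.S_IRGRP)
others_can_read = bool(mode & stat.S_IROTH)

os.remove(path)
print(permission_string, group_can_read, others_can_read)
```

The discretionary part is the `chmod` call: the owner, not the system, chooses which rights the group and all others receive.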

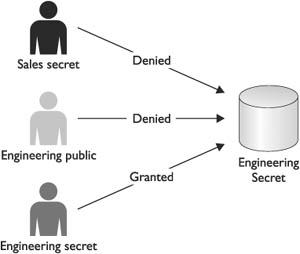

Mandatory Access Control

A less frequently employed system for restricting access is mandatory access control. This system, generally used only in environments in which different levels of security classifications exist, is much more restrictive regarding what a user is allowed to do. Referring to the “Orange Book,” a mandatory access control is “a means of restricting access to objects based on the sensitivity (as represented by a label) of the information contained in the objects and the formal authorization (i.e., clearance) of subjects to access information of such sensitivity.” In this case, the owner or subject can’t determine whether access is to be granted to another subject; it is the job of the operating system to decide.

In MAC, the security mechanism controls access to all objects, and individual subjects cannot change that access. The key here is the label attached to every subject and object. The label will identify the level of classification for that object and the level to which the subject is entitled. Think of military security classifications such as Secret and Top Secret. A file that has been identified as Top Secret (has a label indicating that it is Top Secret) may be viewed only by individuals with a Top Secret clearance. It is up to the access control mechanism to ensure that an individual with only a Secret clearance never gains access to a file labeled as Top Secret. Similarly, a user cleared for Top Secret access will not be allowed by the access control mechanism to change the classification of a file labeled as Top Secret to Secret or to send that Top Secret file to a user cleared only for Secret information. The complexity of such a mechanism can be further understood when you consider today’s windowing environment. The access control mechanism will not allow a user to cut a portion of a Top Secret document and paste it into a window containing a document with only a Secret label. It is this separation of differing levels of classified information that results in this sort of mechanism being referred to as multilevel security.
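The label comparisons described above can be sketched with an ordered enumeration. The level names follow the text; the functions `can_read` and `can_move` are hypothetical, and allowing reads of lower-labeled objects and moves to higher-labeled destinations is an assumption taken from the usual multilevel model rather than something the text states.

```python
from enum import IntEnum


class Level(IntEnum):
    """Ordered classification levels; a higher value is more sensitive."""
    UNCLASSIFIED = 0
    SECRET = 1
    TOP_SECRET = 2


def can_read(clearance, label):
    """A subject may read an object only if cleared at or above its label."""
    return clearance >= label


def can_move(source_label, destination_label):
    """Information may flow only to a destination labeled at or above its source.

    This covers relabeling a file, sending it to another user, and pasting
    between windows: each is a move from one label to another.
    """
    return destination_label >= source_label


print(can_read(Level.SECRET, Level.TOP_SECRET))    # Secret user, Top Secret file
print(can_move(Level.TOP_SECRET, Level.SECRET))    # paste Top Secret into Secret
```

Neither function takes the user's wishes as input, which is the point: the decision rests on labels that subjects cannot change.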

Finally, just because a subject has the appropriate level of clearance to view a document, that does not mean that she will be allowed to do so. The concept of “need to know,” which is a DAC concept, also exists in MAC mechanisms. “Need to know” means that a person is given access only to information that she needs in order to accomplish her job or mission.
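Combining clearance with "need to know" means both conditions must hold. A minimal sketch, assuming each document carries a topic tag and each user has a set of topics required for the job (the tags and the `may_view` helper are hypothetical):

```python
# Classification levels, ordered from least to most sensitive.
LEVELS = ["Unclassified", "Secret", "Top Secret"]


def may_view(clearance, need_to_know, doc_label, doc_topic):
    """Grant access only if the user is cleared AND the topic is needed for the job."""
    cleared = LEVELS.index(clearance) >= LEVELS.index(doc_label)
    return cleared and doc_topic in need_to_know


# A Top Secret clearance alone is not enough for an unrelated project.
print(may_view("Top Secret", {"logistics"}, "Top Secret", "logistics"))
print(may_view("Top Secret", {"logistics"}, "Secret", "weapons_design"))
```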

EXAM TIP If trying to remember the difference between MAC and DAC, just remember that MAC is associated with multilevel security.

Role-Based Access Control

ACLs can be cumbersome and can take time to administer properly. Another access control mechanism that has been attracting increased attention is role-based access control (RBAC). In this scheme, instead of each user being assigned specific access permissions for the objects associated with the computer system or network, each user is assigned a set of roles that he or she may perform. The roles are in turn assigned the access permissions necessary to perform the tasks associated with the role. Users will thus be granted permissions to objects in terms of the specific duties they must perform, not according to a security classification associated with individual objects.
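The two-step mapping (users to roles, roles to permissions) can be sketched with two small tables. The role names, user names, and objects below are invented for illustration.

```python
# Hypothetical role definitions: each role carries (object, action) permissions.
ROLE_PERMISSIONS = {
    "payroll_clerk": {("payroll_file", "read"), ("payroll_file", "write")},
    "auditor": {("payroll_file", "read"), ("audit_log", "read")},
}

# Hypothetical assignments: users receive roles, never permissions directly.
USER_ROLES = {
    "dana": {"payroll_clerk"},
    "lee": {"auditor"},
}


def permitted(user, obj, action):
    """A user may act on an object if any of the user's roles grants that action."""
    return any(
        (obj, action) in ROLE_PERMISSIONS.get(role, set())
        for role in USER_ROLES.get(user, set())
    )


print(permitted("dana", "payroll_file", "write"))
print(permitted("lee", "payroll_file", "write"))
```

When someone changes jobs, only the `USER_ROLES` entry changes; the permissions attached to each role stay put, which is what makes the scheme easier to administer than per-user ACLs.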

Rule-Based Access Control

The first thing that you might notice is the ambiguity introduced by this access control method also using the acronym RBAC. Rule-based access control again uses objects such as ACLs to help determine whether access should be granted or not. In this case, a series of rules is contained in the ACL, and the determination of whether to grant access is made based on these rules. An example of such a rule is one that states that no employee may have access to the payroll file after hours or on weekends. As with MAC, users are not allowed to change the access rules; only administrators can do so. Rule-based access control can actually be used in addition to or as a method of implementing other access control methods. For example, MAC methods can utilize a rule-based approach for implementation.
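The payroll rule mentioned above can be written as a small predicate on the time of the request. The business hours chosen here (09:00 to 17:00, Monday through Friday) are an assumption, since the text does not define "after hours"; the function name is hypothetical.

```python
from datetime import datetime, time

# Assumed business hours; the text does not specify them.
BUSINESS_START = time(9, 0)
BUSINESS_END = time(17, 0)


def payroll_access_allowed(when):
    """Rule: no access to the payroll file after hours or on weekends."""
    is_weekday = when.weekday() < 5                       # Monday=0 ... Friday=4
    in_hours = BUSINESS_START <= when.time() < BUSINESS_END
    return is_weekday and in_hours


print(payroll_access_allowed(datetime(2024, 1, 8, 10, 0)))   # a Monday morning
print(payroll_access_allowed(datetime(2024, 1, 6, 10, 0)))   # a Saturday morning
```

The rule depends only on the circumstances of the request, not on who is asking, which is why it can be layered on top of role-based or mandatory controls.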

EXAM TIP Do not become confused between rule-based and role-based access controls, even though they both have the same acronym. The name of each is descriptive of what it entails and will help you distinguish between them.

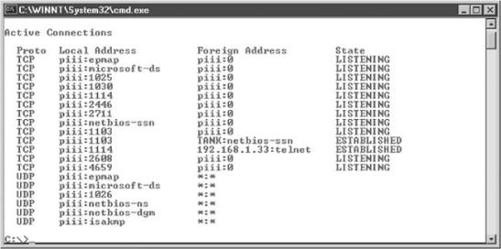

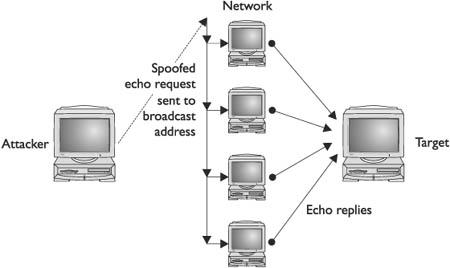

Authentication