ALSO BY TERRENCE W. DEACON

The Symbolic Species

Incomplete Nature

How Mind Emerged from Matter

Terrence W. Deacon

W. W. NORTON & COMPANY New York • London

To my parents, Bill and JoAnne Deacon (and “the Pirates”)

CONTENTS

Acknowledgments

The missing cipher

What matters?

Calculating with absence

A Zeno’s paradox of the mind

“As simple as possible, but not too simple”

A stone’s throw

What’s missing?

Denying the magic

Telos ex machina

Ex nihilo nihil fit

When less is more

The little man in my head

Homuncular representations

The vessel of teleology

Hiding final cause

Gods of the gaps

Preformation and epigenesis

Mentalese

Mind all the way down?

Elimination schemes

Heads of the Hydra

Dead truth

The ghost in the computer

The ballad of Deep Blue

Back to the future

The Law of Effect

Pseudopurpose

Blood, brains, and silicon

Fractions of life

The road not taken

Novelty

The evolution of emergence

Reductionism

The emergentists

A house of cards?

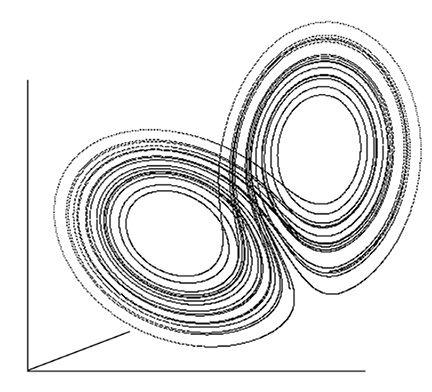

Complexity and “chaos”

Processes and parts

Habits

Redundancy

More similar = less different

Concrete abstraction

Nothing is irreducible

Why things change

A brief history of energy

Falling and forcing

Reframing thermodynamics

A formal cause of efficient causes?

Order from disorder

Self-simplification

Far-from-equilibrium thermodynamics

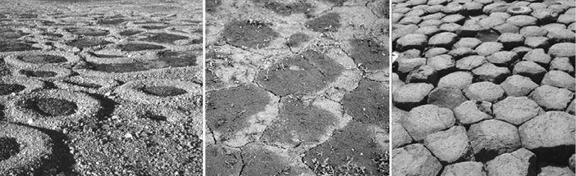

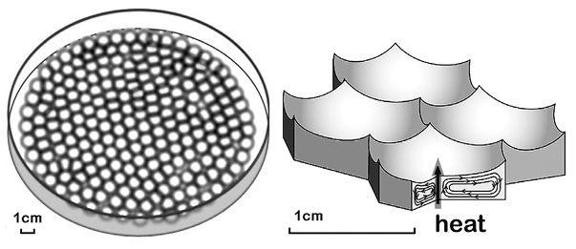

Rayleigh-Bénard convection: A case study

The diversity of morphodynamic processes

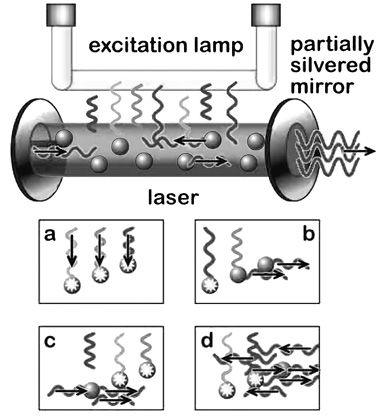

The exception that proves the rule

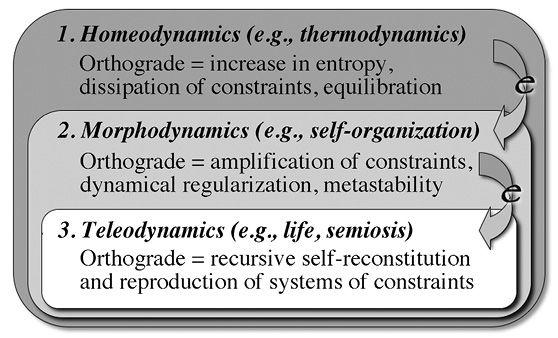

A common dynamical thread

Linked origins

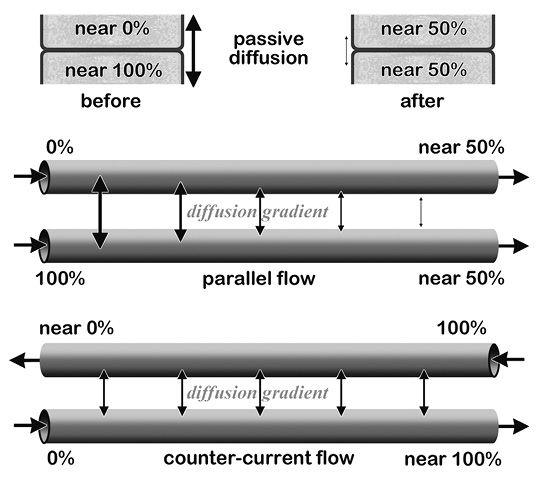

Compounded asymmetries

Self-reproducing mechanisms

What is life?

Frankencells

The threshold of function

Autocatalysis

Containment

Synergy

Autogens

Autogenic evolution

The ratchet of life

The emergence of teleodynamics

Forced to change

Effort

Against spontaneity

Transformation

Morphodynamic work

Teleodynamic work

Emergent causal powers

Missing the difference

Omissions, expectations, and absences

Two entropies

Information and reference

It takes work

Taming the demon

Aboutness matters

Beyond cybernetics

Working it out

Interpretation

Noise versus error

Darwinian information

From noise to signal

Information emerging

Representation

Natural elimination

“Life’s several powers”

Abiogenesis

The replicator’s new clothes

Autogenic interpretation

Energetics to genetics

Falling into complexity

Starting small

Individuation

Selves made of selves

Neuronal self

Self-differentiation

The locus of agency

Evolution’s answer to nominalism

The extensionless cogito

Missing the forest for the trees

Sentience versus intelligence

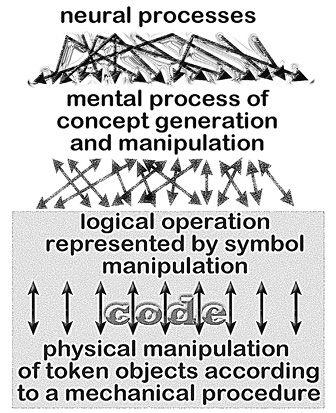

The complement to computation

Computing with meat

From organism to brain

The hierarchy of sentience

Emotion and energy

The thermodynamics of thought

Whence suffering?

Being here

Conclusion of the beginning

EPILOGUE

Nothing matters

The calculus of intentionality

Value

Glossary

Notes

References

Index

ACKNOWLEDGMENTS

If any of us can claim to see the world from a new vantage point it is because, as Isaac Newton once remarked, we are standing on the shoulders of giants. This is beyond question in my case, since each of the many threads that form the fabric of the theory presented in this book traces its origin to the life works of some of the greatest minds in history. But much more than this, it is often the case that it takes the convergence of like minds stubbornly questioning even the least dubious of our collective assumptions to shed the blinders that limit a particular conceptual paradigm. This is also true here. Few of the novel ideas explored here emerged from my personal reflections fully formed, and few even exhibited the rough-hewn form described in this book. These embryonic ideas were lucky to have been nourished by the truly dedicated and unselfish labors of a handful of brilliant, insightful, and questioning colleagues, who gathered in my living room, week after week, year after year, to question the assumptions, brainstorm about the right term for a new idea, or just struggle to grasp a concept that at every turn seemed to slip through our fingers. We were dubbed “Terry and the Pirates” (after the title of a postwar comic strip) because of our intense intellectual camaraderie and paradigm-challenging enterprise. This continuous thread of conversation has persisted over the course of nearly a decade and has helped to turn what was once nearly inconceivable into something merely counterintuitive.

The original Pirates include (in alphabetical order) Tyrone Cashman, Jamie Haag, Julie Hui, Eduardo Kohn, Jay Ogilvy, and Jeremy Sherman, with Ursula Goodenough and Michael Silberstein playing the role of periodic drop-ins from across the country. Most recently Alok Srivastava, Hajime Yamauchi, Drew Halley, and Dillon Niederhut have joined, as Jamie and Eduardo moved away to careers elsewhere in the country. Most have read through major portions of this manuscript and have offered useful comments and editorial feedback. Portions of the chapter on “Self” were re-edited from a paper co-written with Jay Ogilvy and Jamie Haag, and fragments of a number of chapters were influenced by papers co-authored either with Tyrone Cashman or with Jeremy Sherman. The close involvement of these many colleagues was demonstrated also in the long days that Ty Cashman, Jeremy Sherman, Julie Hui, and Hajime Yamauchi spent with me struggling through more than 700 pages of copy-editing queries and doing the necessary error checking that led to the final manuscript. Without the collective intelligence and work of this extended mind and body, it is difficult for me to imagine how these ideas would ever have seen the light of day, at least in a semi-readable form. In addition, I owe considerable thanks to the many colleagues around the country who have read bits of the manuscript and have offered their wise counsel. Ursula Goodenough, Michael Silberstein, Ambrose Nahas, and Don Favereau, in particular, read through early versions of various parts and provided extensive editorial feedback. Also my editor on The Symbolic Species, Hilary Hinzman, did extensive editorial work on the first four chapters, which therefore will be the most readable. These efforts have helped to greatly improve the presentation by cleaning up some of the oversights and confusions, and simplifying my sometimes tortured prose. Indeed, I have received far more editorial feedback than I have been able to take advantage of, given time and space.

So, it is only by the great good fortune of being in the right place and time in the history of ideas—heir to countless works of genius—and surrounded by a loyal band of fellow travelers that these ideas can be shared in a form that is even barely comprehensible. This loyal band of “Pirates” has been critical, supportive, patient, and insistent in just the right mix to make it happen. The book is a testament to the marvelous synergy of these many converging contributions, which have made this effort both a most exciting journey and a source of enduring friendships. Thank you all.

Finally, and most important, has been the constant unfaltering encouragement of my wife and best friend, Ella Ray, who has understood more than anyone else the limitations of my easily distracted mind and how to help me focus on the challenge of putting these ideas on paper, month after month, year after year.

Berkeley, September 2010

0

ABSENCE¹

In the history of culture, the discovery of zero will always stand out as one of the greatest single achievements of the human race.

—TOBIAS DANTZIG, 1930

THE MISSING CIPHER

Science has advanced to the point where we can precisely arrange individual atoms on a metal surface or identify people’s continents of ancestry by analyzing the DNA contained in their hair. And yet ironically we lack a scientific understanding of how sentences in a book refer to atoms, DNA, or anything at all. This is a serious problem. Basically, it means that our best science—that collection of theories that presumably come closest to explaining everything—does not include this one most fundamental defining characteristic of being you and me. In effect, our current “Theory of Everything” implies that we don’t exist, except as collections of atoms.

So what’s missing? Ironically and enigmatically, something missing is missing.

Consider the following familiar facts. The meaning of a sentence is not the squiggles used to represent letters on a piece of paper or a screen. It is not the sounds these squiggles might prompt you to utter. It is not even the buzz of neuronal events that take place in your brain as you read them. What a sentence means, and what it refers to, lack the properties that something typically needs in order to make a difference in the world. The information conveyed by this sentence has no mass, no momentum, no electric charge, no solidity, and no clear extension in the space within you, around you, or anywhere. More troublesome than this, the sentences you are reading right now could be nonsense, in which case there isn’t anything in the world that they could correspond to. But even this property of being a pretender to significance will make a physical difference in the world if it somehow influences how you might think or act.

Obviously, despite this something not-present that characterizes the contents of my thoughts and the meaning of these words, I wrote them because of the meanings that they might convey. And this is presumably why you are focusing your eyes on them, and what might prompt you to expend a bit of mental effort to make sense of them. In other words, the content of this or any sentence—a something-that-is-not-a-thing—has physical consequences. But how?

Meaning isn’t the only thing that presents a problem of this sort. Several other everyday relationships share this problematic feature as well. The function of a shovel isn’t the shovel and isn’t a hole in the ground, but rather the potential it affords for making holes easier to create. The reference of the wave of a hand in greeting is not the hand movement, nor the physical convergence of friends, but the initiation of a possible sharing of thoughts and remembered experiences. The purpose of my writing this book is not the tapping of computer keys, nor the deposit of ink on paper, nor even the production and distribution of a great many replicas of a physical book, but to share something that isn’t embodied by any of these physical processes and objects: ideas. And curiously, it is precisely because these ideas lack these physical attributes that they can be shared with tens of thousands of readers without ever being depleted. Even more enigmatically, ascertaining the value of this enterprise is nearly impossible to link with any specific physical consequence. It is something almost entirely virtual: maybe nothing more than making certain ideas easier to conceive, or, if my suspicions prove correct, increasing one’s sense of belonging in the universe.

Each of these sorts of phenomena—a function, reference, purpose, or value—is in some way incomplete. There is something not-there there. Without this “something” missing, they would just be plain and simple physical objects or events, lacking these otherwise curious attributes. Longing, desire, passion, appetite, mourning, loss, aspiration—all are based on an analogous intrinsic incompleteness, an integral without-ness.

As I reflect on this odd state of things, I am struck by the fact that there is no single term that seems to refer to this elusive character of such things. So, at the risk of initiating this discussion with a clumsy neologism, I will refer to this as an absential² feature, to denote phenomena whose existence is determined with respect to an essential absence. This could be a state of things not yet realized, a specific separate object of a representation, a general type of property that may or may not exist, an abstract quality, an experience, and so forth—just not that which is actually present. This paradoxical intrinsic quality of existing with respect to something missing, separate, and possibly nonexistent is irrelevant when it comes to inanimate things, but it is a defining property of life and mind. A complete theory of the world that includes us, and our experience of the world, must make sense of the way that we are shaped by and emerge from such specific absences. What is absent matters, and yet our current understanding of the physical universe suggests that it should not. A causal role for absence seems to be absent from the natural sciences.

WHAT MATTERS?

Modern science is of course interested in explaining things that are materially and energetically present. We are interested in how physical objects behave under all manner of circumstances, what sorts of objects they are in turn composed of, and how the physical properties expressed in things at one moment influence what will happen at later moments. This includes even phenomena (I hesitate to call them objects or events) as strange and as hard to get a clear sense of as the quantum processes occurring at the unimaginably small subatomic scale. But even though quantum phenomena are often described in terms of possible physical properties not yet actualized, they are physically present in some as-yet-unspecified sense, and not absent, or represented. A purpose not yet actualized, a quality of feeling, a functional value just discovered—these are not just superimposed probable physical relationships. They are each an intrinsically absent aspect of something present.

The scientific focus on things present and actualized also helps to explain why, historically, scientific accounts have endured an uneasy co-existence with absential accounts of why things transpire as they do. This is exemplified by the relationship that each has with the notion of order. Left alone, an arrangement of a set of inanimate objects will naturally tend to fall into disorder, but we humans have a preference for certain arrangements, as do many species. Many functions and purposes are determined with respect to preferred arrangements, whether this is the arrangement of words in sentences or twigs in a bird’s nest. But things tend not to be regularly organized (i.e., tend to be disordered). Both thermodynamics and common sense predict that things will only get less ordered on their own. So when we happen to encounter well-ordered phenomena, or observe changes that invert what should happen naturally, we tend to invoke absential influences, like human design or divine intervention, to explain them. From the dawn of recorded history the regularity of celestial processes, the apparently exquisite design of animal and plant bodies, and causes of apparently meaningful coincidences have been attributed to supernatural mentalistic causes, whether embodied by invisible demons, an all-powerful divine artificer, or some other transcendental purposiveness. Not surprisingly, these influences were imagined to originate from disembodied sources, lacking any physical form.

However, when mechanistic accounts of inorganic phenomena as mysterious as heat, chemical reactions, and magnetism began to ascend to the status of precisely formalized science in the late nineteenth century, absential accounts of all kinds came into question. So when in 1859 Charles Darwin provided an account of a process—natural selection—that could account for the remarkable functional correspondence of species’ traits to the conditions of their existence, even the special order of living design seemed to succumb to a non-absential account. The success of mechanistically accounting for phenomena once considered only explainable in mentalistic terms reached a zenith in the latter half of the twentieth century with the study of so-called self-organizing inorganic processes. As processes as common as snow crystal formation and regularized heat convection began to be seen as natural parallels to such unexpected phenomena as superconductivity and laser light generation, it became even more common to hear of absential accounts described as historical anachronisms and illusions of a prescientific era. Many scholars now believe that developing a science capable of accurately characterizing complex self-organizing phenomena will be sufficient to finally describe organic and mental relationships in entirely non-absential terms.

I agree that a sophisticated understanding of Darwinian processes, coupled with insights from complex system dynamics, has led to enormous advances in our understanding of the orderliness observed in living, neuronal, and even social processes. The argument of this book will, indeed, rely heavily on this body of work to supply critical stepping stones on the way to a complete theory. However, I will argue that this approach can only provide intermediate steps in this multistep analysis. Dynamical systems theories are ultimately forced to explain away the end-directed and normative characteristics of organisms, because they implicitly assume that all causally relevant phenomena must be instantiated by some material substrate or energetic difference. Consequently, they are as limited in their power to deal with the representational and experiential features of mind as are simple mechanistic accounts. From either perspective, absential features must, by definition, be treated as epiphenomenal glosses that need to be reduced to specific physical substrates or else excluded from the analysis. The realm that includes what is merely represented, what-might-be, what-could-have-been, what-it-feels-like, or is-good-for, presumably can be of no physical relevance.

Beginning in the 1980s, it was becoming clear to some scholars that dynamical systems and evolutionary approaches to life and mind would fall short of this claim to universality. Because of their necessary grounding in what is physically here and now, they would not be able to escape this implicit dualism. Researchers who had been strongly influenced by systems thinking—like Gregory Bateson, Heinz von Foerster, Humberto Maturana, and Francisco Varela (to name only a few)—began to articulate this problem, and struggled with various attempts to augment systems thinking in ways that might be able to reintegrate the purposiveness of living processes and the experiential component of mental processes back into the theory. But the metaphysical problem of reintegrating purposiveness and subjectivity into theories of physical processes led many thinkers to propose a kind of forced marriage of convenience between mental and physical modes of explanation. For example, Heinz von Foerster in 1984 argued that a total theory would need to include, not exclude, the act of observation. From a related theoretical framework, Maturana and Varela in 1980 developed the concept of autopoiesis (literally, “self-creating”) to describe the core self-referential dynamics of both life and mind that constitute an observational perspective. But in their effort to make the autonomous observer-self a fundamental element of the natural sciences, the origin of this self-creative dynamic is merely taken for granted, taken as a fundamental axiom. The theory thereby avoids the challenges posed by phenomena whose existence is determined with respect to something displaced, absent, or not yet actualized, because these are defined in internalized self-referential form. Information, in this view, is not about something; it is a formal relationship that is co-created both inside and outside this autopoietic closure. Absential phenomena just don’t seem to be compatible with the explanatory strictures of contemporary science, and so it is not surprising for many to conclude that only a sort of preestablished harmony between inside and outside perspectives, absential and physical accounts, can be achieved.

So, although the problem is ancient, and the weaknesses of contemporary methodologies have been acknowledged, there is no balanced resolution. For the most part, the mental half of any explanation is discounted as merely heuristic, and likely illusory, in the natural sciences. And even the most sophisticated efforts to integrate physical theories able to account for spontaneous order with theories of mental causality end up positing a sort of methodological dualism. Simply asserting this necessary unity—that an observing subject must be a physical system with a self-referential character—avoids the implicit absurdity of denying absential phenomena, and yet it defines them out of existence. We seem to still be living in the shadow of Descartes.

This persistent dualism is perhaps made most evident by the recent flurry of interest in the problem of consciousness, and the often extreme theoretical views concerning its nature and scientific status that have been proposed—everything from locating some hint of it in all material processes to denying that it exists at all. The problem with consciousness, like all other phenomena exhibiting an absential character, is that it doesn’t appear to have clear physical correlates, even though it is quite unambiguously associated with having an awake, functioning brain. Materialism, the view that there are only material things and their interactions in the world, seems impotent here. Even major advances in neuroscience may leave the mystery untouched. As the philosopher David Chalmers sees it:

For any physical process we specify there will be an unanswered question: Why should this process give rise to experience? Given any such process, it is conceptually coherent that it could be instantiated in the absence of experience. It follows that no mere account of the physical process will tell us why experience arises. The emergence of experience goes beyond what can be derived from physical theory.³

What could it mean that consciousness cannot be derived from any physical theory? Chalmers argues that we just need to face up to the fact that consciousness is non-physical and yet also not transcendent, in the sense of an ephemeral eternal soul. As one option, Chalmers champions the view that consciousness may be a property of the world that is as fundamental to the universe as electric charge or gravitational mass. He is willing to entertain this possibility because he believes that there is no way to reduce experiential qualities to physical processes. Consciousness is always a residual phenomenon remaining unaccounted for after all correlated physical processes are described. So, for example, although we can explain how a device might be built to distinguish red light from green light—and can even explain how retinal cells accomplish this—this account provides no purchase in explaining why red light looks red.

But does accepting this anti-materialist claim about consciousness require that there must be fundamental physical properties yet to be discovered? In this book I advocate a less dramatic, though perhaps more counterintuitive approach. It’s not that the difficulty of locating consciousness among the neural signaling forces us to look for it in something else—that is, in some other sort of special substrate or ineffable ether or extra-physical realm. The anti-materialist claim is compatible with another, quite materially grounded approach. Like meanings and purposes, consciousness may not be something there in any typical sense of being materially or energetically embodied, and yet may still be materially causally relevant.

The unnoticed option is that, here too, we are dealing with a phenomenon that is defined by its absential character, though in a rather more all-encompassing and unavoidable form. Conscious experience confronts us with a variant of the same problem that we face with respect to function, meaning, or value. None of these phenomena are materially present either and yet they matter, so to speak. In each of these cases, there is something present that marks this curious intrinsic relation to something absent. In the case of consciousness, what is present is an awake, functioning brain, buzzing with trillions of signaling processes each second. But there is an additional issue with consciousness that makes it particularly insistent, in a way that these other absential relations aren’t: that which is explicitly absent is me.

CALCULATING WITH ABSENCE

The difficulty we face when dealing with absences that matter has a striking historical parallel: the problems posed by the concept of zero. As the epigraph for this chapter proclaims, one of the greatest advances in the history of mathematics was the discovery of zero. A symbol designating the lack of quantity was not merely important because of the convenience it offered for notating large quantities. It transformed the very concept of number and revolutionized the process of calculation. In many ways, the discovery of the usefulness of zero marks the dawn of modern mathematics. But as many historians have noted, zero was at times feared, banned, shunned, and worshiped during the millennia-long history that preceded its acceptance in the West. And despite the fact that it is a cornerstone of mathematics and a critical building block of modern science, it remains problematic, as every child studying the operation of division soon learns.

A convention for marking the absence of numerical value was a late development in the number systems of the world. It appears to have originated as a way of notating the state of an abacus⁴ when a given line of beads is left unmoved in a computation. But it literally took millennia for marking the null value to become a regular part of mathematics in the West. When it did, everything changed. Suddenly, representing very large numbers no longer required coming up with new symbols or writing unwieldy long strings of symbols. Regular procedures, algorithms, could be devised for adding, subtracting, multiplying, and dividing. Quantity could be understood in both positive and negative terms, thus defining a number line. Equations could represent geometric objects and vice versa—and much more. After centuries of denying the legitimacy of the concept—assuming that to incorporate it into reasoning about things would be a corrupting influence, and seeing its contrary properties as reasons for excluding it from quantitative analysis—European scholars eventually realized that these notions were unfortunate prejudices. In many respects, zero can be thought of as the midwife of modern science. Until Western scholars were able to make sense of the systematic properties of this non-quantity, understanding many of the most common properties of the physical world remained beyond their reach.

What zero has in common with living and mental phenomena is that these natural processes also each owe their most fundamental character to what is specifically not present. They are also, in effect, the physical tokens of this absence. Functions and meanings are explicitly entangled with something that is not intrinsic to the artifacts or signs that constitute them. Experiences and values seem to inhere in physical relationships but are not there at the same time. This something-not-there permeates and organizes what is physically present in these phenomena. Its absent mode of existence, so to speak, is at most only a potentiality, a placeholder.

Zero is the paradigm exemplar of such a placeholder. It marks the columnar position where the quantities 1 through 9 can potentially be inserted in the recursive pattern that is our common decimal notation (e.g., the tens, hundreds, thousands columns), but it itself does not signify a quantity. Analogously, the hemoglobin molecules in my blood are also placeholders for something they are not: oxygen. Hemoglobin is exquisitely shaped in the negative image of this molecule’s properties, like a mold in clay, and at the same time reflects the demands of the living system that gives rise to it. It only holds the oxygen molecule tightly enough to carry it through the circulation, where it gives it up to other tissues. It exists and exhibits these properties because it mediates a relationship between oxygen and the metabolism of an animal body. Similarly, a written word is also a placeholder. It is a pointer to a space in a network of meanings, each also pointing to one another and to potential features of the world. But a meaning is something virtual and potential. Though a meaning is more familiar to us than a hemoglobin molecule, the scientific account of concepts like function and meaning essentially lags centuries behind the sciences of these more tangible phenomena. We are, in this respect, a bit like our medieval forbears, who were quite familiar with the concepts of absence, emptiness, and so on, but could not imagine how the representation of absence could be incorporated into operations involving the quantities of things present. We take meanings and purposes for granted in our everyday lives, and yet we have been unable to incorporate these into the framework of the natural sciences. We seem only willing to admit that which is materially present into the sciences of things living and mental.

For medieval mathematicians, zero was the devil’s number. The unnatural way it behaved with respect to other numbers when incorporated into calculations suggested that it could be dangerous. Even today schoolchildren are warned of the dangers of dividing by zero. Do this and you can show that 1 = 2 or that all numbers are equal.⁵ In contemporary neuroscience, molecular biology, and dynamical systems theory approaches to life and mind, there is an analogous assumption about concepts like representation and purposiveness. Many of the most respected researchers in these fields have decided that these concepts are not even helpful heuristics. It is not uncommon to hear quite explicit injunctions against their use to describe organism properties or cognitive operations. The almost universal assumption is that modern computational and dynamical approaches to these subjects have made these concepts as anachronistic as phlogiston.⁶
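The schoolroom warning about dividing by zero can be made concrete with one familiar version of the fallacy. The derivation below is only an illustrative sketch: the symbols a and b are assumptions introduced here, not taken from the text or its notes, and other versions of the argument exist. Every step is legitimate except the one that cancels the factor (a − b), which is zero by hypothesis.

```latex
\begin{align*}
a &= b, \qquad b \neq 0 \\
a^2 &= ab \\
a^2 - b^2 &= ab - b^2 \\
(a + b)(a - b) &= b\,(a - b) \\
a + b &= b \qquad \text{(invalid: divides both sides by } a - b = 0\text{)} \\
2b &= b \\
2 &= 1
\end{align*}
```

Because the cancelled factor is zero, the fourth line says only that 0 = 0, and nothing about a + b and b follows from it.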

So the idea of allowing the potentially achievable consequence characterizing a function, a reference, or an intended goal to play a causal role in our explanations of physical change has become anathema for science. A potential purpose or meaning must either be reducible to a merely physical parameter identified within the phenomenon in question, or else it must be treated as a useful fiction only allowed into discussion as a shorthand appeal to folk psychology for the sake of non-technical communication. Centuries of battling against explanations based on superstition, magic, supernatural beings, and divine purpose have trained us to be highly suspicious of any mention of such intentional and teleological properties, where things are explained as existing “for-the-sake-of” something else. These phenomena can’t be what they seem. Besides, assuming that they are what they seem will almost certainly lead to absurdities as problematic as dividing by zero.

Nevertheless, learning how to operate with zero, despite the fact that it violated principles that hold for all other numbers, opened up a vast new repertoire of analytic possibilities. Mysteries that seemed logically necessary and yet obviously false not only became tractable but provided hints leading to powerful and currently indispensable tools of scientific analysis: in other words, calculus.

Consider the famous Zeno’s paradox, which was framed in terms of a race between swift Achilles and a tortoise, which was given a slight head start. Zeno argued that moving any distance involved moving through an infinite series of fractions of that distance (1/2, 1/4, 1/8, 1/16 of the distance, and so on). Because of the infinite number of these fractions, Achilles could apparently never traverse them all and so would never reach the finish line. Worse yet, it appeared that Achilles could never even overtake the tortoise, because every time he reached that fraction of the distance to where the tortoise had just been, the tortoise would have moved just a bit further.

To resolve this paradox, mathematicians had to figure out how to deal with infinitely many divisions of space and time and infinitely small distances and durations. The link with calculus is that differentiation and integration (the two basic operations of calculus) represent and exploit the fact that many infinite series of mathematical operations converge to a finite solution. This is the case with Zeno’s problem. Thus, running at constant speed, Achilles might cover half the distance to the finish line in 20 seconds, then the next quarter of the distance in 10 seconds, then the next smaller fraction of the distance in a correspondingly shorter span of time, and so forth, with each microscopically smaller fraction of the distance taking smaller and smaller fractions of a second to cover. The result is that the total distance can still be covered in a finite time. Taking this convergent feature into account, the operation of differentiation used in calculus allows us to measure instantaneous velocities, accelerations, and so forth, even though effectively the distance traveled in that instant is zero.
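As a worked illustration of the convergence just described, the running example can be written as a geometric series. The symbols $D$ (total distance to the finish line), $T$ (total time), and $v$ (Achilles’ constant speed) are introduced here only for convenience and are assumptions of this sketch, not a formal treatment.

```latex
% Requires amsmath (align*, \text).
% Assumed notation: D = total distance, T = total time, v = constant speed.
% Distances covered in successive stages: D/2, D/4, D/8, ...
% Times taken for those stages: 20 s, 10 s, 5 s, ...
\begin{align*}
\sum_{n=1}^{\infty} \frac{D}{2^{n}} &= D, \\
T = \sum_{n=1}^{\infty} \frac{40}{2^{n}}\ \text{s} &= 20 + 10 + 5 + \cdots = 40\ \text{s}, \\
v = \lim_{\Delta t \to 0} \frac{\Delta x}{\Delta t} &= \frac{D/2}{20\ \text{s}} = \frac{D}{40\ \text{s}}.
\end{align*}
```

Under these assumptions, the infinitely many stages sum to a finite 40 seconds, and the ratio $\Delta x / \Delta t$ stays fixed at $D/40$ per second even as both the interval and the distance covered in it shrink toward zero.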

A ZENO’S PARADOX OF THE MIND

I believe that we have been under the spell of a sort of Zeno’s paradox of the mind. Like the ancient mathematicians confused by the behavior of zero, and unwilling to countenance incorporating it into their calculations, we seem baffled by the fact that absent referents, unrealized ends, and abstract values have definite physical consequences, despite their apparently null physicality. As a result, we have excluded these relations from playing constitutive roles in the natural sciences. So, despite the obvious and unquestioned role played by functions, purposes, meanings, and values in the organization of our bodies and minds, and in the changes taking place in the world around us, our scientific theories still have to officially deny them anything but a sort of heuristic legitimacy. This has contributed to many tortured theoretical tricks and contorted rhetorical maneuvers in order either to obscure this deep inconsistency or else to claim that it must forever remain beyond the reach of science. We will explore some of the awkward responses to this dilemma in the chapters that follow.

More serious, however, is the way this has divided the natural sciences from the human sciences, and both from the humanities. In the process, it has also alienated the world of scientific knowledge from the world of human experience and values. If the most fundamental features of human experience are considered somehow illusory and irrelevant to the physical goings-on of the world, then we, along with our aspirations and values, are effectively rendered unreal as well. No wonder the all-pervasive success of the sciences in the last century has been paralleled by a rebirth of fundamentalist faith and a deep distrust of the secular determination of human values.

The inability to integrate these many species of absence-based causality into our scientific methodologies has not just seriously handicapped us, it has effectively left a vast fraction of the world orphaned from theories that are presumed to apply to everything. The very care that has been necessary to systematically exclude these sorts of explanations from undermining our causal analyses of physical, chemical, and biological phenomena has also stymied our efforts to penetrate beyond the descriptive surface of the phenomena of life and mind. Indeed, what might be described as the two most challenging scientific mysteries of the age—explaining the origin of life and explaining the nature of conscious experience—both are held hostage by this presumed incompatibility. Recognizing this contemporary parallel to the unwitting self-imposed handicap that limited the mathematics of the Middle Ages is, I believe, a first step toward removing this impasse. It is time that we learned how to integrate the phenomena that define our very existence into the realm of the physical and biological sciences.

Of course, it is not enough to merely recognize this analogous situation. Ultimately, we need to identify the principles by which these unruly absential phenomena can be successfully woven into the exacting warp and weft of the natural sciences. It took centuries and the lifetime efforts of some of the most brilliant minds in history to eventually tame the troublesome non-number: zero. But it wasn’t until the rules for operating with zero were finally precisely articulated that the way was cleared for the development of the physical sciences. Likewise, as long as we remain unable to explain how these curious relationships between what-is-not-there and what-is-there make a difference in the world, we will remain blind to the possibilities of a vast new realm of knowledge. I envision a time in the near future when these blinders will finally be removed, a door will open between our currently incompatible cultures of knowledge, the physical and the meaningful, and a house divided will become one.

“AS SIMPLE AS POSSIBLE, BUT NOT TOO SIMPLE”

In this book I propose a modest first step toward the goal of unifying these long-isolated and apparently incompatible ways of conceptualizing the world, and our place within it. I am quite aware that in articulating these thoughts, I am risking a kind of scientific heresy. Almost certainly, many first reactions will be dismissive: “Haven’t such ideas been long relegated to the trash heap of history?” . . . “Absence as a causal influence? Poetry, not science” . . . “Mystical nonsense.” Even suggesting that there is such a gaping blind spot in our current vision of the world is like claiming that the emperor has no clothes. But worse than being labeled a heretic is being considered too uninformed and self-assured to recognize one’s own blindness. Challenging something so basic, so well accepted, so seemingly obviously true is more often than not the mark of a crackpot or an uninformed and misguided dilettante. Proposing such a radical rethinking of these foundational assumptions of science, then, inevitably risks exposing one’s hubris. Who can honestly claim to have a sufficient grasp of the many technical fields that are relevant to making such a claim? In pursuing this challenge, I expect to make more than a few technical gaffes and leave a few serious explanatory gaps.

But if the cracks in the foundation were obvious, if the intellectual issues posed could be challenged without risk, if the technical details could be easily mastered, then the attempt would long ago have been rendered trivial. That issues involving absential phenomena still mark uncrossable boundaries between disciplines, that the most enduring scientific mysteries appear to be centered around them, and that both academic and cultural upheavals still erupt over discussions of these issues, all indicate that this is far from being a matter long ago settled and relegated to the dustbin of scientific history. Developing formal tools capable of integrating this missing cipher, absential influence, into the fabric of the natural sciences is an enterprise that should be at the center of scientific and philosophical debate. We should be prepared for it to take many decades of work by the most brilliant minds of the current century to turn these intuitions into precise scientific tools. But the process can’t begin until we are willing to take the risk of stepping outside of the cul-de-sac of current assumptions, to try to determine where we took a slightly wrong turn.

The present exclusion of these absence-based relationships from playing any legitimate role in our theories of how the world works has implicitly denied our very existence. Is it any wonder, then, that scientific knowledge is viewed with distrust by many, as an enemy of human values, the handmaid of cynical secularism, and a harbinger of nihilism? The intolerability of this alienating worldview and the absurdity of its assumptions should be enough to warrant an exploration of the seemingly crazy idea that something not immediately present can actually be an important source of physical influence in the world. This means that if we are able to make sense of absential relationships, it won’t merely illuminate certain everyday mysteries. If the example of zero is any hint, even just glimpsing the outlines of a systematic way to integrate these phenomena into the natural sciences could light the path to whole new fields of inquiry. And making scientific sense of these most personal of nature’s properties, without trashing them, has the potential to transform the way we personally see ourselves within the scheme of things.

The title of this section is Albert Einstein’s oft-quoted rule of thumb for scientific theorizing. “As simple as possible, but not too simple” characterizes my view of the problem. In our efforts to explain the workings of the world with the fewest possible basic assumptions, we have settled on a framework that is currently too simple to incorporate that part of the world that is sentient, conscious, and evaluative. The challenge is to determine in what way our foundational concepts are too simple and what minimally needs to be done to complicate them just enough to reincorporate us.

Einstein’s admonition is also a recipe for clear thinking and good communication. This book is not just addressed to future physicists or biologists, or even philosophers of science. The subject it addresses has exceedingly wide relevance, and so this effort to probe into its mysteries deserves to be accessible to anyone with the willingness to entertain the challenging and counterintuitive ideas it explores. As a result, I have attempted to the best of my abilities to make it accessible to anyone whose intellectual curiosity has led them into this labyrinth of scientific and philosophical mysteries. I operate on the principle that if I can’t explain an idea to any well-educated reader, with a minimum of technical paraphernalia, then I probably don’t thoroughly understand it myself.

So, in order to reach a broad audience, and because it is the best guarantee of my own clarity of understanding, I have tried to present the entire argument in purely qualitative terms, even though this risks sacrificing rigor. I have minimized technical jargon and have not included any mathematical formalization of the principles and relationships that I describe. In most cases, I have tried to describe the relevant mechanisms and principles in ways that assume a minimum of prior knowledge on the part of the reader, and by employing examples that are as basic as I can imagine, but that still convey the critical logic behind the argument. This may make some accounts appear overly simplified and pedantic to technical readers, but I hope that the clarity gained will outweigh the time spent working cautiously step-by-step through familiar examples in order to get to concepts that are more counterintuitive and challenging. I will admit from the start that I have felt compelled to coin a few neologisms to designate some of the concepts for which I could find no commonly recognized terms. But wherever I felt that non-technical terminology would be adequate, even at the risk of dragging along irrelevant theoretical baggage, I have resisted the tendency to use specialized terminology. I have included a glossary that defines the few neologisms and technical terms that are sprinkled throughout the text.

This book is basically organized into three parts: articulating the problem, outlining an alternative theory, and exploring its implications. In chapters 1 to 5, I show that the conceptual riddles posed by absential phenomena have not been dealt with, despite claims that they have been overcome, but rather have been swept under the rug in various ingenious ways. By critiquing the history of efforts to explain them or explain them away, I argue that our various efforts have only served to insinuate these difficulties more cryptically into our current scientific and humanistic paradigms. In chapters 6 to 10, I outline an alternative approach: a theory of emergent dynamics that shows how dynamical processes can become organized around and with respect to possibilities not realized. This is intended to provide the scaffolding for a conceptual bridge from mechanistic relationships to end-directed, informational, and normative relationships such as are found in simple life forms. In chapters 11 to 17, having laid the groundwork for an expanded science capable of encompassing these basic absential relations, I explore a few of the implications for reformulating theories of work, information, evolution, self, sentience, and value. This is a vast territory, and in these final chapters I only intend to hint at the ways that this figure/background shift in perspective changes how these fundamental concepts need to be understood. Each of these chapters frames new theoretical and practical approaches to these presumably familiar topics, and each could easily be expanded into a major book in order to do justice to these complex questions.

I consider this work to be only a first hesitant effort to map an unfamiliar and poorly explored domain. I can’t even promise to have mastered the few steps I have taken into this strange territory. It is a realm in which unquestioned intuitions are seldom reliable guides, and where even the everyday terminology that we use to understand the world can insinuate misleading assumptions. Moreover, many of the scientific ideas that need to be addressed are outside my technical training and at the edge of my grasp. In those cases where I offer my best guesses, I hope that I have at least stated this still embryonic understanding with sufficient detail and clarity to enable those with better tools and training to clear away some of the ambiguities and confusions that I leave behind.

But although I cannot pretend to have fashioned a precise calculus of this physical causal analogue to operating with zero, I believe that I can demonstrate how a form of causality dependent on specifically absent features and unrealized potentials can be compatible with our best science. I believe that this can be done without compromising either the rigor of our scientific tools or the special character of these enigmatic phenomena. I hope that by revealing the glaring presence of this fundamental incompleteness of nature, it will become impossible to ignore it any longer. So, if I can coax you to consider this apparently crazy idea—even if at first only as an intellectual diversion—I feel confident that you too will begin to glimpse the qualitative outlines of a future science that is subtle enough to include us, and our enigmatically incomplete nature, as legitimate forms of knotting in the fabric of the universe.

1

(W)HOLES

Thirty spokes converge at the wheel’s hub, to a hole that allows it to turn.

Clay is shaped into a vessel, to enclose an emptiness that can be filled.

Doors and windows are cut into walls, to provide access to their protection.

Though we can only work with what is there, use comes from what is not there.

—LAO TSU¹

A STONE’S THROW

A beach stone may be moved from place to place through the action of waves, currents, and tides. Or it may be moved thanks to the arm muscles of a boy trying to skip it over the surface of the water. In either case, physical forces acting on the mass of the stone cause it to move. Of course the waves that move beach stones owe their own movement to the winds that perturb them, the winds arise from convection processes induced by the energy of sunlight, and so on, in an endless series of forces affecting forces back through time. As for a child’s muscle movements, they too can be analyzed in terms of forces affecting forces back through time. But where should that account be focused? With the oxidative metabolism of glucose and glycogen that provides the energy to contract muscles and move the arm that launches the otherwise inert stone? With the carbohydrates eaten a few hours earlier? Or with the sunlight that nourished the plants from which that food was made? One could even consider things at a more microscopic scale, such as the molecular processes causing the contraction of the child’s muscles: for example, the energy imparted by adenosine triphosphate (ATP) molecules that causes the actin and myosin molecules in each muscle fiber to slide against one another and contract that fiber; the shifts in ionic potential propagated down millions of nerve fibers that cause acetylcholine release at neuromuscular synapses and initiate this chemical process; and the patterns of neuronal firing in the cerebral cortex that organize and initiate the propagation of these signals. We might even need to include the evolutionary history that gave rise to the hands and arms and brains capable of such interactions. Even so, the full causal history of this process leaves out what is arguably the most important fact.

What about the role of the child’s mental conception of what this stone might look like skipped over the waves, his knowledge of how one should hold and throw it to achieve this intriguing result, or his fascination with the sight of a stone resisting its natural tendency to sink in water—if only for a few seconds? These certainly involve the physical-chemical mechanisms of the child’s brain; but mental experience and agency are not exactly neural firing patterns, nor brain states. Neither are they phenomena occurring outside the child’s brain, and clearly not other physical objects or events in any obvious sense. These neural activities are in some way about stone-skipping, and are crucial to the initiation of this activity. But they are not in themselves either past or future stones dancing across the water; they are more like the words on this page. Both these words and the mental images in the boy’s mind provide access to something these things are not. They are both representations of something not-quite-realized and not-quite-actual. And yet these bits of virtual reality—the contents of these representations—surely are as critical to events that will likely follow as the energy that will be expended. Something critical will be missing from the explanation of the skipped stone’s subsequent improbable trajectory if this absential feature is ignored.

Something very different from a stone’s shifting position under the influence of the waves has become involved in a child’s throwing it—something far more indirect, even if no less physical. Indeed, within a few minutes, this same boy might cause a dozen stones to dance across the surface of the water along this one stretch of beach. In contrast, prior to the evolution of humans, the probability that any stone on any beach on Earth might exhibit this behavior was astronomically minute. This difference exemplifies a wide chasm separating the domains in which two almost diametrically opposed modes of causality rule—two worlds that are nevertheless united in the hurtling of this small spinning projectile.

This exemplifies only one among billions of unprecedented and inconceivably large improbabilities associated with the presence of our species. We could just as easily have made the same point by describing a modern technological artifact, like the computer that I type on to write these sentences. This device was fashioned from materials gathered from all parts of the globe, each made unnaturally pure, and combined with other precisely purified and shaped materials in just the right way so that it could control the flow of electrons from region to region within its vast maze of metallic channels. No non-cognitive spontaneous physical process anywhere in the universe could have produced such a vastly improbable combination of materials, much less millions of nearly identical replicas within just a few short years of one another. These sorts of commonplace human examples typify the radical discontinuity separating the physics of the spontaneously probable from the deviant probabilities that organisms and minds introduce into the world.

Detailed knowledge about the general organization of the body and nervous system of the child would be usefully predictive of only the final few seconds of events as the child caught sight of an appropriately shaped stone. In comparison, knowing about the causal organization of the human body in only a very superficial way, but also knowing that a year before, during another walk on another beach, someone showed this child how to skip a stone, would confer far greater predictive power than would knowing a thousand times more physiological details. None of the incredibly many details about physical and physiological history would be as helpful as this bit of comparatively imprecise and very general information about an event that occurred months earlier and miles away. In many ways, this one past event on a different beach with a different stone in which the child may only have been an observer is even less physically linked to this present event than is the stone’s periodic tumbling in the waves during the centuries that it lay on this beach. The shift from this incalculably minuscule probability to near certainty, despite ignoring an astronomically vast amount of physical data and only learning of a few nebulous macro details of human interaction, captures the essence of a very personal riddle.

The boy’s idea that it might be possible to treat this stone like another that he’d once seen skipped is far more relevant to the organization of these causal probabilities than what he ate for breakfast, or how the stone came to be deposited in this place on this beach. Even though the force imparted to the stone is largely derived from the energy released from the chemical bonds in digested food, and its location at that spot is entirely due to the energetics of geology, wind, and waves, these facts are almost irrelevant. The predictive value of shifting attention to very general types of events, macroscopic global similarities, predictions of possible thought processes, and so forth, offers a critical hint that we are employing a very different and in some ways orthogonal logic of causality when we consider this mental analysis of the event rather than its physics. Indeed, this difference makes the two analyses appear counterintuitively incompatible. The thought is about a possibility, and a possibility is something that doesn’t yet exist and may never exist. It is as though a possible future is somehow influencing the present.

The discontinuity of causality implicit in human action parallels a related discontinuity between living and non-living processes. Ultimately, both involve what amounts to a reversal of causal logic: order developing from disorder, the lack of a state of affairs bringing itself into existence, and a potential tending to realize itself. We describe this in general terms as “ends determining means.” But compared to the way things work in the non-living, non-thinking world, it is as though a fundamental phase change has occurred in the dynamical fabric of the world. Crossing the border from machines to minds, or from chemical reactions to life, is leaving one universe of possibilities to enter another.

Ultimately, we need an account of these properties that does not make it absurd that they exist, or that we exist, with the phenomenology we have. Our brains evolved as one product of a 3-billion-year incremental elaboration of an explicitly end-directed process called life. Like the idle thoughts of the boy strolling along the beach, this process has no counterpart in the inorganic world. It would be absurd to argue that these differences are illusory. Whatever else we can say about life and mind, they have radically reorganized the causal fabric of events taking place on the surface of the Earth. Of course, life and mind are linked. The sentience that we experience as consciousness has its precursors in the adaptive processes of life in general. They are two extreme ends of a single thread of evolution. So it’s not just mind that requires us to come to a scientific understanding of end-directed forms of causality; it’s life itself.

WHAT’S MISSING?

This missing explanation exposes a large and gaping hole in our understanding of the world. It has been there for millennia. For most of this time it was merely a mysterious distraction. But as our scientific and technological powers have grown to the point that we are unwittingly interfering with the metabolism of our entire planet, our inability to fill in this explanatory hole has become more and more problematic.

In recent centuries, scientists’ ceaseless probing into the secrets of nature has triumphed over mystery upon mystery. In just the past century, scientists have harnessed the power of the stars to produce electric power or to destroy whole cities. They have identified and catalogued the details of the molecular basis for biological inheritance. And they have designed machines that in fractions of a second can perform calculations that would have taken thousands of people thousands of years to complete. Yet this success has also unwittingly undercut the motivation that has been its driving force: faith in the intrinsic value for humankind that such knowledge would provide. Not only have some of the most elegant discoveries been turned to horrific uses, but applying our most sophisticated scientific tools to the analysis of human life and mind has apparently demoted rather than ennobled the human spirit. It’s not just that we have failed to uncover the twists of physics and chemistry that set us apart from the non-living world. Our scientific theories have failed to explain what matters most to us: the place of meaning, purpose, and value in the physical world.

Our scientific theories haven’t exactly failed. Rather, they have carefully excluded these phenomena from consideration, and treated them as irrelevant. This is because the content of a thought, the goal of an action, or the conscious appreciation of an experience all share a troublesome feature that appears to make them unsuited for scientific study. They aren’t exactly anything physical, even though they depend on the material processes going on in brains.

Ask yourself: What does it mean to be reading these words? To provide an adequate answer, it is unnecessary to include information about the histories of the billions of atoms constituting the paper and ink or electronic book in front of you. A Laplacian demon² might know the origins of these atoms in ancient, burned-out stars and the individual trajectories that eventually caused them to end up as you see them, but this complete physical knowledge wouldn’t provide any clue to their meaning. Of course, these physical details and vastly many more are a critical part of the story, but even this complete physical description wouldn’t include the most crucial causal fact of the matter.

You are reading these words because of something that they and you are not: the ideas they convey. Whatever else we can say about these ideas, it is clear that they are not the stuff that you and this book are made of.

The problem is this: Such concepts as information, function, purpose, meaning, intention, significance, consciousness, and value are intrinsically defined by their fundamental incompleteness. They exist only in relation to something that they are not. Thus, the information provided by this book is not the paper and ink or electronic medium that conveys it, the cleaning function of soap is not merely its chemical interaction with water and oils, the regulatory function of a stop sign is not the wood, metal, and paint that it is composed of or even its distinctive shape, and the aesthetic value of a sculpture is not constituted by the chemistry of the marble, its weight, or its color. The “something” that each of these is not is precisely what matters most. But notice the paradox in this English turn of phrase. To “matter” is to be substantial, to resist modification, to be beyond creation or destruction—and yet what matters about an idea or purpose is dependent on something that is not substantial in any obvious sense.

So, what do all these phenomena have in common? In a word, nothing, or rather, something not present. In the last chapter, I introduced the somewhat clumsy term absential to refer to the essential absent feature of each of these kinds of phenomena. This doesn’t quite tell the whole story, however, because as should already be obvious from the examples just discussed, the role that absence plays in these varied phenomena is different in each case. And it’s not just something missing, but an orientation toward or existence conditional upon this something-not-there.

The most characteristic and developed exemplar of an absential relationship is purpose. Historically, the concept of purpose has been a persistent source of controversy for both philosophers and scientists. The philosophical term for the study of purposive phenomena is teleology, literally, the logic of end-directedness. The term has its roots in ancient Greek. Curiously, there are two different ancient Greek roots that get transcribed into English as tele, and each is relevant in its own way. The first, τελε (and also τελος, or “end”), is the one from which teleology derives. It can variously mean completion, end, goal, or purpose. Thus teleology refers to the study of purposeful phenomena. The second, τηλε (roughly translated as “afar”), forms the familiar prefix of such English terms as telescope, telephone, television, telemetry, and telepathy, all of which imply relationship to something occurring at a distance or despite a physical discontinuity.

Though the concept of teleology specifically derives from this first derivation of tele, by coincidence of transliteration (and possibly by some deeper etymological sound symbolism) the concept of separateness and physical displacement indicated by the second is also relevant. We recognize teleological phenomena by their development toward something they are not, but which they are implicitly determined with respect to. Without this intrinsic incompleteness, they would merely be objects or events. It is the end for the sake of which they exist—the possible state of things that they bring closer to existing—that characterizes them. Teleology also involves such relationships as representation, meaning, and relevance. A purpose is something represented. The missing something that characterizes each of these semiotic relationships can simply be physically or temporally dissociated from that which is present, or it can be more metaphorically distant in the sense of being abstract, potential, or only hypothetically existent. In these phenomena there is no disposition to bring the missing something into existence, only a marking of the essential relationship of what is present to something not present.

In an important sense, purpose is more complex than other absential relationships because we find all other forms of absential relationship implicit in the concept of purpose. It is most commonly associated with a psychological state of acting or intending to act so as to potentially bring about the realization of a mentally represented goal. This not only involves an orientation toward a currently non-existing state of affairs, it assumes an explicit representation of that end, with respect to which actions may be organized. Also, the various actions and processes typically employed to achieve that goal function for the sake of it. Finally, the success or failure to achieve that goal has value because it is in some way relevant to the agency for the sake of which it is pursued. And all these features are contributors to the sentience of simple organisms and the conscious experience of thinking beings like ourselves.

Because it is complex, the concept of mental purpose can be progressively decomposed, revealing weaker forms of this consequence-organized relationship that do not assume intrinsic mentality. Thus the concepts of function, information, and value have counterparts in living processes, which do not entail psychological states, and from which these more developed forms likely derive. The function of a designed mechanism or a biological organ is also constructed or organized (respectively) with respect to the end of promoting the production of some as-yet-unrealized state of things.

With respect to artifacts crafted by human users, there is no ambiguity. We recognize that an artifact or mechanical device is designed and constructed for a purpose. In other words, achieving this type of outcome guides the selection and modification of its physical characteristics. A bowl should be shaped to prevent its contents from draining onto the surface it sits on, and a nail should be made of a material that is more rigid than the material it needs to penetrate. The function that guides a tool’s construction as well as its use is located extrinsically, and so a tool derives its end-directed features parasitically, from the teleology of the designer or user. It is not intrinsic.

In contrast, the function of a biological organ is not parasitic on any extrinsic teleology in the same sense.3 An organ like the heart or a molecule like hemoglobin inherits its function via involvement in organism survival and reproduction. Unlike a mentally conceived purpose, a biological function lacks an explicit representation of the end with respect to which it operates. Nevertheless, it exists because of the consequences it tends to produce. Indeed, this is the essence of a natural selection explanation for certain of the properties that an organ exhibits.

Two similarly homonymous terms with different but related meanings are also associated with purpose, representation, and value. In common usage, the word “intention” typically refers to the predisposition of a person to act with respect to achieving a particular goal, as in intending to skip a stone across the water. Being intentional is in this sense essentially synonymous with being purposeful, and having an intention is having a purpose. In philosophical circles, however, the term intention is used differently and more technically. It is defined as the property of being about something. Ideas and beliefs are, in this sense, intentional phenomena. The etymology of the term admits to both uses, since its literal meaning is something like “inclined toward,” as in tending or leaning toward something. Again, the common attribute is an involvement with respect to something extrinsic and absent: for example, a content, meaning, or ideal type. So both of these terminological juxtapositions suggest a deeper commonality amongst these different modes of intrinsic incompleteness.

Unfortunately, the terms teleology and intentionality (in both forms) are burdened with numerous mentalistic connotations, even though it is not just mentality that exhibits intrinsic incompleteness and other-dependence. To include the more primitive biological counterparts to these mental relationships, such as function and adaptation, or the sort of information characteristic of genetic inheritance, we need a more inclusive terminology. I will argue that these more basic forms (or grades) of intrinsic incompleteness, characteristic of even the simplest organisms, are the evolutionary precursors of mentalistic relationships. Organ functions exist for the sake of maintaining the life of the plant or animal. While this is not purpose in any usual sense, neither is it merely a chemical-mechanical relationship. Though subjective awareness is different from the simple functional responsiveness of organisms in general, both life and mind have crossed a threshold to a realm where more than just what is materially present matters.

Currently, we lack a single term in the English language (or others that I know of) that captures this more generic sense of existing with-respect-to, for-the-sake-of, or in-order-to-generate something that is absent, a sense that spans function at one extreme and value at the other. The recognition that a common core property characterizes how each of these involves a necessary linkage with, and inclination toward, something absent argues for finding a term to refer to it. To avoid repeatedly listing these attributes, we need a more generic term for all such phenomena, irrespective of whether they are associated with minds or are merely features of life.

To address this need, I propose that we use the term ententional as a generic adjective to describe all phenomena that are intrinsically incomplete in the sense of being in relationship to, constituted by, or organized to achieve something non-intrinsic. By combining the prefix en- (for “in” or “within”) with the adjectival form meaning something like “inclined toward,” I hope to signal this deep and typically ignored commonality that exists in all the various phenomena that include within them a fundamental relationship to something absent.

Ententional phenomena include functions that have satisfaction conditions, adaptations that have environmental correlates, thoughts that have contents, purposes that have goals, subjective experiences that have a self/other perspective, and values that have a self that benefits or is harmed. Although functions, adaptations, thoughts, purposes, subjective experiences, and values each have distinct attributes that distinguish them, they all also have an orientation to a specific constitutive absence, a particular and precise missing something that is their critical defining attribute. When talking about cognitive and semiotic topics (sign processes), I will, however, continue to use the colloquial terminology of teleology, purpose, meaning, intention, interpretation, and sentience. And when talking about living processes that do not involve any obvious mental features, I will likewise continue to use the standard terminology of function, information, receptor, regulation, and adaptation. But when referring to all such phenomena in general, I will use entention to characterize their internal relationship to a telos—an end, or otherwise displaced and thus non-present something, or possible something.

As an analysis of the concept of teleology demonstrates, different ententional phenomena depend on, and are interrelated with, one another in an asymmetrical and hierarchical way. For example, purposive behaviors depend on represented ends, representations depend on information relationships, information depends on functional organization, and biological functions are organized with respect to their value in promoting survival, well-being, and an organism’s reproductive potential. This hierarchic dependence becomes clear when we consider some of the simplest ententional processes, such as functional relationships in living organisms. Thus, although we may be able to specify in considerable detail the physical chemical relationships constituting a particular molecular interaction, like the function of hemoglobin to bind free oxygen, none of these facts of chemistry tells us why hemoglobin molecules have these properties. They have them because of something that is both quite general and quite distinct from hemoglobin chemistry: the evolution of myriad molecular processes within living cells that are dependent on electrons captured from oxygen for energy, and the fact that cells in large bodies cannot obtain oxygen by direct diffusion. Explaining exactly how the simplest ententional phenomena arise, and how the more complex forms evolve from these simpler forms, is a major challenge of this book.

Scholars who study intentional phenomena generally tend to consider them as processes and relationships that can be characterized irrespective of any physical objects, material changes, or motive forces. But this is exactly what poses a fundamental problem for the natural sciences. Scientific explanation requires that in order to have causal consequences, something must be susceptible of being involved in material and energetic interactions with other physical objects and forces. Accepting this view confronts us with the challenge of explaining how these absential features of ententional phenomena could possibly make a difference in the world. And yet assuming that mentalistic terms like purpose and intention merely provide descriptive glosses for constellations of material events does not resolve the problem. Arguing that the causal efficacy of mental content is illusory is equally pointless, given the fact that we are surrounded by the physical consequences of people’s ideas and purposes.

Consider money. A dollar buys a cup of coffee no matter whether it takes the form of a paper bill, coins, an electronic debit on a bank account, or an interest-bearing charge on a credit card. A dollar has no essential specific physical substrate, but appears to be something of a higher order, autonomous from any particular physical realization. Since the unifying absential aspect of this economic process is only a similar type of outcome, and not any specific intrinsic attribute, one might be inclined to doubt that there is any common factor involved. Is the exchange of a paper dollar and a dollar’s worth of coins really an equivalent action in some sense, or is it simply that we ignore the causal details in describing it? Obviously, there is a difference in many of the component physical activities involved, even though the end product—receiving a cup of coffee—may be superficially similar irrespective of these other details. But does having a common type of end have some kind of independent role to play in the causal process over and above all the specific physical events in between? More troublesome yet is the fact that this end is itself not any specific physical configuration or event, but rather a general type of outcome in which the vast majority of specific physical details are interchangeable.

Philosophers often describe independence from any specific material details as “multiple realizability.” All ententional phenomena, such as a biological adaptation like flight, a mental experience like pain, an abstract convention like a grammatical function, a value assessment like a benefit, and so on, are multiply realizable. They can all be embodied in highly diverse kinds of physical-chemical processes and substrates.

Consideration of the not-quite-specific nature of the missing something that defines functions, intentions, and purposes will force us to address another conundrum that has worried philosophers since the time of the Greeks: the problem of the reality of generals. A “general,” in this sense of the word, is a type of thing rather than a specific object or event. Plato’s ideal forms were generals, as is the notion of “life” or the concept of “a dollar.” The causal status of general types has been debated by philosophers for millennia, and is often described as the Realism/Nominalism debate.4 It centers on the issue of whether types of phenomena or merely their specific individual exemplars are the source of causal influence. Ententional phenomena force the issue for this reason. And this further explains why they are problematic for the natural sciences. A representation may be about some specific thing but also about some general property, the same function may be realized by different mechanisms, and a purpose like skipping a stone may be diversely realized. So, accepting the possibility that ententional phenomena are causally important in the world appears to require that types of things have real physical consequences independent of any specific embodiment.

This requirement is well exemplified by the function of hemoglobin. This function is multiply realizable. Thus biologists were not too surprised to discover that there are other phyla of organisms that use an entirely different bloodborne molecule to capture and distribute oxygen. Clams and insects have evolved an independent molecular trick for capturing and delivering oxygen to their tissues. Instead of hemoglobin, they have evolved hemocyanins—so named for the blue-green color of their blood that results from the use of copper instead of iron in the blood protein that handles oxygen transfer. This is not considered biologically unusual because it is assumed that the detailed mechanism is unimportant so long as certain general physical attributes that are essential to the function are realized. The function would also be achieved if medical scientists devised an artificial hemoglobin substitute for use when blood transfusions were unavailable. Science fiction writers have regularly speculated that extraterrestrial life forms might also have blue or green blood. Perhaps this is behind the now colloquial reference to alien beings as “little green men.” Indeed, copper-based blood was explicitly cited in the Star Trek TV series and movies as a characteristic of the Vulcan race, though the colorful characterization of alien beings appears to be much older than this.5

The idea that something can have real causal efficacy in the world independent of the specific components that constitute it is the central claim of the metaphysical theory called “functionalism.” Multiple realizability is one of its defining features. A common example used to illustrate this approach comes from computer science. Computer software comprises a set of instructions that are used to organize the circuits of the machine to shuttle signals around in a specific way that achieves some end, like adding numbers. To the extent that the same software produces the same effects on different computer hardware, we can say that the result is functionally equivalent, despite the entirely different physical embodiment. Computational multiple realizability has been a crucial (though not always reliable) factor in composing this text on the different computers that I have used.6
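The computational example can be made concrete with a minimal sketch. It assumes Python, and every function name in it is hypothetical and chosen only for illustration. Three procedures that share no internal steps all realize the same function, adding two non-negative whole numbers, and a small check confirms that they agree on a set of sample cases. Agreement on sample cases is only evidence of functional equivalence, not a proof of it.

```python
from typing import Callable, Iterable, Tuple

Adder = Callable[[int, int], int]


def add_arithmetic(a: int, b: int) -> int:
    """Realization 1: rely on the language's built-in integer addition."""
    return a + b


def add_bitwise(a: int, b: int) -> int:
    """Realization 2: carry propagation using only bit operations.

    Assumes non-negative integers; negative inputs are rejected because
    this loop would not terminate for them in Python.
    """
    if a < 0 or b < 0:
        raise ValueError("add_bitwise expects non-negative integers")
    while b:
        carry = (a & b) << 1  # positions where both bits are 1 produce a carry
        a = a ^ b             # sum of the bits, ignoring carries
        b = carry             # carries are added on the next pass
    return a


def add_by_counting(a: int, b: int) -> int:
    """Realization 3: count upward from a, one step at a time, b times."""
    if b < 0:
        raise ValueError("add_by_counting expects a non-negative second argument")
    result = a
    for _ in range(b):
        result += 1
    return result


def agree_on(impls: Iterable[Adder], cases: Iterable[Tuple[int, int]]) -> bool:
    """Return True if every implementation gives the same result on every case."""
    impl_list = list(impls)
    return all(len({f(a, b) for f in impl_list}) == 1 for a, b in cases)


if __name__ == "__main__":
    sample_cases = [(0, 0), (1, 2), (19, 23), (255, 1), (1000, 337)]
    adders = [add_arithmetic, add_bitwise, add_by_counting]
    print(agree_on(adders, sample_cases))
```

Nothing in the caller depends on which realization is used. Only the general type of outcome, the sum, matters to whatever is built on top of it.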