Linux Server Hacks

Table of Contents

A Note Regarding Supplemental Files

How to Become a Hacker

The Hacker Attitude

1. 1. The world is full of fascinating problems waiting to be solved.

2. 2. No problem should ever have to be solved twice.

3. 3. Boredom and drudgery are evil.

4. 4. Freedom is good.

5. 5. Attitude is no substitute for competence.

Preface

How This Book is Organized

How to Use This Book

Conventions Used in This Book

How to Contact Us

Gotta Hack?

Credits

Acknowledgments

1. Server Basics

1.1. Hacks #1-22

Hack #1. Removing Unnecessary Services

1.1. See also:

Hack #2. Forgoing the Console Login

Hack #3. Common Boot Parameters

37. See also:

Hack #4. Creating a Persistent Daemon with init

4.1. See also:

Hack #5. n>&m: Swap Standard Output and Standard Error

Hack #6. Building Complex Command Lines

6.1. Hacking the Hack

Hack #7. Working with Tricky Files in xargs

7.1. Listing: albumize

Hack #8. Immutable Files in ext2/ext3

Hack #9. Speeding Up Compiles

Hack #10. At Home in Your Shell Environment

Hack #11. Finding and Eliminating setuid/setgid Binaries

Hack #12. Make sudo Work Harder

Hack #13. Using a Makefile to Automate Admin Tasks

13.1. Listing: Makefile.mail

13.2. Listing: Makefile.push

Hack #14. Brute Forcing Your New Domain Name

Hack #15. Playing Hunt the Disk Hog

Hack #16. Fun with /proc

Hack #17. Manipulating Processes Symbolically with procps

17.1. See also:

Hack #18. Managing System Resources per Process

18.1. See also:

Hack #19. Cleaning Up after Ex-Users

Hack #20. Eliminating Unnecessary Drivers from the Kernel

20.1. See also:

Hack #21. Using Large Amounts of RAM

Hack #22. hdparm: Fine Tune IDE Drive Parameters

2. Revision Control

2.1. Hacks #23-36

Hack #23. Getting Started with RCS

Hack #24. Checking Out a Previous Revision in RCS

Hack #25. Tracking Changes with rcs2log

Hack #26. Getting Started with CVS

26.1. Typical Uses

26.2. Creating a Repository

26.3. Importing a New Module

26.4. Environment Variables

26.5. See Also:

Hack #27. CVS: Checking Out a Module

Hack #28. CVS: Updating Your Working Copy

Hack #29. CVS: Using Tags

Hack #30. CVS: Making Changes to a Module

Hack #31. CVS: Merging Files

Hack #32. CVS: Adding and Removing Files and Directories

32.1. Removing Files

32.2. Removing Directories

Hack #33. CVS: Branching Development

Hack #34. CVS: Watching and Locking Files

Hack #35. CVS: Keeping CVS Secure

35.1. Remote Repositories

35.2. Permissions

35.3. Developer Machines

Hack #36. CVS: Anonymous Repositories

36.1. Creating an Anonymous Repository

36.2. Installing pserver

36.3. Using a Remote pserver

3. Backups

3.1. Hacks #37-44

Hack #37. Backing Up with tar over ssh

37.1. See also:

Hack #38. Using rsync over ssh

38.1. See also:

Hack #39. Archiving with Pax

39.1. Creating Archives

39.2. Expanding Archives

39.3. Interactive Restores

39.4. Recursively Copy a Directory

39.5. Incremental Backups

39.6. Skipping Files on Restore

39.7. See also:

Hack #40. Backing Up Your Boot Sector

40.1. See also:

Hack #41. Keeping Parts of Filesystems in sync with rsync

41.1. Listing: Balance-push.sh

Hack #42. Automated Snapshot-Style Incremental Backups with rsync

42.1. Extensions: Hourly, Daily, and Weekly Snapshots

42.2. Listing: make_snapshot.sh

42.3. Listing: Daily_snapshot_rotate.sh

42.4. Sample Output of ls -l /snapshot/home

42.5. See also:

Hack #43. Working with ISOs and CDR/CDRWs

43.1. See also:

Hack #44. Burning a CD Without Creating an ISO File

4. Networking

4.1. Hacks #45-53

Hack #45. Creating a Firewall from the Command Line of any Server

45.1. See also:

Hack #46. Simple IP Masquerading

46.1. See also:

Hack #47. iptables Tips & Tricks

47.1. Advanced iptables Features

47.2. See also:

Hack #48. Forwarding TCP Ports to Arbitrary Machines

48.1. See also:

Hack #49. Using Custom Chains in iptables

49.1. See also:

Hack #50. Tunneling: IPIP Encapsulation

50.1. See also:

Hack #51. Tunneling: GRE Encapsulation

51.1. See also:

Hack #52. Using vtun over ssh to Circumvent NAT

52.1. See also:

Hack #53. Automatic vtund.conf Generator

53.1. Listing: vtundconf

5. Monitoring

5.1. Hacks #54-65

Hack #54. Steering syslog

54.1. Mark Who?

54.2. Remote Logging

Hack #55. Watching Jobs with watch

55.1. See also:

Hack #56. What's Holding That Port Open?

Hack #57. Checking On Open Files and Sockets with lsof

57.1. See also:

Hack #58. Monitor System Resources with top

58.1. See also:

Hack #59. Constant Load Average Display in the Titlebar

59.1. Listing: tl

Hack #60. Network Monitoring with ngrep

60.1. Listing: go-ogle

60.2. See also:

Hack #61. Scanning Your Own Machines with nmap

61.1. See also:

Hack #62. Disk Age Analysis

62.1. Listing: diskage

Hack #63. Cheap IP Takeover

63.1. Listing: takeover

63.2. See also:

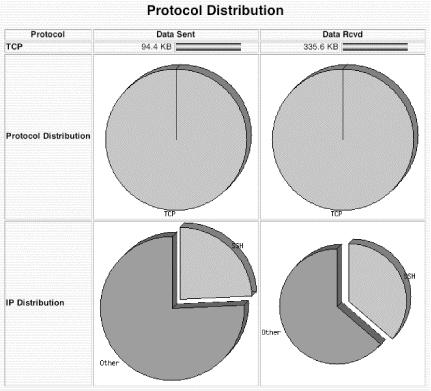

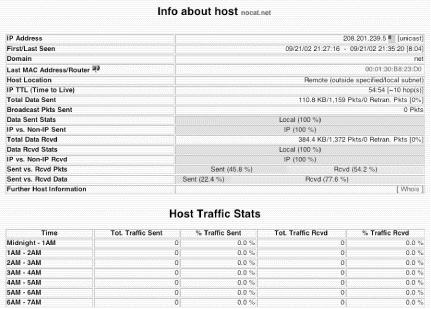

Hack #64. Running ntop for Real-Time Network Stats

64.1. See also:

Hack #65. Monitoring Web Traffic in Real Time with httptop

65.1. Listing: httptop

6. SSH

6.1. Hacks #66-71

Hack #66. Quick Logins with ssh Client Keys

66.1. Security Concerns

66.2. See Also:

Hack #67. Turbo-mode ssh Logins

67.1. See also:

Hack #68. Using ssh-Agent Effectively

Hack #69. Running the ssh-Agent in a GUI

69.1. See Also:

Hack #70. X over ssh

70.1. See also:

Hack #71. Forwarding Ports over ssh

71.1. See also:

7. Scripting

7.1. Hacks #72-75

Hack #72. Get Settled in Quickly with movein.sh

72.1. Listing: movein.sh

72.2. See also:

Hack #73. Global Search and Replace with Perl

73.1. See also:

Hack #74. Mincing Your Data into Arbitrary Chunks (in bash)

74.1. Listing: mince

Hack #75. Colorized Log Analysis in Your Terminal

8. Information Servers

8.1. Hacks #76-100

Hack #76. Running BIND in a chroot Jail

76.1. See also:

Hack #77. Views in BIND 9

77.1. Basic Syntax

77.2. Defining Zones in Views

77.3. Views in Slave Name Servers

Hack #78. Setting Up Caching DNS with Authority for Local Domains

78.1. See also:

Hack #79. Distributing Server Load with Round-Robin DNS

79.1. See also:

Hack #80. Running Your Own Top-Level Domain

Hack #81. Monitoring MySQL Health with mtop

81.1. See also:

Hack #82. Setting Up Replication in MySQL

82.1. See also:

Hack #83. Restoring a Single Table from a Large MySQL Dump

Hack #84. MySQL Server Tuning

84.1. See also:

Hack #85. Using proftpd with a mysql Authentication Source

85.1. See also:

Hack #86. Optimizing glibc, linuxthreads, and the Kernel for a Super MySQL Server

86.1. Step 1: Build glib

86.2. Step 2: The Kernel

86.3. Step 3: Build a New MySQL

86.4. Step 4: Expand the Maximum Filehandles at Boot

Hack #87. Apache Toolbox

87.1. See Also:

Hack #88. Display the Full Filename in Indexes

Hack #89. Quick Configuration Changes with IfDefine

Hack #90. Simplistic Ad Referral Tracking

90.1. Listing: referral-report.pl

Hack #91. Mimicking FTP Servers with Apache

Hack #92. Rotate and compress Apache Server Logs

92.1. Listing: logflume.pl

Hack #93. Generating an SSL cert and Certificate Signing Request

93.1. See also:

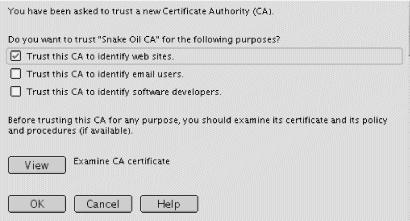

Hack #94. Creating Your Own CA

94.1. See also:

Hack #95. Distributing Your CA to Client Browsers

95.1. See also:

Hack #96. Serving multiple sites with the same DocumentRoot

Hack #97. Delivering Content Based on the Query String Using mod_rewrite

97.1. See also:

Hack #98. Using mod_proxy on Apache for Speed

Hack #99. Distributing Load with Apache RewriteMap

99.1. See Also:

Hack #100. Ultrahosting: Mass Web Site Hosting with Wildcards, Proxy, and Rewrite

Linux Server Hacks

Rob Flickenger

Editor

Rael Dornfest

Copyright © 2009 O'Reilly Media, Inc.

O'Reilly Media

A Note Regarding Supplemental Files

Supplemental files and examples for this book can be found at http://examples.oreilly.com/9780596004613/. Please use a standard desktop web browser to access these files, as they may not be accessible from all ereader devices.

All code files or examples referenced in the book will be available online. For physical books that ship with an accompanying disc, whenever possible, we’ve posted all CD/DVD content. Note that while we provide as much of the media content as we are able via free download, we are sometimes limited by licensing restrictions. Please direct any questions or concerns to [email protected].

How to Become a Hacker

The Jargon File contains a bunch of definitions of the term "hacker," most having to do with technical adeptness and a delight in solving problems and overcoming limits. If you want to know how to become a hacker, though, only two are really relevant.

There is a community, a shared culture, of expert programmers and networking wizards that traces its history back through decades to the first time-sharing minicomputers and the earliest ARPAnet experiments. The members of this culture originated the term "hacker." Hackers built the Internet. Hackers made the Unix operating system what it is today. Hackers run Usenet. Hackers make the Web work. If you are part of this culture, if you have contributed to it and other people in it know who you are and call you a hacker, you're a hacker.

The hacker mind-set is not confined to this software-hacker culture. There are people who apply the hacker attitude to other things, like electronics or music — actually, you can find it at the highest levels of any science or art. Software hackers recognize these kindred spirits elsewhere and may call them "hackers" too — and some claim that the hacker nature is really independent of the particular medium the hacker works in. But in the rest of this document, we will focus on the skills and attitudes of software hackers, and the traditions of the shared culture that originated the term "hacker."

There is another group of people who loudly call themselves hackers, but aren't. These are people (mainly adolescent males) who get a kick out of breaking into computers and breaking the phone system. Real hackers call these people "crackers" and want nothing to do with them. Real hackers mostly think crackers are lazy, irresponsible, and not very bright — being able to break security doesn't make you a hacker any more than being able to hotwire cars makes you an automotive engineer. Unfortunately, many journalists and writers have been fooled into using the word "hacker" to describe crackers; this irritates real hackers no end.

The basic difference is this: hackers build things, crackers break them.

If you want to be a hacker, keep reading. If you want to be a cracker, go read the alt.2600 newsgroup and get ready to do five to ten in the slammer after finding out you aren't as smart as you think you are. And that's all I'm going to say about crackers.

The Hacker Attitude

Hackers solve problems and build things, and they believe in freedom and voluntary mutual help. To be accepted as a hacker, you have to behave as though you have this kind of attitude yourself. And to behave as though you have the attitude, you have to really believe the attitude.

But if you think of cultivating hacker attitudes as just a way to gain acceptance in the culture, you'll miss the point. Becoming the kind of person who believes these things is important for you — for helping you learn and keeping you motivated. As with all creative arts, the most effective way to become a master is to imitate the mind-set of masters — not just intellectually but emotionally as well.

Or, as the following modern Zen poem has it:

| To follow the path: |

| look to the master, |

| follow the master, |

| walk with the master, |

| see through the master, |

| become the master. |

So if you want to be a hacker, repeat the following things until you believe them.

1. The world is full of fascinating problems waiting to be solved.

Being a hacker is a lot of fun, but it's a kind of fun that takes a lot of effort. The effort takes motivation. Successful athletes get their motivation from a kind of physical delight in making their bodies perform, pushing themselves past their own physical limits. Similarly, to be a hacker you have to get a basic thrill from solving problems, sharpening your skills, and exercising your intelligence.

If you aren't the kind of person that feels this way naturally, you'll need to become one in order to make it as a hacker. Otherwise you'll find your hacking energy is zapped by distractions like sex, money, and social approval.

(You also have to develop a kind of faith in your own learning capacity — a belief that even though you may not know all of what you need to solve a problem, if you tackle just a piece of it and learn from that, you'll learn enough to solve the next piece — and so on, until you're done.)

2. No problem should ever have to be solved twice.

Creative brains are a valuable, limited resource. They shouldn't be wasted on re-inventing the wheel when there are so many fascinating new problems waiting out there.

To behave like a hacker, you have to believe that the thinking time of other hackers is precious — so much so that it's almost a moral duty for you to share information, solve problems, and then give the solutions away just so other hackers can solve new problems instead of having to perpetually re-address old ones.

(You don't have to believe that you're obligated to give all your creative product away, though the hackers that do are the ones that get most respect from other hackers. It's consistent with hacker values to sell enough of it to keep you in food and rent and computers. It's fine to use your hacking skills to support a family or even get rich, as long as you don't forget your loyalty to your art and your fellow hackers while doing it.)

3. Boredom and drudgery are evil.

Hackers (and creative people in general) should never be bored or have to drudge at stupid repetitive work, because when this happens it means they aren't doing what only they can do — solve new problems. This wastefulness hurts everybody. Therefore boredom and drudgery are not just unpleasant but actually evil.

To behave like a hacker, you have to believe this enough to want to automate away the boring bits as much as possible, not just for yourself but for everybody else (especially other hackers).

(There is one apparent exception to this. Hackers will sometimes do things that may seem repetitive or boring to an observer as a mind-clearing exercise, or in order to acquire a skill or have some particular kind of experience you can't have otherwise. But this is by choice — nobody who can think should ever be forced into a situation that bores them.)

4. Freedom is good.

Hackers are naturally anti-authoritarian. Anyone who can give you orders can stop you from solving whatever problem you're being fascinated by — and, given the way authoritarian minds work, will generally find some appallingly stupid reason to do so. So the authoritarian attitude has to be fought wherever you find it, lest it smother you and other hackers.

(This isn't the same as fighting all authority. Children need to be guided and criminals restrained. A hacker may agree to accept some kinds of authority in order to get something he wants more than the time he spends following orders. But that's a limited, conscious bargain; the kind of personal surrender authoritarians want is not on offer.)

Authoritarians thrive on censorship and secrecy. And they distrust voluntary cooperation and information-sharing — they only like "cooperation" that they control. So to behave like a hacker, you have to develop an instinctive hostility to censorship, secrecy, and the use of force or deception to compel responsible adults. And you have to be willing to act on that belief.

5. Attitude is no substitute for competence.

To be a hacker, you have to develop some of these attitudes. But copping an attitude alone won't make you a hacker, any more than it will make you a champion athlete or a rock star. Becoming a hacker will take intelligence, practice, dedication, and hard work.

Therefore, you have to learn to distrust attitude and respect competence of every kind. Hackers won't let posers waste their time, but they worship competence — especially competence at hacking, but competence at anything is good. Competence at demanding skills that few can master is especially good, and competence at demanding skills that involve mental acuteness, craft, and concentration is best.

If you revere competence, you'll enjoy developing it in yourself — the hard work and dedication will become a kind of intense play rather than drudgery. That attitude is vital to becoming a hacker.

The complete essay can be found online at http://www.catb.org/~esr/faqs/hacker-howto.html and in an appendix to the "The Cathedral and the Bazaar" book (O'Reilly.)

— Eric S. Raymond[1]

[1] Eric S. Raymond is the author of the New Hacker's Dictionary, based on the Jargon File, and the famous "Cathedral and the Bazaar" essay that served as a catalyst for the Open Source movement. The following is an excerpt from his 1996 essay, "What is a hacker?" Raymond argues that hackers are ingenious at solving interesting problems, an idea that is the cornerstone of O'Reilly's new Hacks series.

Preface

A hacker does for love what others would not do for money.

—/usr/games/fortune

The word hack has many connotations. A "good hack" makes the best of the situation of the moment, using whatever resources are at hand. An "ugly hack" approaches the situation in the most obscure and least understandable way, although many "good hacks" may also appear unintelligible to the uninitiated.

The effectiveness of a hack is generally measured by its ability to solve a particular technical problem, inversely proportional to the amount of human effort involved in getting the hack running. Some hacks are scalable and some are even sustainable. The longest running and most generally accepted hacks become standards and cause many more hacks to be invented. A good hack lasts until a better hack comes along.

A hack reveals the interface between the abstract and wonderfully complex mind of the designer, and the indisputable and vulgar experience of human needs. Sometimes, hacks may be ugly and only exist because someone had an itch that needed scratching. To the engineer, a hack is the ultimate expression of the Do-It-Yourself sentiment: no one understands how a hack came to be better than the person who felt compelled to solve the problem in the first place. If a person with a bent for problem solving thinks a given hack is ugly, then they are almost always irresistibly motivated to go one better — and hack the hack, something that we encourage the readers of this book to do.

In the end, even the most capable server, with the most RAM and running the fastest (and most free) operating system on the planet, is still just a fancy back-scratcher fixing the itch of the moment, until a better, faster and cheaper back-scratcher is required.

Where does all of this pseudo-philosophical rambling get you? Hopefully, this background will give you some idea of the mindset that prompted the compiling of this collection of solutions that we call Linux Server Hacks. Some are short and simple, while some are quite complex. All of these hacks are designed to solve a particular technical problem that the designer simply couldn't let go without "scratching." I hope that some of them will be directly applicable to an "itch" or two that you may have felt yourself as a new or experienced administrator of Linux servers.

How This Book is Organized

A competent sysadmin must be a jack-of-all-trades. To be truly effective, you'll need to be able to handle every problem the system throws at you, from power on to halt. To assist you in the time in between, I present this collection of time-saving and novel approaches to daily administrative tasks.

- Server Basics begins by looking at some of the most common sorts of tasks that admins encounter: manipulating the boot process, effectively working with the command line, automating common tasks, watching (and regulating) how system resources are used, and tuning various pieces of the Linux kernel to make everything run more efficiently. This isn't an introduction to system administration but a look at some very effective and non-obvious techniques that even seasoned sysadmins may have overlooked.

- Revision Control gives a crash-course in using two fundamental revision control systems, RCS and CVS. Being able to recall arbitrary previous revisions of configuration files, source code, and documentation is a critical ability that can save your job. Too many professional admins are lacking in revision control basics (preferring instead to make the inevitable, but unsupportable .old or .orig backup). This section will get you up and running quickly, giving you commands and instructions that are succinct and to the point.

- The next section, Backups, looks at quick and easy methods for keeping spare copies of your data. I pay particular attention to network backups, rsync, and working with ISOs. I'll demonstrate some of the enormous flexibility of standard system backup tools and even present one way of implementing regular "snapshot" revisions of a filesystem (without requiring huge amounts of storage).

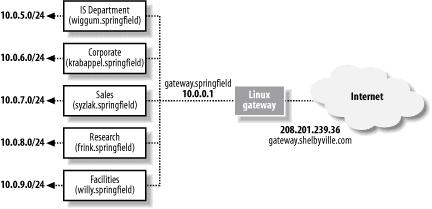

- Networking is my favorite section of this entire book. The focus isn't on basic functionality and routing, but instead looks at some obscure but insanely useful techniques for making networks behave in unexpected ways. I'll set up various kinds of IP tunnels (both encrypted and otherwise), work with NAT, and show some advanced features that allow for interesting behavior based on all kinds of parameters. Did you ever want to decide what to do with a packet based on its data contents? Take a look at this section.

- Monitoring is an eclectic mix of tips and tools for finding out exactly what your server is up to. It looks at some standard (and some absolutely required "optional") packages that will tell you volumes about who is using what, when, and how on your network. It also looks at a couple of ways to mitigate inevitable service failures and even help detect when naughty people attempt to do not-so-nice things to your network.

- Truly a font of hackery unto itself, the SSH section describes all sorts of nifty uses for ssh, the cryptographically strong (and wonderfully flexible) networking tool. There are a couple of versions of ssh available for Linux, and while many of the examples will work in all versions, they are all tested and known to work with OpenSSH v3.4p1.

- Scripting provides a short digression by looking at a couple of odds and ends that simply couldn't fit on a single command line. These hacks will save you time and will hopefully serve as examples of how to do some nifty things in shell and Perl.

- Information Services presents three major applications for Linux: BIND 9, MySQL, and Apache. This section assumes that you're well beyond basic installation of these packages, and are looking for ways to make them deliver their services faster and more efficiently, without having to do a lot of work yourself. You will see methods for getting your server running quickly, helping it scale to very large installations and behave in all sorts of slick ways that save a lot of configuration and maintenance time.

How to Use This Book

You may find it useful to read this book from cover to cover, as the hacks do build on each other a bit from beginning to end. However, each hack is designed to stand on its own as a particular example of one way to accomplish a particular task. To that end, I have grouped together hacks that fit a particular theme into sections, but I do cross-reference quite a bit between hacks from different sections (and also to more definitive resources on the subject). Don't consider a given section as a cut-and-dried chapter with rigidly defined subject boundaries but more as a convenient way of collecting similar (and yet independent) hacks. You may want to read this book much like the way most people browse web pages online: follow whatever interests you, and if you get lost, follow the links within the piece to find more information.

Conventions Used in This Book

The following is a list of the typographical conventions used in this book:

Italic

Used to indicate new terms, URLs, filenames, file extensions, directories, commands and options, and program names.

Constant Width

Used to show code examples, the contents of files, or the output from commands.

Constant Width Bold

Used in examples and tables to show commands or other text that should be typed literally.

Constant Width Italic

Used in examples and tables to show text that should be replaced with user-supplied values.

The thermometer icons, found next to each hack, indicate the relative complexity of the hack:

|

|

|

How to Contact Us

We have tested and verified the information in this book to the best of our ability, but you may find that features have changed (or even that we have made mistakes!). Please let us know about any errors, inaccuracies, bugs, misleading or confusing statements, and typos that you find in this book.

You can write to us at:

| O'Reilly & Associates, Inc. |

| 1005 Gravenstein Hwy N. |

| Sebastopol, CA 95472 |

| (800) 998-9938 (in the U.S. or Canada) |

| (707) 829-0515 (international/local) |

| (707) 829-0104 (fax) |

To ask technical questions or to comment on the book, send email to:

| [email protected] |

Visit the web page for Linux Server Hacks to find additional support information, including examples and errata. You can find this page at:

| http://www.oreilly.com/catalog/linuxsvrhack |

For more information about this book and others, see the O'Reilly web site:

| http://www.oreilly.com |

Gotta Hack?

Got a good hack you'd like to share with others? Go to the O'Reilly Hacks web site at:

| http://hacks.oreilly.com |

You'll find book-related resources, sample hacks and new hacks contributed by users. You'll find information about additional books in the Hacks series.

Credits

- Rob Flickenger authored the majority of hacks in this book.

Rob has worked with Linux since Slackware 3.5. He was previously the system administrator of the O'Reilly Network (an all-Linux shop, naturally) and is the author of Building Wireless Community Networks, also by O'Reilly. - Rael Dornfest ([Hack #87])

Rael is a Researcher at the O'Reilly & Associates focusing on technologies just beyond the pale. He assesses, experiments, programs, and writes for the O'Reilly network and O'Reilly publications. - Seann Herdejurgen ([Hack #47], [Hack #62])

Seann has been working with UNIX since 1987 and now architects high availability solutions as a senior systems engineer with D-Tech corporation in Dallas, Texas. He holds a Master's degree in Computer Science from Texas A&M University. He may be reached at: http://seann.herdejurgen.com/. - Dru Lavigne ([Hack #39])

Dru is an instructor at a private technical college in Kingston, ON where she teaches the fundamentals of TCP/IP networking, routing, and security. Her current hobbies include matching every TCP and UDP port number to its associated application(s) and reading her way through all of the RFCs. - Cricket Liu ([Hack #77])

Cricket matriculated at the University of California's Berkeley campus, that great bastion of free speech, unencumbered Unix, and cheap pizza. He worked for a year as Director of DNS Product Management for VeriSign Global Registry Services, and is a co-author of DNS and BIND, also published by O'Reilly & Associates. - Mike Rubel ([Hack #42])

Mike (http://www.mikerubel.org) studied Mechanical Engineering at Rutgers University (B.S. 1998) and Aeronautics at Caltech (M.S. 1999), where he is now a graduate student. He has enjoyed using Linux and GNU software for several years in the course of his numerical methods research. - Jennifer Vesperman (all of the CVS pieces except [Hack #36] were adapted from her online CVS pieces for O'ReillyNet)

Jenn contributes to open source, as a user, writer and occasional programmer. Her coding experience ranges from the hardware interface to an HDLC card to the human interface of Java GUIs. Jenn is the current coordinator and co-sysadmin for Linuxchix.org. - Schuyler Erle (contributed code for httptop, mysql-table-restore, balance-push, find-whois, and vtundgen)

By day, Schuyler is a mild-mannered Internet Systems Developer for O'Reilly & Associates. By night, he crusades for justice and freedom as a free software hacker and community networking activist. - Morbus Iff ([Hack #89], [Hack #90], [Hack #91])

Kevin Hemenway, better known as Morbus Iff, is the creator of disobey.com, which bills itself as "content for the discontented." Publisher and developer of more home cooking than you could ever imagine, he'd love to give you a Fry Pan of Intellect upside the head. Politely, of course. And with love.

Acknowledgments

I would like to thank my family and friends for their support and encouragement. Thanks especially to my dad for showing me "proper troubleshooting technique" at such an early age and inevitably setting me on the path of the Hacker before I had even seen a computer.

Of course, this book would be nothing without the excellent contributions of all the phenomenally talented hackers contained herein. But of course, our hacks are built by hacking the shoulders of giants (to horribly mix a metaphor), and it is my sincere hope that you will in turn take what you learn here and go one better, and most importantly, tell everyone just how you did it.

Chapter 1. Server Basics

Hacks #1-22

A running Linux system is a complex interaction of hardware and software where invisible daemons do the user's bidding, carrying out arcane tasks to the beat of the drum of the uncompromising task master called the Linux kernel.

A Linux system can be configured to perform many different kinds of tasks. When running as a desktop machine, the visible portion of Linux spends much of its time controlling a graphical display, painting windows on the screen, and responding to the user's every gesture and command. It must generally be a very flexible (and entertaining) system, where good responsiveness and interactivity are the critical goals.

On the other hand, a Linux server generally is designed to perform a couple of tasks, nearly always involving the squeezing of information down a network connection as quickly as possible. While pretty screen savers and GUI features may be critical to a successful desktop system, the successful Linux server is a high performance appliance that provides access to information as quickly and efficiently as possible. It pulls that information from some sort of storage (like the filesystem, a database, or somewhere else on the network) and delivers that information over the network to whomever requested it, be it a human being connected to a web server, a user sitting in a shell, or over a port to another server entirely.

It is under these circumstances that a system administrator finds their responsibilities lying somewhere between deity and janitor. Ultimately, the sysadmin's job is to provide access to system resources as quickly (and equitably) as possible. This job involves both the ability to design new systems (that may or may not be rooted in solutions that already exist) and the talent (and the stomach) for cleaning up after people who use that system without any concept of what "resource management" really means.

The most successful sysadmins remove themselves from the path of access to system resources and let the machines do all of the work. As a user, you know that your sysadmin is effective when you have the tools that you need to get the job done and you never need to ask your sysadmin for anything. To pull off (that is, to hack) this impossible sounding task requires that the sysadmin anticipate what the users' needs will be and make efficient use of the resources that are available.

To begin with, I'll present ways to optimize Linux to perform only the work that is required to get the job done and not waste cycles doing work that you're not interested in doing. You'll see some examples of how to get the system to do more of the work of maintaining itself and how to make use of some of the more obscure features of the system to make your job easier. Parts of this section (particularly Command Line and Resource Management) include techniques that you may find yourself using every day to help build a picture of how people are using your system and ways that you might improve it.

These hacks assume that you are already familiar with Linux. In particular, you should already have root on a running Linux system available with which to experiment and should be comfortable with working on the system from the command line. You should also have a good working knowledge of networks and standard network services. While I hope that you will find these hacks informative, they are certainly not a good introduction to Linux system administration. For in-depth discussion on good administrative techniques, I highly recommend the Linux Network Administrator's Guide and Essential System Administration, both by O'Reilly and Associates.

The hacks in this chapter are grouped together into the following five categories: Boot Time, Command Line, Automation, Resource Management, and Kernel Tuning.

Boot Time

- Removing Unnecessary Services

- Forgoing the Console Login

- Common Boot Parameters

- Creating a Persistent Daemon with init

Command Line

- n>&m: Swap Standard Output and Standard Error

- Building Complex Command Lines

- Working with Tricky Files in xargs

- Immutable Files in ext2/ext3

- Speeding Up Compiles

Automation

- At home in your shell environments

- Finding and eliminating setuid/setgid binaries

- Make sudo work harder for you

- Using a Makefile to automate admin tasks

- Brute forcing your new domain name

Resource Management

- Playing Hunt the Disk Hog

- Fun with /proc

- Manipulating processes symbolically with procps

- Managing system resources per process

- Cleaning up after ex-users

Kernel Tuning

Hack #1. Removing Unnecessary Services

Fine tune your server to provide only the services you really want to serve

When you build a server, you are creating a system that should perform its intended function as quickly and efficiently as possible. Just as a paint mixer has no real business being included as an espresso machine attachment, extraneous services can take up resources and, in some cases, cause a real mess that is completely unrelated to what you wanted the server to do in the first place. This is not to say that Linux is incapable of serving as both a top-notch paint mixer and making a good cup of coffee simultaneously — just be sure that this is exactly what you intend before turning your server loose on the world (or rather, turning the world loose on your server).

When building a server, you should continually ask yourself: what do I really need this machine to do? Do I really need FTP services on my web server? Should NFS be running on my DNS server, even if no shares are exported? Do I need the automounter to run if I mount all of my volumes statically?

To get an idea of what your server is up to, simply run a ps ax . If nobody is logged in, this will generally tell you what your server is currently running. You should also see what programs for which your inetd is accepting connections, with either a grep -v ^# /etc/inetd.conf or (more to the point) netstat -lp . The first command will show all uncommented lines in your inetd.conf, while the second (when run as root) will show all of the sockets that are in the LISTEN state, and the programs that are listening on each port. Ideally, you should be able to reduce the output of a ps ax to a page of information or less (barring preforking servers like httpd, of course).

Here are some notorious (and typically unnecessary) services that are enabled by default in many distributions:

portmap, rpc.mountd, rpc.nfsd

These are all part of the NFS subsystem. Are you running an NFS server? Do you need to mount remote NFS shares? Unless you answered yes to either of these questions, you don't need these daemons running. Reclaim the resources that they're taking up and eliminate the potential security risk.

smbd and nmbd

These are the Samba daemons. Do you need to export SMB shares to Windows boxes (or other machines)? If not, then these processes can be safely killed.

automount

The automounter can be handy to bring up network (or local) filesystems on demand, eliminating the need for root privileges when accessing them. This is especially handy on client desktop machines, where a user needs to use removable media (such as CDs or floppies) or to access network resources. But on a dedicated server, the automounter is probably unnecessary. Unless your machine is providing console access or remote network shares, you can kill the automounter (and set up all of your mounts statically, in /etc/fstab).

named

Are you running a name server? You don't need named running if you only need to resolve network names; that's what /etc/resolv.conf and the bind libraries are for. Unless you're running name services for other machines, or are running a caching DNS server (see [Hack #78]), then named isn't needed.

lpd

Do you ever print to this machine? Chances are, if it's serving Internet resources, it shouldn't be accepting print requests anyway. Remove the printer daemon if you aren't planning on using it.

inetd

Do you really need to run any services from inetd? If you have ssh running in standalone mode, and are only running standalone daemons (such as Apache, BIND, MySQL, or ProFTPD) then inetd may be superfluous. In the very least, review which services are being accepted with the grep command grep -v ^# /etc/inetd.conf. If you find that every service can be safely commented out, then why run the daemon? Remove it from the boot process (either by removing it from the system rc's or with a simple chmod -x /usr/sbin/inetd).

telnet, rlogin, rexec, ftp

The remote login, execution, and file transfer functionality of these venerable daemons has largely been supplanted by ssh and scp, their cryptographically secure and tremendously flexible counterparts. Unless you have a really good reason to keep them around, it's a good idea to eliminate support for these on your system. If you really need to support ftp connections, you might try the mod_sql plugin for proftpd (see [Hack #85]).

finger, comsat, chargen, echo, identd

The finger and comsat services made sense in the days of an open Internet, where users were curious but generally well-intentioned. In these days of stealth portscans and remote buffer overflow exploits, running extraneous services that give away information about your server is generally considered a bad idea. The chargen and echo ports were once good for testing network connectivity, but are now too inviting for a random miscreant to fiddle with (and perhaps connect to each other to drive up server load quickly and inexpensively).

Finally, the identd service was once a meaningful and important source of information, providing remote servers with an idea of which users were connecting to their machines. Unfortunately, in these days of local root exploits and desktop Linux machines, installing an identd that (perish the thought!) actually lies about who is connected has become so common that most sites ignore the author information anyway. Since identd is a notoriously shaky source of information, why leave it enabled at all?

To eliminate unnecessary services, first shut them down (either by running service stop in /etc/rc.d/init.d/, removing them from /etc/inetd.conf, or by killing them manually). Then to be sure that they don't start again the next time the machine reboots, remove their entry from /etc/rc.d/*. Once you have your system trimmed down to only the services you intend to serve, reboot the machine and check the process table again.

If you absolutely need to run insecure services on your machine, then you should use tcp wrappers or local firewalling to limit access to only the machines that absolutely need it.

Hack #2. Forgoing the Console Login

All of the access, none of the passwords

It will happen to you one day. You'll need to work on a machine for a friend or client who has "misplaced" the root password on which you don't have an account.

If you have console access and don't mind rebooting, traditional wisdom beckons you to boot up in single user mode. Naturally, after hitting Control-Alt-Delete, you simply wait for it to POST and then pass the parameter single to the booting kernel. For example, from the LILO prompt:

LILO: linux single

On many systems, this will happily present you with a root shell. But on some systems (notably RedHat), you'll run into the dreaded emergency prompt:

Give root password for maintenance

(or type Control-D for normal startup)

If you knew the root password, you wouldn't be here! If you're lucky, the init script will actually let you hit ^C at this stage and will drop you to a root prompt. But most init processes are "smarter" than that, and trap ^C. What to do? Of course, you could always boot from a rescue disk and reset the password, but suppose you don't have one handy (or that the machine doesn't have a CD-ROM drive).

All is not lost! Rather than risk running into the above mess, let's modify the system with extreme prejudice, right from the start. Again, from the LILO prompt:

LILO: linux init=/bin/bash

What does this do? Rather than start /sbin/init and proceed with the usual /etc/rc.d/* procedure, we're telling the kernel to simply give us a shell. No passwords, no filesystem checks (and for that matter, not much of a starting environment!) but a very quick, shiny new root prompt.

Unfortunately, that's not quite enough to be able to repair your system. The root filesystem will be mounted read-only (since it never got a chance to be checked and remounted read/write). Also, networking will be down, and none of the usual system daemons will be running. You don't want to do anything more complicated than resetting a password (or tweaking a file or two) at a prompt like this. Above all: don't hit ^D or type Exit! Your little shell (plus the kernel) constitutes the entire running Linux system at the moment. So, how can you manipulate the filesystem in this situation, if it is mounted read-only? Try this:

# mount -o remount,rw /

That will force the root filesystem to be remounted read-write. You can now type passwd to change the root password (and if the original admin lost the password, consider the ramifications of giving them access to the new one. If you were the original admin, consider writing it in invisible ink on a post-it note and sticking it to your screen, or stitching it into your underwear, or maybe even taking up another hobby).

Once the password is reset, DO NOT REBOOT. Since there is no init running, there is no process in place for safely taking the system down. The quickest way to shutdown safely is to remount root again:

# mount -o remount,ro /

With the root partition readonly, you can confidently hit the Reset button, bring it up in single-user mode, and begin your actual work.

Hack #3. Common Boot Parameters

Manipulate kernel parameters at boot time

As we saw in [Hack #2], it is possible to pass parameters to the kernel at the LILO prompt allowing you to change the program that is first called when the system boots. Changing init (with the init=/bin/bash line) is just one of many useful options that can be set at boot time. Here are more common boot parameters:

single

Boots up in single user mode.

root=

Changes the device that is mounted as /. For example:

root=/dev/sdc4

will boot from the fourth partition on the third scsi disk (instead of whatever your boot loader has defined as the default).

hdX=

Adjusts IDE drive geometry. This is useful if your BIOS reports incorrect information:

hda=3649,255,63 hdd=cdrom

This defines the master/primary IDE drive as a 30GB hard drive in LBA mode, and the slave/secondary IDE drive as a CD-ROM.

console=

Defines a serial port console on kernels with serial console support. For example:

console=ttyS0,19200n81

Here we're directing the kernel to log boot messages to ttyS0 (the first serial port), at 19200 baud, no parity, 8 data bits, 1 stop bit. Note that to get an actual serial console (that you can log in on), you'll need to add a line to /etc/inittab that looks something like this:

s1:12345:respawn:/sbin/agetty 19200 ttyS0 vt100

nosmp

Disables SMP on a kernel so enabled. This can help if you suspect kernel trouble on a multiprocessor system.

mem=

Defines the total amount of available system memory. See [Hack #21].

ro

Mounts the / partition read-only (this is typically the default, and is remounted read-write after fsck runs).

rw

Mounts the / partition read-write. This is generally a bad idea, unless you're also running the init hack. Pass your init line along with rw, like this:

init=/bin/bash rw

to eliminate the need for all of that silly mount -o remount,rw / stuff in [Hack #2]. Congratulations, now you've hacked a hack.

You can also pass parameters for SCSI controllers, IDE devices, sound cards, and just about any other device driver. Every driver is different, and typically allows for setting IRQs, base addresses, parity, speeds, options for auto-probing, and more. Consult your online documentation for the excruciating details.

See also:

- man bootparam

- /usr/src/linux/Documentation/*

Hack #4. Creating a Persistent Daemon with init

Make sure that your process stays up, no matter what

There are a number of scripts that will automatically restart a process if it exits unexpectedly. Perhaps the simplest is something like:

$ while : ; do echo "Run some code here..."; sleep 1; done

If you run a foreground process in place of that echo line, then the process is always guaranteed to be running (or, at least, it will try to run). The : simply makes the while always execute (and is more efficient than running /bin/true, as it doesn't have to spawn an external command on each iteration). Definitely do not run a background process in place of the echo, unless you enjoy filling up your process table (as the while will then spawn your command as many times as it can, one every second). But as far as cool hacks go, the while approach is fairly lacking in functionality.

What happens if your command runs into an abnormal condition? If it exits immediately, then it will retry every second, without giving any indication that there is a problem (unless the process has its own logging system or uses syslog). It might make sense to have something watch the process, and stop trying to respawn it if it returns too quickly after a few tries.

There is a utility already present on every Linux system that will do this automatically for you: init . The same program that brings up the system and sets up your terminals is perfectly suited for making sure that programs are always running. In fact, that is its primary job.

You can add arbitrary lines to /etc/inittab specifying programs you'd like init to watch for you:

zz:12345:respawn:/usr/local/sbin/my_daemon

The inittab line consists of an arbitrary (but unique) two character identification string (in this case, zz), followed by the runlevels that this program should be run in, then the respawn keyword, and finally the full path to the command. In the above example, as long as my_daemon is configured to run in the foreground, init will respawn another copy whenever it exits. After making changes to inittab, be sure to send a HUP to init so it will reload its configuration. One quick way to do this is:

# kill -HUP 1

If the command respawns too quickly, then init will postpone execution for a while, to keep it from tying up too many resources. For example:

zz:12345:respawn:/bin/touch /tmp/timestamp

This will cause the file /tmp/timestamp to be touched several times a second, until init decides that enough is enough. You should see this message in /var/log/messages almost immediately:

Sep 8 11:28:23 catlin init: Id "zz" respawning too fast: disabled for 5 minutes

In five minutes, init will try to run the command again, and if it is still respawning too quickly, it will disable it again.

Obviously, this method is fine for commands that need to run as root, but what if you want your auto-respawning process to run as some other user? That's no problem: use sudo:

zz:12345:respawn:/usr/bin/sudo -u rob /bin/touch /tmp/timestamp

Now that touch will run as rob, not as root. If you're trying these commands as you read this, be sure to remove the existing /tmp/timestamp before trying this sudo line. After sending a HUP to init, take a look at the timestamp file:

rob@catlin:~# ls -al /tmp/timestamp

-rw-r--r-- 1 rob users 0 Sep 8 11:28 /tmp/timestamp

The two drawbacks to using init to run arbitrary daemons are that you need to comment out the line in inittab if you need to bring the daemon down (since it will just respawn if you kill it) and that only root can add entries to inittab. But for keeping a process running that simply must stay up no matter what, init does a great job.

Hack #5. n>&m: Swap Standard Output and Standard Error

Direct standard out and standard error to wherever you need them to go

By default, a command's standard error goes to your terminal. The standard output goes to the terminal or is redirected somewhere (to a file, down a pipe, into backquotes).

Sometimes you want the opposite. For instance, you may need to send a command's standard output to the screen and grab the error messages (standard error) with backquotes. Or, you might want to send a command's standard output to a file and the standard error down a pipe to an error-processing command. Here's how to do that in the Bourne shell. (The C shell can't do this.)

File descriptors 0, 1, and 2 are the standard input, standard output, and standard error, respectively. Without redirection, they're all associated with the terminal file /dev/tty. It's easy to redirect any descriptor to any file — if you know the filename. For instance, to redirect file descriptor to errfile, type:

$ command

2> errfile

You know that a pipe and backquotes also redirect the standard output:

$ command

| ...\

$ var=`

command`

But there's no filename associated with the pipe or backquotes, so you can't use the 2> redirection. You need to rearrange the file descriptors without knowing the file (or whatever) that they're associated with. Here's how.

Let's start slowly. By sending both standard output and standard error to the pipe or backquotes, the Bourne shell operator n>&m rearranges the files and file descriptors. It says "make file descriptor n point to the same file as file descriptor m." Let's use that operator on the previous example. We'll send standard error to the same place standard output is going:

$ command

2>&1 | ...

$ var=`

command

2>&1`

In both those examples, 2>&1 means "send standard error (file descriptor 2) to the same place standard output (file descriptor 1) is going." Simple, eh?

You can use more than one of those n>&m operators. The shell reads them left-to-right before it executes the command.

"Oh!" you might say, "To swap standard output and standard error — make stderr go down a pipe and stdout go to the screen — I could do this!"

$ command

2>&1 1>&2 | ...

(wrong...)

Sorry, Charlie. When the shell sees 2>&1 1>&2, the shell first does 2>&1. You've seen that before — it makes file descriptor 2 (stderr) go the same place as file descriptor 1 (stdout). Then, the shell does 1>&2. It makes stdout (1) go the same place as stderr (2), but stderr is already going the same place as stdout, down the pipe.

This is one place that the other file descriptors, 3 through 9, come in handy. They normally aren't used. You can use one of them as a "holding place" to remember where another file descriptor "pointed." For example, one way to read the operator 3>&2 is "make 3 point the same place as 2". After you use 3>&2 to grab the location of 2, you can make 2 point somewhere else. Then, make 1 point to where 2 used to (where 3 points now).

The command line you want is one of these:

$ command

3>&2 2>&1 1>&3 | ...

$ var=`

command

3>&2 2>&1 1>&3`

Open files are automatically closed when a process exits. But it's safer to close the files yourself as soon as you're done with them. That way, if you forget and use the same descriptor later for something else (for instance, use F.D. 3 to redirect some other command, or a subprocess uses F.D. 3), you won't run into conflicts. Use m<&- to close input file descriptor m and m>&- to close output file descriptor m. If you need to close standard input, use <&- ; >&- will close standard output.

Hack #6. Building Complex Command Lines

Build simple commands into full-fledged paragraphs for complex (but meaningful) reports

Studying Linux (or indeed any Unix) is much like studying a foreign language. At some magical point in the course of one's studies, halting monosyllabic mutterings begin to meld together into coherent, often used phrases. Eventually, one finds himself pouring out entire sentences and paragraphs of the Unix Mother Tongue, with one's mind entirely on the problem at hand (and not on the syntax of any particular command). But just as high school foreign language students spend much of their time asking for directions to the toilet and figuring out just what the dative case really is, the path to Linux command-line fluency must begin with the first timidly spoken magic words.

Your shell is very forgiving, and will patiently (and repeatedly) listen to your every utterance, until you get it just right. Any command can serve as the input for any other, making for some very interesting Unix "sentences." When armed with the handy (and probably over-used) up arrow, it is possible to chain together commands with slight tweaks over many tries to achieve some very complex behavior.

For example, suppose that you're given the task of finding out why a web server is throwing a bunch of errors over time. If you type less error_log, you see that there are many "soft errors" relating to missing (or badly linked) graphics:

[Tue Aug 27 00:22:38 2002] [error] [client 17.136.12.171] File does not

exist: /htdocs/images/spacer.gif

[Tue Aug 27 00:31:14 2002] [error] [client 95.168.19.34] File does not

exist: /htdocs/image/trans.gif

[Tue Aug 27 00:36:57 2002] [error] [client 2.188.2.75] File does not

exist: /htdocs/images/linux/arrows-linux-back.gif

[Tue Aug 27 00:40:37 2002] [error] [client 2.188.2.75] File does not

exist: /htdocs/images/linux/arrows-linux-back.gif

[Tue Aug 27 00:41:43 2002] [error] [client 6.93.4.85] File does not

exist: /htdocs/images/linux/hub-linux.jpg

[Tue Aug 27 00:41:44 2002] [error] [client 6.93.4.85] File does not

exist: /htdocs/images/xml/hub-xml.jpg

[Tue Aug 27 00:42:13 2002] [error] [client 6.93.4.85] File does not

exist: /htdocs/images/linux/hub-linux.jpg

[Tue Aug 27 00:42:13 2002] [error] [client 6.93.4.85] File does not

exist: /htdocs/images/xml/hub-xml.jpg

and so on. Running a logging package (like analog) reports exactly how many errors you have seen in a day but few other details (which is how you were probably alerted to the problem in the first place). Looking at the logfile directly gives you every excruciating detail but is entirely too much information to process effectively.

Let's start simple. Are there any errors other than missing files? First we'll need to know how many errors we've had today:

$ wc -l error_log

1265 error_log

And how many were due to File does not exist errors?

$ grep "File does not exist:" error_log | wc -l

1265 error_log

That's a good start. At least we know that we're not seeing permission problems or errors in anything that generates dynamic content (like cgi scripts.) If every error is due to missing files (or typos in our html that point to the wrong file) then it's probably not a big problem. Let's generate a list of the filenames of all bad requests. Hit the up arrow and delete that wc -l:

$ grep "File does not exist:" error_log | awk '{print $13}' | less

That's the sort of thing that we want (the 13th field, just the filename), but hang on a second. The same couple of files are repeated many, many times. Sure, we could email this to the web team (all whopping 1265 lines of it), but I'm sure they wouldn't appreciate the extraneous spam. Printing each file exactly once is easy:

$ grep "File does not exist:" error_log | awk '{print $13}' | sort | uniq |

less

This is much more reasonable (substitute a wc -l for that less to see just how many unique files have been listed as missing). But that still doesn't really solve the problem. Maybe one of those files was requested once, but another was requested several hundred times. Naturally, if there is a link somewhere with a typo in it, we would see many requests for the same "missing" file. But the previous line doesn't give any indication of which files are requested most. This isn't a problem for bash; let's try out a command line for loop.

$ for x in `grep "File does not exist" error_log | awk '{print $13}' | sort

| uniq`; do \

echo -n "$x : "; grep $x error_log | wc -l; done

We need those backticks ( `) to actually execute our entire command from the previous example and feed the output of it to a for loop. On each iteration through the loop, the $x variable is set to the next line of output of our original command (that is, the next unique filename reported as missing). We then grep for that filename in the error_log, and count how many times we see it. The echo at the end just prints it in a somewhat nice report format.

I call it a somewhat nice report because not only is it full of single hit errors (which we probably don't care about), the output is very jagged, and it isn't even sorted! Let's sort it numerically, with the biggest hits at the top, numbers on the left, and only show the top 20 most requested "missing" files:

$ for x in `grep "File does not exist" error_log | awk '{print $13}' | sort

| uniq`; do \

grep $x error_log | wc -l | tr -d '\n'; echo " : $x"; done | sort +2 -rn |

head -20

That's much better and not even much more typing than the last try. We need the tr to eliminate the trailing newline at the end of wc's output (why it doesn't have a switch to do this, I'll never know). Your output should look something like this:

595 : /htdocs/images/pixel-onlamp.gif.gif

156 : /htdocs/image/trans.gif

139 : /htdocs/images/linux/arrows-linux-back.gif

68 : /htdocs/pub/a/onjava/javacook/images/spacer.gif

50 : /htdocs/javascript/2001/03/23/examples/target.gif

From this report, it's very simple to see that almost half of our errors are due to a typo on a popular web page somewhere (note the repeated .gif.gif in the first line). The second is probably also a typo (should be images/, not image/). The rest are for the web team to figure out:

$ ( echo "Here's a report of the top 20 'missing' files in the error_log.";

echo; \

for x in `grep "File does not exist" error_log | awk '{print $13}' | sort |

uniq`; do \

grep $x error_log | wc -l | tr -d '\n'; echo " : $x"; done | sort +2 -rn |

head -20 )\

| mail -s "Missing file report" [email protected]

and maybe one hardcopy for the weekly development meeting:

$ for x in `grep "File does not exist" error_log | awk '{print $13}' | sort

| uniq`; do \

grep $x error_log | wc -l | tr -d '\n'; echo " : $x"; done | sort +2 -rn |

head -20 \

| enscript

Hacking the Hack

Once you get used to chunking groups of commands together, you can chain their outputs together indefinitely, creating any sort of report you like out of a live data stream. Naturally, if you find yourself doing a particular task regularly, you might want to consider turning it into a shell script of its own (or even reimplementing it in Perl or Python for efficiency's sake, as every | in a command means that you've spawned yet another program). On a modern (and unloaded) machine, you'll hardly notice the difference, but it's considered good form to clean up the solution once you've hacked it out. And on the command line, there's plenty of room to hack.

Hack #7. Working with Tricky Files in xargs

Deal with many files containing spaces or other strange characters

When you have a number of files containing spaces, parentheses, and other "forbidden" characters, dealing with them can be daunting. This is a problem that seems to come up frequently, with the recent explosive popularity of digital music. Luckily, tab completion in bash makes it simple to handle one file at a time. For example:

rob@catlin:~/Music$ ls

Hallucinogen - The Lone Deranger

Misc - Pure Disco

rob@catlin:~/Music$ rm -rf Misc[TAB]

rob@catlin:~/Music$ rm -rf Misc\ -\ Pure\ Disco/

Hitting the Tab key for [TAB] above replaces the command line with the line below it, properly escaping any special characters contained in the file. That's fine for one file at a time, but what if we want to do a massive transformation (say, renaming a bunch of mp3s to include an album name)? Take a look at this:

rob@catlin:~/Music$ cd Hall[TAB]

rob@catlin:~/Music$ cd Hallucinogen\ -\ The\ Lone\ Deranger/

rob@catlin:~/Music/Hallucinogen - The Lone Deranger$ ls

Hallucinogen - 01 - Demention.mp3

Hallucinogen - 02 - Snakey Shaker.mp3

Hallucinogen - 03 - Trancespotter.mp3

Hallucinogen - 04 - Horrorgram.mp3

Hallucinogen - 05 - Snarling (Remix).mp3

Hallucinogen - 06 - Gamma Goblins Pt. 2.mp3

Hallucinogen - 07 - Deranger.mp3

Hallucinogen - 08 - Jiggle of the Sphinx.mp3

rob@catlin:~/Music/Hallucinogen - The Lone Deranger$

When attempting to manipulate many files at once, things get tricky. Many system utilities break on whitespace (yielding many more chunks than you intended) and will completely fall apart if you throw a ) or a { at them. What we need is a delimiter that is guaranteed never to show up in a filename, and break on that instead.

Fortunately, the xargs utility will break on NULL characters, if you ask it to nicely. Take a look at this script:

Listing: albumize

#!/bin/sh

if [ -z "$ALBUM" ]; then

echo 'You must set the ALBUM name first (eg. export ALBUM="Greatest Hits")'

exit 1

fi

for x in *; do

echo -n $x; echo -ne '\000'

echo -n `echo $x|cut -f 1 -d '-'`

echo -n " - $ALBUM - "

echo -n `echo $x|cut -f 2- -d '-'`; echo -ne '\000'

done | xargs -0 -n2 mv

We're actually doing two tricky things here. First, we're building a list consisting of the original filename followed by the name to which we'd like to mv it, separated by NULL characters, for all files in the current directory. We then feed that entire list to an xargs with two switches: -0 tells it to break on NULLs (instead of newlines or whitespace), and -n2 tells it to take two arguments at a time on each pass, and feed them to our command (mv).

Save the script as ~/bin/albumize. Before you run it, set the $ALBUM environment variable to the name that you'd like injected into the filename just after the first -. Here's a trial run:

rob@catlin:~/Music/Hallucinogen - The Lone Deranger$ export ALBUM="The Lone Deranger"

rob@catlin:~/Music/Hallucinogen - The Lone Deranger$ albumize

rob@catlin:~/Music/Hallucinogen - The Lone Deranger$ ls

Hallucinogen - The Lone Deranger - 01 - Demention.mp3

Hallucinogen - The Lone Deranger - 02 - Snakey Shaker.mp3

Hallucinogen - The Lone Deranger - 03 - Trancespotter.mp3

Hallucinogen - The Lone Deranger - 04 - Horrorgram.mp3

Hallucinogen - The Lone Deranger - 05 - Snarling (Remix).mp3

Hallucinogen - The Lone Deranger - 06 - Gamma Goblins Pt. 2.mp3

Hallucinogen - The Lone Deranger - 07 - Deranger.mp3

Hallucinogen - The Lone Deranger - 08 - Jiggle of the Sphinx.mp3

rob@catlin:~/Music/Hallucinogen - The Lone Deranger$

What if you would like to remove the album name again? Try this one, and call it ~/bin/dealbumize:

#!/bin/sh

for x in *; do

echo -n $x; echo -ne '\000'

echo -n `echo $x|cut -f 1 -d '-'`; echo -n ' - '

echo -n `echo $x|cut -f 3- -d '-'`; echo -ne '\000'

done | xargs -0 -n2 mv

and simply run it (no $ALBUM required):

rob@catlin:~/Music/Hallucinogen - The Lone Deranger$ dealbumize

rob@catlin:~/Music/Hallucinogen - The Lone Deranger$ ls

Hallucinogen - 01 - Demention.mp3

Hallucinogen - 02 - Snakey Shaker.mp3

Hallucinogen - 03 - Trancespotter.mp3

Hallucinogen - 04 - Horrorgram.mp3

Hallucinogen - 05 - Snarling (Remix).mp3

Hallucinogen - 06 - Gamma Goblins Pt. 2.mp3

Hallucinogen - 07 - Deranger.mp3

Hallucinogen - 08 - Jiggle of the Sphinx.mp3

rob@catlin:~/Music/Hallucinogen - The Lone Deranger$

The -0 switch is also popular to team up with the -print0 option of find (which, naturally, prints matching filenames separated by NULLs instead of newlines). With find and xargs on a pipeline, you can do anything you like to any number of files, without ever running into the dreaded Argument list too long error:

rob@catlin:~/Pit of too many files$ ls *

bash: /bin/ls: Argument list too long

A find/xargs combo makes quick work of these files, no matter what they're called:

rob@catlin:/Pit of too many files$ find -type f -print0 | xargs -0 ls

To delete them, just replace that trailing ls with an rm, and away you go.

Hack #8. Immutable Files in ext2/ext3

Create files that even root can't manipulate

Here's a puzzle for you. Suppose we're cleaning up /tmp, and run into some trouble:

root@catlin:/tmp# rm -rf junk/

rm: cannot unlink `junk/stubborn.txt': Operation not permitted

rm: cannot remove directory `junk': Directory not empty

root@catlin:/tmp# cd junk/

root@catlin:/tmp/junk# ls -al

total 40

drwxr-xr-x 2 root root 4096 Sep 4 14:45 ./

drwxrwxrwt 13 root root 4096 Sep 4 14:45 ../

-rw-r--r-- 1 root root 29798 Sep 4 14:43 stubborn.txt

root@catlin:/tmp/junk# rm ./stubborn.txt

rm: remove write-protected file `./stubborn.txt'? y

rm: cannot unlink `./stubborn.txt': Operation not permitted

What's going on? Are we root or aren't we? Let's try emptying the file instead of deleting it:

root@catlin:/tmp/junk# cp /dev/null stubborn.txt

cp: cannot create regular file `stubborn.txt': Permission denied

root@catlin:/tmp/junk# > stubborn.txt

bash: stubborn.txt: Permission denied

Well, /tmp certainly isn't mounted read-only. What is going on?

In the ext2 and ext3 filesystems, there are a number of additional file attributes that are available beyond the standard bits accessible through chmod. If you haven't seen it already, take a look at the manpages for chattr and its companion, lsattr .

One of the very useful new attributes is -i, the immutable flag. With this bit set, attempts to unlink, rename, overwrite, or append to the file are forbidden. Even making a hard link is denied (so you can't make a hard link, then edit the link). And having root privileges makes no difference when immutable is in effect:

root@catlin:/tmp/junk# ln stubborn.txt another.txt

ln: creating hard link `another.txt' to `stubborn.txt': Operation not permitted

To view the supplementary ext flags that are in force on a file, use lsattr:

root@catlin:/tmp/junk# lsattr

---i--------- ./stubborn.txt

and to set flags a la chmod, use chattr:

root@catlin:/tmp/junk# chattr -i stubborn.txt

root@catlin:/tmp/junk# rm stubborn.txt

root@catlin:/tmp/junk#

This could be terribly useful for adding an extra security step on files you know you'll never want to change (say, /etc/rc.d/* or various configuration files.) While little will help you on a box that has been r00ted, immutable files probably aren't vulnerable to simple overwrite attacks from other processes, even if they are owned by root.

There are hooks for adding compression, security deletes, undeletability, synchronous writes, and a couple of other useful attributes. As of this writing, many of the additional attributes aren't implemented yet, but keep watching for new developments on the ext filesystem.

Hack #9. Speeding Up Compiles

Make sure you're keeping all processors busy with parallel builds

If you're running a multiprocessor system (SMP) with a moderate amount of RAM, you can usually see significant benefits by performing a parallel make when building code. Compared to doing serial builds when running make (as is the default), a parallel build is a vast improvement.

To tell make to allow more than one child at a time while building, use the -j switch:

rob@mouse:~/linux$ make -j4; make -j4 modules

Some projects aren't designed to handle parallel builds and can get confused if parts of the project are built before their parent dependencies have completed. If you run into build errors, it is safest to just start from scratch this time without the -j switch.

By way of comparison, here are some sample timings. They were performed on an otherwise unloaded dual PIII/600 with 1GB RAM. Each time I built a bzImage for Linux 2.4.19 (redirecting STDOUT to /dev/null), and removed the source tree before starting the next test.

time make bzImage:

real 7m1.640s

user 6m44.710s

sys 0m25.260s

time make -j2 bzImage:

real 3m43.126s

user 6m48.080s

sys 0m26.420s

time make -j4 bzImage:

real 3m37.687s

user 6m44.980s

sys 0m26.350s

time make -j10 bzImage:

real 3m46.060s

user 6m53.970s

sys 0m27.240s

As you can see, there is a significant improvement just by adding the -j2 switch. We dropped from 7 minutes to 3 minutes and 43 seconds of actual time. Increasing to -j4 saved us about five more seconds, but jumping all the way to -j10 actually hurt performance by a few seconds. Notice how user and system seconds are virtually the same across all four runs. In the end, you need to shovel the same sized pile of bits, but -j on a multi-processor machine simply lets you spread it around to more people with shovels.

Of course, bits all eventually end up in the bit bucket anyway. But hey, if nothing else, performance timings are a great way to keep your cage warm.

Hack #10. At Home in Your Shell Environment

Make bash more comfortable through environment variables

Consulting a manpage for bash can be a daunting read, especially if you're not precisely sure what you're looking for. But when you have the time to devote to it, the manpage for bash is well worth the read. This is a shell just oozing with all sorts of arcane (but wonderfully useful) features, most of which are simply disabled by default.

Let's start by looking at some useful environment variables, and some useful values to which to set them:

export PS1=`echo -ne "\033[0;34m\u@\h:\033[0;36m\w\033[0;34m\$\033[0;37m "`

As you probably know, the PS1 variable sets the default system prompt, and automatically interprets escape sequences such as \u (for username) and \w (for the current working directory.) As you may not know, it is possible to encode ANSI escape sequences in your shell prompt, to give your prompt a colorized appearance. We wrap the whole string in backticks (` ) in order to get echo to generate the magic ASCII escape character. This is executed once, and the result is stored in PS1. Let's look at that line again, with boldface around everything that isn't an ANSI code:

export PS1=`echo -ne "\033[0;34m\u@\h:\033[0;36m\w\033[0;34m\$\033[0;37m "`

You should recognize the familiar \u@\h:\w\$prompt that we've all grown to know and love. By changing the numbers just after each semicolon, you can set the colors of each part of the prompt to your heart's content.

Along the same lines, here's a handy command that is run just before bash gives you a prompt:

export PROMPT_COMMAND='echo -ne "\033]0;${USER}@${HOSTNAME}: ${PWD}\007"'

(We don't need backticks for this one, as bash is expecting it to contain an actual command, not a string.) This time, the escape sequence is the magic string that manipulates the titlebar on most terminal windows (such as xterm, rxvt, eterm, gnometerm, etc.). Anything after the semicolon and before the \007 gets printed to your titlebar every time you get a new prompt. In this case, we're displaying your username, the host you're logged into, and the current working directory. This is quite handy for being able to tell at a glance (or even while within vim) to which machine you're logged in, and to what directory you're about to save your file. See [Hack #59] if you'd like to update your titlebar in real time instead of at every new bash prompt.

Have you ever accidentally hit ^D too many times in a row, only to find yourself logged out? You can tell bash to ignore as many consecutive ^D hits as you like:

export IGNOREEOF=2

This makes bash follow the Snark rule ("What I tell you three times is true") and only log you out if you hit ^D three times in a row. If that's too few for you, feel free to set it to 101 and bash will obligingly keep count for you.

Having a directory just off of your home that lies in your path can be extremely useful (for keeping scripts, symlinks, and other random pieces of code.) A traditional place to keep this directory is in bin underneath your home directory. If you use the ~ expansion facility in bash, like this:

export PATH=$PATH:~/bin

then the path will always be set properly, even if your home directory ever gets moved (or if you decide you want to use this same line on multiple machines with potentially different home directories — as in movein.sh). See [Hack #72].

Did you know that just as commands are searched for in the PATH variable (and manpages are searched for in the MANPATH variable), directories are likewise searched for in the CDPATH variable every time you issue a cd? By default, it is only set to ".", but can be set to anything you like:

export CDPATH=.:~

This will make cd search not only the current directory, but also your home directory for the directory you try to change to. For example:

rob@caligula:~$ ls

bin/ devel/ incoming/ mail/ test/ stuff.txt

rob@caligula:~$ cd /usr/local/bin

rob@caligula:/usr/local/bin$ cd mail

bash: cd: mail: No such file or directory

rob@caligula:/usr/local/bin$ export CDPATH=.:~

rob@caligula:/usr/local/bin$ cd mail

/home/rob/mail

rob@caligula:~/mail$

You can put as many paths as you like to search for in CDPATH, separating each with a : (just as with the PATH and MANPATH variables.)

We all know about the up arrow and the history command. But what happens if you accidentally type something sensitive on the command line? Suppose you slip while typing and accidentally type a password where you meant to type a command. This accident will faithfully get recorded to your ~/.bash_history file when you logout, where another unscrupulous user might happen to find it. Editing your .bash_history manually won't fix the problem, as the file gets rewritten each time you log out.

To clear out your history quickly, try this from the command line:

export HISTSIZE=0

This completely clears out the current bash history and will write an empty .bash_history on logout. When you log back in, your history will start over from scratch but will otherwise work just as before. From now on, try to be more careful!

Do you have a problem with people logging into a machine, then disconnecting their laptop and going home without logging back out again? If you've ever run a w and seen a bunch of idle users who have been logged in for several days, try setting this in their environment:

export TMOUT=600

The TMOUT variable specifies the number of seconds that bash will wait for input on a command line before logging the user out automatically. This won't help if your users are sitting in a vi window but will alleviate the problem of users just sitting at an idle shell. Ten minutes might be a little short for some users, but kindly remind them that if they don't like the system default, they are free to reset the variable themselves.

This brings up an interesting point: exactly where do you go to make any of these environment changes permanent? There are several files that bash consults when starting up, depending on whether the shell was called at login or from within another shell.

From bash(1), on login shells: