MADE IN AMERICA

Bill Bryson

Illustrations by Bruce McCall

Contents

3 A ‘Democratic Phrenzy’: America in the Age of Revolution

5 By the Dawn’s Early Light: Forging a National Identity

6 We’re in the Money: The Age of Invention

8 ‘Manifest Destiny’: Taming the West

9 The Melting-Pot: Immigration in America

10 When the Going was Good: Travel in America

11 What’s Cooking?: Eating in America

12 Democratizing Luxury: Shopping in America

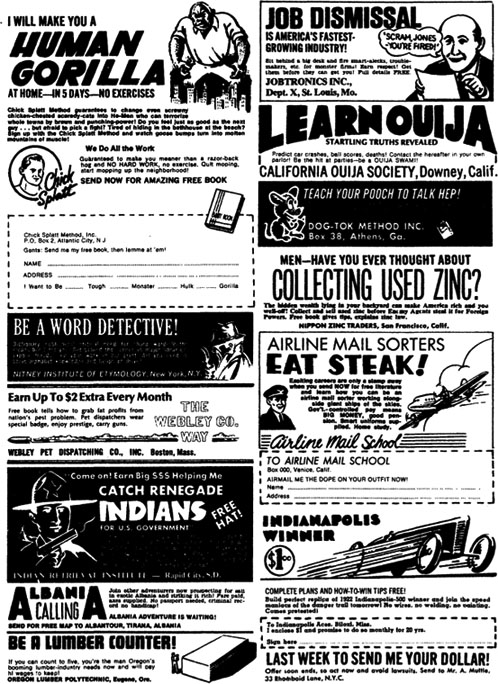

14 The Hard Sell: Advertising in America

16 The Pursuit of Pleasure: Sport and Play

17 Of Bombs and Bunkum: Politics and War

20 Welcome to the Space Age: The 1950s and Beyond

This eBook is copyright material and must not be copied, reproduced, transferred, distributed, leased, licensed or publicly performed or used in any way except as specifically permitted in writing by the publishers, as allowed under the terms and conditions under which it was purchased or as strictly permitted by applicable copyright law. Any unauthorised distribution or use of this text may be a direct infringement of the author’s and publisher’s rights and those responsible may be liable in law accordingly.

Version 1.0

Epub ISBN 9781409095699

TRANSWORLD PUBLISHERS

61–63 Uxbridge Road, London W5 5SA

A Random House Group Company


A BLACK SWAN BOOK: 9780552998055

First published in Great Britain in 1994 by Martin Secker & Warburg Ltd

Minerva edition published 1995

Black Swan edition published 1998

Copyright © Bill Bryson 1994

Illustrations copyright © by Bruce McCall

Bill Bryson has asserted his right under the Copyright, Designs and Patents Act 1988 to be identified as the author of this work.

A CIP catalogue record for this book is available from the British Library.

This book is sold subject to the condition that it shall not, by way of trade or otherwise, be lent, resold, hired out, or otherwise circulated without the publisher’s prior consent in any form of binding or cover other than that in which it is published and without a similar condition, including this condition, being imposed on the subsequent purchaser.

Addresses for Random House Group Ltd companies outside the UK can be found at: www.randomhouse.co.uk

The Random House Group Ltd Reg. No. 954009

The Random House Group Limited supports The Forest Stewardship Council (FSC), the leading international forest certification organisation. All our titles that are printed on Greenpeace approved FSC certified paper carry the FSC logo. Our paper procurement policy can be found at www.rbooks.co.uk/environment

Typeset in 10.5/13pt Giovanni by Falcon Oast Graphic Art Ltd.

Printed in the UK by CPI Cox & Wyman, Reading, RG1 8EX.

20 19

About the Author

Bill Bryson is one of the funniest writers alive. For the past two decades he has been entertaining readers with bravura displays of wit and wisdom. His first book, The Lost Continent, in which he put small town America under the microscope, was an instant classic of modern travel literature. Although he has returned to America many times since, never has he been more funny, more memorable, more acute than in his most recent book, The Life and Times of the Thunderbolt Kid, in which he revisits that most fecund of topics, his childhood. The trials and tribulations of growing up in 1950s America are all here. Des Moines, Iowa, is recreated as a backdrop to a golden age where everything was good for you, including DDT, cigarettes and nuclear fallout. This is as much a story about an almost forgotten, innocent America as it is about Bryson’s childhood. The past is a foreign country. They did things differently then ...

Bill Bryson’s bestselling travel books include The Lost Continent, Notes from a Small Island, A Walk in the Woods and Down Under. A Short History of Nearly Everything was shortlisted for the Samuel Johnson Prize, and won the Aventis Prize for Science Books and the Descartes Science Communication Prize. His latest book is his bestselling childhood memoir, The Life and Times of the Thunderbolt Kid.

To David, Felicity, Catherine and Sam

List of Illustrations

Founding Fathers’ Day, Plymouth Rock

Dame Railway and Her Choo-Choo Court, Cincinnati Ironmongery Fair, 1852

Let us show you for just $1 – how to pack BIG ad ideas into small packages!!

Hoplock’s amazing catch in the 1946 World Series

Wing dining, somewhere over France, 1929

New as nuclear fission and twice as powerful – that’s the new, newer, newest, all-new Bulgemobile!!

Acknowledgements

Among the many people to whom I am indebted for assistance and encouragement during the preparation of this book, I would like especially to thank Maria Guarnaschelli, Geoff Mulligan, Max Eilenberg, Carol Heaton, Dan Franklin, Andrew Franklin, John Price, Erla Zwingle, Karen Voelkening, Oliver Salzmann, Hobie and Lois Morris, Heidi Du Belt, James Mansley, Samuel H. Beamesderfer, Bonita Louise Billman, Dr John L. Sommer, Allan M. Siegal, Bruce Corson, and the staffs of the Drake University Library in Des Moines and the National Geographic Society Library in Washington. Above all, and as ever, my infinite, heartfelt thanks and admiration to my wife, Cynthia.

Introduction

In the 1940s, a British traveller to Anholt, a small island fifty miles out in the Kattegat strait between Denmark and Sweden, noticed that the island children sang a piece of doggerel that was clearly nonsense to them. It went:

Jeck og Jill

Vent op de hill

Og Jell kom tombling after.

The ditty, it turned out, had been brought to the island by occupying British soldiers during the Napoleonic wars, and had been handed down from generation to generation of children for 130 years, even though the words meant nothing to them.

In London, this small discovery was received with interest by a couple named Peter and Iona Opie. The Opies had dedicated their lives to the scholarly pursuit of nursery rhymes. No one had put more effort into investigating the history and distribution of these durable but largely uncelebrated components of childhood life. Something that had long puzzled the Opies was the curious fate of a rhyme called ‘Brow Bender’. Once as popular as ‘Humpty Dumpty’ and ‘Hickory Dickory Dock’, it was routinely included in children’s nursery books up until the late eighteenth century, but then it quietly and mysteriously vanished. It had not been recorded in print anywhere since 1788. Then one night as the Opies’ nanny was tucking their children into bed, they overheard her reciting a nursery rhyme to them. It was, as you will have guessed, ‘Brow Bender’, exactly as set down in the 1788 version and with five lines never before recorded.

Now what, you may reasonably ask, does any of this have to do with a book on the history and development of the English language in America? I bring it up for two reasons. First, to make the point that it is often the little, unnoticed things that are most revealing about the history and nature of language. Nursery rhymes, for example, are fastidiously resistant to change. Even when they make no sense, as in the case of ‘Jack and Jill’ with children on an isolated Danish isle, they are generally passed from generation to generation with solemn precision, like a treasured incantation. Because of this, they are often among the longest-surviving features of any language. ‘Eenie, meenie, minie, mo’ is based on a counting system that predates the Roman occupation of Britain, and that may even be pre-Celtic. If so, it is one of our few surviving links with the very distant past. It not only gives us a fragmentary image of how children were being amused at the time that Stonehenge was built, but tells us something about how their elders counted and thought and ordered their speech. Little things, in short, are worth looking at.

The second point is that songs, words, phrases, ditties – any feature of language at all – can survive for long periods without anyone particularly noticing, as the Opies discovered with ‘Brow Bender’. That a word or phrase hasn’t been recorded tells us only that it hasn’t been recorded, not that it hasn’t existed. The inhabitants of England in the age of Chaucer commonly used an expression, to be in hide and hair, meaning to be lost or beyond discovery. But then it disappears from the written record for four hundred years before resurfacing, suddenly and unexpectedly, in America in 1857 as neither hide nor hair. It is clearly unlikely that the phrase went into a linguistic coma for four centuries. So who was quietly preserving it for four hundred years, and why did it so abruptly return to prominence in the sixth decade of the nineteenth century in a country two thousand miles away?

Why, come to that, did the Americans save such good old English words as skedaddle and chitterlings and chore, but not fortnight or heath? Why did they keep the irregular British pronunciations in words like colonel and hearth, but go their own way with lieutenant and schedule and clerk? Why, in short, is American English the way it is?

This is, it seems to me, a profoundly worthwhile and fascinating question, and yet until relatively recent times it is one that hardly anyone thought to ask. Until well into this century serious studies of American speech were left almost entirely to amateurs – people like the heroic Richard Harwood Thornton, an English-born lawyer who devoted years of his spare time to poring through books, journals and manuscripts from the earliest colonial period in search of the first appearances of hundreds of American terms. In 1912 he produced the two-volume American Glossary. It was a work of invaluable scholarship, yet he could not find a single American publisher prepared to take it on. Eventually, to the shame of American scholarship, it was published in London.

Not until the 1920s and ’30s, with the successive publications of H. L. Mencken’s incomparable The American Language, George Philip Krapp’s The English Language in America and Sir William Craigie and James R. Hulbert’s Dictionary of American English on Historical Principles, did America at last get books that seriously addressed the question of its language. But by then the inspiration behind many hundreds of American expressions had passed into the realms of the unknowable, so that now no one can say why Americans paint the town red, talk turkey, take a powder or hit practice flies with a fungo bat.

This book is a modest attempt to examine how and why American speech came to be the way it is. It is not, I hope, a conventional history of the American language. Much of it is unashamedly discursive. You could be excused for wondering what Mrs Stuyvesant Fish’s running over her servant three times in succession with her car has to do with the history and development of the English language in the United States, or how James Gordon Bennett’s lifelong habit of yanking the cloths from every table he passed in a restaurant connects to the linguistic development of the American people. I would argue that unless we understand the social context in which words were formed – unless we can appreciate what a bewildering novelty the car was to those who first encountered it, or how dangerously extravagant and out of touch with the masses a turn-of-the-century business person could be – we cannot begin to appreciate the richness and vitality of the words that make American speech.

Oh, and I’ve included them for a third reason: because I thought they were interesting and hoped you might enjoy them. One of the small agonies of researching a book like this is that you come across stories that have no pressing relevance to the topic and must be let lie. I call them Ray Buduick stories. I came across Ray Buduick when I was thumbing through a 1941 volume of Time magazines looking for something else altogether. It happened that one day in that year Buduick decided, as he often did, to take his light aircraft up for an early Sunday morning spin. Nothing remarkable in that, except that Buduick lived in Honolulu and that this particular morning happened to be 7 December 1941. As he headed out over Pearl Harbour, Buduick was taken aback, to say the least, to find the western skies dense with Japanese Zeros, all bearing down on him. The Japanese raked his plane with fire and Buduick, presumably issuing utterances along the lines of ‘Golly Moses!’, banked sharply and cleared off. Miraculously he managed to land his plane safely in the midst of the greatest airborne attack yet seen in history, and lived to tell the tale, and in so doing became the first American to engage the Japanese in combat, however inadvertently.

Of course, this has nothing at all to do with the American language. But everything else that follows does. Honestly.

Founding Fathers’ Day, Plymouth Rock

1

The Mayflower and Before

I

The image of the spiritual founding of America that generations of Americans have grown up with was created, oddly enough, by a poet of limited talents (to put it in the most magnanimous possible way) who lived two centuries after the event in a country three thousand miles away. Her name was Felicia Dorothea Hemans and she was not American but Welsh. Indeed, she had never been to America and appears to have known next to nothing about the country. It just happened that one day in 1826 her local grocer in north Wales wrapped her purchases in a sheet of two-year-old newspaper from Massachusetts, and her eye was caught by a small article about a founders’ day celebration in Plymouth. It was very probably the first she had heard of the Mayflower or the Pilgrims, but inspired as only a mediocre poet can be, she dashed off a poem, ‘The Landing of the Pilgrim Fathers (in New England)’, which begins:

The breaking waves dashed high

On a stern and rock-bound coast,

And the woods, against a stormy sky,

Their giant branches toss’d

And the heavy night hung dark

The hills and water o’er,

When a band of exiles moor’d their bark

On the wild New England shore ...

and continues in a vigorously grandiloquent, indeterminately rhyming vein for a further eight stanzas. Although the poem was replete with errors – the Mayflower was not a bark, it was not night when they moored, Plymouth was not ‘where first they trod’ but in fact their fourth landing site – it became an instant classic, and formed the essential image of the Mayflower landing that most Americans carry with them to this day.*1

The one thing the Pilgrims certainly didn’t do was step ashore on Plymouth Rock. Quite apart from the consideration that it may have stood well above the high-water mark in 1620, no prudent mariner would try to bring a ship alongside a boulder on a heaving December sea when a sheltered inlet beckoned from near by. Indeed, it is doubtful that the Pilgrims even noticed Plymouth Rock. No mention of the rock is found among any of the surviving documents and letters of the age, and it doesn’t make its first recorded appearance until 1715, almost a century later.1 Not until about the time Ms Hemans wrote her swooping epic did Plymouth Rock become indelibly associated with the landing of the Pilgrims.

Wherever they first trod, we can assume that the 102 Pilgrims stepped from their storm-tossed little ship with unsteady legs and huge relief. They had just spent nine and a half damp and perilous weeks at sea, crammed together on a creaking vessel about the size of a modern double-decker bus. The crew, with the customary graciousness of sailors, referred to them as puke stockings, on account of their apparently boundless ability to spatter the latter with the former, though in fact they had handled the experience reasonably well.2 Only one passenger had died en route, and two had been added through births (one of whom revelled ever after in the exuberant name of Oceanus Hopkins).

They called themselves Saints. Those members of the party who were not Saints they called Strangers. Pilgrims, in reference to these early voyagers, would not become common for another two hundred years. Nor, strictly speaking, is it correct to call them Puritans. They were Separatists, so called because they had left the Church of England. Puritans were those who remained in the Anglican Church but wished to purify it. They would not arrive in America for another decade, but when they did they would quickly eclipse, and eventually absorb, this little original colony.

It would be difficult to imagine a group of people more ill-suited to a life in the wilderness. They packed as if they had misunderstood the purpose of the trip. They found room for sundials and candle snuffers, a drum, a trumpet, and a complete history of Turkey. One William Mullins packed 126 pairs of shoes and 13 pairs of boots. Yet between them they failed to bring a single cow or horse or plough or fishing line. Among the professions represented on the Mayflower’s manifest were two tailors, a printer, several merchants, a silk worker, a shopkeeper and a hatter – occupations whose importance is not immediately evident when one thinks of surviving in a hostile environment.3 Their military commander, Miles Standish, was so diminutive of stature that he was known to all as ‘Captain Shrimpe’4 – hardly a figure to inspire awe in the savage natives whom they confidently expected to encounter. With the uncertain exception of the little captain, probably none in the party had ever tried to bring down a wild animal. Hunting in seventeenth-century Europe was a sport reserved for the aristocracy. Even those who labelled themselves farmers generally had scant practical knowledge of husbandry, since farmer in the 1600s, and for some time afterwards, signified an owner of land rather than one who worked it.

They were, in short, dangerously unprepared for the rigours ahead, and they demonstrated their manifest incompetence in the most dramatic possible way: by dying in droves. Six expired in the first two weeks, eight the next month, seventeen more in February, a further thirteen in March. By April, when the Mayflower set sail back to England,*2 just fifty-four people, nearly half of them children, were left to begin the long work of turning this tenuous toehold into a self-sustaining colony.5

At this remove, it is difficult to conceive just how alone this small, hapless band of adventurers was. Their nearest kindred neighbours – at Jamestown in Virginia and at a small and now all but forgotten colony at Cupers (now Cupids) Cove in Newfoundland*3 – were five hundred miles off in opposite directions. At their back stood a hostile ocean, and before them lay an inconceivably vast and unknown continent of ‘wild and savage hue’, in William Bradford’s uneasy words. They were about as far from the comforts of civilization as anyone had ever been (certainly as far as anyone had ever been without a fishing line).

For two months they tried to make contact with the natives, but every time they spotted any, the Indians ran off. Then one day in February a young brave of friendly mien approached a party of Pilgrims on a beach. His name was Samoset and he was a stranger in the region himself, but he had a friend named Tisquantum from the local Wampanoag tribe, to whom he introduced them. Samoset and Tisquantum became the Pilgrims’ fast friends. They showed them how to plant corn and catch wildfowl and helped them to establish friendly relations with the local sachem, or chief. Before long, as every schoolchild knows, the Pilgrims were thriving, and Indians and settlers were sitting down to a cordial Thanksgiving feast. Life was grand.

A question that naturally arises is how they managed this. Algonquian, the language of the eastern tribes, is an extraordinarily complex and agglomerative tongue (or more accurately family of tongues), full of formidable consonant clusters that are all but unpronounceable to the untutored, as we can see from the first primer of Algonquian speech prepared some twenty years later by Roger Williams in Rhode Island (a feat of scholarship deserving of far wider fame, incidentally). Try saying the following and you may get some idea of the challenge:

Nquitpausuckowashâwmen – There are a hundred of us.

Chénock wonck cuppee-yeâumen? – When will you return?

Tashúckqunne cummauchenaûmis – How long have you been sick?

Ntannetéimmin – I will be going.6

Clearly this was not a language you could pick up in a weekend, and the Pilgrims were hardly gifted linguists. They weren’t even comfortable with Tisquantum’s name; they called him Squanto. The answer, surprisingly glossed over by most history books, is that the Pilgrims didn’t have to learn Algonquian for the happy and convenient reason that Samoset and Squanto spoke English. Samoset spoke it only a little, but Squanto spoke English with total assurance (and Spanish into the bargain).

That a straggly band of English settlers could in 1620 cross a vast ocean and find a pair of Indians able to welcome them in their own tongue seems little short of miraculous. It was certainly lucky – the Pilgrims would very probably have perished or been slaughtered without them – but not as wildly improbable as it at first seems. The fact is that in 1620 the New World wasn’t really so new at all.

II

No one knows who the first European visitors to the New World were. The credit generally goes to the Vikings, who reached the New World in about AD 1000, but there are grounds to suppose that others may have been there even earlier. An ancient Latin text, the Navigatio Sancti Brendani Abbatis (The Voyage of St. Brendan the Abbot), recounts with persuasive detail a seven-year trip to the New World made by this Irish saint and a band of acolytes some four centuries before the Vikings – and this on the advice of another Irishman who claimed to have been there earlier still.

Even the Vikings didn’t think themselves the first. Their sagas record that when they first arrived in the New World they were chased from the beach by a group of wild white people. They subsequently heard stories from natives of a settlement of Caucasians who ‘wore white garments and ... carried poles before them to which rags were attached’7 – precisely how an Irish religious procession would have looked to the uninitiated. (Intriguingly, five centuries later Columbus’s men would hear a similar story in the Caribbean.)

Whether by Irish or Vikings – or Italians or Welsh or Bretons or any of the many other groups for whom credit has been sought – crossing the Atlantic in the Middle Ages was not quite as daring a feat as it would at first appear, even allowing for the fact that it was done in small, open boats. The North Atlantic is conveniently scattered with islands that could serve as stepping-stones – the Shetland Islands, Faeroes, Iceland, Greenland and Baffin. It would be possible to sail from Scandinavia to Canada without once crossing more than 250 miles of open sea.

We know beyond doubt that Greenland – and thus, technically, North America – was discovered in 982 by one Eric the Red, father of Leif Ericson (or Leif Eríksson), and that he and his followers began settling it in 986. Anyone who has ever flown over the frozen wastes of Greenland could be excused for wondering what they saw in the place. But in fact Greenland’s southern fringes are further south than Oslo and offer an area of grassy lowlands as big as the whole of Britain.8 Certainly it suited the Vikings. For nearly five hundred years they kept a thriving colony there, which at its peak boasted sixteen churches, two monasteries, some 300 farms and a population of 4,000. But the one thing Greenland lacked was wood with which to build new ships and repair old ones – a somewhat vital consideration for a sea-going people. Iceland, the nearest land mass to the east, was known to be barren. The most natural thing would be to head west to see what was out there. In about 1000, according to the sagas, Leif Ericson did just that. His expedition found a new land mass, probably Baffin Island, far up in northern Canada, over a thousand miles north of the present-day United States, and went on to discover several other places, most notably the region they called Vinland.

Vinland is one of history’s more tantalizing posers. No one knows where it was. By careful readings of the sagas and calculations of Viking sailing times, various scholars have put Vinland all over the place – on Newfoundland or Nova Scotia, in Massachusetts or even as far south as Virginia. A Norwegian scholar named Helge Ingstad claimed in 1964 to have found Vinland at a place called L’Anse aux Meadows in Newfoundland. Others suggest that the artefacts Ingstad found were not of Viking origin at all, but merely the detritus of later French colonists.9 No one knows. The name is no help. According to the sagas, the Vikings called it Vinland because of the grape-vines they found growing in profusion there. The problem is that no place within a thousand miles of where they must have been could possibly have supported grapes. One possible explanation is that Vinland was a mistranslation. Vinber, the Viking word for grapes, could be used to describe many other fruits – cranberries, gooseberries and red currants, among them – that might have been found at these northern latitudes. Another possibility is that Vinland was merely a bit of deft propaganda, designed to encourage settlement. These were, after all, the people who thought up the name Greenland.

The Vikings made at least three attempts to build permanent settlements in Vinland, the last in 1013, before finally giving up. Or possibly not. What is known beyond doubt is that sometime after 1408 the Vikings abruptly disappeared from Greenland. Where they went or what became of them is unknown.10 The tempting presumption is that they found a more congenial life in North America.

There is certainly an abundance of inexplicable clues. Consider the matter of lacrosse, a game long popular with Indians across wide tracts of North America. Interestingly, the rules of lacrosse are uncannily like those of a game played by the Vikings, including one feature – the use of paired teammates who may not be helped or impeded by other players – so unusual, in the words of one anthropologist, ‘as to make the probability of independent origin vanishingly small’. Then there were the Haneragmiuts, a tribe of Inuits living high above the Arctic Circle on Victoria Island in northern Canada, a place so remote that its inhabitants were not known to the outside world until early this century. Yet several members of the tribe not only looked unsettlingly European but were found to be carrying indubitably European genes.11 No one has ever provided a remotely satisfactory explanation of how this could be. Or consider the case of Olof and Edward Ohman, father and son respectively, who in 1898 were digging up tree stumps on their farm near Kensington, Minnesota, when they came upon a large stone slab covered with runic inscriptions, which appear to describe how a party of thirty Vikings had returned to that spot after an exploratory survey to find the ten men they had left behind ‘red with blood and dead’. The inscriptions have been dated to 1362. The one problem is how to explain why a party of weary explorers, facing the prospect of renewed attack by hostile natives, would take the time to make elaborate carvings on a rock deep in the American wilderness, thousands of miles from where anyone they knew would be able to read it. Still, if a hoax, it was executed with unusual skill and verisimilitude.

All this is by way of making the point that word of the existence of a land beyond the Ocean Sea, as the Atlantic was then known, was filtering back to Europeans long before Columbus made his epic voyage. The Vikings did not operate in isolation. They settled all over Europe and their exploits were widely known. They even left a map – the famous Vinland map – which is known to have been circulating in Europe by the fourteenth century. We don’t positively know that Columbus was aware of this map, but we do know that the course he set appeared to be making a beeline for the mythical island of Antilla, which featured on it.

Columbus never found Antilla or anything else he was looking for. His epochal voyage of 1492 was almost the last thing – indeed almost the only thing – that went right in his life. Within eight years, he would find himself summarily relieved of his post as Admiral of the Ocean Sea, returned to Spain in chains and allowed to sink into such profound obscurity that even now we don’t know for sure where he is buried. To achieve such a precipitous fall in less than a decade required an unusual measure of incompetence and arrogance. Columbus had both.

He spent most of those eight years bouncing around the islands of the Caribbean and coasts of South America without ever having any real idea of where he was or what he was doing. He always thought that Cipangu, or Japan, was somewhere near by and never divined that Cuba was an island. To his dying day he insisted that it was part of the Asian mainland (though there is some indication that he may have had his own doubts since he made his men swear under oath that it was Asia or have their tongues cut out). His geographic imprecision is most enduringly preserved in the name he gave to the natives: Indios, which of course has come down to us as Indians. He cost the Spanish crown a fortune and gave in return little but broken promises. And throughout he behaved with the kind of impudence – demanding to be made hereditary Admiral of the Ocean Sea, as well as Viceroy and Governor of the lands that he conquered, and to be granted one-tenth of whatever wealth his enterprises generated – that all but invited his eventual downfall.

In this he was not alone. Many other New World explorers met misfortune in one way or another. Juan Díaz de Solís and Giovanni da Verrazano were eaten by natives. Balboa, after discovering the Pacific, was executed on trumped-up charges, betrayed by his colleague Francisco Pizarro, who in his turn ended up murdered by rivals. Hernando de Soto marched an army pointlessly all over the south-eastern US for four years until he caught a fever and died. Scores of adventurers, enticed by tales of fabulous cities – Quivira, Bimini, the City of the Caesars and Eldorado (‘the gilded one’) – went looking for wealth, eternal youth, or a shortcut to the Orient and mostly found misery. Their fruitless searches live on, sometimes unexpectedly, in the names on the landscape. California commemorates a Queen Califía, unspeakably rich but unfortunately non-existent. The Amazon is named for a tribe of one-breasted women. Brazil and the Antilles recall fabulous, but also fictitious, islands.

Further north the English fared little better. Sir Humphrey Gilbert perished in a storm off the Azores in 1583 after trying unsuccessfully to found a colony on Newfoundland. His half-brother Sir Walter Raleigh attempted to establish a settlement in Virginia and lost a fortune, and eventually his head, in the effort. Henry Hudson pushed his crew a little too far while looking for a north-west passage and found himself, Bligh-like, being put to sea in a little boat, never to be seen again. The endearingly hopeless Martin Frobisher explored the Arctic region of Canada, found what he thought was gold and carried 1,500 tons of it home on a dangerously overloaded boat only to be informed that it was worthless iron pyrite. Undaunted, Frobisher returned to Canada, found another source of gold, carted 1,300 tons of it back and was informed, no doubt with a certain weariness on the part of the royal assayer, that it was the same stuff. After that, we hear no more of Martin Frobisher.

It is interesting to speculate what these daring adventurers would think if they knew how whimsically we commemorate them today. Would Giovanni da Verrazano think being eaten by cannibals a reasonable price to pay for having his name attached to a toll bridge between Brooklyn and Staten Island? I suspect not. De Soto found transient fame in the name of an automobile, Frobisher in a distant icy bay, Raleigh in a city in North Carolina, a brand of cigarettes and a type of bicycle, Hudson in several waterways and a chain of department stores. On balance, Columbus, with a university, two state capitals, a country in South America, a province in Canada and high schools almost without number, among a great deal else, came out of it pretty well. But in terms of linguistic immortality no one got more mileage from less activity than a shadowy Italian-born businessman named Amerigo Vespucci.

A Florentine who had moved to Seville where he ran a ship supply business (one of his customers was his compatriot Christopher Columbus), Vespucci seemed destined for obscurity. How two continents came to be named in his honour required an unlikely measure of coincidence and error. Vespucci did make some voyages to the New World (authorities differ on whether it was three or four), but always as a passenger or lowly officer. He was not, by any means, an accomplished seaman. Yet in 1504–5, there began circulating in Florence letters of unknown authorship, collected under the title Nuovo Mundo (New World), which stated that Vespucci had not only been captain of these voyages but had discovered the New World.

The mistake would probably have gone no further except that an instructor at a small college in eastern France named Martin Waldseemüller was working on a revised edition of Ptolemy and decided to freshen it up with a new map of the world. In the course of his research he came upon the Florentine letters and, impressed with their spurious account of Vespucci’s exploits, named the continent in his honour. (It wasn’t quite as straightforward as that: first he translated Amerigo into the Latin Americus and then transformed that into its feminine form, America, on the ground that Asia and Europe were feminine. He also considered the name Amerige.) Even so it wasn’t until forty years later that people began to refer to the New World as America, and then they meant by it only South America.

Vespucci did have one possible, if slightly marginal, claim to fame. He is thought to have been the brother of Simonetta Vespucci, the model for Venus in the famous painting by Botticelli.12

III

Since neither Columbus nor Vespucci ever set foot on the land mass that became the United States, it would be more aptly named for Giovanni Caboto, an Italian mariner better known to history by his Anglicized name of John Cabot. Sailing from Bristol in 1495, Cabot ‘discovered’ Newfoundland and possibly Nova Scotia and a number of smaller islands, and in the process became the first known European to visit North America, though in fact he more probably was merely following fishing fleets that were trawling the Grand Banks already. What is certain is that in 1475, because of war in Europe, British fishermen lost access to the traditional fishing grounds off Iceland. Yet British cod stocks did not fall, and in 1490 (two years before Columbus sailed) when Iceland offered the British fishermen the chance to come back, they declined. The presumption is that they had discovered the cod-rich waters off Newfoundland and didn’t want anyone else to know about them.13

Whether Cabot inspired the fishermen or they him, by the early 1500s the Atlantic was thick with English vessels. A few came to prey on Spanish treasure ships, made sluggish and vulnerable by the weight of gold and silver they were carrying back to the Old World. Remarkably good money could be made from this.*4 On a single voyage Sir Francis Drake returned to England with booty worth $60 million in today’s money.14 On the same voyage, Drake briefly put ashore in what is now Virginia, claimed it for the crown and called it New Albion.15

To give the claim weight, and to provide a supply base for privateers, Queen Elizabeth I decided it might be an idea to establish a colony. She gave the task to Sir Walter Raleigh. The result was the ill-fated ‘lost colony’ of Roanoke, whose 114 members were put ashore just south of Albemarle Sound in what is now North Carolina in 1587. From that original colony sprang seven names that still feature on the landscape: Roanoke (which has the distinction of being the first Indian word borrowed by English settlers), Cape Fear, Cape Hatteras, the Chowan and Neuse rivers, Chesapeake and Virginia.16 (Previously Virginia had been called Windgancon, meaning ‘what gay clothes you wear’ – apparently what the locals had replied when an early reconnoitring party had asked them what they called the place.) But that, alas, was about all the colony achieved. Because of the war with Spain, no English ship was able to return for three years. When at last a relief ship called, it found the colony deserted. For years afterwards, visitors would occasionally spot a blond-haired Indian child, and the neighbouring Croatoan tribe was eventually discovered to have incorporated several words of Elizabethan English into its own tongue, but no firmer evidence of the colony’s fate was ever found.

Other settlements followed, among them the now forgotten Popham Colony, formed in 1610 in what is now Maine, but abandoned after two years, and the rather more durable but none the less ever-precarious colony of Jamestown, founded in Virginia in 1607.

Mostly, however, what drew the English to the New World was the fishing, especially along the almost unimaginably bounteous waters off the north-east coast of North America. For at least 120 years before the Mayflower set sail, European fishing fleets had been an increasingly common sight along the eastern seaboard. Often the fleets would put ashore to dry fish, replenish stocks of food and water, or occasionally wait out a harsh winter. As many as a thousand fishermen at a time would gather on the beaches. It was from such groups that Samoset had learned his few words of English.

As a result, by 1620 there was scarcely a bay in New England and eastern Canada that didn’t bear some relic of their passing. The Pilgrims themselves soon came upon an old cast-iron cooking pot, obviously of European origin, and while plundering some Indian graves (an act of crass injudiciousness, all but inviting their massacre) they uncovered the body of a blond-haired man, ‘possibly a Frenchman who had died in captivity’.17

New England may have been a new world to the Pilgrims, but it was hardly terra incognita. Much of the land around them had already been mapped. Eighteen years earlier, Bartholomew Gosnold and a party described as ‘24 gentlemen and eight sailors’ had camped for a few months on nearby Cuttyhunk Island and left behind many names, two of which endure: Cape Cod and the romantically mysterious Martha’s Vineyard (mysterious because we don’t know who Martha was).

Seven years before, John Smith, passing by on a whaling expedition, had remapped the region, diligently taking heed of the names the Indians themselves used. He added just one name of his own devising: New England. (Previously the region had been called Norumbega on most maps. No one now has any clear idea why.) But in a consummate display of brown-nosing, upon his return to England Smith presented his map to Charles Stuart, the sixteen-year-old heir apparent, along with a note ‘humbly intreating’ his Highness ‘to change their barbarous names for such English, as posterity might say Prince Charles was their Godfather.’ The young prince fell to the task with relish. He struck out most of the Indian names that Smith had so carefully transcribed and replaced them with a whimsical mix that honoured himself and his family, or that simply took his fancy. Among his creations were Cape Elizabeth, Cape Anne, the Charles River and Plymouth. In consequence, when the Pilgrims landed at Plymouth one of the few tasks they didn’t have to manage was thinking up names for the landmarks all around them. They were already named.

Sometimes the early explorers took Indians back to Europe with them. Such had been the fate of the heroic Squanto, whose life story reads like an implausible picaresque novel. He had been picked up by a seafarer named George Weymouth in 1605 and carried off – whether voluntarily or not is unknown – to England. There he had spent nine years working at various jobs before returning to the New World as interpreter for John Smith on his voyage of 1613. As reward for his help, Smith gave Squanto his liberty. But no sooner had Squanto been reunited with his tribe than he and nineteen of his fellows were kidnapped by another Englishman, who carried them off to Málaga and sold them as slaves. Squanto worked as a house servant in Spain before somehow managing to escape to England, where he worked for a time for a merchant in the City of London before finally, in 1619, returning to the New World on yet another exploratory expedition of the New England coast.18 Altogether he had been away for nearly fifteen years, and he returned to find that only a short while before his tribe had been wiped out by a plague – almost certainly smallpox introduced by visiting sailors.

Thus Squanto had certain grounds to be disgruntled. Not only had Europeans inadvertently exterminated his tribe, but twice had carried him off and once sold him into slavery. Fortunately for the Pilgrims, Squanto was of a forgiving nature. Having spent the greater part of his adult life among the English, he may well have felt more comfortable among Britons than among his own people. In any case, he settled with them and for the next year, until he died of a sudden fever, served as their faithful teacher, interpreter, ambassador and friend. Thanks to him, the future of English in the New World was assured.

The question of what kind of English it was, and would become, lies at the heart of what follows.

2

Becoming Americans

We whoſe names are underwritten, the loyal subjects of our dread Sovereigne Lord, King James, by ye grace of God, of Great Britaine, France and Ireland, King, defender of ye faith, etc., haveing undertaken for ye glory of God and advancement of ye Christian faith, and honour of our King and countrie, a voyage to plant ye firſt Colonie in ye Northerne parts of Virginia, doe by theſe presents Solemnly, and mutualy ... covenant and combine ourſelves togeather into a civil body politick for our better ordering and preſervation and furtherance of ye end aforeſaid ...

So begins the Mayflower Compact, written in 1620 shortly before the Mayflower Pilgrims stepped ashore. The passage, I need hardly point out, contains some differences from modern English. We no longer use ſ interchangeably with s, or ye for the.*5 A few spellings – Britaine, togeather, Northerne – clearly vary from modern practice, but generally only slightly and not enough to confuse us, whereas only a generation before we would find far greater irregularities (for example, gelousie, conseil, audacite, wiche, loware for jealousy, council, audacity, which and lower). We would not nowadays refer to a ‘dread sovereign’, and if we did we would not mean by it one to be held in awe. But allowing for these few anachronisms, the passage is clear, recognizable, wholly accessible English. Were we, however, somehow to be transported to the Plymouth colony of 1620 and allowed to eavesdrop on the conversations of those who drew up and signed the Mayflower Compact, we would almost certainly be astonished at how different – how frequently incomprehensible – much of their spoken language would be to us. Though it would be clearly identifiable as English, it would be a variety of English unlike any we had heard before. Among the differences that would most immediately strike us:

- Kn-, which was always sounded in Middle English, was at the time of the Pilgrims going through a transitional phase in which it was commonly pronounced tn. Where the Pilgrims’ parents or grandparents would have pronounced knee as ‘kuh-nee,’ they themselves would have been more likely to say ‘t’nee.’

- The interior gh in words like night and light had been silent for about a generation, but on or near the end of words – in laugh, nought, enough, plough – it was still sometimes pronounced, sometimes left silent and sometimes given an f sound.

- There was no sound equivalent to the ah in the modern father and calm. Father would have rhymed with the present-day gather and calm with ram.

- Was was pronounced not ‘woz’ but ‘wass’, and remained so, in some circles at least, long enough for Byron to rhyme it with pass. Conversely, kiss was often rhymed with is.

- War rhymed with car or care. It didn’t gain its modern pronunciation until sometime after the turn of the nineteenth century.1

- Home was commonly spelled ‘whome’ and pronounced, by at least some speakers, as it was spelled, with a distinct wh- sound.

- The various o and u sounds were, to put it mildly, confused and unsettled. Many people rhymed cut with put, plough with screw, book with moon, blood with load. Dryden, as late as the second half of the seventeenth century, made no distinction between flood, mood and good, though quite how he intended them to be pronounced is anybody’s guess. The vicissitudes of the wandering oo sound are evident both in its multiplicity of modern pronunciations (for example, flood, wood, mood) and the number of such words in which the pronunciation is not fixed even now, notably roof, soot and hoof.

- Oi was sounded with a long i, so that coin’d sounded like kind and voice like vice. The modern oi sound was sometimes heard, but was considered a mark of vulgarity until about the time of the American Revolution.

- Words that now have a short e were often pronounced and sometimes spelled with a short i. Shakespeare commonly wrote ‘bin’ for been, and as late as the tail-end of the eighteenth century Benjamin Franklin was defending a short i pronunciation for get, yet, steady, chest, kettle and the second syllable of instead2 – though by this time he was fighting a losing battle.

- Speech was in general much broader, with stresses and a greater rounding of rs. A word like never would have been pronounced more like ‘nev-arrr’.3 Interior vowels and consonants were more frequently suppressed, so that nimbly became ‘nimly’, fault and salt became ‘faut’ and ‘saut’, somewhat was ‘summat’. Other letter combinations were pronounced in ways strikingly at variance with their modern forms. In his Special Help to Orthographie or the True-writing of English (1643), a popular book of the day, Richard Hodges listed the following pairs of words as being ‘so neer alike in sound ... that they are sometimes taken one for another’: ream and realm, shoot and suit, room and Rome, were and wear, poles and Paul’s, flea and flay, eat and ate, copies and coppice, person and parson, Easter and Hester, Pierce and parse, least and lest. The spellings – and misspellings – of names in the earliest records of towns like Plymouth and Dedham give us some idea of how much more fluid early colonial pronunciation was. These show a man named Parson sometimes referred to as Passon and sometimes as Passen; a Barsham as Barsum or Bassum; a Garfield as Garfill; a Parkhurst as Parkis; a Holmes as Holums; a Pickering as Pickram; a St John as Senchion; a Seymour as Seamer; and many others.4

- Differences in idiom abounded, notably with the use of definite and indefinite articles. As Baugh and Cable note, Shakespeare commonly discarded articles where we would think them necessary – ‘creeping like snail’, ‘with as big heart as thou’ and so on – but at the same time he employed them where we would not, so that where we say ‘at length’ and ‘at last’, he wrote ‘at the length’ and ‘at the last’. The preposition of was also much more freely employed. Shakespeare used it in many places where we would require another: ‘it was well done of [by] you’, ‘I brought him up of [from] a puppy’, ‘I have no mind of [for] feasting’, ‘That did but show thee of [as] a fool’, and so on.5 One relic of this practice survives in American English in the way we tell time. Where Americans commonly say that it is ‘ten of three’ or ‘twenty of four’, the British only say ‘ten to’ or ‘twenty to’.

- Er and ear combinations were frequently, if not invariably, pronounced ‘ar’, so that convert became ‘convart’, heard was ‘hard’ (though also ‘heerd’), and serve was ‘sarve’. Merchant was pronounced and often spelled ‘marchant’. The British preserve the practice in several words today – as with clerk and derby, for instance – but in America the custom was long ago abandoned except for a few well-established exceptions like heart, hearth and sergeant, or else the spelling was amended, as with making sherds into shards or Hertford, Connecticut, into Hartford.

- Generally, words containing ea combinations – tea, meat, deal and so on – were pronounced with a long a sound (and of course many still are: great, break, steak, for instance), so that, for example, meal and mail were homonyms. The modern ee pronunciation was just emerging, so that Shakespeare could, as his whim took him, rhyme please with either grace or knees. Among more conservative users the old style persisted well into the eighteenth century, as in the well-known lines by the poet William Cowper:

I am monarch of all I survey ...

From the centre all round to the sea.

Different as this English was from modern English, it was nearly as different again from the English spoken only a generation or two before in the mid-1500s. In countless ways, the language of the Pilgrims was strikingly more advanced, less visibly rooted in the conventions and inflections of Middle English, than that of their grandparents or even parents.

The old practice of making plurals by adding -n was rapidly giving way to the newer convention of adding -s, so that by 1620 most people were saying knees instead of kneen, houses instead of housen, fleas instead of flean. The transition was by no means complete at the time of the Pilgrims – we can find eyen for eyes and shoon for shoes in Shakespeare – and indeed survives yet in a few words, notably children, brethren and oxen, but the process was well under way.

A similar transformation was happening with the terminal -th on verbs like maketh, leadeth and runneth, which also were increasingly being given an -s ending in the modern way. Shakespeare used -s terminations almost exclusively except for hath and doth.*6 Only the most conservative works, such as the King James Bible of 1611, which contains no -s forms, stayed faithful to the old pattern. Interestingly, it appears that by the early seventeenth century even when the word was spelled with a -th termination it was pronounced as if spelled with an -s. In other words, people wrote ‘hath’ but said ‘has’, saw ‘doth’ but thought ‘does’, read ‘goeth’ as ‘goes’. The practice is well illustrated in Hodges’ Special Help to Orthographie, which lists as homophones such seemingly odd bedfellows as weights and waiteth, cox and cocketh, rights and righteth, rose and roweth.

At the same time, endings in -ed were beginning to be blurred. Before the Elizabethan age, an -ed ending was accorded its full phonetic value, a practice we preserve in a few words like beloved and blessed. But by the time of the Pilgrims the modern habit of eliding the ending (except after t and d) was taking over. For nearly two hundred years, the truncated pronunciation was indicated in writing with an apostrophe – drown’d, frown’d, weav’d and so on. Not until the end of the eighteenth century would the elided pronunciation become so general as to render this spelling distinction unnecessary.

The medial t in Christmas, soften and hasten and other such words was beginning to disappear (though it has been re-introduced by many people in often). Just coming into vogue, too, was the sh sound of ocean, creation, passion and sugar. Previously such words had been pronounced as sibilants, as many Britons still say ‘tissyou’ and ‘iss-you’ for tissue and issue. The early colonists were among the first to use the new word good-bye, contracted from God be with you and still at that time often spelled ‘Godbwye’, and were among the first to employ the more democratic forms ye and you in preference to the traditional thee, thy and thou, though many drifted uncertainly between the forms, as Shakespeare himself did, even sometimes in adjoining sentences, as in 1 Henry IV: ‘I love thee infinitely. But hark you, Kate.’*7

They were also among the first to make use of the newly minted letter j. Previously i had served this purpose, so that Chaucer, for instance, wrote ientyl and ioye for gentle and joy. At first, j was employed simply as a variant of i, as ſ was a variant of s. Gradually j took on its modern juh sound, a role previously filled by g (and hence the occasional freedom in English to choose between the two letters, as with jibe and gibe).

In terms of language, the Pilgrims could scarcely have chosen a more exciting time to come. Perhaps no other period in history has been more linguistically diverse and dynamic, more accommodating to verbal invention, than that into which they were born. It was after all the age of Shakespeare, Marlowe, Lyly, Spenser, Donne, Ben Jonson, Francis Bacon, Sir Walter Raleigh, Elizabeth I. As Mary Helen Dohan has put it: ‘Had the first settlers left England earlier or later, had they learned their speechways and their attitudes, linguistic and otherwise, in a different time, our language – like our nation – would be a different thing.’6

Just in the century or so that preceded the Pilgrims’ arrival in the New World, English gained 10,000 additional words, about half of them sufficiently useful as to be with us still. Shakespeare alone created some 2,000 – reclusive, gloomy, barefaced, radiance, dwindle, countless, gust, leapfrog, frugal, summit, to name but a few – but he was by no means alone in this unparalleled outpouring.

A bare sampling of words that entered English around the time of the Pilgrims gives some hint (another Shakespeare coinage, incidentally) of the lexical vitality of the age: alternative (1590); incapable (1591); noose (1600); nomination (1601); fairy, surrogate and sophisticated (1603); option (1604); creak, in the sense of a noise, and susceptible (1605); coarse, in the sense of being rough (as opposed to natural), and castigate (1607); obscenity (1608); tact (1609); commitment, slope, recrimination and gothic (1611); coalition (1612); freeze, in a metaphoric sense (1613); nonsense (1614); cult, boulder and crazy, in the sense of insanity (1617); customer (1621); inexperienced (1626).

If the Pilgrims were aware of this linguistic ferment into which they had been born, they gave little sign of it. Nowhere in any surviving colonial writings of the seventeenth century is there a single reference to Shakespeare or even the Puritans’ own revered Milton. And in some significant ways their language is curiously unlike that of Shakespeare. They did not employ the construction ‘methinks’, for instance. Nor did they show any particular inclination to engage in the new fashion of turning nouns into verbs, a practice that gave the age such perennially useful innovations as to gossip (1590), to fuel (1592), to attest (1596), to inch (1599), to preside (1611), to surround (1616), to hurt (1662) and several score others, many of which (to happy, to property, to malice) didn’t last.

Though they were by no means linguistic innovators, the peculiar circumstances in which they found themselves forced the first colonists to begin tinkering with their vocabulary almost from the first day. As early as 1622, they were using pond, which in England designated a small artificial pool, to describe large and wholly natural bodies of water, as in Walden Pond. Creek in England described an inlet of the sea; in America it came to signify a stream. For reasons that have never, so far as I can tell, been properly investigated, the colonials quickly discarded many seemingly useful English topographic words – hurst, mere, mead, heath, moor, marsh and (except in New England) brook – and began coming up with new ones, like swamp (first recorded in John Smith’s Generall Historie of Virginia in 1626),7 ravine, hollow, range (for an open piece of ground) and bluff. Often these were borrowed from other languages. Bluff, which has the distinction of being the first word attacked by the British as a misguided and obviously unnecessary Americanism, was probably borrowed from the Dutch blaf, meaning a flat board. Swamp appears to come from the German zwamp, and ravine, first recorded in 1781 in the diaries of George Washington though almost certainly used much earlier, is from the French.

Oddly, considering the extremities of the American climate, weather words were slow in arising. Snowstorm, the first meteorological Americanism, is not recorded before 1771 and no one appears to have noticed a tornado before 1804. In between came cold snap in 1776, and that about exhausts America’s contribution to the world of weather terms in its first two hundred years. Blizzard, a word without which any description of a northern American winter would seem incomplete, did not in fact come to describe a heavy snowstorm until as late as 1870, when a newspaper editor in Estherville, Iowa, applied it to a particularly fierce spring snow. The word, of unknown origin, had been coined in America some fifty years earlier, but previously had denoted a blow or series of blows, as from fists or guns.

Where they could, however, the first colonists stuck doggedly to the words of the Old World. They preserved words with the diligence of archivists. Scores, perhaps hundreds, of English terms that would later perish from neglect in their homeland live on in America thanks to the essentially conservative nature of the early colonists. Fall for autumn is perhaps the best known. It was a relatively new word at the time of the Pilgrims – its first use in England was recorded in 1545 – but it remained in common use in England until as late as the second half of the nineteenth century. Why it died out there when it did is unknown. The list of words preserved in America is practically endless. Among them: cabin in the sense of a humble dwelling, bug for any kind of insect, hog for a pig, deck as in a pack of cards and jack for a knave within the deck, raise for rear, junk for rubbish, mad in the sense of angry rather than unhinged, bushel as a common unit of measurement, closet for cupboard, adze, attic, jeer and hatchet, stocks as in stocks and bonds, cross-purposes, livestock, gap and (principally in New England) notch for a pass through hills, gully for a ravine, rooster for the male fowl, slick as a variant of sleek, zero for nought, back and forth (instead of backwards and forwards), plumb in the sense of utter or complete, noon*8 in favour of midday, molasses for treacle, cesspool, home-spun, din, trash, talented, chore, mayhem, maybe, copious, and so on. And that is just a bare sampling.

The first colonists also brought with them many regional terms, little known outside their private corners of Britain, which prospered on American soil and have often since spread to the wider English-speaking world: drool, teeter, hub, swamp, squirt (as a term descriptive of a person), spool (for thread), to wilt, cater-cornered, skedaddle (a north British dialect word meaning to spill something noisy, such as a bag of coal), gumption, chump (an Essex word meaning a lump of wood and now preserved in the expression ‘off your chump’),8 scalawag, dander (as in to get one’s dander up), chitterlings, chipper, chisel in the sense of to cheat, and skulduggery. The last named has nothing to do with skulls, which is why it is spelled with one l. It comes from the Scottish sculdudrie, a word denoting fornication. Chitterlings, or chitlins, for the small intestines of the pig, was unknown outside Hampshire until nourished to wider glory in the New World.9 That it evolved in some quarters of America into kettlings suggests that the ch- may have been pronounced by at least some people with the hard k of chaos or chorus.

And of course they brought many words with them that have not survived in either America or Britain, to the lexical impoverishment of both: flight for a dusting of snow, fribble for a frivolous person, bossloper for a hermit, spong for a parcel of land, bantling for an infant, sooterkin for a sweetheart, gurnet for a protective sandbar, and the much-missed slobberchops for a messy eater.

Everywhere they turned in their new-found land, the early colonists were confronted with objects that they had never seen before, from the mosquito (at first spelled mosketoe or musketto) to the persimmon to poison ivy, or ‘poysoned weed’ as they called it. At first, no doubt overwhelmed by the wealth of unfamiliar life in their new Eden, they made no distinction between pumpkins and squashes or between the walnut and pecan trees. They misnamed plants and animals. Bay, laurel, beech, walnut, hemlock, the robin (actually a thrush), blackbird, hedgehog, lark, swallow and marsh hen all signify different species in America from those of England.10 The American rabbit is actually a hare. (That the first colonists couldn’t tell the difference offers some testimony to their incompetence in the wild.) Often they took the simplest route and gave the new creatures names imitative of the sounds they made – bob white, whippoorwill, katydid – and when that proved impractical they fell back on the useful, and eventually distinctively American, expedient of forming a new compound from two older words.

Colonial American English positively teems with such constructions: jointworm, glowworm, eggplant, canvasback, copperhead, rattlesnake, bluegrass, backtrack, bobcat, catfish, blue-jay, bullfrog, sapsucker, timberland, underbrush, cookbook, frostbite, hillside (at first sometimes called a sidehill), plus such later additions as tightwad, sidewalk, cheapskate, sharecropper, skyscraper, rubberneck, drugstore, barbershop, hangover, rubdown, blowout and others almost without number. These new terms had the virtues of directness and instant comprehensibility – useful qualities in a land whose populace included increasingly large numbers of non-native speakers – which their British counterparts often lacked. Frostbite is clearly more descriptive than chilblains, sidewalk than pavement, eggplant than aubergine, doghouse than kennel, bedspread than counterpane, whatever the English might say.

One creature that very much featured in the lives of the earliest colonists was the passenger pigeon. The name comes from an earlier sense of passenger as one that passes by, and passenger pigeons certainly did that in almost inconceivably vast numbers. One early observer estimated a passing flock as being a mile wide and 240 miles long. They literally darkened the sky. At the time of the Mayflower landing there were perhaps nine billion passenger pigeons in North America, more than twice the number of all the birds found on the continent today. With such numbers they were absurdly easy to hunt. One account from 1770 reported that a hunter brought down 125 with a single shot from a blunderbuss. Some people ate them, but most were fed to pigs. Millions more were slaughtered for the sport of it. By 1800 their numbers had been roughly halved and by 1900 they were all but gone. On 1 September 1914 the last one died at Cincinnati Zoo.

The first colonists were not, however, troubled by several other creatures that would one day plague the New World. One was the common house rat. It wouldn’t reach Europe for another century (emigrating there abruptly and in huge numbers from Siberia for reasons that have never been explained) and did not make its first recorded appearance in America until 1775, in Boston. (Such was its adaptability that, by the time of the 1849 gold rush, early arrivals to California found the house rat waiting for them. By the 1960s there were an estimated one hundred million house rats in America.) Many other now common animals, among them the house mouse and the common pigeon, were also yet to make their first trip across the ocean.

For certain species we know with some precision when they arrived, most notoriously with that airborne irritant the starling, which was brought to America by one Eugene Schieffelin, a wealthy German emigrant who had the odd, and in the case of starlings regrettable, idea that he should introduce to the American landscape all the birds mentioned in the writings of Shakespeare. Most of the species he introduced failed to prosper, but the forty pairs of starlings he released in New York’s Central Park in the spring of 1890, augmented by twenty more pairs the following spring, so thrived that within less than a century they had become the most abundant bird species in America, and one of its greatest pests. The common house sparrow (actually not a sparrow at all but an African weaverbird) was in similar fashion introduced to the New World in 1851 or 1852 by the president of the Natural History Society of Brooklyn, and the carp by the secretary of the Smithsonian Institution in the 1870s.11 That there were not greater ecological disasters from such well-meaning but often misguided introductions is a wonder for which we may all be grateful.

Partly from lack of daily contact with the British, partly from conditions peculiar to American life, and partly perhaps from whim, American English soon began wandering off in new directions. As early as 1682, Americans were calling folding money bills rather than notes. By 1751, bureau had lost its English meaning of a writing desk and come to mean a chest of drawers. Barn in Britain was and generally still is a storehouse for grain, but in America it took on the wider sense of being a general-purpose farm building. By 1780 avenue was being used to designate any wide street in America; in Britain it implied a line of trees – indeed, it still does to the extent that many British towns have streets called Avenue Road, which sounds comically redundant to American ears. Other words for which Americans gradually enlarged the meanings include apartment, pie, store, closet, pavement and block. Block in late eighteenth-century America described a group of buildings having a similar appearance – what the British call a terrace – then came to mean a collection of adjoining lots and finally, by 1823, was being used in its modern sense to designate an urban rectangle bounded by streets.12

But the handiest, if not always the simplest, way of filling voids in the American lexicon was to ask the local Indians what words they used. At the time of the first colonists there were perhaps fifty million Indians in the New World (though other estimates have put the figure as high as one hundred million and as low as eight million). Most lived in Mexico and the Andes. The whole of North America had perhaps no more than two million inhabitants. The Indians of North America are generally broken down into six geographic, rather than linguistic or cultural, families: those from the plains (among them the Blackfoot, Cheyenne and Pawnee), eastern woodlands (the Algonquian family and Iroquois confederacy), south-west (Apache, Navaho, Pueblo), northwest coast (Haida, Modoc, Tsimshian), plateau (Paiute, Nez Percé), and northern (Kutchin, Naskapi). Within these groups considerable variety was to be found. Among the plains Indians, the Omaha and Pawnee were settled farmers, while the Cheyenne and Comanches were nomadic hunters. There was also considerable movement: the Blackfoot and Cheyenne, for example, began as eastern seaboard Indians, members of the Algonquian family, before pushing west into the plains.

Despite, or perhaps because of, the relative paucity of inhabitants in North America, the variety of languages spoken on the continent was particularly rich, with as many as 500 altogether. Put another way, the Indians of North America accounted for only 5 per cent of the population of the New World, but perhaps as much as a quarter of its tongues. Many of these languages – Puyallup, Tupi, Assiniboin, Hidatsa, Bella Coola – were spoken by only a relative handful of people. Even among related tribes the linguistic chasm could be considerable. As the historian Charlton Laird has put it: ‘The known native languages of California alone show greater linguistic variety than all the known languages of the continent of Europe.’13

Almost all of the Indian terms taken directly into English by the first colonists come from the two eastern families: the Iroquois confederacy, whose members included the Mohawk, Cherokee, Oneida, Seneca, Delaware and Huron tribes, and the even larger Algonquian group, which included Algonquin, Arapaho, Cree, Delaware, Illinois, Kickapoo, Narragansett, Ojibwa, Penobscot, Pequot and Sac and Fox, among many others. But here, too, there was huge variability, so that to the Delaware Indians the river was the Susquehanna, while to the neighbouring Hurons it was the Kanastoge (or Conestoga).

The early colonists began borrowing words from the Indians almost from the moment of first contact. Moose and papoose were taken into English as early as 1603. Raccoon is first recorded in 1608, caribou and opossum in 1610, moccasin and tomahawk in 1612, hickory in 1618, powwow in 1624, wigwam in 1628.14 Altogether, the Indians provided some 150 terms to the early colonists. Another 150 came later, often after being filtered through intermediate sources. Toboggan, for instance, entered English by way of Canadian French. Hammock, maize and barbecue reached the continent via Spanish from the Caribbean.

Occasionally Indian terms could be adapted fairly simply. The Algonquian seganku became without too much difficulty skunk. Wuchak settled into English almost inevitably as woodchuck (despite the tongue-twister, no woodchuck ever chucked wood). Wampumpeag became wampum. The use of neck in the northern colonies was clearly influenced by the Algonquian naiack, meaning a point or corner, and from which comes the expression that neck of the woods. Similarly the preponderance of capes in New England is at least partly due to the existence of an Algonquian word, kepan, meaning ‘a closed-up passage’.15

Most Indian terms, however, were not so amenable to simple transliteration. Many had to be brusquely and repeatedly pummelled into shape, like a recalcitrant pillow, before any English speaker could feel comfortable with them. John Smith’s first attempt at transcribing the Algonquian word for a tribal leader came out as cawcawwassoughes. Realizing that this was not remotely satisfactory he modified it to a still somewhat hopeful coucorouse. It took a later generation to simplify it further to the form we know today: caucus.16 Raccoon was no less challenging. Smith tried raugroughcum and rahaugcum in the same volume, then later made it rarowcun, and subsequent chroniclers attempted many other forms – aracoune and rockoon, among them – before finally finding phonetic comfort with rackoone.17 Misickquatash evolved into sacatash and eventually succotash. Askutasquash became isquontersquash and finally squash. Pawcohiccora became pohickery and then hickory.

Tribal names, too, required modification. Cherokee was really Tsalaki. Algonquin emerged from Algoumequins. Irinakhoiw yielded Iroquois. Choctaw was variously rendered as Chaqueta, Shacktau and Choktah before settling into its modern form. Even the seemingly straightforward Mohawk has as many as 142 recorded spellings.*9

Occasionally the colonists gave up. For a time they referred to an edible cactus by its Indian name, metaquesunauk, but eventually abandoned the fight and called it a prickly pear.18 Success depended largely on the phonetic accessibility of the nearest contact tribe. Those who encountered the Ojibwa Indians found their dialect so deeply impenetrable that they couldn’t even agree on the tribe’s name. Some said Ojibwa, others Chippewa. By whatever name, the tribe employed consonant clusters of such a confounding density – mtik, pskikye, kchimkwa, to name but three19 – as to convince the new colonists to leave their tongue in peace.

Often, as might be expected, the colonists misunderstood the Indian terms and misapplied them. To the natives, pawcohiccora signified not the tree but the food made from its nuts. Pakan or paccan was an Algonquian word for any hard-shelled nut. The colonists made it pecan (after toying with such variants as pekaun and pecaun) and with uncharacteristic specificity reserved it for the produce of the tree known to science as the Carya illinoensis.

Despite the difficulties, the first colonists were perennially fascinated by the Indian tongues, partly no doubt because they were exotic, but also because they had a beauty that was irresistible. As William Penn wrote: ‘I know not a language spoken in Europe, that hath words of more sweetness or greatness, in accent or emphasis, than theirs.’20 And he was right. You have only to list a handful of Indian place names – Mississippi, Susquehanna, Rappahannock – to see that the Indians found a poetry in the American landscape that has all too often eluded those who displaced them.

If the early American colonists treated the Indians’ languages with respect, they did not always show such scruples with the Indians themselves. From the outset they often treated the natives badly, albeit sometimes unwittingly. One of the first acts of the Mayflower Pilgrims, as we have seen, was to plunder Indian graves. (One wonders how the Pilgrims would have felt had they found Indians picking through the graves in an English churchyard.) Confused and easily frightened, the early colonists often attacked friendly tribes, mistaking them for hostile ones. Even when they knew the tribes to be friendly, they sometimes took hostages in the decidedly perverted belief that this would keep them respectful.

When circumstances were deemed to warrant it, they did not hesitate to impose a quite shocking severity, as a note from soldiers to the governor of the Massachusetts Bay Colony during King Philip’s War reminds us: ‘This aforesaid Indian was ordered to be tourn to peeces by dogs, and she was so dealt with.’21 Indeed, early accounts of American encounters with Indians tell us as much about colonial violence as about seventeenth-century orthography. Here, for instance, is William Bradford describing a surprise attack on a Pequot village in his History of Plimouth Plantation. The victims, it may be noted, were mostly women and children: ‘Those that scaped the fire were slaine with the sword; some hewed to peeces, others rune throw with rapiers, so as they were quickly dispatchte ... It was a fearful sight to see them thus frying in the fyre ... and horrible was the styncke and sente there of, but the victory seemed a sweete sacrifice.’22 In 1675 in Virginia, John Washington, an ancestor of George, was involved in a not untypical incident in which the Indians were invited to settle a dispute by sending their leaders to a powwow. They sent five chiefs to parley and when things did not go to the European settlers’ satisfaction, the chiefs were taken away and killed. Even the most faithful Indians were treated as expendable. When John Smith was confronted by hostile savages in Virginia in 1608 his first action was to shield himself behind his native guide.

In the circumstances, it is little wonder that the Indians began to view their new rivals for the land with a certain suspicion and to withdraw their goodwill. This was a particular blow to the Virginia colonists – or ‘planters’, as they were somewhat hopefully called – who were as helpless at fending for themselves as the Mayflower Pilgrims would prove to be a decade later. In the winter of 1609-10, they underwent what came to be known as the ‘starving time’, during which brief period the number of Virginia colonists fell from five hundred to about sixty. When Sir Thomas Gates arrived to take over as the new governor the following spring, he found ‘the portes open, the gates from the hinges, the church ruined and unfrequented, empty howses (whose owners untimely death had taken newly from them) rent up and burnt, the living not able, as they pretended, to step into the woodes to gather other fire-wood; and, it is true, the Indian as fast killing without as the famine and pestilence within.’23

Fresh colonists were constantly dispatched from England, but between the perils of the Indians without and the famine and pestilence within, they perished almost as fast as they could be replaced. Between December 1606 and February 1625, Virginia received 7,289 immigrants and buried 6,040 of them. Most barely had time to settle in. Of the 3,500 immigrants who arrived in the three years 1619-21, 3,000 were dead at the end of the period. To go to Virginia was effectively to commit suicide.

For those who survived, life was a succession of terrors and discomforts, from hunger and homesickness to the dread possibility of being tomahawked in one’s bed. As the colonist Richard Frethorne wrote with a touch of forgivable histrionics: ‘I thought no head had been able to hold so much water as hath and doth dailie flow from mine eyes.’ He was dead within the year.24

At least he was spared the messy end that awaited many of those who survived him. On Good Friday, 1622, during a period of amity between the colonists and native Americans, the Indian chief Opechancanough sent delegations of his tribes to the newly planted Virginia settlements of Kecoughtan, Henricus (also called Henrico or Henricopolis) and Charles City and their neighbouring farms. It was presented as a goodwill visit – some of the Indians even ‘sate down at Breakfast’, as one appalled colonial wrote afterwards – but upon a given signal, the Indians seized whatever implements happened to come to hand and murdered every man, woman and child they could catch, 350 in all, or about a third of Virginia’s total population.25