This Is a Borzoi Book Published by Alfred A. Knopf

Translation copyright © 2010 by Martin Bojowald

All rights reserved. Published in the United States by Alfred A. Knopf, a division of Random House, Inc., New York, and in Canada by Random House of Canada Limited, Toronto.

Originally published in Germany as Zurück vor den Urknall: Die ganze Geschichte des Universums by S. Fischer Verlag GmbH, Frankfurt am Main, in 2009. Copyright © 2009 by S. Fischer Verlag GmbH, Frankfurt am Main.

Knopf, Borzoi Books, and the colophon are registered trademarks of Random House, Inc.

Library of Congress Cataloging-in-Publication

Bojowald, Martin.

Once before time : a whole story of the universe / by Martin Bojowald.

p. cm.

eISBN: 978-0-307-59425-9

1. Cosmology. 2. Beginning. 3. Space and time. I. Title.

QB981.B684 2010

523.1—dc22 2010015937

CONTENTS

PREFACE

INTRODUCTION

3. AN INTERLUDE ON THE ROLE OF MATHEMATICS

11. THE LIMITS OF SCIENCE AND THE NOBILITY OF NATURE

NOTES

A Note About the Author

PREFACE

… and if he does not do it solely for his own pleasure, he is not an artist at all.

—OSCAR WILDE, “The Soul of Man Under Socialism”

There are many reasons for a scientist to write a popular book, and many others not to. Research has primacy in science; this is where careers are forged and honors earned. Everything else wastes precious time—at least in the eyes of many a colleague who might one day be asked for an evaluation of one’s work.

But what good does all scientific progress do if it cannot be communicated? Do we really understand the world if we cannot explain it without the requirement of long, demanding studies? Learning a complex matter too often means that we merely accept its crucial ingredients and principles, getting used to standard methods of calculation. A true test of our understanding comes only when knowledge is to be explained to an open-minded layperson free of preconceived notions. In this sense quantum mechanics, to take one example, is far from being understood, despite its many successes and technological applications (as indicated, perhaps, by the third chapter of this book). Writing a popular book is thus an exercise of utmost relevance for a scientist’s own work.

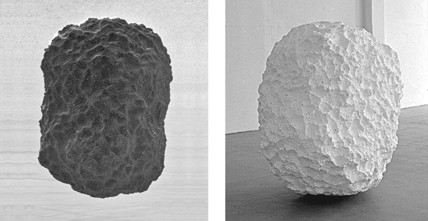

A popular book is, moreover, the ideal place to allude to the unity of science, literature, and art. In all these areas one tries to picture the world and to communicate it. This unity, of course, does not exist in reality but only as an ideal. But a book that aims to be widely accessible has a right to call upon this ideal. For this reason, I am grateful to those who helped me tap this unity. In the realm of art, I thank Gianni Caravaggio, some of whose works are represented here and who contributed in several discussions to my understanding. Thanks also go to Rüdiger Vaas, who, over many years, has helped me to understand and to communicate my understanding. He was among the first to find my scientific results worthy of wide dissemination. Many others, who cannot all be named here, have continually forced me to leave the fortified ivory tower of science.

This book would not have come into existence without the original suggestion of Jörg Bong from S. Fischer Verlag, and the subsequent support of Alexander Roesler. For reading parts of my manuscript and for their many useful suggestions, I am grateful to Gisele Ben-Dor, Maryam Shaeri, and Hannah Williams. I thank the Physics Department of Pennsylvania State University, which knows how to provide an exceptionally agreeable and stimulating atmosphere for its members. Early on, it offered me a free semester without even knowing about my writing plans! Penn State’s Institute for Gravitation and the Cosmos has afforded me unique opportunities for multidisciplinary discussions and research related to topics in this book. The expertise of many of my colleagues has, at least subliminally, found its way into my writing.

I thank Elisabeth and Stefan Bojowald for their critical reading of an early version of the book, and for some hints such as those about cyclic images in Egyptology. In conceptualizing some passages, I was inspired by the tranquillity of their retreat at the edge of the Eifel Range.

STATE COLLEGE, PENNSYLVANIA

APRIL 2008 / SEPTEMBER 2009

INTRODUCTION

The more abstract the truth you want to teach, the more you must seduce the senses to it.

—FRIEDRICH NIETZSCHE, Beyond Good and Evil

The goal of science has always been nothing less than as complete an understanding as possible of the laws of the world—nothing less than as unequivocal a description as possible of what we see and probe. Nothing less than coming as close as possible to what can be considered truth—in a nonsubjective way, the only way that counts.

Over the course of the last century, physical research in particular has progressed far to build a dominant theoretical edifice: quantum mechanics and general relativity. Understanding nature on the large and the small scale has become possible, from the whole universe in cosmology all the way down to single molecules, atoms, and even elementary particles by means of quantum theory. Precise descriptions and a deep understanding of a wide variety of phenomena have resulted, and they have been spectacularly confirmed by observations. Especially during the past decade, this hallmark of scientific success has also been achieved in the cosmology of the early universe.

Aside from its technological relevance in almost all areas of everyday life, an unmistakable sign of the quality of scientific progress is that for quite some time, some fields of scientific inquiry have touched upon questions traditionally held to be in the realm of philosophy (giving rise to the term “experimental metaphysics,” coined by the physicist and philosopher Abner Shimony). Since Aristotle, the aim of all theory has been to shed light on general phenomena and to understand their causes, in contrast to collecting disconnected bits of knowledge. Philosophy, by contrast, asks for the deepest origin or principles of all that exists. In this sense, the merging of some physical and philosophical issues can in fact be considered a distinguishing feature of scientific progress. When physics pushes ahead to such questions, it gains a position that allows it to contribute to discussions of far more general—and more far-reaching—interest. In the context of a combination of cosmology and quantum theory, the most important question is that of the emergence and the earliest phases of the universe, a question that has preoccupied humankind ever since the beginning of philosophy—and even before.

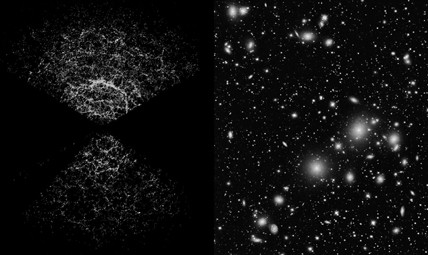

Other questions that have engaged thinkers over the centuries, and that remain of great significance, in quantum theory as well as general relativity, are the role of observers in the world and the question of what can be observed at all and what perhaps cannot. In cosmology, the entrance of physical research methods means the emergence of empirically testable scenarios for the whole world. The big bang model is founded on general relativity—as a description of space, time, and the driving gravitational force—as well as on quantum theory, which is indispensable to understanding properties of matter in the early universe. From all this, a breathtaking explanation results for the successive emergence of all matter—nuclei, atoms, and compound material objects all the way up to galaxies—out of an extremely hot initial phase.

At this rarefied place, the limits of the established worldview become visible. In spite of all their successes, general relativity together with quantum theory, as they are being used today, do not provide a complete description of the universe. When one solves the mathematical equations of general relativity in hopes of finding a model for the temporal evolution of the universe and its long-term history, one always reaches a point—the so-called big bang singularity—where the temperature of the universe was infinite. It is no surprise that the universe was very hot in the big bang phase; the expanding universe was, after all, much smaller and more densely compressed at those times than it is now, implying an enormous increase in temperature. But infinity as the result of a physical theory simply means that the theory has been stretched beyond its limits; its equations lose all meaning at such a place. In the case of the big bang model, one should not misunderstand the breakdown of equations as the prediction of a beginning of the world, even though it is often presented in this way. A point in time at which a mathematical equation results in an infinite value is not the beginning (nor the end) of time; it is rather a place where the theory shows its limitations. In spite of all its successes in other areas, the theory given by general relativity in combination with the quantum theory of matter remains to be extended.

The problem lies in the incompleteness of the revolution brought about by physical research during the last century. Quantum theory is used to describe matter in the universe, but not gravity or even space and time themselves. The latter are firmly in the domain of general relativity, largely independent of quantum physics. A successful combination of quantum theory and general relativity, even in the realms of space and time, would significantly extend known theories. Such a combination—quantum gravity—is particularly important for a description of the hot big bang phase of the universe, and it can explain, or so we hope, what happened at the infinity of the big bang singularity. Was this really the origin of the world and of time, or was there something before? And if there was something before the big bang, what was it?

Unfortunately, quantum gravity is extremely complicated. Even separately, general relativity and quantum theory are distinguished by a mathematical machinery unknown to preceding branches of physics. To make matters worse, these two areas require markedly different mathematical methods. A combination of the physical theories also requires a unification of the underlying mathematical principles, further magnifying their degree of difficulty. For this reason, no completely formulated quantum theory of gravity is available yet, in spite of many decades of research and vigorous efforts by a large number of scientists. What we have seen nonetheless, in particular in the last few years, are numerous promising indications for some properties of quantum gravity that can already be analyzed. As so often in research, the situation resembles the first stages of assembling a jigsaw puzzle, when one may already have an inkling of the emerging picture but could well be on the wrong track. Our current view indicates what a completion of physical theory can bring about: It allows us to see what possibly happened at and even before the big bang. We are granted a glimpse of the earliest times of our universe and can, for the first time, analyze how it may have arisen.

In this book, recent results of the theory as well as plans for satellite observations in the near future are explained, and it is shown how radically they could alter our worldview. Loop quantum gravity in particular, one of the variants currently put forward as a possible combination of general relativity and quantum theory, has provided first results concerning a nonsingular description of the big bang. In this framework, the universe existed before the big bang, and one can roughly estimate how it could have differed from what we see now. By its influence on later stages of the cosmic expansion, detectable by sensitive observations, this ancient prehistory of the universe can be explored. This book provides a firsthand report of this line of research, followed by a discussion of black holes, which also show fascinating effects. The final chapters touch upon further issues regarding a general understanding of the world, among them cosmogony, the riddle of time and its direction, and the grail of a “theory of everything.” In parallel with progress in our scientific understanding, the human trail of knowledge will itself, from a personal perspective, be illuminated by examples of modern research.

Although the required theory is highly mathematical, many calculations are by now intuitively understood. Intuition is not only useful for explorations in an unknown territory; it also allows broad explanations. As suggested by the Nietzsche passage at the beginning of this Introduction, the aims of this book may be realized without the use of mathematical formalism, except for a single crucial equation in chapter 4. While mathematics cannot be dispensed with if one wishes to discover and fully understand the issues underlying this book, an intuitive grasp is possible without too much effort. One may not always discern why things are supposed to be one way rather than another, but with some trust in the travel guide, connections can be grasped.

A warning is required nonetheless: Many areas of research in quantum gravity are still to be considered speculative. In contrast to the first half of the past century, the period when general relativity and quantum theory were developed, there are as yet no observations to corroborate the theoretical formulations of quantum gravity. What currently propels research is a motley collection of conceptual considerations regarding the incompleteness of general relativity, as well as mathematical consistency conditions in the formulation of equations. For instance, there is no guarantee whatsoever that a combination of certain mathematical methods, as they are used in general relativity and quantum physics, will allow solutions for a reliable description of the universe. The required mathematical tools are indeed so restrictive that formulating a theory with reasonable solutions would in itself represent immense success. Whether there may be other reasonable theories is a different, so far incompletely understood question. This shows the fragility of the pillars on which quantum gravity presently rests. But optimism does prevail, for many independent indications such as those in this book point in the same direction. What’s more, it is expected that cosmological observations made in the not too distant future could reveal phenomena predicted by quantum gravity. Such potential observations, which are also described in this book, would finally render quantum gravity an empirically tested theory.

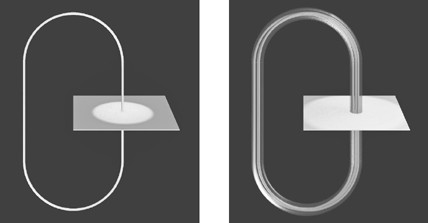

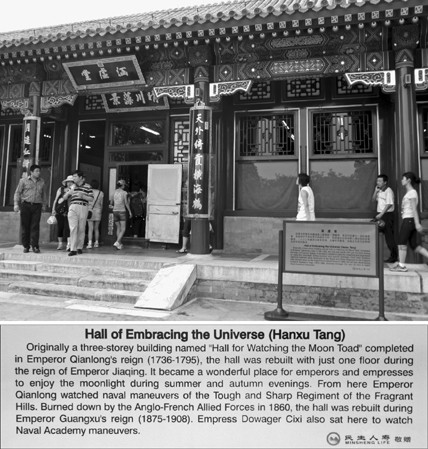

1. The philosopher’s stone melts away. What counts as certain knowledge may, upon further examination, turn out to be in need of correction. Evaluating the results or promises of science must always take into account its limits. Often, such limits are even more important than established results, for they show the way to new insights. (Sculpture by Gianni Caravaggio: Spreco di energia assoluta [Absolute waste of energy], 2006. Photograph: Robert Marossi.)

As of now, the status of quantum gravity resembles the earliest stages of claiming a new territory. Mathematics is the pioneer who opens up new areas beyond established frontiers. In our case, these frontiers are literally those of the universe and of time. Mathematics also serves to explore newly won lands; but in an empirical science such as physics, these lands can be claimed and secured only by observations. This remains to be accomplished for quantum gravity, which thus resembles territory still full of danger, where one can all too easily get lost or be swallowed up in swamps of speculation.

Such a land demands a humility toward nature that is not always shown. The language of physicists often sounds very determined (and sometimes even shows signs of hubris), but concerning a law of nature one must bear in mind, “It may be accurate, it may be faulty. If it is not accurate, the scientist, not nature, is to blame.”¹ A physicist constructs laws of nature, but is the one responsible if they are broken. Nobody is a physicist’s subject, least of all Nature herself. This is true in particular for theoretical sketches such as quantum gravity. In the meantime, before observations can show that Nature pays at least some respect to the laws imposed on her, Intuition will be the guide in this unknown land—on an adventurous trip back before the big bang.

1. GRAVITATION

MASS ATTRACTION

Should something from the window fall

(and if it just the smallest be)

how jumps the law of gravity

as mighty as wind from the sea

at every ball or blueberry

and takes them to the core of all.

—RAINER MARIA RILKE, The Book of Hours

Over large distances, the universe is governed by the gravitational force. In physics, the action of a force is the cause of motion or of any form of change. Complete rest is possible only if no net forces are acting. One scenario in which this can happen is the absence of any matter whatsoever—a state called vacuum. But matter quite obviously does exist, and just by its mass it causes gravitational forces on other masses. To realize motionless states of rest, at least approximately, all acting forces must compensate each other. In addition to gravity, there are the electric and magnetic forces to be considered, as well as two kinds of forces called weak and strong interactions, reigning in the realm of elementary particles.

While the electric force is easily compensated over large distances by the existence of positive and negative charges, mutually neutralizing each other, the forces that come into play in the interior of nuclei act only at extremely short range. What remains over long distances is gravity alone. It rules the general attraction of masses and energy distributions in space, and thus dictates the behavior of the universe itself. In contrast to electricity, there are no negative masses: Gravitational attraction cannot be fully compensated. Once massive objects such as stars or entire galaxies form, the resulting gravitational interaction dominates all that happens. The facets of this commonplace force, often ignored in recent research and yet—in cosmology and black holes—giving rise to a rich variety of exotic phenomena, are the topic of this book.

NEWTON’S LAW OF GRAVITY:

DISTANT ACTION AND A FATAL FLAW

The first general law of gravity was formulated by Isaac Newton. As is typical for many important steps in gravitational research, this theoretical development required combining well-known phenomena on Earth with a long list of intricate observations of objects in space, namely the moon and some planets, into a unified view. These observations were accomplished thanks to technologies that, for those times, were highly sophisticated; conversely, such research has spawned the development of new instruments. Combining fundamental questions and technological applications, in many areas of science and in gravitational research in particular, is a success story that continues into the present day.

Even before Newton, the initial untidy flood of data, as it was accumulated by astronomers such as Tycho Brahe, Johannes Kepler, and many others, was ordered into a model of the solar system. Since Nicolaus Copernicus and Kepler, this model has largely held the form we know today: Planets orbit around the sun along trajectories that, to a good approximation, can be considered as ellipses, or slightly oblong circles. But what is propelling the planets along their curved tracks? From common observations we know that a force is necessary to keep a body from moving stubbornly along a straight line. How can one describe or even explain the required force in the case of the planets?

Newton’s groundbreaking insight—the existence of a universal force of gravity causing not only the motion of all planets around the sun, and of the moon around the earth, but also the everyday phenomena of falling objects—is impressive. It is an excellent example of the origin of scientific explanation: not an answer to a “why” question in the sense of an anthropomorphic motivation, but a plethora of complicated phenomena, unrelated at first sight, reduced to a single mechanism: a law of nature. Newton’s mathematical description of the situation is very compact and highly efficient for predictions of new phenomena described by the same law. In the case of Newton’s law of gravity, the unfathomable power of theoretical prediction has repeatedly been employed—for instance, to find new planets via small deviations imposed by their gravitational pull on the trajectories of other planets, or in planning modern satellite missions.

Such success stories, in which an elegant mathematical description explains and predicts a multitude of phenomena, can be found throughout physics; they are indeed the landmarks of its progress. Reliving such insights is often so gratifying that scientists employ the term “beauty”—a pragmatic kind of beauty whose core, the mathematical formulation, can be seen only by the initiated, but which in its concrete successes can also be appreciated by outsiders.¹

Concretely, Newton’s law of gravity describes the attractive force between two bodies caused by their masses. The force increases proportionally with the amounts of the masses: The attraction between two heavy bodies is larger than that between two light ones. It is also inversely proportional to the squared distance between the bodies; it weakens considerably when the bodies are farther apart. In addition to these proportionalities, the exact quantitative strength of the force is determined by a mathematical parameter, now called Newton’s gravitational constant. In this value one can see the unification of earthly and heavenly phenomena. The gravitational constant can be derived from the tiny attraction of two masses on Earth, as was first accomplished in Henry Cavendish’s laboratory in 1797 and ’98; using the same value to calculate the force exerted by the sun on the planets shows exactly the right nudge required to hold the planets on their observed orbits.
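For readers who like to see numbers, the agreement between laboratory and sky can be checked with a few lines of arithmetic. The following Python sketch is only an illustration and not part of the original argument. It assumes rounded modern reference values for the gravitational constant, the masses of the sun and the earth, and their mean distance, and it idealizes Earth's orbit as a circle. The function names are made up for this example.

```python
import math

# Rounded modern reference values (assumptions for this illustration).
G = 6.674e-11            # Newton's gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30         # mass of the sun, kg
M_EARTH = 5.972e24       # mass of the earth, kg
R_ORBIT = 1.496e11       # mean earth-sun distance, m
YEAR = 365.25 * 86400.0  # orbital period of the earth, s


def gravitational_force(m1, m2, distance):
    """Newton's law: proportional to both masses, inverse to the squared distance."""
    return G * m1 * m2 / distance**2


def force_needed_for_circular_orbit(mass, radius, period):
    """Inward force required to hold a body on a circle of given radius and period."""
    speed = 2.0 * math.pi * radius / period
    return mass * speed**2 / radius


pull = gravitational_force(M_SUN, M_EARTH, R_ORBIT)
needed = force_needed_for_circular_orbit(M_EARTH, R_ORBIT, YEAR)
print(f"pull of the sun on Earth: {pull:.3e} N")
print(f"force needed for orbit:   {needed:.3e} N")
print(f"ratio: {pull / needed:.4f}")
```

With these inputs the two forces agree to well within one percent. The constant that enters the first function is the one that can be measured with small masses in a laboratory, while the second function uses only the observed size and duration of Earth's orbit.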

In contrast to its clear dependence on distance, Newton’s gravitational force is completely independent of time. Time independence sounds plausible, for a fundamental law of nature should, after all, be valid at all times in the same way. It is also consistent with the dominant understanding of space and time in Newton’s age and long thereafter, not to mention our everyday conceptions of them. Although one can easily change the positions and distances of objects in space, space itself appears unchangeable. Also, time seems to pass simply and uniformly, without being influenced by physical processes or technical instruments. Since gravity, according to Newton, acts instantaneously—independently of how far apart the masses are—the force need be formulated only for the case of two masses not at the same place, but at the same time.

Despite its plausible form and celebrated successes, Newton’s theory did have a flaw in its beauty. Like the beauty of the theory itself, this flaw, too, can be understood completely only with a sufficient amount of background knowledge. But even on the surface, it is a good example of the progress of theoretical physics. Newton himself had reportedly been uneasy about the “animalistic” tendencies of his law of gravitation: As an animal is attracted from far away by the expectation of food or companionship, a massive body appeared to move toward another one from a distance. This action at a distance, apparently without the more intuitive type of local interactions as realized for bodies pushing each other at close contact, was considered a serious conceptual weakness in spite of all concrete successes.

It is extremely difficult to correct this weak spot by constructing a theory only of local interactions that, of course, should otherwise remain compatible with the astronomical successes of Newton’s theory. To start with, one will have to consider the time dimension, too, for such a local interaction must take some time to propagate from one body to the other. As it turned out, a consistent reformulation is possible only by radically changing Newton’s (and our) intuitive conceptions of space and time. It requires far more sophisticated mathematical machinery and substantial effort, but this effort is rewarded by a theory of unprecedented beauty in the sense described above. All this required dedicated physical research and, not least, a strong mathematical grounding. The flaw in Newton’s theory was to be corrected only long after Newton, by Albert Einstein.

RELATIVITY OF SPACE AND TIME:

SPACE-TIME TRANSFORMERS

All this took a long time, or a short time: for, strictly speaking, no time on earth exists for such things.

—FRIEDRICH NIETZSCHE, Thus Spoke Zarathustra

In physics, as in all of science, it is important to distinguish between properties that depend on the person making an observation and properties independent of an observer. The mass of a particle refers only to the particle itself and will, if the particle remains unchanged, always be measured as the same value. Except for unavoidable experimental inaccuracies, it does not matter who is doing the measurement. A particle’s velocity, on the other hand, appears different, and sometimes drastically so, depending on whether an observer is moving with respect to the particle. An observer moving along with the particle at exactly the same speed would perceive the particle as being at rest, a situation familiar from two cars cruising side by side along a straight stretch of highway. To the driver of one car, the other one seems not to be moving. Any other observer would see the car (or the particle) move and attribute to it a nonzero velocity. Relativity in general terms is the mathematical analysis of such relationships; it ultimately tells us what we can learn about nature in a fully objective, observer-independent way.

For many centuries, space and time were thought of as observer-independent. Distances between points and durations of temporal periods appeared absolute, no matter how an observer would be positioned or move. But the first fault lines in this worldview opened up toward the end of the nineteenth century, eventually leading to special relativity. In this new view, space and time cannot be seen in separation but are intertwined, interchangeable, and observer-dependent. Like the velocity of a particle, the values measured for them depend on the motion of an observer. In abstract terms, they describe different dimensions of a single physical object: space-time; and only space-time concepts, but not space or time themselves, are independent of the person making a measurement.

How can this be demonstrated by physical means? To answer this question and to explain the role of dimensions, we first consider space alone. Space has different dimensions, namely three: we can move sideways, back and forth, and up or down. Here, one might ask why these should be considered as three dimensions of a single space, rather than three completely independent directions: width, depth, height. The answer is simple. Width, depth, and height are not absolute and independent properties; they can be converted into one another. We have only to turn around in space to make the height of a cube appear as its width, and in this sense height and width can be interchanged. This is not a transformation by a physical process, like a chemical reaction, but a much simpler one by means of changing our viewpoint. What we see as height, width, and depth depends on the place of an observer (or on conventions such as the use of Earth’s surface along which to measure width and depth); they cannot be considered properties of space itself as a physical object. For this reason, one speaks of three-dimensional space, not of the existence of three independent one-dimensional directions.

Time is similar, although its transformation is harder. By simply turning around one can influence only one’s view on space; the change of the angle of view (or, more precisely, the tangent of the angle as a mathematical function, which does not differ much from the angle when it is small) is expressed by the ratio of spatial extensions, such as the height before and after changing the viewpoint. By changing the angle, one can only transform spatial extensions into one another. If we want to transform space into time, we must vary a quantity given by a ratio of spatial and time extensions: a velocity. Traversing a certain distance in some period of time means that one moves at a velocity obtained as the ratio of that distance to the required time.

This consideration does, in fact, lead to the basic phenomenon of special relativity. If we are moving faster than a second observer while viewing a certain scene, spatial and time distances appear different to each of us. Just as changing the angle of view transforms spatial extensions, changing the velocity of an observer converts spatial distances into timelike ones and vice versa. Distinguishing space and time extensions is thus dependent on the viewpoint (or the “viewtrack,” if we are indeed moving); it cannot have a physical basis independent of observers’ properties. Instead of separate space and time, there is only one joint object: space-time. Special relativity is the theory of these changing viewtracks (also called inertial observers) in the absence of the gravitational force.
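The parallel between turning around in space and changing one's velocity can be made concrete in a short numerical sketch. The Python code below is an illustration under stated assumptions, not something taken from the text: it uses the standard textbook form of the transformation along a single direction, expresses time as the length `ct` so that both entries carry the same unit, and the function names `rotate` and `boost` are chosen only for this example.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def rotate(width, height, angle):
    """Change the angle of view: width and height mix into each other."""
    return (width * math.cos(angle) + height * math.sin(angle),
            -width * math.sin(angle) + height * math.cos(angle))


def boost(ct, x, velocity):
    """Change the observer's velocity: time and space extensions mix.

    Standard transformation of special relativity along one axis
    (an assumption of this sketch; the text does not spell it out).
    """
    beta = velocity / C
    gamma = 1.0 / math.sqrt(1.0 - beta**2)
    return (gamma * (ct - beta * x), gamma * (x - beta * ct))


# A spatial separation seen from two angles: the parts change,
# but the combination width^2 + height^2 does not.
w, h = 3.0, 4.0
w2, h2 = rotate(w, h, math.radians(30.0))
print(w**2 + h**2, w2**2 + h2**2)

# A space-time separation seen by two observers: the parts change,
# but the combination (ct)^2 - x^2 does not.
ct, x = 5.0, 3.0
ct2, x2 = boost(ct, x, 0.6 * C)
print(ct**2 - x**2, ct2**2 - x2**2)
```

In each printed pair the two numbers agree up to rounding. What individual observers call width and height, or space and time, differs from one to the next, while the combined quantity belongs to the object itself.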

These considerations are an illustration, certainly not a proof; not every ratio implies a transformation when it is changed. For instance, the birthrate of a country is the ratio of newborns to the total population, but a change in the birthrate does not mean that inhabitants are transformed into newborns. An important difference between the birthrate and the case of space and time is the role of observers: For space and time, changes are caused by observers taking different positions and states of motion; and since physical laws must be independent of the special private and personal properties of those making the observations, concepts distinguished only by viewpoints must be discarded. In special relativity, this “transformability” of space and time, forcing us to deny them separate meaning, has not only been substantiated mathematically; it has also been verified experimentally myriad times, especially in reactions of elementary particles. While the Newtonian concepts of a rigid space and an independent time would not agree with many measurements made in the last century, in a special relativistic view no inconsistencies arise.

Newton’s view was able to enjoy great success for such a long time because noticeably transforming space and time requires very large observer velocities. Unless measurements are extremely refined and precise, in order to see an effect, speeds must be close to the immense velocity of light: roughly 300,000 kilometers per second. In everyday life, this makes the transformability of space and time imperceptible.² For an observational verification, one needs either very high velocities or very precise time measurements in order to notice the tiny time changes at low velocities. Both methods have been developed in the past century.

Very precise time measurements are achieved by atomic clocks, making space-time transformations detectable even at the typical speeds of airplanes. (Since planes have to move at a certain height, additional effects arise due to a reduction of gravity acting on the clock farther away from the center of the earth. This general relativistic effect, depending on the gravitational force, is introduced below.)
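How tiny the effect is at airplane speeds can be estimated in a few lines. The Python sketch below is a rough illustration, not a description of any actual experiment: the cruising speed and flight duration are assumed round numbers, the helper name is hypothetical, and the gravitational contribution mentioned in the parenthesis above is deliberately left out.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def fractional_slowdown(speed):
    """Return gamma - 1, the fraction by which a moving clock runs slow
    (to leading order) as judged by an observer at rest.

    expm1 and log1p avoid the rounding loss that a direct
    1/sqrt(1 - beta^2) - 1 would suffer at everyday speeds.
    """
    beta_squared = (speed / C) ** 2
    return math.expm1(-0.5 * math.log1p(-beta_squared))


AIRLINER_SPEED = 250.0     # m/s, assumed cruising speed
FLIGHT_TIME = 10 * 3600.0  # s, assumed ten-hour flight

lag = fractional_slowdown(AIRLINER_SPEED) * FLIGHT_TIME
print(f"moving clock falls behind by about {lag * 1e9:.1f} nanoseconds")
```

Under these assumptions the lag comes out at roughly ten billionths of a second over the whole flight, far beyond what a wristwatch could show but within the reach of atomic clocks.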

At velocities close to that of light, space-time changes drastically: As an observer at rest would describe it, time is transformed almost completely into space, and thus passes ever more slowly. Once the speed of light is reached, which is possible only for massless objects such as light itself, all timelike distances vanish. Going beyond that speed limit is impossible, for all time has already been used up when we reach the speed of light. No signal can move faster than light. Delays in any transmission of information must always occur; they may be small, but they do become noticeable at large distances. (This maximum speed is that of light in a vacuum. In transparent media such as water, light usually moves more slowly than in a vacuum. There are thus signals in such media that propagate faster than light in the same medium, but not faster than light in a vacuum.)

High velocities can be probed, too, although not by violently accelerating a clock, but by employing fast clocks that nature provides. Earth is bombarded from outer space by highly energetic particles moving at nearly the speed of light.3 Most of them do not reach the ground, because they react with oxygen and nitrogen nuclei in the upper atmosphere and produce new particles, among them the muons. Muons are a heavy kind of electron and quite similar to electrons, except that they are unstable: A muon at rest decays after just a millionth of a second, producing an electron and two other stable particles called neutrinos. The decay time may be used as a unit of time telling us when a millionth of a second has passed at the speed of a muon. By the standards of modern technology, this “muon clock” is not very precise. But muons can be brought to high velocities much more easily than atomic clocks, and a free supply of them is showered down on Earth in a quickly moving state every day in the form of natural cosmic radiation.

Cosmic muons lead to an impressive confirmation of special relativity and its transformability of space and time. Even racing at such high velocities as they acquire in the upper atmosphere, the muons’ lifetime of a millionth of a second would not be nearly enough for them to reach the ground. And yet detectors do register many of these particles, even though they are supposed to have decayed along the way. The resolution of this problem is that a millionth of a second, within which a muon at rest would decay, seems much longer for a muon traveling at high speed and watched from the ground. Thanks to the high muon velocity, from the particles’ viewpoint enough space is transformed into time for them to reach the ground before their decay.
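The muon argument can be checked with a short calculation. The sketch below is only an illustration under stated assumptions: a muon speed of 99.8 percent of the speed of light, a production height of 15 kilometers, and a mean lifetime at rest of 2.2 millionths of a second (the text rounds this to a millionth). None of these specific values appear in the text, and the function names are hypothetical.

```python
import math

C = 299_792_458.0       # speed of light in m/s
MUON_LIFETIME = 2.2e-6  # mean lifetime of a muon at rest in s (assumed value)

def lorentz_factor(beta):
    """Time-dilation factor for a speed given as a fraction `beta` of c."""
    return 1.0 / math.sqrt(1.0 - beta ** 2)

def survival_fraction(beta, height_m, relativistic=True):
    """Fraction of muons that survive a descent of `height_m` meters."""
    travel_time = height_m / (beta * C)  # as measured from the ground
    gamma = lorentz_factor(beta) if relativistic else 1.0
    # Seen from the ground, the lifetime is stretched by the factor gamma.
    return math.exp(-travel_time / (gamma * MUON_LIFETIME))

beta = 0.998       # assumed muon speed as a fraction of c
height = 15_000.0  # assumed production height in meters

print(f"Lorentz factor:            {lorentz_factor(beta):.1f}")
print(f"Survival without dilation: {survival_fraction(beta, height, False):.1e}")
print(f"Survival with dilation:    {survival_fraction(beta, height):.2f}")
```

Under these assumptions practically no muon would arrive if its clock ran at the rest rate, whereas with time dilation roughly a quarter of them reach the ground.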

Measurements with atomic clocks or of muons were not available when Einstein developed his theory of special relativity. Rather, he derived his equations for the transformation of space and time from a deep consideration of the theory of light, introduced in 1861 by James Clerk Maxwell. (As a mathematical curiosity, the same transformation law, but without a physical interpretation, had already been found by Hendrik Antoon Lorentz.) The process of applying such principles independently of observations can be compared with Newton’s realization of his own theory’s incompleteness. Newton’s law of gravity was, at its inception and long thereafter, highly successful in the description of astronomical observations; it was centuries before the first minute and unexplained deviations from the law were observed. Even so, as mentioned above, Newton was not completely happy, for his law looked too animistic. What makes two masses attract each other even when they may be arbitrarily far apart? This flaw, already looked upon with suspicion by Newton, becomes acute in special relativity.

In Newton’s understanding of separate space and time, there is no problem in principle with his gravitational law; there may at best be an aesthetic one. In special relativity, however, the law flatly becomes inconsistent. Newton’s gravitational force depends on the distance between two bodies in space, but there is no quantity related to time. If one tries to combine this with a transformability of space and time, a strict application of the law would mean that the gravitational force had to depend on the state of motion, e.g., the velocity of a measurement apparatus: A change in the velocity must transform space into time, causing Newton’s law to be time-dependent. The smaller spatial distance would then be compensated by the larger time lapse in such a way that all observers, moving at different speeds, would calculate the correct force. But this requirement was not taken into account when Newton formulated his law; thus the need to extend Newton’s theory.

A similar situation is realized in the theory of electromagnetism. Coulomb’s law for the electrostatic attraction (or repulsion) of two charged bodies, so called after its formulator, Charles Augustin de Coulomb, is very similar to Newton’s for the gravitational attraction of two masses. One simply has to replace masses with charges and Newton’s gravitational constant with an analogous one to quantify the electric force. (Moreover, one has to change the sign of the force, indicating its direction: Two charges of equal sign repel each other, while two—always positive—masses attract each other.) In particular, the dependence on the distance is the same in both cases, and in none of these formulas does a distance in time appear. For Coulomb’s law, an extension had already been found by Maxwell for different reasons, based on the relationship between electric and magnetic phenomena. This extension turned out to be consistent with the transformability of space and time; and it had been found well before Einstein, for whom it played a major role in his considerations leading to special relativity. Maxwell, however, did not recognize the connection between his extension of Coulomb’s law and the changes of space and time.

In 1905, when Einstein developed special relativity, uncovering the observer-dependent form of space and time, no reformulation of Newton’s law existed. The necessary extension of the theory, addressed afterward by Einstein himself, proved to be much more complicated than Maxwell’s generalization of Coulomb’s law. Another decade had to pass before Einstein came up with its final form—general relativity—in 1915. The payoff was not only a gravitational law reconciled with the principles of special relativity, but also a further radical change in our understanding of space and time as well as a mathematical foundation for cosmology. In the remainder of this chapter we will primarily be occupied with the structure of space and time, and much later come back to its role in the behavior of the whole universe, in the chapter on cosmogony (chapter 8).

GENERAL RELATIVITY: SPACE AND TIME UNBOUND

Effortlessly swings he the world, by his knowing and willing alone.

—XENOPHANES OF KOLOPHON, Fragment

Special relativity does not include the gravitational force; in this sense it is not general enough. With general relativity a gravitational law is available that is consistent with special relativity. But it is not only an extended, more contrived form of Newton’s law; the theory of general relativity constitutes nothing less than the final promotion of space-time to an object of physical meaning. What is considered space and what is considered time is not only changeable, as in special relativity, depending on the viewpoint of an observer; it is itself dynamic and subject to physical processes: The form of space-time is determined by the matter it contains. Space-time is not a straight, flat, four-dimensional hypercube extending unchanged all the way to infinity. Like a piece of old rubber, it writhes under its own inner tensions into a curved structure. The inner tension of space-time is the gravitational force.

Just as velocities have to be very large to bring out clearly the effects of special relativity, which change space and time merely through a different viewpoint, so the influence of gravity on space-time is usually weak. By currently available means it is impossible to exploit this technologically (even though we sometimes speculate about the construction of wormholes, warp drives, or mini–black holes). In astrophysics or cosmology, however, there are often objects so heavy that a precise description must take into account not only their matter content but also properties of space and time themselves. This has led to many tests of general relativity along with, as will be detailed later, new worldviews in cosmology.

In 1915, Einstein did not have such observations at his disposal, just as his earlier formulation of special relativity was driven by thought rather than experiments; his constructions were based solely, but solidly, on the possibility of a mathematically consistent realization of his overarching principles. The result is a theory whose elegance remains unsurpassed in physics. Drawing on general principles and a geometrical form of mathematics, which through a long and noble pedigree can be traced back to its eminent beginnings in ancient Greece—in the case of geometry, to Plato and Euclid—one must almost inevitably arrive at a unique form of equations describing the entire cosmos.

Einstein had to struggle for a long time before he understood the right principles and the required mathematics, but his work was eventually crowned with immense success. Not only did the theory satisfy the highest demands of mathematics, a field in which it continues to provide important stimulus for further research, but later it was also able to explain many observations that Newton’s theory could not.

Such long-lasting implications surely justify the great interest in Einstein’s work; but in the last decades, alas, this success has often turned into a curse. In wide circles of physicists, the prevailing opinion often seems to be that general relativity is already completely understood and experimentally fully verified. Sometimes such a view is even used to justify cutting back research, and with it jobs, in this area. A complete verification of a theory is in any case never possible, and for this reason alone we should never forgo new experiments that might provide independent comparisons of theory and observations—especially with such an important and fundamental idea as general relativity. The set of experiments testing a theory can, at any given time, cover only a limited range of phenomena. An experimentally tested theory may be successful to a certain degree, but we can never be certain that it correctly describes all processes to which it can in principle be applied. Just as Newton’s theory was consistent with observations for a long time, until it was recognized as a special case of general relativity with a limited range of validity, general relativity, too, could turn out to be a limiting case of an unknown theory yet to be found. Even on a purely theoretical basis, relativity remains incompletely understood; there are many unanswered questions, of direct importance in particular for cosmology, and thus an acute need for research remains. There is indeed mounting evidence that general relativity itself needs to be extended.

Most physical theories are established through a long and tedious process starting from a creative idea, or in other cases from an observation not explicable by available knowledge. An idea may be followed up on because it might appear attractive from an aesthetic or mathematical perspective; a new, unexplained experimental result might force us to change current theories so that they agree with the new observation. Such a process can go on for decades, and it keeps scores of physicists busy—theoretical as well as experimental ones. Many currently hot theories, such as those of particle physics or quantum gravity, are still subjected to this process. The development of quantum mechanics also followed this course for a long time, until it was cast in its currently accepted form. (Even here, many foundational questions remain open; from the perspective of physical applications, however, quantum mechanics can be said to be understood.) The end result, as it enters textbooks later on, is often hardly recognizable compared with the early formulations; many a historical contribution has turned out to be unimportant, too complicated, or just plain wrong. For theories still in development, it is not even clear whether they will ever become a solid part of our worldview; entire branches of physics can come to a dead end, even though research always provides lessons that then become important elsewhere.

The situation was completely different when Einstein devised general relativity. Einstein alone, supported only by a few friends such as Marcel Grossmann and in a certain competition with the mathematician David Hilbert, provided the decisive work. Not all contributions followed a direct step-by-step route, and some of the published articles did turn out to be fruitless. But in a relatively short amount of time he completed his work, which soon proved successful in confrontations with observations. One may easily get the impression that Einstein had immediately created his theory in perfect form, without the need for a lengthy series of studies and improvements. Certainly, this impression can explain why even some physicists no longer consider general relativity worthy of new research.4

In reality, however, the picture is different. Only the simplest solutions of general relativity are understood, which, fortunately, suffice for many questions in physics; even the simplest and most highly symmetric solutions afford impressive insights into cosmology and astrophysical objects such as black holes. But if one tries to go only one step in complexity beyond such solutions, one encounters immense difficulties owing to the complicated form of the theory. Its equations are of a type allowing hardly any standard solution procedures to be applied. Every situation has to be analyzed anew, and only in a few lucky cases can exact solutions be found. Using computers sometimes helps, but even then the equations resist easy analysis. For these reasons, many mathematicians are interested in several issues of general relativity, and time and again they have contributed to our understanding of it. Open questions also exist, such as that of predictability (see chapter 6), that have a bearing on the foundation of physics as a whole.

A numerical analysis of Einstein’s equations—often the last hope when direct mathematical solutions turn out to be too complicated—is extremely difficult. Computational research was begun in the 1970s and received considerable support in the 1990s. Collisions of heavy stars or black holes were of particular interest because they were expected to be strong sources of an entirely new kind of signal: gravitational waves. General relativity predicts that space-time itself can be excited to vibrations, periodic ripples that then propagate in the form of waves just like those on the sea. We have strong hopes of seeing these in coming years with sensitive detectors; such developments would not only further test general relativity but also open up a new branch of astronomy. We could start to explore the cosmos not only by light or other forms of electromagnetic radiation, but also with the help of gravitational waves. It is as if we would be able not only to glance into the sky, but also to listen to it. A new sense would be opened, enabling and ennobling unprecedented experiences and insights.

To detect gravitational waves, like any new signal, one has to know what to look for: One must know the intensity of a gravitational wave as it changes in time while traveling to us, much like a wave through water, starting from its creation in a collision. Unfortunately, the mathematical equations are too complicated for a direct solution, and even computers have been of little use; frustratingly, the programs available crashed before they could show interesting results—it was like having to type a long text in a program that would quit after entering every single word. Only after intensive activity over many years, performed in several groups (whose total number is still small compared to collaborations in particle or condensed matter physics), did a breakthrough recently become possible. As first shown in the work of Frans Pretorius in 2005, stable computer programs can now be developed that are able to provide valuable insights into heavy object collision results. Just in time, the construction of gravitational wave detectors such as LIGO, a set of detectors in the states of Louisiana and Washington, GEO600 in Germany, Virgo in Italy, and TAMA in Japan is rapidly under way; the dream of gravitational wave astronomy may soon become a reality. None of this would be possible without the foundation of general relativity, as buttressed ever more firmly by continuing research.

But back to the historical developments. Einstein was certainly not working completely independently of observations, for he sought to extend Newton’s astronomically tested gravitational law. Grounding in established laws is important for any kind of progress in physics. But Einstein had little further experimental guidance as to how Newton’s theory was to be extended. There were merely tiny observed deviations in some planetary trajectories, in particular in Mercury’s orbit, which, as noticed first by Urbain Le Verrier in 1855, seemed to shift compared to Newtonian calculations by the small amount of 43 arc seconds (about one one-hundredth of a degree) per century. The influence of Venus, the planet closest to Mercury, was already factored in, as were small perturbations such as possible irregularities in the shape of the sun, without managing to reconcile the observations with theoretical considerations. Only Einstein was able to explain the shifted trajectory in a natural way by using his new equations of motion in general relativity.
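The size of Mercury's shift can be reproduced from the standard general relativistic result for the advance of a planet's closest approach to the sun per orbit, 6πGM / (c²a(1 − e²)), a formula that is not derived in this book. The Python sketch below evaluates it. The orbital elements and the solar constant are assumed reference values, and the function names are purely illustrative.

```python
import math

C = 299_792_458.0          # speed of light, m/s
GM_SUN = 1.32712440018e20  # gravitational constant times solar mass, m^3/s^2

# Orbital elements of Mercury (assumed reference values, not from the text).
SEMI_MAJOR_AXIS = 5.7909e10  # m
ECCENTRICITY = 0.2056
ORBITAL_PERIOD_DAYS = 87.969

def shift_per_orbit(gm, a, e):
    """Relativistic advance of the perihelion per orbit, in radians."""
    return 6.0 * math.pi * gm / (C ** 2 * a * (1.0 - e ** 2))

def shift_arcsec_per_century(gm, a, e, period_days):
    """Accumulated advance over 100 years of 365.25 days, in arc seconds."""
    orbits = 36_525.0 / period_days
    radians = orbits * shift_per_orbit(gm, a, e)
    return math.degrees(radians) * 3600.0

mercury = shift_arcsec_per_century(
    GM_SUN, SEMI_MAJOR_AXIS, ECCENTRICITY, ORBITAL_PERIOD_DAYS
)
print(f"Mercury: {mercury:.1f} arc seconds per century")
```

With these inputs the result comes out close to the 43 arc seconds per century mentioned above, that is, 43/3600 or roughly one one-hundredth of a degree.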

Fortunately, new data soon arrived that were also incompatible with Newton’s law but that had already been predicted correctly by Einstein. These were tiny shifts, or bending, experienced by starlight in close passage around the sun. Measured by Arthur Eddington during a total solar eclipse in 1919, these shifts led to the first triumphant verification of general relativity. (By now, more precise measurements of this type have been performed using radio waves emitted by quasars, as done for the first time by Edward Fomalont and Richard Sramek in 1976.) If deviations between Einstein’s predictions and those observations had occurred, Einstein’s theory would have been long forgotten, despite his quip “If nature does not coincide with theory, it is all the worse for nature” (“Wenn die Natur nicht mit der Theorie übereinstimmt, so ist dies um so schlimmer für die Natur”).

In 1960, Robert Pound and Glen Rebka performed the first test of general relativity in an experiment on earth, and this test, too, was passed with highest marks. Here, the transformation of time at different altitudes, implying different positions in space-time, was measured. Farther away from the center of the earth, the gravitational force is weaker, which geometrically implies, as we will soon see, a changed form of space-time. Time progresses differently at higher altitudes, becoming faster than at lower ones. This speed-up is normally not noticeable, but it can be detected by sensitive measurements. To that end, Pound and Rebka exploited the Mössbauer effect, endowing some crystals with very finely tuned frequencies for the emission and absorption of light. Matter such as an atom can usually emit and absorb light near certain frequencies in the so-called spectrum, as it is used in fluorescent lights or lasers. The reason for the select set of frequencies is the quantum nature of matter (the topic of the next chapter). Since single atoms or molecules, on which such measurements would be performed, move in a gas, emission and absorption processes occur in different states of motion; the atoms, after all, move due to heat. Emission and absorption take place at different velocities; and according to special relativity, the progress of time, thus the frequency as the number of oscillations per unit of time, depends on the state of motion. Therefore, light is emitted and absorbed not at fixed and precise frequencies, but in frequency intervals of finite width.

In bodies subject to the Mössbauer effect, emission and absorption happen not at single atoms but at the entire crystal. As a whole, the crystal moves less than atoms in a gas. Accordingly, emission or absorption frequencies are much more precise. Special relativity no longer implies deviations of frequencies; but when a light-emitting crystal and an absorbing one of the same type are positioned at different altitudes, general relativity comes into play. Time progresses differently for the emitting crystal than it does for the absorbing one, causing a frequency mismatch in the light that reaches the absorbing crystal. A great enough mismatch prevents the light from being absorbed, and this is exactly what one can detect without even using large differences in altitude: The height of a building of several stories is sufficient.
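How small the mismatch is can be estimated with the weak-field approximation, in which the fractional difference in clock rate (and thus in frequency) between two heights is about g·h/c². The sketch below is a rough illustration only: the surface gravity of 9.81 m/s² and the tower height of 22.5 meters are assumed values, and the function name is hypothetical.

```python
C = 299_792_458.0  # speed of light, m/s
G_SURFACE = 9.81   # gravitational acceleration at Earth's surface, m/s^2 (assumed)

def fractional_rate_difference(height_m):
    """Approximate fractional speed-up of time at `height_m` meters above
    a reference level, valid for heights small compared with Earth's radius."""
    return G_SURFACE * height_m / C ** 2

tower = fractional_rate_difference(22.5)       # assumed height of a tall building
ten_meters = fractional_rate_difference(10.0)  # a clock raised by ten meters

print(f"22.5 m: {tower:.2e}")
print(f"10 m:   {ten_meters:.2e}")
```

Under these assumptions the effect is a few parts in a thousand trillion, which shows why frequencies as sharply defined as those of the Mössbauer effect are needed to see it.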

The same relativistic phenomenon, using not the Mössbauer effect but the precision of atomic clocks, was observed in 1971 by J. C. Hafele and Richard Keating through sensitive comparisons of the progress of time aboard airplanes. In this case, it is special relativity that is important owing to flight velocity, as well as general relativity owing to altitude, as the airplanes changed positions along the gravitational force. Even so, the importance of general relativity was not always recognized even long after this successful experiment. On June 23, 1977, the satellite NTS-2 was launched, the first to carry an atomic clock for experimental purposes. The atomic clock was constructed so as to correct for the relativistic changes in the flow of time. But the developers of the satellite were not fully convinced of the need for corrections for general relativity; instead, the clock was equipped with a gadget to shift the clock rate to the correct value if necessary. After about twenty days in space, the signals indeed showed a deviation in the clock rate compared to clocks on Earth, exactly as predicted by general relativity. In this case, fortunately, the mistake could be corrected by switching on the frequency shift.

Perhaps the most impressive confirmation of general relativity was achieved through observations of double pulsars. These are systems of two stars closely orbiting around each other, one of which (the pulsar) emits radiation at regular intervals. The reason for the emission is usually a rapidly rotating neutron star that, like a lighthouse, emits signals into space and to us. Depending on the position of the pulsar in the double system, signals are delayed at different rates, for they must traverse different routes to reach us. The orbit and possible changes of the system’s radius can thus be determined very precisely. In particular, general relativity predicts that gravitational waves are emitted during the orbiting process, causing the system to lose energy. The loss of energy implies that the two stars approach each other, which should be detectable by precisely measuring the orbit. The loss would be largest when the stars are closest. Each of them then sits more deeply in the gravitational field of its partner and general relativistic effects are stronger.

In 1974, Joseph Taylor and Russell Hulse identified a very close double pulsar consisting of two 20-kilometer-radius neutron stars orbiting around each other in just one-third of a day.5 Their closest distance from each other is a mere 700,000 kilometers, roughly the radius of the sun! This is an ideal test system for slight deviations in the orbit as they are predicted by general relativity, and indeed, observations still being made up to the present day agree exactly with the predictions. (This system is, by the way, also subject to an additional shift in the orbit just like Mercury’s, which does not involve a change of the radius as the energy loss due to gravitational waves does. Rather, one can visualize it as a slowly rotating oval whose shape shows the planet’s orbit and its shift. In the double pulsar, the angular shift is four degrees per year—far larger than Mercury’s—and can be used to estimate the neutron stars’ masses.) Since then, many more close double pulsars with a wide range of orbital properties have been discovered, allowing a large variety of observational tests.

One of the most recent experiments is Gravity Probe B—a satellite launched on April 20, 2004, to collect data for sixteen months in orbit. The idea for this mission was first conceived in 1959, but developing the required technology took a long and tedious road even under the skillful direction of Francis Everitt. The effects aimed at—namely, a “yanking” exerted on space-time in the vicinity of the rotating Earth—demand extremely precise gyroscopes. To avoid perturbations by too uneven a shape, which would prevent any measurement of such sensitive effects, the gyros had to contain the most perfect spheres ever constructed. Even the whole universe can offer few rivals; only some very dense neutron stars are smoother spheres. If one of the spheres were enlarged to the size of the earth, its highest mountain would be only twelve feet high. First results were announced in early 2007, and they again confirmed general relativity.

CURVED SPACE-TIME: A STAGE SHAKING UNDER THE WEIGHT OF THE ACTORS

Ay!

There are times when the great universe

Like cloth in some unskilful dyer’s vat

Shrivels into a hand’s-breadth, and perchance

That time is now! Well! Let that time be now.

—OSCAR WILDE, A Florentine Tragedy

In general relativity, the form of space-time is determined by the matter it contains. Here the gravitational force finds its very origin, intimately connected with the structure of space and time in a way not realized for any of the other known forces in physics. Mathematically, all this is described by means of a curved space-time, a space-time whose degree of transformation between space and time, in the relativistic sense, depends not only on an observer’s motion but also on the position in space and time. By this dependence on the observer’s position, the concepts of special relativity are generalized. The theory is no longer constrained to what (inertial) observers moving at different but constant velocities along straight lines see. This assumption was a simplification employed in special relativity to understand the effects of different velocities, but it is not realistic: When we make observations, we are moving along complicated trajectories in space and time. We may be standing more or less still in a lab, but we are standing on the earth, which is rotating and orbiting the sun. The sun is moving, too, and so is the Milky Way. A general theory must be able to describe observers moving along arbitrary curves, possibly accelerating when forces are acting on them. General relativity does so by allowing for position-dependent transformations of space and time, thereby endowing space-time with a curved form.

The prime example of a curved space is the two-dimensional surface of a ball, or a sphere. It is a curved surface, and also closed in on itself, although the latter property is not shared by all curved spaces. What is illustrated by the sphere is the fact that lines on its surface must be curved, as seen from the surrounding space, in order to stay on the surface. Every straight line in space starting on its surface would immediately leave it. This behavior can be seen as a general consequence of curvature, even though abstract curved spaces do not need a surrounding space such as the three-dimensional one around the sphere. Space-time itself, for instance, is four-dimensional and would require an even higher dimensional ambient space. All consequences of curvature can mathematically be described without referring to such surrounding spaces—a convenient fact crucially exploited by Einstein in his formulation of general relativity. The relevant branch of mathematics, differential geometry, was founded on work by Bernhard Riemann in the nineteenth century.

Returning to the example of the sphere in its ambient three-dimensional space, we can see a further important consequence of curvature. When we move and change our position on a sphere, as we regularly do on the earth’s surface, we are, as seen from the ambient space, forced to rotate. We usually do not notice this because, for one thing, Earth is very large, and moreover, we can rarely take this view from outer space. But the forced rotation can easily be visualized on a globe: The head of a person in Europe points in a different direction in space than does that of a person in America, even if both people are standing up ramrod-straight.6 No such rotation would occur if one were moving on a planar surface such as the level floor of a room; it must be a consequence of curvature.

Space-time is curved by the matter it contains and should show effects comparable to those on a sphere. This is more difficult to visualize, for we now have a four-dimensional situation involving time as well. Our earlier analogy shows the most important consequence, which is directly related to the gravitational force: From special relativity we know that the transformability of space and time is connected with changes of velocities, just as the transformability of the three spatial dimensions is related to changes of angles. Just as a curved surface in space enforces a rotation when moving, moving in curved space-time should imply a change of velocities. Changes of velocities, or accelerations, are always caused by forces. The curvature of space-time thus implies the action of a force, which according to general relativity is nothing but gravity.

With this astonishing trick, Einstein was able to extend Newton’s theory and erase its flaw. In Einstein’s gravity there are no spooky interactions between distant objects in a direct way. By incorporating space and time—not as a rigid and given stage as Newton had assumed, but as a changing object with an inherent structure subject to physical laws—this action at a distance is forever banned from physics. Masses cause their directly surrounding space-time region to curve, upon which other masses experience a gravitational force as a result of the curvature. That this does not constitute action at a distance can be seen when the first mass is moving, causing the gravitational force on other masses to change. As shown by general relativity, this change does not occur instantaneously: The change in curvature has to propagate sufficiently far in space-time before it can reach distant masses. Physical interactions happen only locally, and what haunted Newton is resolved; a consistent theoretical underpinning has been gained. But perhaps the most impressive consequence of curved space-time can be experienced in cosmology, in which general relativity determines the temporal evolution of the universe itself.

LIMITS OF SPACE AND TIME: THE END OF A THEORY

Beware of asking for more time: no ill fate ever grants it.

—MIRABEAU

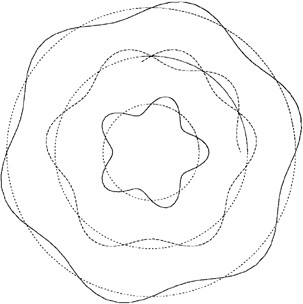

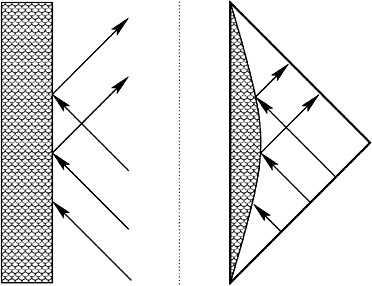

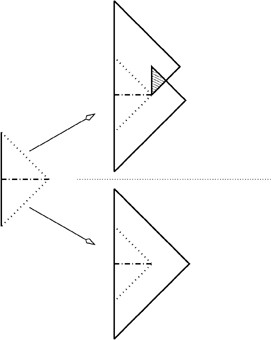

Promoting space-time from a mere stage, serving only to support the change of matter, to a physical object in the theory of relativity is a revolution (figure 2). The complicated interplay—matter curves space, and its own motion is influenced by curvature—leads to a mathematical description of unprecedented difficulty, keeping not only physicists but also mathematicians busy up to the present day. Fortunately, the theory is now sufficiently well understood to reveal many fundamental implications for our understanding of physical behavior, in particular that of the universe. The role of space-time, now seen as a physical object, is often compared to a novel in which one of the characters is the book itself. Consequences of such a novel would surely be surprising, though hard to imagine. Independently of imagination, consequences of the physical role of space-time can reliably be computed by means of the underlying mathematics. As we will see, this has even more ominous consequences in general relativity than are suggested by the example of the novel.

Before entering this dark chapter of so-called singularities, we will once more have a look at the relationship between gravity and the transformability of space and time. The division between space and time is influenced not only by velocity, but also by gravity as caused by matter. For instance, time proceeds faster at higher altitudes, where the distance to the center of the earth is larger.7 As before, such changes are usually imperceptibly small, but they do by now have technological relevance. The most precise atomic clocks experience a significant change in their rate when they are raised by just ten meters! As already mentioned, these effects, in addition to those due to velocities, must be taken into account if relativity is to be tested by means of atomic clocks on airplanes. (In this case, the gravitational effect is even larger than the velocity effect, and both are opposite to each other: At high velocities, clocks should be slower, but in an actual experiment with the typical altitudes and velocities of airplanes they are faster as a result of gravity.)

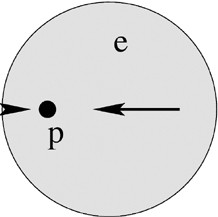

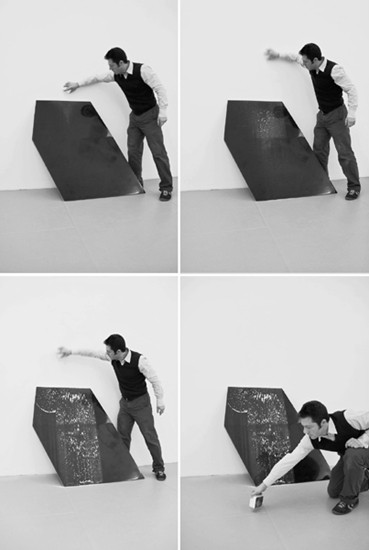

2. Objects move along trajectories in space-time, but space-time itself is changing. (Orbita [Orbit], 2007. Sculpture and photograph: Gianni Caravaggio.)

The global positioning system (GPS) is an example of an applied technology with crucial general relativistic effects. This is a system of twenty-four satellites, all carrying atomic clocks. They are distributed around the earth so that every place is almost always in reach of at least four satellites above the horizon. Each satellite sends out regular signals encoding its position and the time its clock measures. Comparing the signals of several satellites at a given point on the earth’s surface allows one to compute one’s position very precisely, usually with an accuracy of five to ten meters!8

If one were to ignore relativity while computing the travel time of GPS signals, the resulting position measurements would be useless. After just two minutes of uncorrected clock time in orbit, clear deviations would be seen in the measured positions on Earth; waiting a single day would make measurements deviate from the correct values by up to ten kilometers. The role of general relativity in this system is of enormous importance, but it is very complicated and for a long time remained incompletely understood. The first GPS satellites were launched in 1978, but in 1979, 1985, and finally 1995, the year of the system’s official inauguration, entire conferences were still being organized with the aim of understanding the role of general relativity in the GPS. Even so, an erroneous technical report was apparently published in 1996. Examples of difficulties are the role of synchronization and of the comparison of clocks on different satellites, and even the possible influence of the sun’s gravity, whose strength would differ slightly on the day and night sides of Earth with their different distances from the sun. The latter turned out not to be important, given the current precision of clocks. (Which, to be sure, are very precise: They are rubidium clocks, whose measured times after ten days would differ by just half a nanosecond, that is, by half a billionth of a second.) But the changing gravitational field around Earth, and in particular its deviations from a perfect spherical shape caused by the oblate form of the rotating planet, are of great importance to the clocks’ precision. One can also ask whether special relativistic or general relativistic effects are dominant. At the altitude of GPS, about 20,000 kilometers, the clear winner is general relativity.
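The size of the two competing effects can be estimated in a few lines. The following Python sketch is a back-of-the-envelope calculation, not the procedure actually used in the GPS: it assumes a circular orbit with a radius of about 26,560 kilometers (an altitude of roughly 20,200 kilometers), a spherical non-rotating Earth, and the usual weak-field formulas. All names and constants are illustrative.

```python
# Back-of-the-envelope estimate of relativistic clock offsets for a GPS
# satellite, compared with a clock on the ground.
# Assumptions (not from the text): circular orbit, spherical non-rotating
# Earth, weak-field and low-velocity approximations.

SPEED_OF_LIGHT = 299_792_458.0   # m/s
GM_EARTH = 3.986004418e14        # m^3/s^2, gravitational parameter of Earth
EARTH_RADIUS = 6.371e6           # m, mean radius
ORBIT_RADIUS = 2.656e7           # m, assumed GPS orbital radius
SECONDS_PER_DAY = 86_400.0


def clock_offsets_per_day():
    """Return (gravitational, velocity) offsets in seconds per day."""
    # General relativity: the satellite sits higher in Earth's gravitational
    # potential, so its clock runs faster than one on the ground.
    gravitational = GM_EARTH / SPEED_OF_LIGHT**2 * (
        1.0 / EARTH_RADIUS - 1.0 / ORBIT_RADIUS
    )
    # Special relativity: the satellite moves, so its clock runs slower.
    # For a circular orbit, v^2 = GM / r.
    speed_squared = GM_EARTH / ORBIT_RADIUS
    velocity = -speed_squared / (2.0 * SPEED_OF_LIGHT**2)
    return gravitational * SECONDS_PER_DAY, velocity * SECONDS_PER_DAY


gr_offset, sr_offset = clock_offsets_per_day()
net_offset = gr_offset + sr_offset
range_error_km = net_offset * SPEED_OF_LIGHT / 1000.0

print(f"general relativity: {gr_offset * 1e6:+.1f} microseconds per day")
print(f"special relativity: {sr_offset * 1e6:+.1f} microseconds per day")
print(f"net drift:          {net_offset * 1e6:+.1f} microseconds per day")
print(f"distance light travels in that time: {range_error_km:.1f} km")
```

Under these assumptions the gravitational speed-up is several times larger than the slow-down due to velocity, and the uncorrected net drift of a few tens of microseconds per day corresponds to a light-travel distance of the order of ten kilometers.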

GPS applications are becoming more and more numerous, for instance with a cell phone feature especially popular in predominantly Islamic countries that always shows the precise direction to Mecca. The military applications for which the system was initially developed are by now clearly a minority, and those of geological exploration dominate, such as releasing light GPS devices in tropical storms to measure their temperature and pressure with position resolution. Also, the motion of the earth’s crust as a consequence of tectonics can be followed precisely, and these data can perhaps one day be used to predict earthquakes. Even the motion and deformation of buildings or bridges under weight can be registered with the enormous precision of GPS. In agriculture, GPS is sometimes used to distribute fertilizer and pesticides very precisely, and archaeologists use it to find and map ancient historical sites. GPS, a child of relativity, now provides feedback on the exploration of general relativity itself: It serves to define a worldwide clock standard, which can then be used, for instance, to precisely measure the orbits in Hulse and Taylor’s double pulsar.

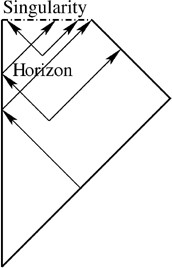

All this shows the technological relevance of relativity theory, whose implications may be tiny in quantitative terms but are important given the current sensitivity of applications. When considering cosmic scales, on the other hand, the consequences of relativity become immense. Time is no longer rigid, as it was for Newton, but is influenced by matter in the universe. In extreme situations, this can, according to general relativity, imply that time itself comes to an end. The influence of matter on space-time is then so strong that time stops or space reaches an unsurpassable limit. Following relativity, this is supposed to have happened at the big bang (when we consider the universe in its backward evolution) or in black holes, where gravitational forces become so large that spatial or timelike distances shrink ever more and eventually vanish completely. Without timelike separations between events, time itself must die, and with it everything that happens. This dreadful conclusion applies to all material bodies as it does to the universe itself: Nothing can reach beyond such a point, a singularity.

What exactly would happen at a singularity? To see this, one must study the mathematical equations of general relativity, for they describe the structure of space and time. According to the continuous form of space-time, represented mathematically by differential geometry, those are differential equations. That is, Einstein’s equations determine how the form of space-time near a point must behave on account of the matter present, or more precisely, on account of its energy density and pressure. Thus the equations correspond to a continuum picture, visualizing space-time as a curved and wrinkled sheet, albeit in four dimensions. In contrast to a real fabric woven from threads with gaps in between, the sheet of general relativity has no structure whatsoever when it is viewed on the smallest distance scales: hence the use of differential equations, which describe the change of space-time under the smallest shifts of position in it. These smallest changes are not atomic, but smaller than any possible size: They arise in a mathematical limiting procedure to conceptualize the continuum picture.

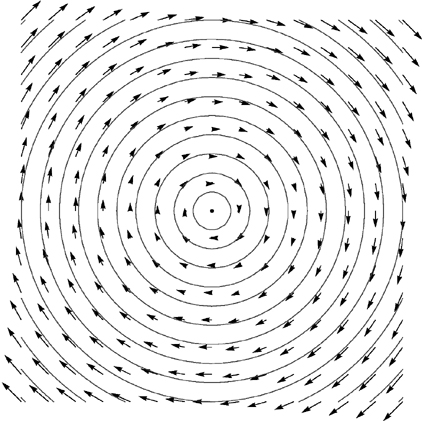

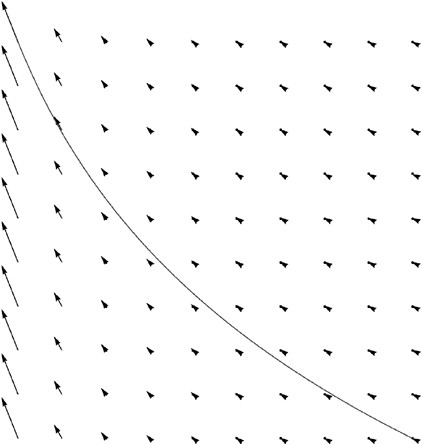

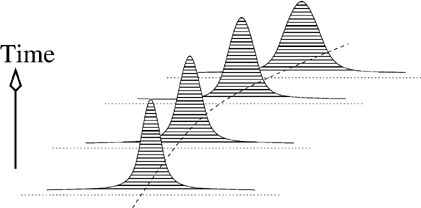

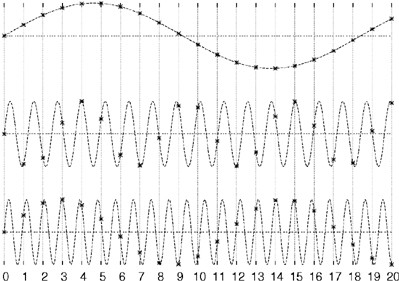

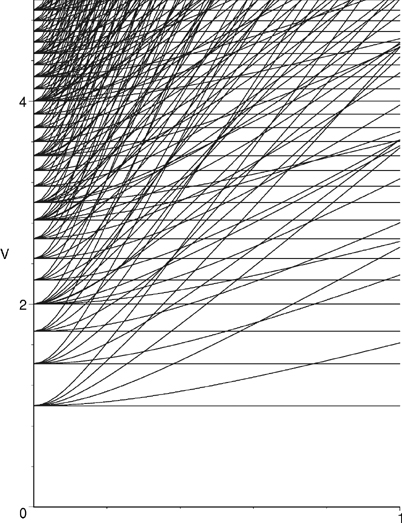

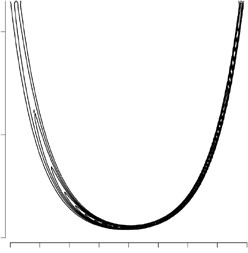

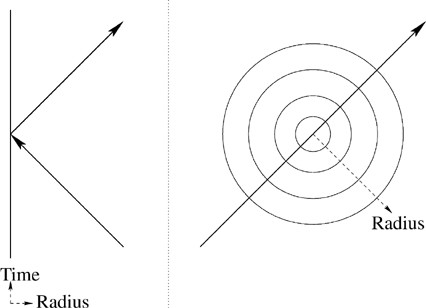

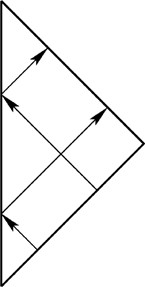

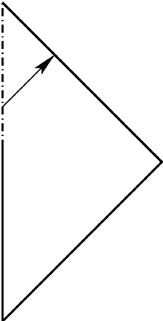

A differential equation can be visualized by its velocity field, in which an arrow is planted at every point to indicate the direction and size of the rate of change. In general relativity, the ground of the field in the simplest cases is a plane on which each point, by its two coordinates, determines time and the expansion of the universe at that time. A solution to the differential equation is a curve in the plane required to follow the direction of the velocity arrow at each point. One can often represent the solutions graphically, as in figure 3, but there are also mathematical methods to directly represent them in the form of a function, or numerical techniques to find such curves with computers.

The graphic construction shows that the velocity field is not sufficient to determine a unique solution; it indicates, after all, just the direction to move at each point. First one must know where to start: A point, the initial condition, must also be chosen. If this is done, one obtains a unique solution in most cases, but the form of initial conditions can, depending on the problem analyzed, take more complex forms than a single point.
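The numerical route can be illustrated with a vortex field like the one in figure 3. The Python sketch below is a minimal example under stated assumptions: the field is taken to be (-y, x), and the curve is traced with the classical fourth-order Runge-Kutta method, one common choice among many. Function names are made up for this illustration.

```python
import math

# Tracing a solution curve through a velocity field by taking many small
# steps along the arrows. Assumption: the vortex field is (-y, x), whose
# exact solution curves are circles around the origin.


def vortex_field(x, y):
    """Arrow (rate of change) attached to the point (x, y)."""
    return -y, x


def follow_arrows(x, y, total_time, steps):
    """Trace a solution curve from the initial condition (x, y)."""
    dt = total_time / steps
    path = [(x, y)]
    for _ in range(steps):
        # Classical fourth-order Runge-Kutta step.
        k1x, k1y = vortex_field(x, y)
        k2x, k2y = vortex_field(x + 0.5 * dt * k1x, y + 0.5 * dt * k1y)
        k3x, k3y = vortex_field(x + 0.5 * dt * k2x, y + 0.5 * dt * k2y)
        k4x, k4y = vortex_field(x + dt * k3x, y + dt * k3y)
        x += dt * (k1x + 2.0 * k2x + 2.0 * k3x + k4x) / 6.0
        y += dt * (k1y + 2.0 * k2y + 2.0 * k3y + k4y) / 6.0
        path.append((x, y))
    return path


# Same velocity field, two different initial conditions.
path = follow_arrows(1.0, 0.0, 2.0 * math.pi, 1000)
other_path = follow_arrows(2.0, 0.0, 2.0 * math.pi, 1000)

radii = [math.hypot(px, py) for px, py in path]
other_radii = [math.hypot(px, py) for px, py in other_path]

print(f"first curve:  radius stays between {min(radii):.6f} and {max(radii):.6f}")
print(f"second curve: radius stays between {min(other_radii):.6f} and {max(other_radii):.6f}")
```

Both curves obey the same arrows, yet they are different circles: the field alone does not single out a solution until the starting point is fixed.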

In cosmology, the velocity field of cosmic expansion is determined by matter in the universe. Small changes of space-time caused by the smallest shifts in position are then given by the size of the energy density and pressure of the surrounding matter. As the initial condition we can posit a state resembling what we see now in the universe, a mathematical configuration with expanding space and diluting matter content. This leaves different possibilities for future development: If the amount of matter is small, below a certain critical density, the universe will expand forever and become more and more diluted. But amounts of matter above the critical density would exert a stronger gravitational force, which, in the distant future, can first stop the expansion of the whole universe and then make it collapse on itself. In this case, there will be a time when the universe turns around, like a rock thrown up in the air, and then contracts. To decide which case will be realized for our universe, we must determine the exact amount of matter—not an easy task. We can estimate and add the masses of all stars and of the hydrogen gas in and between the galaxies, but, as we will see later in the context of observational cosmology, there are further forms of matter and energy more difficult to quantify. Their origin is not known, but observations indicate that the total energy density is very near the critical one. It thus remains uncertain whether our universe will expand forever or one day collapse.
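To give the critical density a number, one can use the standard textbook expression 3H²/(8πG), where H is the present expansion rate. This formula is not stated in the text above, and the value of 70 kilometers per second per megaparsec used for H is an assumed round figure. The sketch also follows the simplified matter-only picture of this paragraph and ignores the further forms of energy mentioned in it.

```python
import math

# Critical density of the universe in the simplest matter-only picture.
# Assumptions (not from the text): rho_crit = 3 H^2 / (8 pi G) and an
# expansion rate of 70 km/s per megaparsec.

GRAVITATIONAL_CONSTANT = 6.67430e-11   # m^3 kg^-1 s^-2
METERS_PER_MEGAPARSEC = 3.0857e22
HYDROGEN_ATOM_MASS = 1.6735e-27        # kg
ASSUMED_HUBBLE_RATE = 70.0             # km/s per megaparsec


def critical_density(hubble_km_s_mpc):
    """Critical mass density in kg per cubic meter."""
    hubble_si = hubble_km_s_mpc * 1000.0 / METERS_PER_MEGAPARSEC  # 1/s
    return 3.0 * hubble_si**2 / (8.0 * math.pi * GRAVITATIONAL_CONSTANT)


def long_term_fate(density, hubble_km_s_mpc):
    """Fate of a universe filled only with ordinary gravitating matter."""
    if density > critical_density(hubble_km_s_mpc):
        return "recollapses"
    return "expands forever"


rho_crit = critical_density(ASSUMED_HUBBLE_RATE)
atoms_per_cubic_meter = rho_crit / HYDROGEN_ATOM_MASS

print(f"critical density: {rho_crit:.2e} kg per cubic meter")
print(f"equivalent to about {atoms_per_cubic_meter:.1f} hydrogen atoms per cubic meter")
print(long_term_fate(0.5 * rho_crit, ASSUMED_HUBBLE_RATE))
print(long_term_fate(2.0 * rho_crit, ASSUMED_HUBBLE_RATE))
```

With these inputs the critical density comes out at only a handful of hydrogen atoms per cubic meter, which hints at why weighing the universe precisely enough to decide its fate is so difficult.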

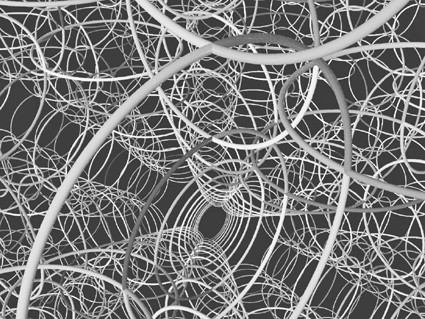

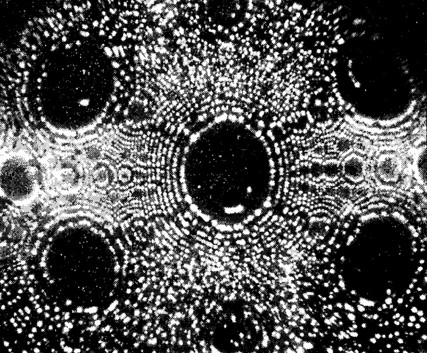

3. Most physical systems are mathematically given by specifying their rates of change everywhere, formulated as a differential equation. The rate of change can graphically be represented by arrows such that a solution to the problem is a curve always taking the direction of the arrows. In this example, the velocity field of a vortex is shown, whose solutions are circles around the center point.

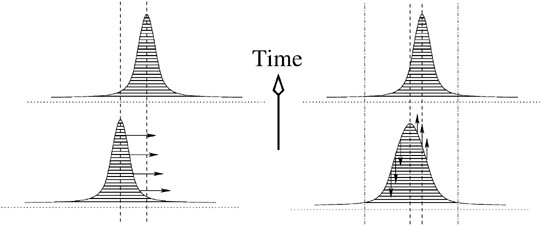

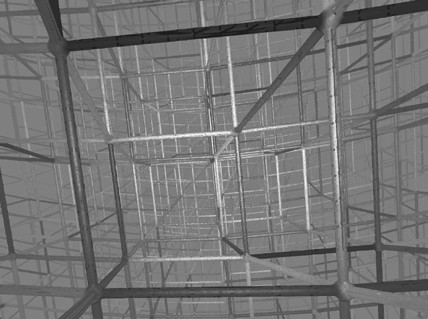

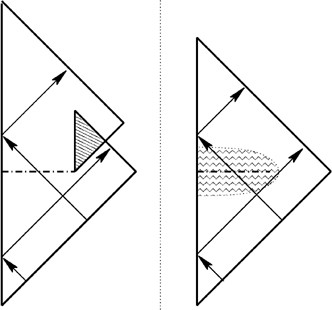

The future is always uncertain; we should therefore have a look at what happened in the past. Owing to its expansion, the younger universe was smaller than it is now, and very long ago it was hotter than any kind of matter existing on Earth or even in stars. Under such extreme conditions, the behavior of matter is little known, but general consequences for the universe only weakly depend on it: A detailed analysis of Einstein’s equations shows that some of the spatial or timelike distances must be unimaginably small at the moment of the big bang (and in black holes). One can see this in the velocity field of figure 4, which pushes every solution curve to a point of vanishing volume. The energy density of matter—the ratio of the total energy to the occupied volume—becomes infinite: One divides by zero when an extension, and thus the volume as a product of extensions, vanishes. Here we have the physical dilemma that no matter can persist at infinite density; also, such a divergence is mathematically so problematic that it leads to the breakdown of Einstein’s equations. Even if we tried to ignore the problem of infinite density, the equations would tell us that the form of space-time changes by an infinite amount even at the tiniest change of position (or time). This cannot possibly be a useful coherent structure: Space-time is torn asunder at the singularity.
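The division by zero can be written out explicitly. The first line below only restates the definition of energy density used in this paragraph, with E the total energy and L_1, L_2, L_3 the three extensions whose product is the volume V. The second line is an added illustration under a stated assumption, namely a spatially flat universe filled with pressureless matter, for which the standard solution of Einstein's equations gives a volume proportional to t², with t the time elapsed since the singularity and G Newton's constant.

```latex
\rho = \frac{E}{V} = \frac{E}{L_1 L_2 L_3}
\quad\Longrightarrow\quad
\rho \to \infty \ \text{ as any } L_i \to 0 \ \text{ (for fixed } E > 0\text{)}

% Assumed example: flat universe with pressureless matter
V(t) \propto t^{2}
\quad\Longrightarrow\quad
\rho(t) = \frac{1}{6\pi G\, t^{2}} \to \infty \ \text{ as } t \to 0
```

In this assumed example the density is finite at every positive time, however small, and it is only in the limit t = 0 that the expression ceases to make sense.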

Singularities constitute a serious problem, a theoretical misdemeanor that will eventually force us to abandon general relativity as a fundamental description, or to extend it. The tremendous consequence that space and time reach an end in their theoretical description does not mean a physically predicted beginning or end of the world. Even though the mathematical equations show that a point is reached at which all distances vanish, the equations themselves then lose all their meaning. The theory becomes unreliable at this point and simply can no longer be used for predictions; it leads us to this singular place near the abyss, but then leaves us alone with the question of its meaning and of what lies beyond. From then on, we have to look for a new guide.

4. The differential equation of cosmology is shown here by its velocity field as it follows from Einstein’s equation. The volume of the universe changes horizontally; vertically, the density of the matter content grows. The closer one comes to the left border, where the universe volume vanishes, the longer the arrows become. Every solution curve along the arrows is inescapably drawn to the border. Moreover, the matter density grows without bounds and becomes infinite for vanishing volume at the left border.

Just like Newton’s theory, Einstein’s gravitational law is flawed, but much more seriously so. While Newton was uneasy with the animalistic tendencies of his theory, the shadow looming over general relativity is decidedly more ominous. In the words of the physicist John Wheeler, general relativity contains the seed of its own destruction—which is a condition for greatness: “All great things perish on their own, by an act of self-elimination.”9 Indeed, general relativity is unique not just in its power but also in telling us its own limits in a strong, undeniable way. Singularity problems of this form exist nowhere else, since only general relativity, so far, addresses the behavior of space and time without bias. By being such a strong barrier, the singularity problem attracts much interest in trying to overcome it by more complete theories. In the comparison with a novel, this means that the book itself is not only a character but will, in the course of the action, perish.10

Suicidal theories are very uncommon in physics, and so it is not surprising that consequences are often misinterpreted.11 Even Einstein thought that limits of space and time due to singularities appear only in special cases and not in general situations. In his time, such an opinion was not entirely unrealistic, for not much was known about the various mathematical solutions of general relativity. (Einstein did, however, make fruitless attempts to prove the irrelevance of singularities.) Only after later studies by Stephen Hawking, Roger Penrose, and others in the 1960s did it become clear that limits of space-time cannot be avoided within general relativity and must be taken seriously.12 Mathematical solutions, required only to be compatible with the present form of the universe, have at least one singularity—a limit to space and time—where general relativity itself loses its validity.

LACK OF REPULSION: THE DANGERS OF BEING TOO ATTRACTIVE

We saw this for the simplest of all natural phenomena, gravity, which does not stop striving and pushing toward an extensionless center point, which when reached would be its own and matter’s demise, which does not stop even if the whole world would already be conglomerated.

—ARTHUR SCHOPENHAUER, The World as Will and Representation