Praise for Science Friction

“You may disagree with Michael Shermer, but you’d better have a good reason—and you’ll have your work cut out finding it. He describes skepticism as a virtue, but I think that understates his own unique contribution to contemporary intellectual discourse. Worldly-wise sounds wearily cynical, so I’d call Shermer universe-wise. I’d call him shrewd, but it doesn’t do justice to the breadth and depth of his inspired scientific vision. I’d call him a spirited controversialist, but that doesn’t do justice to his urbane good humor. Just read this book. Once you start, you won’t stop.”

—Richard Dawkins, author of The Selfish Gene and A Devil’s Chaplain

“It is both an art and a discipline to rise above our inevitable human biases and look in the eye truths about how the world works that conflict with the way we would like it to be. In Science Friction, Michael Shermer shines his beacon on a delicious range of subjects, often showing that the truth is more interesting and awe-inspiring than the common consensus. Bravo.”

—John McWhorter, author of The Power of Babel and Losing the Race

“Michael Shermer challenges us all to candidly confront what we believe and why. In each of the varied essays in Science Friction, he warns how the fundamentally human pursuit of meaning can lead us astray into a fog of empty illusions and vacuous idols. He implores us to stare honestly at our beliefs and he shows how, through adherence to bare reason, the profound pursuit of meaning can instead lead us to truth—and how, in turn, truth can lead us to meaning.”

—Janna Levin, author of How the Universe Got Its Spots

“Whether the subject is ultra-marathon cycling or evolutionary science, Michael Shermer—who has excelled at the former and become one of our leading defenders of the latter—never writes with anything less than full-throttled engagement. Incisive, penetrating, and mercifully witty, Shermer throws himself with brio into some of the most serious and disturbing topics of our times. Like the best passionate thinkers, Shermer has the power to enrage his opponents. But even those who don’t agree with him will be sharpened by the encounter with this feisty book.”

—Margaret Wertheim, author of Pythagoras’ Trousers

Also by Michael Shermer

The Science of Good and Evil

In Darwin’s Shadow:

The Life and Science of Alfred Russel Wallace

The Skeptic Encyclopedia of Pseudoscience (general editor)

The Borderlands of Science

Denying History

How We Believe

Why People Believe Weird Things

Science Friction

SCIENCE FRICTION

Where the Known

Meets the Unknown

MICHAEL SHERMER

Owl Books

Henry Holt and Company, LLC

Publishers since 1866

175 Fifth Avenue

New York, New York 10010

www.henryholt.com

An Owl Book® and  ® are registered trademarks of Henry Holt and Company, LLC.

Copyright © 2005 by Michael Shermer

All rights reserved.

Distributed in Canada by H. B. Fenn and Company Ltd.

All artwork and illustrations, except as noted in the text, are by Pat Linse, are copyrighted by Pat Linse, and are reprinted with permission.

For further information on the Skeptics Society and Skeptic magazine, contact P.O. Box 338, Altadena, CA 91001, 626-794-3119; fax: 626-794-1301; e-mail: [email protected]. www.skeptic.com

Library of Congress Cataloging-in-Publication Data

Shermer, Michael.

Science friction: where the known meets the unknown/

Michael Shermer.—1st ed.

p. cm.

Includes index.

ISBN-13: 978-0-8050-7914-2

ISBN-10: 0-8050-7914-9

1. Science—Philosophy. 2. Science—Miscellanea. I. Title.

Q175.S53437 2005

501—dc22

2004051708

Henry Holt books are available for special promotions and premiums. For details contact: Director, Special Markets.

Originally published in hardcover in 2005 by Times Books

First Owl Books Edition 2006

Designed by Victoria Hartman

Printed in the United States of America

10 9 8 7 6 5 4 3

To Pat Linse

For her steadfast loyalty, penetrating intelligence, and illustrative originality

Contents

Introduction: Why Not Knowing

I / Science and the Virtues of Not Knowing

1. Psychic for a Day

2. The Big “Bright” Brouhaha

3. Heresies of Science

4. The Virtues of Skepticism

II / Science and the Meaning of Body, Mind, and Spirit

5. Spin-Doctoring Science

6. Psyched Up, Psyched Out

7. Shadowlands

III / Science and the (Re)Writing of History

8. Darwin on the Bounty

9. Exorcising Laplace’s Demon

10. What If?

11. The New New Creationism

12. History’s Heretics

IV / Science and the Cult of Visionaries

13. The Hero on the Edge of Forever

Introduction

Why Not Knowing

Science and the Search for Meaning

THAT OLD PERSIAN TENTMAKER (and occasional poet) Omar Khayyám well captured the human dilemma of the search for meaning in an apparently meaningless cosmos:

Into this Universe, and Why not knowing,

Nor Whence, like Water willy-nilly flowing;

And out of it, as Wind along the Waste,

I know not Whither, willy-nilly blowing.

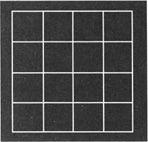

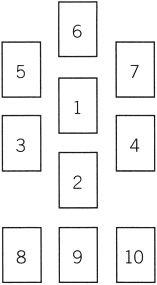

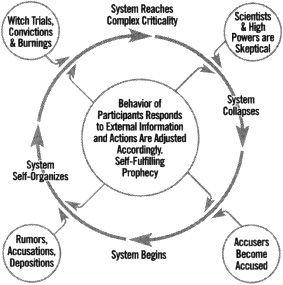

It is in the vacuum of such willy-nilly whencing and whithering that we humans are so prone to grasp for transcendent interconnectedness. As pattern-seeking primates we scan the random points of light in the night sky of our lives and connect the dots to form constellations of meaning. Sometimes the patterns are real, sometimes not. Who can tell? Take a look at figure I.1. How many squares are there?

Figure I.1

The answer most people give upon first glance is 16 (4×4). Upon further reflection, most observers note that the entire figure is a square, upping the answer to 17. But wait! There’s more. Note the 2×2 squares. There are 9 of those, increasing our count total to 26. Look again. Do you see the 3×3 squares? There are 4 of those, producing a final total of 30. So the answer to a simple question for even such a mundane pattern as this ranged from 16 to 30. Compared to the complexities of the real world, this is about as straightforward as it gets, and still the correct answer is not immediately forthcoming.
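The tally above can be checked with a few lines of Python. This is only an illustrative sketch; the function name `count_squares` is invented here and is not from the text. It relies on the observation that a square of side k fits into a 4×4 grid in (4 − k + 1) positions horizontally and the same number vertically.

```python
def count_squares(n: int) -> dict[int, int]:
    """Map each side length k to the number of k-by-k squares in an n-by-n grid."""
    # A k-by-k square can start in any of (n - k + 1) columns and (n - k + 1) rows.
    return {k: (n - k + 1) ** 2 for k in range(1, n + 1)}


counts = count_squares(4)
total = sum(counts.values())
print(counts)  # {1: 16, 2: 9, 3: 4, 4: 1}
print(total)   # 30
```

The four entries match the running count in the text: 16 small squares, 9 of size 2×2, 4 of size 3×3, and the single outer square.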

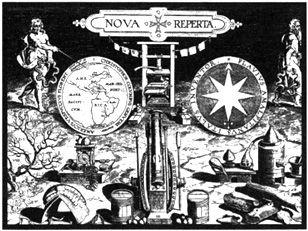

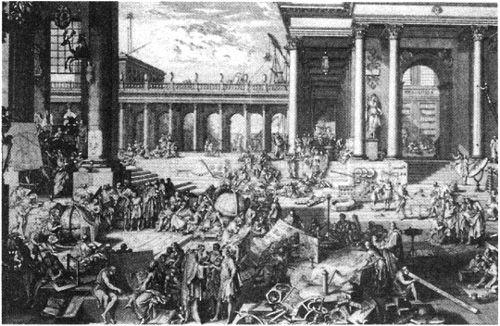

Ever since the rise of modern science beginning in the sixteenth century, scientists and philosophers have been aware that the facts never speak for themselves. Objective data are filtered through subjective minds that distort the world in myriad ways. One of the founders of early modern science, the seventeenth-century English philosopher Sir Francis Bacon, sought to overthrow the traditions of his own profession by turning away from the scholastic tradition of logic and reason as the sole road to truth, as well as rejecting the Renaissance (literally “rebirth”) quest to restore the perfection of ancient Greek knowledge. In his great work entitled Novum Organum (“new tool,” patterned after, yet intended to surpass, Aristotle’s Organon), Bacon portrayed science as humanity’s savior that would inaugurate a “Great Instauration,” or a restoration of all natural knowledge through a proper blend of empiricism and reason, observation and logic, data and theory.

Bacon was no naive Utopian, however. He understood that there are significant psychological barriers that interfere with our understanding of the natural world, of which he identified four types, which he called idols: idols of the cave (peculiarities of thought unique to the individual that distort how facts are processed in a single mind), idols of the marketplace (the limits of language and how confusion arises when we talk to one another to express our thoughts about the facts of the world), idols of the theater (preexisting beliefs, like theater plays, that may be partially or entirely fictional, and influence how we process and remember facts), and idols of the tribe (the inherited foibles of human thought endemic to all of us—the tribe—that place limits on knowledge). “Idols are the profoundest fallacies of the mind of man,” Bacon explained. “Nor do they deceive in particulars . . . but from a corrupt and crookedly-set predisposition of the mind; which doth, as it were, wrest and infect all the anticipations of the understanding.”

Consider the analogy of a swimming pool with a cleaning brush on a long pole, half in and half out of the water—the pole appears impossibly bent; but we recognize the illusion and do not confuse the straight pole for a bent one. Bacon brilliantly employs something like this analogy in his conclusion about the effects of the idols on how we know what we know about the world: “For the mind of man is far from the nature of a clear and equal glass, wherein the beams of things should reflect according to their true incidence; nay, it is rather like an enchanted glass, full of superstition and imposture, if it be not delivered and reduced.” In the end, thought Bacon, science offers the best hope to deliver the mind from such superstition and imposture. I concur, although the obstacles are greater than even Bacon realized.

For example, do you see a young woman or an old woman in figure I.2?

This is an intentionally ambiguous figure where both are equal in perceptual strength. Indeed, roughly half see the young woman upon first observation, and half see the old woman. For most, the young and old woman image switches back and forth. In experiments in which subjects are first shown a stronger image of the old woman, when shown this ambiguous figure almost all see the old woman first. Subjects who are initially exposed to a stronger image of the young woman, when shown this ambiguous figure almost all see the young woman first. The metaphoric extrapolation to both science and life is clear: we see what we are programmed to see—Bacon’s idol of the theater.

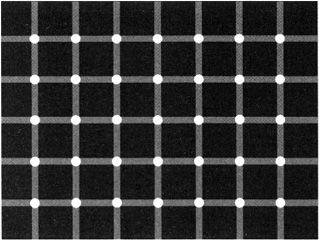

Idols of the tribe are the most insidious because we all succumb to them, thus making them harder to spot, especially in ourselves. For example, count the number of black dots in figure I.3.

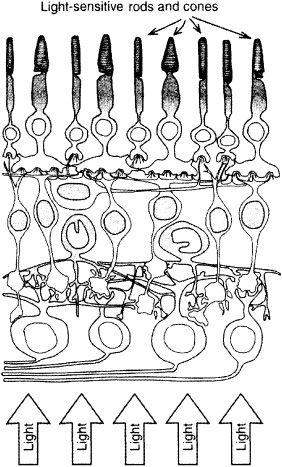

The answer, as you will frustratingly realize within a few seconds, is that it depends on what constitutes a “dot.” In the figure itself there are no black dots. There are only white dots on a highly contrasting background that creates an eye-brain illusion of blinking black-and-white dots. Thus, in the brain, one could make the case that there are 35 black dots that exist as long as you don’t look at any one of them directly. In any case, this illusion is a product of how our eyes and brains are wired. It is in our nature, part of the tribe, a product of rods and cones and neurons only. And it doesn’t matter if you have an explanation for the illusion or not; it is too powerful to override.

Figure I.2

Figure I.3

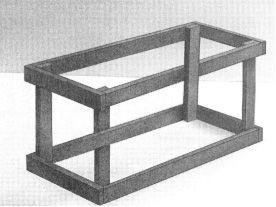

Figures I.4 and I.5. The 3-D impossible crate

Figure I.4, the “impossible crate,” is another impossible figure. Can you see why?

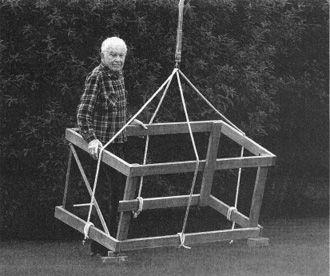

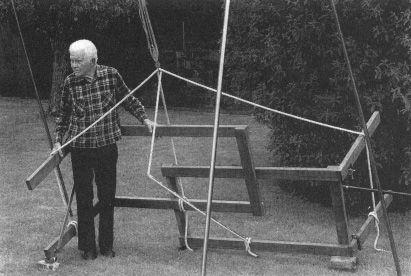

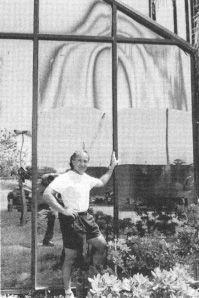

All of our experiences have programmed our brains to know that a straight beam of wood in the back of the crate cannot also cross another beam in the front of the crate. Although we know that this is impossible in the real world, and that it is simply an illusion created by a mischievous psychologist, we are disturbed by it nonetheless because it jars our perceptual intuitions about how the world is supposed to work. We also know that this is a two-dimensional figure on a piece of paper, so our sensibilities about the three-dimensional world are preserved. How, then, do you explain figure I.5, a three-dimensional impossible crate?

This is a real crate with a real man standing inside of it. I know because the man is a friend of mine—the brilliant magician and illusionist Jerry Andrus—and I’ve seen the 3-D impossible crate myself. Like other professional magicians and illusionists, Jerry makes his living creating interesting and unusual ways to fool us. He depends on the idols of the tribe operating the same way every time. And they do. Magicians do not normally reveal their secrets, but Jerry has posted this one on the Internet and shown it to countless audiences, so as a lesson in willy-nilly knowing, figure I.6 provides the solution to the 3-D impossible crate.

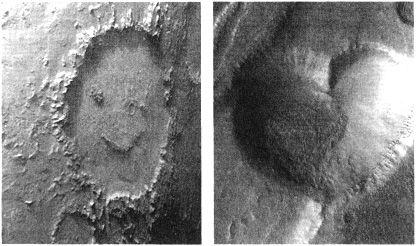

Buried deep in our tribal instincts are idols of recognizable importance to our personal and cultural lives. As an example of the former, note the striking feature in the photograph from Mars in figure I.7, taken in July 1976 by the Viking Orbiter 1 from a distance of 1,162 miles, as it was photographing the surface in search of a viable landing site for the Viking Lander 2.

The face is unmistakable. Two eye sockets, a nose, and a mouth gash form the rudiments of a human face. What’s that doing on Mars? For decades, eager searchers perceptually poised to confirm their beliefs in extraterrestrial intelligence claimed that this feature (about a mile across), as well as others they had gleaned, was an example of Martian monumental architecture, the remnants of a once-great civilization now lost to the ravages of time. Numerous articles, books, documentaries, and Web pages breathlessly speculated about this lost Martian civilization, demanding that NASA reveal the truth. This NASA did when it released the photograph of the “face” taken by the Mars Global Surveyor in 2001, seen in figure I.8.

Figure I.6. The 3-D impossible-crate illusion explained. Camera angle is everything!

Figure I.7 (above left). An unmistakable feature on Mars photographed by a NASA spacecraft in 1976.

Figure I.8 (above right). The “face” on Mars morphs into an eroded mountain range.

In the light of a high-resolution camera, the “face” suddenly morphs into an oddly eroded mountain range, the product of natural, not artificial, forces. Erosion, not Martians, carved the mountain. This silenced all but the most hard-core Ufologists.

Such random patterns are often seen by humans as faces, such as the “happy face” on Mars “discovered” in 1999 (figure I.9). If astronomers were romantic poets, would they find hearts on distant planets, like the one in figure I.9, also from Mars?

We see faces because we were programmed by evolution to see the expressions of those most powerful in our social group, starting with imprinting on the most important faces in our sphere: those of our parents.

We also see at work Bacon’s idols of the cave in the peculiarities of religious icons that often make their appearances in the most unusual of places, such as the famous “nun bun” discovered by a Nashville, Tennessee, coffee shop owner in 1996. The idea of seeing a nun’s face in a pastry provokes laughter among most lay audiences (it was featured on David Letterman’s show, for example). But some deeply religious people flocked to show their reverence when the nun bun was put on display at the Bongo Java coffee shop. (An attorney representing Mother Teresa forced the bun’s owner, Bob Bernstein, to remove her name from the icon.)

Figure I.9. The “happy face” on Mars, along with a Martian heart

Arguably the greatest religious icon in history (after the cross, of course) is the Virgin Mary, who has made routine appearances around the world and throughout history. In 1993, for instance, she appeared on the side of an oak tree in Watsonville, California, a small town whose population is 62 percent Mexican-Americans and whose dominant religion is Catholicism. In 1996 the Virgin Mary manifested on the side of a bank building in Clearwater, Florida. Once again, believers gathered around the icon, often in wheelchairs and on crutches, in some cases hoping to be healed.

A Christian group purchased the building in order to preserve the religious image, fencing off the parking lot, which is now chockablock full of candles lit in veneration. However, as I discovered in visiting the site in 2003, it turns out that there are several Virgin Marys on the sides of this building, appearing wherever there happens to be a sprinkler and a palm tree. The water, contrary to the name of the city, is not so clear. In fact, it is rather brackish, loaded with minerals that stain windows such as these (see figure I.10; the palm tree that originally stood in front of the window where the big Virgin Mary image appears has since been cut down by the owners).

The image is a striking example of the power of beliefs to determine perceptions. Instead of saying, “I wouldn’t have believed it if I hadn’t seen it,” we probably should be saying, “I wouldn’t have seen it if I hadn’t believed it.” As with faces, we see religious icons because we were programmed by history and culture to see those features most representative of those institutions of great power, starting with the religion of our parents.

Nowhere are such idols harder to see in ourselves than the subtle psychological biases we harbor. Consider the confirmation bias, in which we look for and find confirmatory evidence for what we already believe and ignore disconfirmatory evidence. For my monthly column in Scientific American I wrote an essay (June 2003) on the so-called Bible Code, in which the claim is made that the first five books of the Bible—the Pentateuch—in its original Hebrew contain hidden patterns that spell out events in world history, even future history. A journalist named Michael Drosnin wrote two books on the subject, both New York Times bestsellers, in which he claimed in the second volume to have predicted 9/11. My analysis was very skeptical of this claim (I told him in a personal letter that it would have been nice if he had alerted everyone to 9/11 before the event instead of after). He wrote a letter to the magazine (and had an attorney threaten them and me with a libel suit), which they published. In response, I received a most insightful letter from John Byrne, a well-known comic book writer and illustrator of Spider-Man and other superheroes. I reprint it here because he makes the point about this cognitive bias so well.

Figure I.10 (above left). The Virgin Mary on the side of a bank building in Clearwater, Florida. (The author, in the middle, is bracketed by Richard Dawkins, left, and James Randi.)

Figure I.11 (above right). Another Virgin Mary on another side of the bank building

Reading Michael Drosnin’s response to Michael Shermer’s column on the Bible “code” and its ability to accurately predict the future, I could not help but laugh. I have been a writer and illustrator of comic books for the past 30 years, and in that time I have “predicted” the future so many times in my work my colleagues have actually taken to referring to it as “the Byrne Curse.”

It began in the late 1970s. While working on a Spider-Man series titled “Marvel Team-Up” I did a story about a blackout in New York. There was a blackout the month the issue went on sale (six months after I drew it). While working on “Uncanny X-Men” I hit Japan with a major earthquake, and again the real thing happened the month the issue hit the stands.

Now, those things are fairly easy to “predict,” but consider these: When working on the relaunch of Superman, for DC Comics, I had the Man of Steel fly to the rescue when disaster beset the NASA space shuttle. The Challenger tragedy happened almost immediately thereafter, with time, fortunately, for the issue in question to be redrawn, substituting a “space plane” for the shuttle.

Most recent, and chilling, came when I was writing and drawing “Wonder Woman,” and did a story in which the title character was killed, as a prelude to her becoming a goddess. The cover for that issue was done as a newspaper front page, with the headline “Princess Diana Dies.” (Diana is Wonder Woman’s real name.) That issue went on sale on a Thursday. The following Saturday . . . I don’t have to tell you, do I?

My ability as a prognosticator, like Drosnin’s, would seem assured—provided, of course, we reference only the above, and skip over the hundreds of other comic books I have produced which featured all manner of catastrophes, large and small, which did not come to pass.

In short, we remember the hits and forget the misses, another variation on the confirmation bias.

In recent decades experimental psychologists have discovered a number of cognitive biases that interfere with our understanding of ourselves and our world. The self-serving bias, for example, dictates that we tend to see ourselves in a more positive light than others see us: national surveys show that most businesspeople believe they are more moral than other businesspeople. In one College Entrance Examination Board survey of 829,000 high school seniors, 0 percent rated themselves below average in “ability to get along with others,” while 60 percent put themselves in the top 10 percent. This is also called the “Lake Wobegon effect,” after the mythical town where everyone is above average. Lake Wobegon exists in the spiritual realm as well. According to a 1997 U.S. News and World Report study on who Americans believe are most likely to go to heaven, for example, 60 percent chose Princess Diana, 65 percent thought Michael Jordan, 79 percent selected Mother Teresa, and, at 87 percent, the person most likely to go to heaven was the survey taker!

Experimental evidence of such cognitive idols has been provided by Princeton University psychology professor Emily Pronin and her colleagues, who tested a generalized idol called “bias blind spot,” in which subjects recognized the existence and influence in others of eight different specific cognitive biases, but they failed to see those same biases in themselves. In one study on Stanford University students, when asked to compare themselves to their peers on such personal qualities as friendliness, they predictably rated themselves higher. Even when the subjects were warned about the “better-than-average” bias and asked to reevaluate their original assessments, 63 percent claimed that their initial evaluations were objective, and 13 percent even claimed to be too modest! In a second study, Pronin randomly assigned subjects high or low scores on a “social intelligence” test. Unsurprisingly, those given the high marks rated the test fairer and more useful than those receiving low marks. When asked if it was possible that they had been influenced by the score on the test, subjects responded that the other participants were negatively influenced, but not them! In a third study in which Pronin queried subjects about what method they used to assess their own and others’ biases, she found that people tend to use general theories of behavior when evaluating others, but use introspection when appraising themselves; but in what is called the “introspection illusion,” people do not believe that others can be trusted to do the same. Okay for me but not for thee.

The University of California, Berkeley, psychologist Frank J. Sulloway and I made a similar discovery of an “attribution bias” in a study we conducted on why people say they believe in God, and why they think other people believe in God. In general, most people attribute their own belief in God to such intellectual reasons as the good design and complexity of the world, whereas they attribute others’ belief in God to such emotional reasons as it is comforting, gives meaning, and they were raised to believe.

Such biases in our beliefs do not prove, of course, that there is no God (or Martians or Virgin Marys); however, in identifying all these different factors influencing (and often determining) what it is we see and think about the world, it calls into question how we know anything. There are many answers to this solipsistic challenge—consistency, coherence, and correspondence being just three devised by philosophers and epistemologists—but for my money there is no more effective Reliable Knowledge Kit than science. The methods of science, in fact, were specifically designed to weed out idols and biases. Some patterns are real and some are not. Science is the only way to know for sure.

Cancer clusters are a prime example. As portrayed in Steven Soderbergh’s 2000 film Erin Brockovich, starring Julia Roberts as the buxom legal assistant cum corporate watchdog, lawyers can strike a financial bonanza with juries who do not understand that correlation does not necessarily mean causation. Toss a handful of pennies up into the air and let them fall where they may, and you will see small “clusters” of pennies, not a perfectly even distribution. Millions of Americans get cancer—they are not evenly distributed throughout the country; they are clustered. Every once in a while, they may be grouped in a town where a big industrial plant owned by a wealthy corporation has been dumping potentially toxic waste products. Is the cancer cluster due to the potentially toxic waste, or is it due to random chance? Ask a lawyer and his clients hoping for a large cash settlement from the corporation, and they will give you an unambiguous answer: cluster = cause = cash. Ask a scientist with no stake in the outcome and you will get a rather different answer: cluster = or ≠ cause. It all depends. Additional studies must be conducted. Are there other towns and cities with similar correlations between the chemical waste product and the same type of cancer cluster? Are there epidemiological studies connecting these chemicals to that cancer? Is there a plausible chemical or biological mechanism linking that chemical to that cancer? The answers to such questions, usually in the negative, often come long after juries have granted plaintiffs large awards, or after corporations grow weary and financially drained fighting such suits and opt to settle out of court.
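The penny toss is easy to simulate. The sketch below is an illustration only: the grid size, the number of pennies, the seed, and the function name `toss_pennies` are all assumptions made here, not details from the text. It drops pennies at random onto a 5×5 grid of equal cells and compares the fullest and emptiest cells with the average.

```python
import random
from collections import Counter


def toss_pennies(n_pennies: int, grid: int, seed: int = 0) -> Counter:
    """Drop pennies uniformly at random onto a grid-by-grid board of cells."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    cells = Counter()
    for _ in range(n_pennies):
        cell = (rng.randrange(grid), rng.randrange(grid))
        cells[cell] += 1
    return cells


GRID = 5        # hypothetical 5x5 "map" of towns
PENNIES = 100   # hypothetical number of cases

cells = toss_pennies(PENNIES, GRID)
# Include empty cells so the spread between cells is visible.
counts = [cells.get((row, col), 0) for row in range(GRID) for col in range(GRID)]
average = PENNIES / (GRID * GRID)

print("average per cell:", average)
print("fullest cell:", max(counts))
print("emptiest cell:", min(counts))
```

Every cell has the same chance of catching each penny, yet the counts typically differ from cell to cell. A cell that ends up well above the average is a "cluster" with no cause behind it at all, which is why a cluster alone cannot settle the question of causation.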

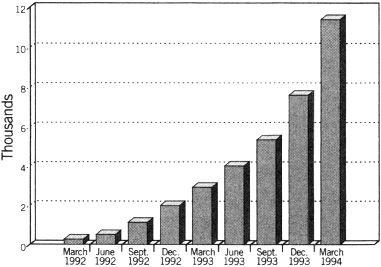

A similar problem was seen in the silicone breast implant scare of the late 1980s and early 1990s. I distinctly recall the advertisements placed in the Los Angeles Times by legal firms, alerting any women with silicone breast implants that they might be entitled to a significant financial award if they exhibit any of the symptoms listed in the ad, which was a laundry list of aches and pains connected to a variety of autoimmune and connective tissue diseases (as well as the vagaries of everyday life). A hotline was also established: 1/800-RUPTURE. Women responded . . . in droves, and the litigant attorneys paraded their clients in front of the courthouse with placards that read WE ARE THE EVIDENCE. In 1991, one of these women, Mariann Hopkins, was awarded $7.3 million after a jury determined that her ruptured silicone breast implant caused a connective tissue disease. Within weeks, 137 lawsuits were filed against the manufacturer, Dow Corning. The next year another woman, Pamela Jean Johnson, won $25 million after a jury linked to her implants connective tissue disease, autoimmune responses, chronic fatigue, muscle pain, joint pain, headaches, and dizziness, even though the scientists who testified for the defense said her symptoms amounted to nothing more than “a bad flu.” By the end of 1994, an unbelievable 19,092 individual lawsuits had been filed against Dow Corning, shortly after which the company filed for bankruptcy.

In the end, the confirmation bias won out and Dow Corning had to pay $4.25 billion to settle tens of thousands of claims. The only problem was, there is no connection between silicone breast implants and any of the diseases linked to them in these trials. After multiple independent studies by reputable scientific institutions in no way connected to either the corporation or any of the litigants, the Journal of the American Medical Association, the New England Journal of Medicine, the Journal of the National Cancer Institute, the National Academy of Sciences, and other medical organizations declared that this was a case of “junk science” in the courtroom. Dr. Marcia Angell, the executive editor of the New England Journal of Medicine, explained that this was nothing more than a chance overlap between two populations: 1 percent of American women have silicone breast implants, 1 percent of American women have autoimmune or degenerative tissue diseases. With millions of women in each of these categories, by chance tens of thousands will have both implants and disease, even though there is no causal connection. That’s all there is to it.
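The chance-overlap argument is simple arithmetic. In the sketch below, the two 1 percent rates come from the passage, but the population of 100 million women is a round number assumed here purely for illustration, and the function name `expected_overlap` is invented. If the two traits are independent, the expected number of women with both is the population times the product of the two rates.

```python
def expected_overlap(population: int, pct_a: int, pct_b: int) -> int:
    """Expected size of the overlap of two independent groups, given whole-number percentages."""
    # Integer arithmetic keeps the result exact: population * (a/100) * (b/100).
    return population * pct_a * pct_b // (100 * 100)


WOMEN = 100_000_000  # assumed round figure, not taken from the text
PCT_IMPLANTS = 1     # percent with silicone breast implants (from the text)
PCT_DISEASE = 1      # percent with autoimmune or tissue disease (from the text)

both = expected_overlap(WOMEN, PCT_IMPLANTS, PCT_DISEASE)
print(both)  # 10000
```

Under that assumed population the overlap is on the order of ten thousand women, and it scales up in direct proportion with a larger population, all without any causal link between implants and disease.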

Why, then, in this age of modern science, was this not clear to judges and juries? Because Bacon’s idols of the marketplace dictate that scientists and lawyers speak two different languages that represent dramatically different ways of thinking. The law is adversarial. Lawyers are pitted against one another. There will be a winner and a loser. Evidence is to be marshaled and winnowed to best support your side in order to defeat your opponent. As an attorney for the prosecution it doesn’t matter if silicone actually causes disease, it only matters if you can convince a jury that it does. Science, by contrast, attempts to answer questions about the way the world really works. Although scientists may be competitive with one another, the system is self-correcting and self-policing, with a long-term collective and cooperative goal of determining the truth. Scientists want to know if silicone really causes disease. Either it does or it does not.

Marcia Angell wrote a book on this subject, Science on Trial, in which she explained how “a lawyer questioning an epidemiologist in a deposition asked him why he was undertaking a study of breast implants when one had already been done. To the lawyer, a second study clearly implied that there was something wrong with the first. The epidemiologist was initially confused by the line of questioning. When he explained that no single study was conclusive, that all studies yielded tentative answers, that he was looking for consistency among a number of differently designed studies, it was the lawyer’s turn to be confused.” As executive editor of the New England Journal of Medicine, Angell recalled that she was occasionally asked why the journal does not publish studies “on the other side,” a concept, she explained, “that has no meaning in medical research.” There is “another side” to an issue only if the data warrant it, not by fiat.

The case of Marcia Angell is an enlightening one. She opens her book with a confession: “I consider myself a feminist, by which I mean that I believe that women should have political, economic, and social rights equal to those of men. As such, I am alert to discriminatory practices against women, which some feminists believe lie at the heart of the breast implant controversy. I am also a liberal Democrat. I believe that an unbridled free market leads to abuses and injustices and that government and the law need to play an active role in preventing them. Because of this view, I am quick to see the iniquities of large corporations.” What’s a disclaimer like this doing in a science book? She explains: “I disclose my political philosophy here, because it did not serve me well in examining the breast implant controversy. The facts were simply not as I expected they would be. But my most fundamental belief is that one should follow the evidence wherever it leads.” Francis Bacon would approve.

My favorite example of the beauty and simplicity of science is the case of Emily Rosa and her experiment on Therapeutic Touch. In the 1980s and early 1990s, Therapeutic Touch (TT) became a popular fad among nursing programs throughout the United States and Canada. The claim is that the human body has an energy field that extends beyond the skin, and that this field can be detected and even manipulated by skilled TT practitioners. Bad energy, energy blockages, and other energetic problems were said to be the cause of many illnesses. TT practitioners “massage” the human energy field, not through actual physical massage of muscles, tendons, and tissues (which has been shown to have therapeutic value in reducing muscular tension and thereby stress), but by waving their open palms just above the skin. This is touchy feely, without the touchy. A professional nurse friend of mine named Linda Rosa became alarmed at the outrageous claims being made on behalf of TT, as well as the waste of limited time and resources in nursing programs and medical schools on it. TT was even being employed in hospitals and operating theaters as a legitimate form of treatment. In September 1994, the U.S. military granted $355,225 to the University of Alabama at Birmingham Burn Center for experiments to determine if TT could heal burned skin.

One day Linda was watching a videotape of TT therapists practicing their trade when her ten-year-old daughter, Emily, got an idea for her fourth-grade science project. “I was talking to my mom about a color separation test with M&Ms,” Emily explained. As she watched the TT tape, she reports, “I was nearly mesmerized by seeing nurses like my mom waving their hands through the air. I asked her if I could test TT instead (and just eat the M&Ms).” Of course, Emily had neither the time nor the resources to carry out a sophisticated epidemiological study on a large population of patients to determine if they got well or not using TT. Emily just wanted to know if the TT practitioners could actually detect the so-called human energy field. It is obvious that in waving a hand above someone’s body one might imagine sensing an energy field. But could it be detected under blind conditions?

Emily’s experiment was brilliantly simple. She set up a card table with an opaque cardboard shield dividing it into two halves, with Emily sitting on one side and the TT practitioner on the other side. Emily cut two small holes at the bottom of the board, through which the TT subject put both arms, palms up. Emily then flipped a coin to determine which of the subject’s hands she would hold her hand above. Figure I.12 shows a diagram of Emily’s experimental protocol.

With this simple design we can see that, just by guessing, anyone being tested should be able to detect Emily’s hand 50 percent of the time. Assuming that the TT practitioners can really detect a human energy field, they should do better than chance. (In fact, most said they could detect it 100 percent of the time, and in preexperimental trials without the cardboard, they had no problem sensing Emily’s energy field.) Emily was able to test twenty-one Therapeutic Touch therapists, whose experience in practicing TT ranged from one to twenty-one years. Out of a total of 280 trials, the TT test subjects got 123 correct hits and 157 misses, a hit rate of 44 percent, below chance!
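For readers who want to check the arithmetic, here is a minimal sketch in Python. It uses only the totals quoted above (280 trials, 123 hits) and assumes, as a simplification, that every trial is an independent guess with a 50 percent chance of success. That assumption, the helper names, and the choice of a simple binomial model are illustrative only; this is not the analysis used in the published article.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float = 0.5) -> float:
    """Probability of exactly k hits in n independent trials with hit chance p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def prob_at_least(hits: int, trials: int, p: float = 0.5) -> float:
    """Probability of scoring `hits` or more by pure guessing."""
    return sum(binomial_pmf(k, trials, p) for k in range(hits, trials + 1))


def prob_at_most(hits: int, trials: int, p: float = 0.5) -> float:
    """Probability of scoring `hits` or fewer by pure guessing."""
    return sum(binomial_pmf(k, trials, p) for k in range(0, hits + 1))


# Totals reported in the text.
TRIALS = 280
HITS = 123
MISSES = TRIALS - HITS

hit_rate = HITS / TRIALS               # observed fraction of correct answers
expected_hits = TRIALS * 0.5           # what coin-flip guessing predicts on average
p_at_least = prob_at_least(HITS, TRIALS)  # chance a pure guesser does this well or better
p_at_most = prob_at_most(HITS, TRIALS)    # chance a pure guesser does this poorly or worse

print(f"misses: {MISSES}")
print(f"hit rate: {hit_rate:.1%}")
print(f"hits expected by chance: {expected_hits:.0f}")
print(f"P(at least {HITS} hits by guessing): {p_at_least:.3f}")
print(f"P(at most {HITS} hits by guessing): {p_at_most:.3f}")
```

Under these assumptions, a pure guesser would be expected to average 140 hits, and would almost always match or beat a score of 123. Whatever the practitioners were sensing, the totals give no sign that it helped them locate Emily’s hand.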

Figure I.12. Emily Rosa puts Therapeutic Touch to the test.

We published preliminary results of Emily’s experiment in a 1996 issue of Skeptic magazine, but our readers were already skeptical of Therapeutic Touch so this generated no great controversy. But in the April 1, 1998, issue of the prestigious Journal of the American Medical Association Emily’s complete results were published in a peer-reviewed scientific article, and suddenly Emily found herself on the Today Show, Good Morning America, all the nightly news shows, NPR, UPI, CNN, Reuters, the New York Times, the Los Angeles Times, and many more (over a hundred distinct media stories about Emily and her experiment appeared within weeks). We brought Emily to Caltech to present her with our Skeptic of the Year award, where she was also recognized by the Guinness Book of World Records as the youngest person ever to be published in a major scientific journal.

Did Emily prove that Therapeutic Touch is a sham therapy? Well, it depends on how her results are placed in a larger context. Emily did not directly test whether people are healed or not using TT, so we can only indirectly make this inference. But it is obvious that if TT practitioners cannot even detect the so-called human energy field, how can they possibly be “massaging” it for healing purposes? In any case, the “Emily Event,” as it has now become known among skeptics, serves as a case study in how science can be conducted simply and cheaply (Emily spent under $10 for her entire project), and can get to the answer of an important question.

We have seen that science is a great Baloney Detection Kit. But can it identify nonbaloney? Can it find real patterns? Of course. Smoking causes lung cancer—no question about it. HIV causes AIDS—almost no one doubts it. Earth goes around the sun. Earthquakes are caused by plate tectonics and continental drift. Plants get their energy from the sun through photosynthesis, and we get our energy by eating plants and animals that eat plants. This much is true, and much more. Yet there are true patterns that are counterintuitive. Quantum physics is one of these. It’s weird, really weird. Electrons go around the nucleus of an atom like a planet goes around the sun—we’ve all seen the schematic diagram; only it isn’t true. Electrons are quite real, like itty-bitty planets, but they are everywhere and nowhere in their orbits at the same time—identify their position and you cannot know their momentum; track their momentum and you have lost their position (this is the so-called uncertainty principle). And electrons don’t exactly go “around” the nucleus; they form a “shell” described by a wave equation. And sometimes the electrons jump from inner orbits to outer orbits—and vice versa—without passing in between. How can that be? It seems impossible, but it is true nonetheless. A century of experimental evidence has been accumulated, often by physicists who were hard-core skeptics, demonstrating beyond almost any doubt that quantum physics is real.

Evolution is another of those scientific truths with which a number of people still struggle. Leaving out the religious objections (which are irrelevant in a scientific discussion), the counterintuitive nature of evolution is caused by two problems: (1) our propensity to favor the experimental sciences (e.g., physics) over the historical sciences (e.g., archaeology) as reliable sources of truth claims, and (2) our intuitive grasp of time frames on the order of months, years, and decades of a human life, in contrast to the history of life that spans tens and hundreds of thousands of years, and even millions, tens of millions, and hundreds of millions of years. These time frames are so vast that they are literally inconceivable. So let’s consider a very conceivable problem: explaining the great diversity of “man’s best friend,” dogs. There are hundreds of breeds of dogs, ranging in size from Chihuahuas to Great Danes, and varying in behavior from poodles to pit bulls. Where did all this canine variation come from? In 2003 geneticists found out—comparing the DNA of dogs and wolves, they determined that every breed of dog from everywhere on Earth came from a single population of wolves living in China roughly fifteen thousand years ago. Imagine that—in that short span of time (very short on an evolutionary timescale, speeded up, of course, by human intervention through artificial selection) we have this almost unimaginable degree of variation in dogs. It doesn’t seem possible, but there it is.

The human evolution story is much the same. In the May 11, 2001, issue of the journal Science, in a report on the “African Origin of Modern Humans in East Asia,” a team of Chinese and American geneticists sampled 12,127 men from 163 Asian and Oceanic populations, tracking three genetic markers on the Y chromosome. What they discovered was that every one of their subjects carried a mutation at one of these three sites that can be traced back to a single African population some 35,000 to 89,000 years ago. The finding corroborates earlier mitochondrial DNA (mtDNA) studies, along with fossil evidence, that every one of us can trace our ancestry to Africa not so very long ago. Looking around at the incredible diversity of humans on the globe, this seems nearly impossible. But the evidence supports it, and when it does scientists change their minds. One of the chief proponents of the theory that human groups (so-called races) evolved independently in separate regions (after a much earlier exodus out of Africa), the University of California, Berkeley, anthropologist Vince Sarich, after examining the new data, confessed: “I have undergone a conversion—a sort of epiphany. There are no old Y chromosome lineages [in living humans]. There are no old mtDNA lineages. Period. It was a total replacement.” In other words, in a statement that takes great intellectual courage to make, Sarich admitted that he was wrong.

Although scientists should probably make such admissions more often than they do, as a profession we are more open to error admission than most others. Can you imagine the shocked response a leading Democratic politician would hear if he made a statement like the following? “After examining the evidence for the claim that more gun control laws will reduce gun violence, I have changed my position. I am now against gun control.” Or think of the stunned silence one might hear in a church if a preacher uttered this sentence: “I have carefully considered all the arguments for and against God’s existence. If I am to be intellectually honest I must confess that the evidence for God is no better than the evidence against, so I hereby declare myself an agnostic.” Every once in a great while such conversions happen, but they are so rare as to be newsworthy.

As Bacon so adequately argued four centuries ago, and as cognitive psychologists have experimentally demonstrated over the past couple of decades, the facts never speak just for themselves. Science is a very human enterprise. Nevertheless, like democracy, it is the best system we have, so we should use it to its fullest extent and apply it wherever we can in our quest to know why.

This précis on knowing and not knowing introduces this volume, a collection of fourteen research articles and personal essays that I have written over the past decade—most (but not all) published in various journals and magazines (but none appearing in my other books)—about how science operates under pressure, during controversies, under siege, and on the precipice of the known as we peer out in search of a ray of light to illuminate the unknown. I have grouped them into four general sections, each of which embodies science on the edge between the known and the unknown, in that fuzzy shadowland that offers a unique perspective on both knowing and not knowing, and how science is the best tool we have to discern which is which.

Part I, “Science and the Virtues of Not Knowing,” begins with chapter 1, “Psychic for a Day,” a first-person account of an amusing and enlightening experience I had spending a day as an astrologer, tarot card reader, palm reader, and psychic medium talking to the dead. I did this on invitation from Bill Nye (the “science guy”) for an episode of his television series, Eyes of Nye. With almost no experience in any of these psychic modalities, I prepared myself the night before and on the plane flying to the studio, then improvised live-to-tape in studio, managing to completely convince my sitters that I had genuine psychic powers, reducing several subjects to tears when we “connected” to lost loved ones. It was at this point that I realized the emotional impact that psychics can have on believers, and the immorality of the entire process and industry that has built up around these claims.

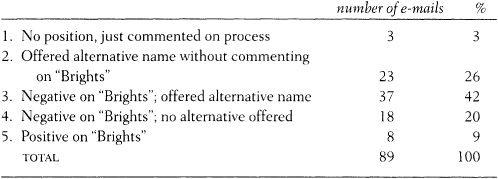

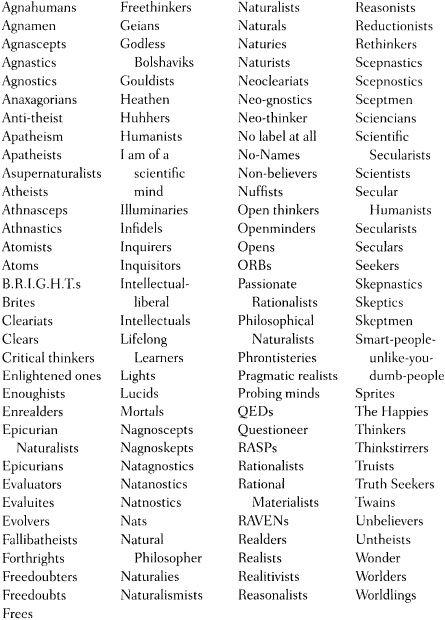

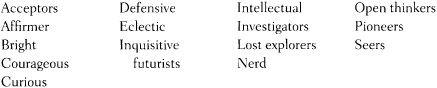

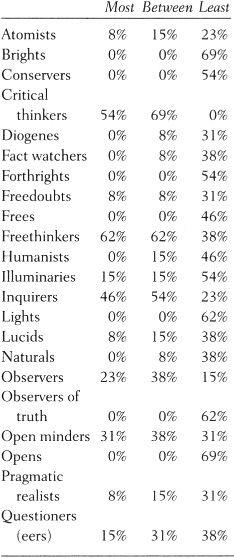

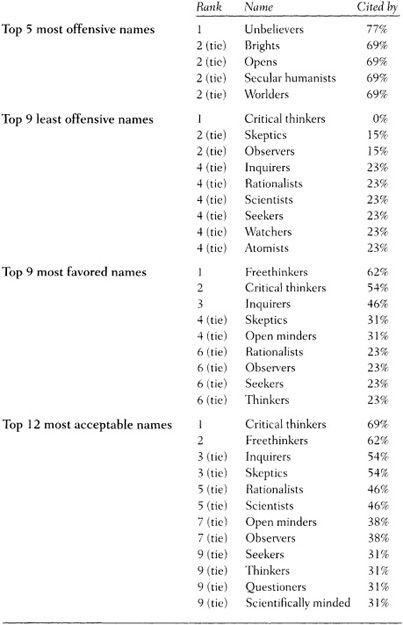

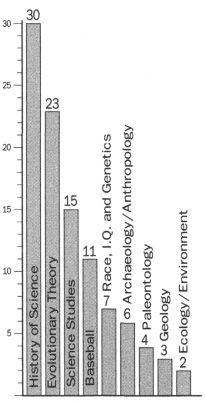

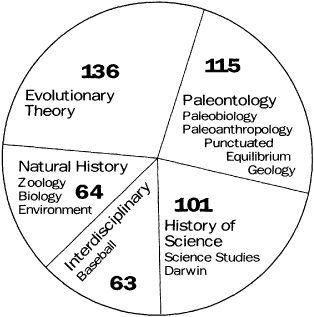

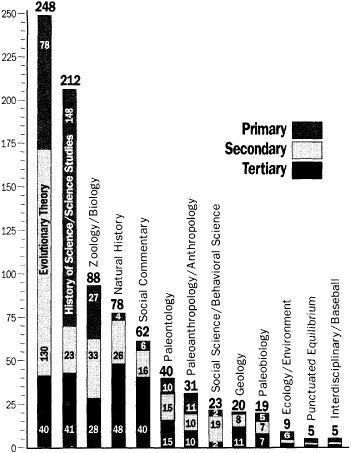

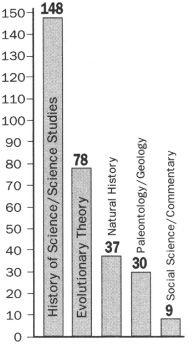

Chapter 2, “The Big ‘Bright’ Brouhaha,” presents in narrative form the results of an empirical study I conducted (I include data charts as well) on the skeptical movement and the attempt to unite all nonbelievers, agnostics, atheists, humanists, and free thinkers under one blanket label—The Brights—and why, in my opinion, all such attempts will ultimately fail. The movement began at the Atheist Alliance International convention in 2003, and I was the first to sign the petition. The “Bright” movement gained momentum when I, along with such luminaries as the evolutionary theorist Richard Dawkins and the philosopher Daniel Dennett, came out of the skeptical closet through opinion editorials. The reaction was swift and merciless—almost no one, including and especially nonbelievers, agnostics, atheists, humanists, and free thinkers, liked the name, insisting that its elitist implications, along with the natural antonym “dim,” would doom us as a movement. The entire episode afforded a real-time analysis of how social movements evolve.

Chapter 3, “Heresies of Science,” presents six heretical ideas that promise to shake up everything we have come to believe about the world: The Universe Is Not All There Is, Time Travel Is Possible, Evolution Is Not Progressive, Oil Is Not a Fossil Fuel, Cancer Is an Infectious Disease, and The Brain and Spinal Cord Can Regenerate. With each heresy, I consider the belief it is challenging, the alternative it offers, and the likelihood that it is correct. Since this is cutting-edge science, my conclusions are necessarily provisional, as in most of these claims the data are still coming in and resolution is not final. In the case of the first two claims, it may be some time before we can detect other universes, and unless we receive visitors from the future, time machines will likely remain the staple of science fiction for some time to come (pardon the pun). Evidence is mounting, however, in support of the fact that evolution is purposeless (and designless as well, at least from the top down—evolution is a bottom-up designer), that some forms of cancer are caused by infectious viral agents, and that parts of the brain and spinal cord can regenerate under certain limited conditions. As for oil not being a fossil fuel, here we would be wise to be skeptical of the oil skeptics. Even though the proponent of this claim is a renowned scientist, reputation in science only gets you a hearing. You also need reliable data and sound theory.

Chapter 4, “The Virtues of Skepticism,” is a brief history of skepticism and doubt, the relationship between science and skepticism, and the role of skeptics in society. This essay began as a tribute to my friend and colleague—the venerable Martin Gardner, one of the fountainheads of the modern skeptical movement—but, as I try to do in nearly all of my writings, I also impart larger lessons for what we can learn about how science works through examining how it doesn’t work. In this case, I examine skepticism itself, with some embarrassment for my own lack of initiative and insight for not doing this in 1992 when we founded the Skeptics Society and Skeptic magazine. In selecting these names for the society and magazine, one would imagine that we would have carefully thought out their linguistic and historical meaning and usages, but it was not until the late Stephen Jay Gould wrote the foreword to my book Why People Believe Weird Things, in which he discussed the meaning of skepticism, that I got to thinking about what precisely it is we are doing when we are being skeptical.

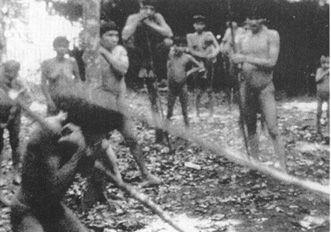

Part II, “Science and the Meaning of Body, Mind, and Spirit,” begins with chapter 5, “Spin-Doctoring Science,” by demonstrating how science gets spin-doctored during explosive controversies, such as the anthropology wars over the true nature of human nature. This is an in-depth analysis of the fight among scientists over the proper interpretation of the Yanomamö people of Amazonia. Are they the “fierce” people, as one anthropologist called them, in constant battles with one another over precious resources, or are they the “erotic” people, as another anthropologist labeled them, passionately loving and sexual? The answer is yes, the Yanomamö are the erotic fierce people, or the fiercely erotic people. They are, in fact, people, just like us in the sense of possessing a full range of human emotions, and a complete suite of human traits, together comprising our nature as Homo sapiens.

In chapter 6, “Psyched Up, Psyched Out,” I utilize my experiences in the 1980s as a professional bicycle racer, particularly my founding of and participation in the three-thousand-mile nonstop transcontinental Race Across America—the ultimate test in the sport of ultramarathon cycling—to consider the power of the mind in sports, what we know and do not know about its role in athletic performance, and what this tells us about the interaction between mind and body. Since I was an active participant in the race, hell-bent on winning as much as the next guy, I entered the fray not as an objective scientist curious about whether this or that diet or training technique or new technology worked, but as a competitor in search of an edge. The farrago of nonsense I encountered along the way ultimately led to my becoming a skeptic because athletes are especially superstitious and vulnerable to outlandish claims, and I was among the gullible at this stage in my life.

Chapter 7, “Shadowlands,” is the most personal commentary in the book, as I recount the story of a ten-year battle with cancer I helped my mother to wage against brain tumors (to which she eventually succumbed), and what I learned about the limitations of medical science, the hubris of medical diagnosis and prognosis, the lure of alternative medicine, and the interface of science and spirituality. I was with my mom every step of the way, from initial diagnosis of depression in a psychiatrist’s office, to CAT scans and MRIs, to the surgery waiting room (numerous times), to her final hospital stay, nursing home, and, finally, when she breathed her last. How even a hardened skeptic deals with death, particularly that of someone as close as a parent, demonstrates, I hope, that skepticism is more than a scientific way of analyzing the world; it is also a humane way of life.

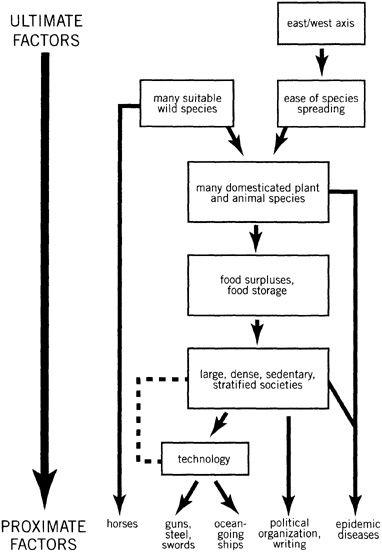

Part III, “Science and the (Re)Writing of History,” begins with chapter 8, “Darwin on the Bounty,” by demonstrating how science can be put to good use to solve a historical mystery—what was the true cause of the mutiny on the Bounty—and how evolutionary theory provides an even deeper causal analysis of human behavior under strain. Historians operate at a proximate level of analysis, searching for immediate causes that triggered a particular historical event. Evolutionary theorists operate at an ultimate level of analysis, searching for deeper causes that underlie proximate causes. In this case I am not disputing what historians have determined happened to the crew of the Bounty, and what in the weeks and months preceding the rebellion led them to take such drastic action against their commander. What I am looking for is an explanation in the hearts of the men, so to speak; not just how, but why, in the sense of what in human nature could lead to such actions. As such, in this study I am extending what I have done in my previous work as a professional historian. In Denying History, I analyzed the claims of the Holocaust deniers and demonstrated with rigorous science how we know the Holocaust happened. In my biography of Alfred Russel Wallace, In Darwin’s Shadow, I employed several theories and techniques from the social sciences to ground my subject in a deeper level of understanding of human behavior. I am not attempting to revolutionize the practice of history, so much as I am trying to add to it the tried-and-true methods of science, so often neglected (because of the balkanization of academic departments) by historians.

Chapter 9, “Exorcising Laplace’s Demon,” applies the modern sciences of chaos and complexity to human history, showing how meaningful patterns can be teased out of the apparent chaos of the past. I have been thinking about how to apply chaos and complexity theory to human history since those sciences came on the scene in the late 1980s, while I was earning my doctorate in history. I have always been interested in the philosophy of history. Our flagship journal is History and Theory, and that title says a lot. History is the data of the past. But data without theory are like bricks without a blueprint to transmogrify them into a building. It is theory that binds historical facts into a cohesive and meaningful pattern that allows us to draw deeper conclusions about why (in the deeper sense) things happen as they do.

In a related analysis, chapter 10, “What If?,” I employ the always enjoyable game of “what if” history, suggesting that scientists, too, can play this game to useful ends to explore what might have been and what had to be. Here I am most emphatically doing history not just for history’s sake, although that is part of it, but for us. I believe that history is primarily for the present, secondarily for the future, and tertiarily for the past. It is great fun to ponder how the history of the United States might have unfolded after 1863 had General McClellan not received ahead of time General Lee’s plans for the invasion of the North. Lee most likely would have been victorious at Antietam/Sharpsburg, which would probably have led the British and French to recognize the South as a sovereign nation, which would have encouraged them to aid the South in breaking the North’s blockade of ships bringing valuable resources from Europe, which possibly would have led to the South’s ability to carry out war for many more years to come, which most probably would have led to Northern war weariness and perhaps a congressional decision to let their Southern brothers and sisters secede from the union. Such speculation, however, is far more than merely amusing; it serves as an object lesson in historical causality: if this, then that; if not this, then not that. And this and that object lesson may serve us well for both present and future.

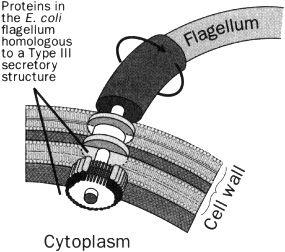

Chapter 11, “The New New Creationism,” picks up the anti-evolution movement in America with the mid-1990s development of “Intelligent Design Theory,” or ID, in which these quasi-scientific thinkers shed the cloak of the old creationists from the 1960s and 1970s who demanded a literal biblical interpretation of scientific findings, and that of the new creationists of the 1980s who were more flexible in adapting the findings of science but still insisted on a divine hand in nature. The IDers are more sophisticated in their thinking, more professional in their presentations and publications, and more politically successful in their ability to gain a public hearing for their cause. That cause, however, is the same as it has always been: to promote a Judeo-Christian biblical cosmology and world-view, to defuse any perceived threats to their religion (such as science and evolutionary theory), and to tear down the wall separating church and state in order to get their doctrines taught in public schools, including and especially public school science classes.

Chapter 12, “History’s Heretics,” is the oldest essay in the book, written initially while in graduate school in the late 1980s, and redacted over the years as I thought more and more about who and what mattered most in history. The germination of this project, in fact, dates back to the early 1970s when I was an undergraduate and one of my professors, Dr. Richard Hardison, introduced me to a book titled The 100, by Michael Hart. In the book an attempt is made to rank the top hundred people in history by their influence and importance (not just fame or infamy). Ever since then I thought about this as I studied the great (and not so great) people of the past, and when the millennium came and endless commentaries were published to venerate (or scorn) those of the past thousand years, I revised the piece yet again. Through this survey of attempts to rank the people and events most influential in our past, I devised my own ranking of who and what mattered most.

The book ends with Part IV, “Science and the Cult of Visionaries,” including chapter 13, “The Hero on the Edge of Forever,” an examination of Gene Roddenberry, the Star Trek empire, and the continuing role of the hero in science and science fiction. This is the most indulgent essay in the book because I was a fan of Star Trek from September 8, 1966, the date of the first airing of the first episode, a date imprinted on my psyche because it was also my twelfth birthday. But Gene Roddenberry was a humanist, which I later grew into after my stint as an evangelical Christian, and his vision of the future was far grander than any I had previously encountered. A science fiction author once explained to me that one can get away with a lot more speculation and controversy the further in the future one’s narrative is set. Scientists are fairly skeptical and hard on shows like X-Files, because it takes place in the all-too-familiar present. But when Roddenberry put his characters into the twenty-third century, scientists were far more forgiving, and viewers glommed on to this vision of the way things could be. Still, this subject would be too self-indulgent if I had not included an analysis of what we can learn about history and social systems from an in-depth analysis of one episode of the original series—my favorite, of course—“The City on the Edge of Forever”—and what it tells us about the role of contingency and chance in our lives.

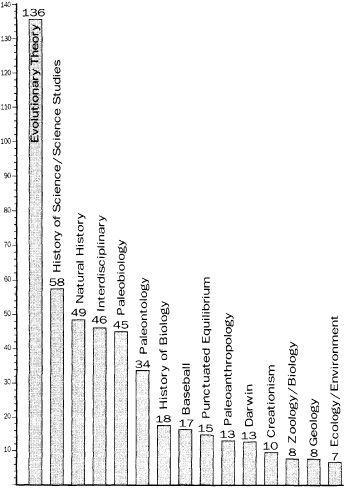

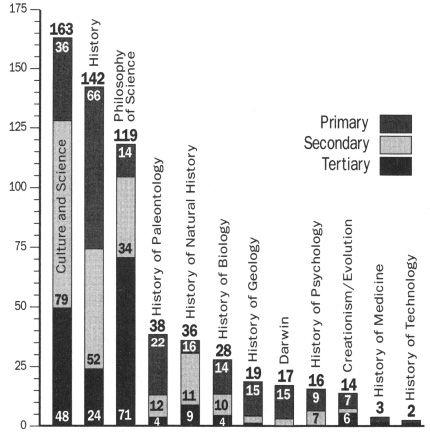

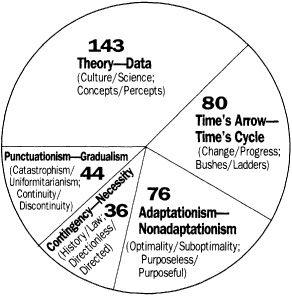

Finally, chapter 14, “This View of Science,” is a comprehensive analysis of the life works of the evolutionary theorist Stephen Jay Gould, and how science, history, and biography come together into one enterprise of knowledge, understanding, and wisdom. Steve Gould was my hero, colleague, and eventually a friend, who was (and will continue to be, in my opinion) one of the most influential thinkers of our time. More than just a world-class paleontologist and world-renowned scientist, Steve was a brilliant synthesizer of data and theory—his own and others’—as well as one of the most elegant essayists of our time, perhaps of all time. Although well grounded in history, as we all should be, Gould’s ideas were always on the edge of science. The primary lesson of this book is particularly well expressed in one of Gould’s (and my own) favorite quotes from Charles Darwin: “How odd it is that anyone should not see that all observation must be for or against some view if it is to be of any service!”

PART I

SCIENCE AND THE VIRTUES OF NOT KNOWING

1

Psychic for a Day

Or, How I Learned Tarot Cards, Palm Reading, Astrology, and Mediumship in Twenty-four Hours

ON WEDNESDAY, JANUARY 15, 2003, I filmed a television show with Bill Nye in Seattle, Washington, for a new PBS science series entitled Eyes of Nye. This series is an adult-oriented version of Bill’s wildly successful hundred-episode children’s series Bill Nye the Science Guy. This thirty-minute segment was on psychics and talking to the dead. Although I have analyzed the process and written about it extensively in Skeptic, Scientific American, and How We Believe, and on www.skeptic.com, I have had very little experience in actually doing psychic readings. Bill and I thought it would be a good test of the effectiveness of the technique and the receptivity of people to it to see how well I could do it armed with just a little knowledge.

Although the day of the taping was set weeks in advance, I did absolutely nothing to prepare until the day before. This made me especially nervous because psychic readings are a form of acting, and good acting takes talent and practice. And I made matters even harder on myself by convincing Bill and the producers that if we were going to do this we should use a number of different psychic modalities, including tarot cards, palm reading, astrology, and psychic mediumship, under the theory that these are all “props” used to stage a psychodrama called cold reading (reading someone “cold” without any prior knowledge). I am convinced more than ever that cheating (getting information ahead of time on subjects) is not a necessary part of a successful reading.

I read five different people, all women that the production staff had selected and about whom I was told absolutely nothing other than the date and time of their births (in order to prepare an astrological chart). I had no contact with any of the subjects until they sat down in front of me for the taping, and there was no conversation between us until the cameras were rolling. The setting was a soundstage at KCTS, the PBS affiliate station in Seattle. Since soundstages can have a rather cold feel to them, and because the setup for a successful psychic reading is vital to generating receptivity in subjects, I instructed the production staff to set up two comfortable chairs with a small table between them, with a lace cloth covering the table and candles on and around the table, all sitting on a beautiful Persian rug. Soft colored lighting and incense provided a “spiritual” backdrop.

The Partial Facts of Cold Reading

My primary source for all of the readings was Ian Rowland’s insightful and encyclopedic The Full Facts Book of Cold Reading (now in a third edition available at www.ian-rowland.com). There is much more to the cold reading process than I understood before undertaking to read this book carefully with an eye on performing rather than just analyzing. (Please keep in mind that what I’m describing here is only a small sampling from this comprehensive compendium by a professional cold reader who is arguably one of the best in the world.)

Rowland stresses the importance of the prereading setup to prime the subject into being sympathetic to the cold reading. He suggests—and I took him up on these suggestions—adopting a soft voice, a calm demeanor, and sympathetic and nonconfrontational body language: a pleasant smile, constant eye contact, head tilted to one side while listening, and facing the subject with legs together (not crossed) and arms unfolded. I opened each reading by introducing myself as Michael from Hollywood, calling myself a “psychic intuitor.” I explained that my “clients” come to see me about various things that might be weighing heavy on their hearts (the heart being the preferred organ of New Age spirituality), and that as an intuitor it was my job to use my special gift of intuition. I added that everyone has this gift, but that I have improved mine through practice. I said that we would start general and then get more focused, beginning with the present, then glancing back to the past, and finally taking a glimpse of the future.

I also noted that we psychics cannot predict the future perfectly—setting up the preemptive excuse for later misses—by explaining how we look for general trends and “inclinations” (an astrological buzzword). I built on the disclaimer by adding a touch of self-effacing humor meant also to initiate a bond between us: “While it would be wonderful if I was a hundred percent accurate, you know, no one is perfect. After all, if I could psychically divine the numbers to next week’s winning lottery I would keep them for myself!” Finally, I explained that there are many forms of psychic readings, including tarot cards, palm reading, astrology, and the like, and that my specialty was . . . whatever modality I was about to do with that particular subject.

Since I do not do psychic readings for a living, I do not have a deep backlog of dialogue, questions, and commentary from which to draw, so I outlined the reading into the following themata that are easy to remember (that is, these are the main subject areas that people want to talk about when they go to a psychic): love, health, money, career, travel, education, ambitions. I also added a personality component, since most people want to hear something about their inner selves. I didn’t have time to memorize all the trite and trivial personality traits that psychics serve their marks, so I used the Five Factor Model of personality, also known as the “Big Five,” that has an easy acronym of OCEAN: openness to experience, conscientiousness, extroversion, agreeableness, and neuroticism. Since I have been conducting personality research with my colleague Frank Sulloway (primarily through a method we pioneered of assessing the personality traits of historical personages such as Charles Darwin, Alfred Russel Wallace, and Carl Sagan through the use of expert raters), it was easy for me to riffle through the various adjectives used by psychologists to describe these five personality traits. For example: openness to experience (fantasy, feelings, likes to travel), conscientiousness (competence, order, dutifulness), agreeableness (tender-minded versus tough-minded), extroversion (gregariousness, assertiveness, excitement seeking), and neuroticism (anxiety, anger, depression). Since there is sound experimental research validating these traits, and I have learned through Sulloway’s research how they are influenced by family dynamics and birth order, I was able to employ this knowledge to my advantage in the readings, including (with great effect) nailing the correct birth order (firstborn, middle-born, or later-born) of each of my subjects!

I began with what Rowland calls the “Rainbow Ruse” and “Fine Flattery,” and what other mentalists more generally call a Barnum reading (offering something for everyone, as P.T. always did). The components of the following reading come from various sources; the particular sequential arrangement is my own. I opened my readings with this general statement:

You can be a very considerate person, very quick to provide for others, but there are times, if you are honest, when you recognize a selfish streak in yourself. I would say that on the whole you can be a rather quiet, self-effacing type, but when the circumstances are right, you can be quite the life of the party if the mood strikes you.

Sometimes you are too honest about your feelings and you reveal too much of yourself. You are good at thinking things through and you like to see proof before you change your mind about anything. When you find yourself in a new situation you are very cautious until you find out what’s going on, and then you begin to act with confidence.

What I get here is that you are someone who can generally be trusted. Not a saint, not perfect, but let’s just say that when it really matters this is someone who does understand the importance of being trustworthy. You know how to be a good friend.

You are able to discipline yourself so that you seem in control to others, but actually you sometimes feel somewhat insecure. You wish you could be a little more popular and at ease in your interpersonal relationships than you are now.

You are wise in the ways of the world, a wisdom gained through hard experience rather than book learning.

According to Rowland—and he was spot-on with this one—the statement “You are wise in the ways of the world, a wisdom gained through hard experience rather than book learning” was flattery gold. Every one of my subjects nodded furiously in agreement, emphasizing that this statement summed them up to a tee.

After the general statement and personality assessment, I went for specific comments lifted straight from Rowland’s list of high probability guesses. These include items found in the subject’s home:

A box of old photographs, some in albums, most not in albums

Old medicine or medical supplies out of date

Toys, books, mementos from childhood

Jewelry from a deceased family member

Pack of cards, maybe a card missing

Electronic gizmo or gadget that no longer works

Notepad or message board with missing matching pen

Out-of-date note on fridge or near the phone

Books about a hobby no longer pursued

Out-of-date calendar

Drawer that is stuck or doesn’t slide properly

Keys that you can’t remember what they go to

Watch or clock that no longer works

And peculiarities about the person:

Scar on knee

Number 2 in the home address

Childhood accident involving water

Clothing never worn

Photos of loved ones in purse

Wore hair long as a child, then shorter haircut

One earring with a missing match

I added one of my own to great effect: “I see a white car.” All of my subjects were able to find a meaningful connection to a white car. As I was reading this list on the flight to Seattle the morning of the reading, I was amazed to discover how many flight attendants and people around me validated them.

Finally, Rowland reminds his ersatz psychics that if the setup is done properly people are only too willing to offer information, especially if you ask the right questions. Here are a few winners:

“Tell me, are you currently in a long-term relationship, or not?”

“Are you satisfied in terms of your career, or is there a problem?”

“What is it about your health that concerns you?”

“Who is the person who has passed over that you want to try to contact today?”

While going through the Barnum reading I remembered to pepper the commentary with what Rowland calls “incidental questions,” such as:

“Now why would that be?”

“Is this making sense to you?”

“Does this sound right?”

“Would you say this is along the right lines for you?”

“This is significant to you, isn’t it?”

“You can connect with this, can’t you?”

“So who might this refer to, please?”

“What might this link to in your life?”

“What period of your life, please, might this relate to?”

“So tell me, how might this be significant to you?”

“Can you see why this might be the impression I’m getting?”

With this background, all gleaned from a single day of intense reading and note taking, I was set.

The Tarot Card Reading

My first subject was a twenty-one-year-old woman, for whom I was to do a tarot card reading. To prepare myself I went to the Alexandria II New Age Bookstore in Pasadena, California, and bought a “Haindl Tarot Deck” ($16), created by Hermann Haindl and produced by U.S. Games Systems in Stamford, Connecticut. I then read through the little pamphlet that comes with it (itself glossed from a two-volume narrative that presumably gives an expanded explanation of each card). It is a sleek, elegantly illustrated deck, each card of which is replete with an astrological symbol, a rune sign, a Hebrew letter, I Ching symbols, and lots of mythic characters from history. For example, the Wheel of Fortune card description reads:

The wheel is set against a field of stars symbolizing the cosmos. Below, looking upward, is the Mother, the Earth. At the upper left is the Sky Father, Zeus. At the upper right is an androgynous child. The child, with its wizened face, represents humanity and our ancestors. Inside the Wheel, the mushrooms symbolize luck, the snake, rebirth, the eye, time, the dinosaur, all things lost in the turning of time. Divinatory meanings: Change of circumstances. Taking hold of one’s life. Grabbing hold of fate. Time to take what life has given you.

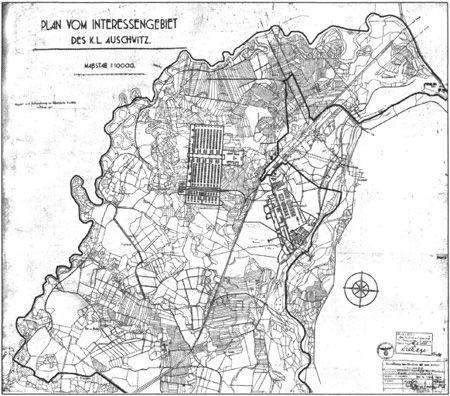

For dramatic effect I added the Death card (figure 1.1) to my presentation.

The image of the boat belongs to birth as well as to death; the baby’s cradle originally symbolized a boat. The trees and grass signify plants, the bones, minerals, the birds, the animal world, and the ferryman, the human world. The peacock’s eye in the center signifies looking at the truth in regard to death. The bird also symbolizes the soul and the divine potential of a person. Divinatory meanings: The Death card rarely refers to physical death. Rather, it has to do with one’s feelings about death. Psychologically, letting go. New opportunities.

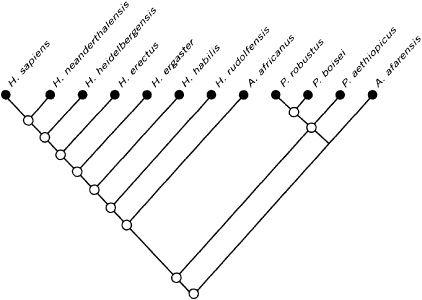

At a total of seventy-eight cards there was no way I was going to memorize all the “real” meanings and symbols, so the night before I sat down with my family and read through the instruction manual and we did a reading together, going through what each of the ten cards we used is supposed to mean. My eleven-year-old daughter, Devin, then quizzed me on them until I had them down cold. (This was, I think, done not just out of Devin’s desire to help her dad; it also had the distinct advantage of getting her out of doing her homework for the evening, plus gave me a taste of my own medicine of repetitive learning.) I used what is called the Hagall Spread (no explanation given as to who or what a Hagall is), where you initially lay out four cards in a diamond shape, then put three cards on top and three more on the bottom (figure 1.2). This is what the spread is supposed to indicate:

1. The general situation

2. Something you’ve done, or an experience you’ve had that has helped create the current situation

3. Your beliefs, impressions, and expectations, conscious or subconscious, of the situation

4. The likely result of the situation as things stand now

5. Spiritual history, how you’ve behaved, what you’ve learned

6. Spiritual task at this time, challenges and opportunities in the current situation

7. Metamorphosis, how the situation will change, and the spiritual tasks that will come to you as a result

8. The Helper. Visualize the actual person. This person gives you support

9. Yourself. You are expressing the qualities of the person shown on the card

10. The Teacher. This figure can indicate the demands of the situation, and also the knowledge that you can gain from the situation

Figure 1.1 (left). The Death card

Figure 1.2 (right). The Hagall Spread of tarot cards

By the time of the reading I had forgotten all of this, so I made up a story about how the center four cards represent the present, the top three cards represent the future, and the bottom three cards are the characters that are going to help you get to that future. It turns out that it doesn’t matter what story you make up, as long as it sounds convincing. I was glad, however, that I had memorized the meanings of the symbols and characters on the cards I used because my subject had previously done tarot card reading herself. (Since you are supposed to have the client shuffle the tarot cards ahead of time to put her influence into the deck, I palmed my memorized cards and then put them on top of the newly shuffled deck.)

Since this subject was my first reading I was a little stiff and nervous, so I did not stray far from the standard Barnum reading, worked my way through the Big Five personality traits fairly successfully (and from which I correctly guessed that she was a middle child between firstborn and last-born siblings), and did not hazard any of the high-probability guesses. Since she was a student I figured she was indecisive about her life, so I offered lots of trite generalities that would have applied to almost anyone: “you are uncertain about your future but excited about the possibilities,” “you are confident in your talents yet you still harbor some insecurities,” “I see travel in your immediate future,” “you strike a healthy balance between head and heart,” and so forth.

Tarot cards are a great gimmick because they provide the cold reader with a prop to lean on, something to reference and point to, something for the subject to ask about. I purposely put the Death card in the spread because that one seems to make people anxious (recall the Death card was in the news of late because the East Coast sniper of 2002 said that it influenced him to begin his killing spree). This gave me an opportunity to pontificate about the meaning of life and death, that the card actually represents not physical death but metaphorical death, that transitions in life are a time of opportunity—the “death” of a career and the “rebirth” of another career—and other such drivel. The bait was set and the line cast. I had only to wait for the fish to bite.

After each reading the producers conducted a short taped interview with the subject, asking them how they thought the reading went. This young lady said she thought the reading went well, that I accurately summarized her life and personality, but that there were no surprises, nothing that struck her as startling. She said she had experienced psychic readings before and that mine was fairly typical. I felt that the reading was mediocre at best. I was just getting started.

The Palm Reading

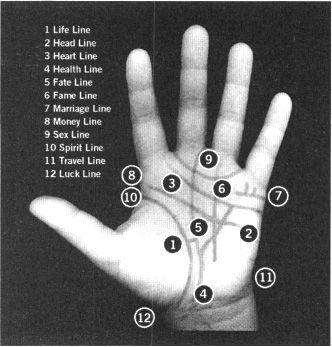

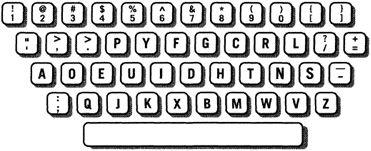

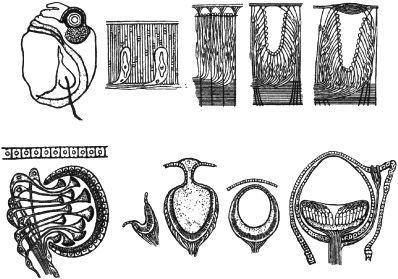

My second reading was on a young woman aged nineteen. Palm reading is the best of the psychic props because, as with the tarot cards, there is something specific to reference, but it has the added advantage of making physical contact with the subject. I could not remember what all the lines on a palm are supposed to represent, so while I was memorizing the tarot cards, my daughter did a Google image search for me and downloaded a palm chart (figure 1.3).

I mainly focused on the Life, Head, Heart, and Health lines, and for added effect threw in some blather about the Marriage, Money, and Fate lines. Useful nonsense includes:

If the Head and Life lines are connected, it means that there was an early dependence on family.

If the Head and Life lines are not connected, it means the client has declared independence early.

The degree of separation between the Head and Heart lines indicates the degree of dependence or independence between the head and the heart for making decisions.

The strength of the Head line indicates the thinking style—intuitive or rational.

Breaks in the Head line may mean there was a head injury, or that the subject gets headaches, or something happened to the head at some time in the subject’s life.

On one Web page I downloaded some material about the angles of the thumbs to the hand that was quite useful. You have the subject rest both hands palm down on the table, and then observe whether they are relaxed or tight and whether the fingers are close together or spread apart. This purportedly indicates how uptight or relaxed the subject is, how extroverted or introverted, how confident or insecure, etc. According to one palm reader a small thumb angle “reveals that you are a person who does not rush into doing things. You are cautious and wisely observe the situation before taking action. You are not pushy about getting your way.” A medium thumb angle “reveals that you do things both for yourself and for others willingly. You are not overly mental about what you are going to do, so you don’t waste a lot of time doing unnecessary planning for each job.” And a big thumb angle “reveals that you are eager to jump in and get things done right away. You do things quickly, confidently, and pleasurably because you like to take charge and get the job done.” Conveniently, you can successfully use any of these descriptions with anyone.

Figure 1.3. A palm reading chart

It turns out that you can tell the handedness of a person because the dominant hand is a little larger and more muscular. That gave me an opening to tell my mark, who was left-handed, that she was right-brain dominant, which means that she puts more emphasis on intuition than on intellect, that she is herself very intuitive (Rowland says that a great ruse is to flatter the subjects with praise about their own psychic powers), and that her wisdom comes more from real-world experience than traditional book learning. She nodded furiously in agreement.