THE ART OF CHOOSING

SHEENA IYENGAR

New York Boston

Everything begins with a story.

—Joseph Campbell

I was born in Toronto, one month early and during a blizzard that covered the city in snow and silence. The surprise and the low-visibility conditions that accompanied my arrival were portents, though they went unrecognized at the time. My mother, as a recent immigrant from India, was of two worlds, and she would pass that multiple identity on to me. My father was making his way to Canada, but had not yet arrived; his absence at my birth was a sign of the deeper absence yet to come. Looking back, I see all the ways in which my life was set the moment I was born into it. Whether in the stars or in stone, whether by the hand of God or some unnameable force, it was already written, and every action of mine would serve to confirm the text.

That is one story. Here’s another.

You never know, do you? It’s a jack-in-the-box life: You open it carefully, one parcel at a time, but things keep springing up and out. That’s how I came into the world—suddenly—a month before I was due, my father not even able to receive me. He was still in India, where my mother had always imagined she, too, would be. Yet, somehow, she had ended up in Toronto with me in her arms, and through the window she could see the snow whirling. Like those flakes of ice, we were carried to other places: Flushing, Queens, and then Elmwood Park, New Jersey. I grew up in enclaves of Sikh immigrants, who—like my parents—had left India but had also brought it with them. And so I was raised in a country within a country, my parents trying to re-create the life that was familiar to them.

Three days a week, they took me to the gurudwara, or temple, where I sat on the right side with the women, while the men clustered on the left. In accordance with the articles of the Sikh faith, I kept my hair long and uncut, a symbol of the perfection of God’s creation. I wore a kara, a steel bracelet, on my right wrist as a symbol of my resilience and devotion, and as a reminder that whatever I did was done under the watchful eyes of God. At all times, even in the shower, I wore a kachchha, an undergarment that resembled boxers and represented control over sexual desire. These were just some of the rules I followed, as do all observant Sikhs, and whatever was not dictated by religion was decided by my parents. Ostensibly, this was for my own good, but life has a way of poking holes in your plans, or in the plans others make for you.

As a toddler, I constantly ran into things, and at first my parents thought I was just very clumsy. But surely a parking meter was a large enough obstacle to avoid? And why did I need to be warned so frequently to watch where I was going? When it became obvious that I was no ordinary klutz, I was taken to a vision specialist at Columbia Presbyterian Hospital. He quickly solved the mystery: I had a rare form of retinitis pigmentosa, an inherited disease of retinal degeneration, which had left me with 20/400 vision. By the time I reached high school, I was fully blind, able to perceive only light.

A surprise today does prepare us, I suppose, for the ones still in store. Coping with blindness must have made me more resilient. (Or was I able to cope well because of my innate resilience?) No matter how prepared we are, though, we can still have the wind knocked out of us. I was 13 when my father died. That morning, he dropped my mother off at work in Harlem and promised to see a doctor for the leg pain and breathing problems he’d been having. At the doctor’s office, however, there was some confusion about his appointment time, and no one could see him right then. Frustrated by this—and already stressed for other reasons—he stormed out of the office and pounded the pavement, until he collapsed in front of a bar. The bartender pulled him inside and called for an ambulance, and my father was eventually taken to the hospital, but he could not survive the multiple heart attacks he had suffered by the time he got there.

This is not to say that our lives are shaped solely by random and unpleasant events, but they do seem, for better or worse, to move forward along largely unmapped terrain. To what extent can you direct your own life when you can see only so far and the weather changes quicker than you can say “Surprise!”?

Wait. I have still another story for you. And though it is mine, once again, I suspect that this time you will see your own in it, too.

In 1971, my parents emigrated from India to America by way of Canada. Like so many before them, when they landed on the shores of this new country and a new life, they sought the American Dream. They soon found out that pursuing it entailed many hardships, but they persevered. I was born into the dream, and I think I understood it better than my parents did, for I was more fluent in American culture. In particular, I realized that the shining thing at its center—so bright you could see it even if you, like me, were blind—was choice.

My parents had chosen to come to this country, but they had also chosen to hold on to as much of India as possible. They lived among other Sikhs, followed closely the tenets of their religion, and taught me the value of obedience. What to eat, wear, study, and later on, where to work and whom to marry—I was to allow these to be determined by the rules of Sikhism and by my family’s wishes. But in public school I learned that it was not only natural but desirable that I should make my own decisions. It was not a matter of cultural background or personality or abilities; it was simply what was true and right. For a blind Sikh girl otherwise subject to so many restrictions, this was a very powerful idea. I could have thought of my life as already written, which would have been more in line with my parents’ views. Or I could have thought of it as a series of accidents beyond my control, which was one way to account for my blindness and my father’s death. However, it seemed much more promising to think of it in terms of choice, in terms of what was still possible and what I could make happen.

Many of us have conceived and told our stories only in the language of choice. It is certainly the lingua franca of America, and its use has risen rapidly in much of the rest of the world. We are more likely to recognize one another’s stories when we tell them in this language, and as I hope to show in this book, “speaking choice” has many benefits. But I also hope to reveal other ways in which we live and tell our lives and form narratives that are more complex and nuanced than the simplified alternatives of Destiny and Chance that I have presented here.

“Choice” can mean so many different things and its study approached in so many different ways that one book cannot contain its fullness. I aim to explore those aspects of it that I have found to be most thought-provoking and most relevant to how we live. This book is firmly grounded in psychology, but I draw on various fields and disciplines, including business, economics, biology, philosophy, cultural studies, public policy, and medicine. In doing so, I hope to present as many perspectives as possible and to challenge preconceived notions about the role and practice of choice in our lives.

Each of the following seven chapters will look at choice from a different vantage point and tackle various questions about the way choice affects our lives. Why is choice powerful, and where does its power come from? Do we all choose in the same way? What is the relationship between how we choose and who we are? Why are we so often disappointed by our choices, and how do we make the most effective use of the tool of choice? How much control do we have over our everyday choices? How do we choose when our options are practically unlimited? Should we ever let others choose for us, and if yes, who and why? Whether or not you agree with my opinions, suggestions, and conclusions—and I’m sure we won’t always see eye to eye—just the process of exploring these questions can help you make more informed decisions. Choice, ranging from the trivial to the life-altering, in both its presence and its absence, is an inextricable part of our life stories. Sometimes we love it, sometimes we hate it, but no matter what our relationship to choice, we can’t ignore it. As you read this book, I hope you’ll gain insight into how choice has shaped your past, why it’s so important in the present, and where it can take you in the future.

What is freedom? Freedom is the right to choose: the right to create for oneself the alternatives of choice. Without the possibility of choice a man is not a man but a member, an instrument, a thing.

—Archibald MacLeish, Pulitzer Prize–winning American poet

I. SURVIVORSHIP

What would you do? If you were stranded at sea in a small inflatable raft, or stuck in the mountains with a broken leg, or just generally up the proverbial creek without a paddle, what do you suppose you would do? How long, say, would you swim before letting yourself drown? How long could you hold out hope? We ask these questions—over dinner, at parties, on lazy Sunday afternoons—not because we’re looking for survival tips but because we’re fascinated by our limits and our ability to cope with the kinds of extreme conditions for which there is little preparation or precedent. Who among us, we want to know, would live to tell the tale?

Take Steven Callahan, for example. On February 5, 1982, some 800 miles west of the Canary Islands, his boat, the Napoleon Solo, capsized in a storm. Callahan, then 30, found himself alone and adrift in a leaky inflatable raft with few resources. He collected rainwater for drinking and fashioned a makeshift spear for fishing. He ate barnacles and sometimes the birds attracted to the remains of those barnacles. To maintain his sanity, he took notes on his experience and did yoga whenever his weak body allowed it. Other than that, he waited and drifted west. Seventy-six days later, on April 21, a boat discovered Callahan off the coast of Guadeloupe. Even today, he is one of the only people to have lasted more than a month at sea on his own.

Callahan—an experienced mariner—possessed seafaring skills that were undoubtedly critical to his survival, but were these alone enough to save him? In his book Adrift: Seventy-six Days Lost at Sea, he describes his state of mind not long after the disaster:

About me lie the remnants of Solo. My equipment is properly secured, vital systems are functioning, and daily priorities are set, priorities not to be argued with. I somehow rise above mutinous apprehension, fear, and pain. I am captain of my tiny ship in treacherous waters. I escaped the confused turmoil following Solo’s loss, and I have finally gotten food and water. I have overcome almost certain death. I now have a choice: to pilot myself to a new life or to give up and watch myself die. I choose to kick as long as I can.

Callahan framed his situation, dire though it was, in terms of choice. A vast ocean stretched before him on all sides. He saw nothing but its endless blue surface, below which lurked many dangers. However, in the lapping of the waves and the whistle of the wind, he did not hear a verdict of death. Instead, he heard a question: “Do you want to live?” The ability to hear that question and to answer it in the affirmative—to reclaim for himself the choice that the circumstances seemed to have taken away—may be what enabled him to survive. Next time someone asks you, “What would you do?,” you might take a page from Callahan’s book and reply, “I would choose.”

Joe Simpson, another famous survivor, almost died during his descent from a mountain in the icy heights of the Peruvian Andes. After breaking his leg in a fall, he could barely walk, so his climbing partner, Simon Yates, attempted to lower him to safety using ropes. When Yates, who couldn’t see or hear Simpson, unwittingly lowered him over the edge of a cliff, Simpson could no longer steady himself against the face of the mountain or climb back up. Yates now had to support all of Simpson’s weight; sooner or later, he would no longer be able to do so, and both of them would plummet to their deaths. Finally, seeing no alternative, Yates cut the rope, believing he was sentencing his friend to death. What happened next was remarkable: Simpson fell onto a ledge in a crevasse, and over the next few days, he crawled five miles across a glacier, reaching base camp just as Yates was preparing to leave. In Touching the Void, his account of the incident, Simpson writes:

The desire to stop abseiling was almost unbearable. I had no idea what lay below me, and I was certain of only two things: Simon had gone and would not return. This meant that to stay on the ice bridge would finish me. There was no escape upwards, and the drop on the other side was nothing more than an invitation to end it all quickly. I had been tempted, but even in my despair I found that I didn’t have the courage for suicide. It would be a long time before cold and exhaustion overtook me on the ice bridge, and the idea of waiting alone and maddened for so long had forced me to this choice: abseil until I could find a way out, or die in the process. I would meet it rather than wait for it to come to me. There was no going back now, yet inside I was screaming to stop.

For the willful Callahan and Simpson, survival was a matter of choice. And as presented by Simpson, in particular, the choice was an imperative rather than an opportunity; you might squander the latter, but it’s almost impossible to resist the former.

Though most of us will never experience such extreme circumstances (we hope), we are nonetheless faced daily with our own imperatives to choose. Should we act or should we hang back and observe? Calmly accept whatever comes our way, or doggedly pursue the goals we have set for ourselves? We measure our lives using different markers: years, major events, achievements. We can also measure them by the choices we make, the sum total of which has brought us to wherever and whoever we are today. When we view life through this lens, it becomes clear that choice is an enormously powerful force, an essential determinant of how we live. But from where does the power of choice originate, and how best can we take advantage of it?

II. OF RATS AND MEN

In 1957 Curt Richter, a prolific psychobiology researcher at Johns Hopkins School of Medicine, conducted an experiment that you might find shocking. To study the effect of water temperature on endurance, Richter and his colleagues placed dozens of rats into glass jars—one rodent per jar—and then filled the jars with water. Because the walls of these jars were too high and slick to climb, the rats were left in a literal sink-or-swim situation. Richter even had water jets blasting from above to force the rats below the surface if they tried to float idly instead of swimming for their lives. He then measured how long the rats swam—without food, rest, or chance of escape—before they drowned.

The researchers were surprised to find that even when the water temperatures were identical, rats of equal fitness swam for markedly different lengths of time. Some continued swimming for an average of 60 hours before succumbing to exhaustion, while others sank almost immediately. It was as though, after struggling for 15 minutes, some rats simply gave up, while others were determined to push themselves to the utmost physical limit. The perplexed researchers wondered whether some rats were more convinced than others that if they continued to swim, they would eventually escape. Were rats even capable of having different “convictions”? But what else could account for such a significant disparity in performance, especially when the survival instinct of all the rats must have kicked in? Perhaps the rats that showed more resilience had somehow been given reason to expect escape from their terrible predicament.

So in the next round of the experiment, rather than throwing the rats into the water straightaway, the researchers first picked them up several times, each time allowing them to wriggle free. After they had become accustomed to such handling, the rats were placed in the jars, blasted with water for several minutes, then removed and returned to their cages. This process was repeated multiple times. Finally, the rats were put into the jars for the sink-or-swim test. This time, none of the rats showed signs of giving up. They swam for an average of more than 60 hours before becoming exhausted and drowning.

We’re probably uncomfortable describing rats as having “beliefs,” but having previously wriggled away from their captors and having also survived blasts of water, they seemed to believe they could not only withstand unpleasant circumstances but break free of them. Their experience had taught them that they had some control over the outcome and, perhaps, that rescue was just around the corner. In their incredible persistence, they were not unlike Callahan and Simpson, so could we say that these rats made a choice? Did they choose to live, at least for as long as their bodies could hold out?

There’s a suffering that comes when persistence is unrewarded, and then there’s the heartbreak of possible rescue gone unrecognized. In 1965, at the University of Pennsylvania, psychologist Martin Seligman launched a series of experiments that fundamentally changed the way we think about control. His research team began by leading mongrel dogs—around the same size as beagles or Welsh corgis—into a white cubicle, one by one, and suspending them in rubberized cloth harnesses. Panels were placed on either side of each dog’s head, and a yoke between the panels—across the neck—held the head in place. Every dog was assigned a partner dog located in a different cubicle.

During the experiment each pair of dogs was periodically subjected to physically nondamaging yet painful electrical shocks, but there was a crucial difference between the two dogs’ cubicles: One could put an end to the shock simply by pressing the side panels with its head, while the other could not turn it off, no matter how it writhed. The shocks were synchronized, starting at the same moment for each dog in the pair, and ending for both when the dog with the ability to stop them pressed the side panel. Thus, the amount of shock was identical for the pair, but one dog experienced the pain as controllable, while the other did not. The dogs that could do nothing to end the shocks on their own soon began to cower and whine, signs of anxiety and depression that continued even after the sessions were over. The dogs that could stop the shocks, however, showed some irritation but soon learned to anticipate the pain and avoid it by pressing their panels.

In the second phase of the experiment, both dogs in the pair were exposed to a new situation to see how they would apply what they’d learned from being in—or out of—control. Researchers put each dog in a large black box with two compartments, divided by a low wall that came up to about shoulder height on the animals. On the dog’s side, the floor was periodically electrified. On the other side, it was not. The wall was low enough to jump over, and the dogs that had previously been able to stop the shocks quickly figured out how to escape. But of the dogs that had not been able to end the shocks, two-thirds lay passively on the floor and suffered. The shocks continued, and although the dogs whined, they made no attempt to free themselves. Even when they saw other dogs jumping the wall, and even after researchers dragged them to the other side of the box to show them that the shocks were escapable, the dogs still gave up and endured the pain. For them, the freedom from pain just on the other side of the wall—so near and so readily accessible—was invisible.

When we speak of choice, what we mean is the ability to exercise control over ourselves and our environment. In order to choose, we must first perceive that control is possible. The rats kept swimming despite mounting fatigue and no apparent means of escape because they had already tasted freedom, which—as far as they knew—they had attained through their own vigorous wriggling efforts. The dogs, on the other hand, having earlier suffered a complete loss of control, had learned that they were helpless. When control was restored to them later on, their behavior didn’t change because they still could not perceive the control. For all practical purposes, they remained helpless. In other words, how much choice the animals technically had was far less important than how much choice they felt they had. And while the rats were doomed because of the design of the experiment, the persistence they exhibited could well have paid off in the real world, as it did for Callahan and Simpson.

III. CHOICE ON THE MIND

When we look in the mirror, we see some of the “instruments” necessary for choice. Our eyes, nose, ears, and mouth gather information from our environment, while our arms and legs enable us to act on it. We depend on these capabilities to effectively negotiate between hunger and satiation, safety and vulnerability, even between life and death. Yet our ability to choose involves more than simply reacting to sensory information. Your knee may twitch if hit in the right place by a doctor’s rubber mallet, but no one would consider this reflex to be a choice. To be able to truly choose, we must evaluate all available options and select the best one, making the mind as vital to choice as the body.

Thanks to recent advances in technology, such as functional magnetic resonance imaging (fMRI) scans, we can identify the main brain system engaged when making choices: the corticostriatal network. Its first major component, the striatum, is buried deep in the middle of the brain and is relatively consistent in size and function across the animal kingdom, from reptiles to birds to mammals. It is part of a set of structures known as the basal ganglia, which serve as a sort of switchboard connecting the higher and lower mental functions. The striatum receives sensory information from other parts of the brain and has a role in planning movement, which is critical for our choice making. But its main choice-related function has to do with evaluating the reward associated with an experience; it is responsible for alerting us that “sugar = good” and “root canal = bad.” Essentially, it provides the mental connection needed for wanting what we want.

Yet the mere knowledge that sweet things are appealing and root canals excruciating is not enough to guide our choices. We must also make the connection that under certain conditions, too much of a sweet thing can eventually lead to a root canal. This is where the other half of the corticostriatal network, the prefrontal cortex, comes into play. Located directly behind our foreheads, the prefrontal cortex acts as the brain’s command center, receiving messages from the striatum and other parts of the body and using those messages to determine and execute the best overall course of action. It is involved in making complex cost-benefit analyses of immediate and future consequences. It also enables us to exercise impulse control when we are tempted to give in to something that we know to be detrimental to us in the long run.

The development of the prefrontal cortex is a perfect example of natural selection in action. While humans and other animals both possess a prefrontal cortex, the percentage of the brain it occupies in humans is larger than in any other species, granting us an unparalleled ability to choose “rationally,” superseding all other competing instincts. This facility improves with age, as our prefrontal cortex continues to develop well past adolescence. While motor abilities are largely developed by childhood, and factual reasoning abilities by adolescence, the prefrontal cortex undergoes a process of growth and consolidation that continues into our mid-20s. This is why young children have more difficulty understanding abstract concepts than adults, and both children and teenagers are especially prone to acting on impulse.

The ability to choose well is arguably the most powerful tool for controlling our environment. After all, it is humans who have dominated the planet, despite a conspicuous absence of sharp claws, thick hides, wings, or other obvious defenses. We are born with the tools to exercise choice, but just as significantly, we’re born with the desire to do so. Neurons in the striatum, for example, respond more to rewards that people or animals actively choose than to identical rewards that are passively received. As the song goes, “Fish gotta swim, birds gotta fly,” and we all gotta choose.

This desire to choose is so innate that we act on it even before we can express it. In a study of infants as young as four months, researchers attached strings to the infants’ hands and let them learn that by tugging the string, they could cause pleasant music to play. When the researchers later broke the association with the string, making the music play at random intervals instead, the children became sad and angry, even though the experiment was designed so that they heard the same amount of music as when they had activated the music themselves. These children didn’t only want to hear music; they craved the power to choose it.

Ironically, while the power of choice lies in its ability to unearth the best option possible out of all those presented, sometimes the desire to choose is so strong that it can interfere with the pursuit of these very benefits. Even in situations where having more choice offers no advantage and actually raises the cost in time and effort, choice is still instinctively preferred. In one experiment, rats in a maze were given the option of taking a direct path or one that branched into several other paths. The direct and the branched paths eventually led to the same amount of food, so one held no advantage over the other. Nevertheless, over multiple trials, nearly every rat preferred to take the branching path. Similarly, pigeons and monkeys that learned to press buttons to dispense food preferred to have a choice of multiple buttons to press, even though the choice of two buttons as opposed to one didn’t result in a greater food reward. And though humans can consciously override this preference, this doesn’t necessarily mean we will. In another experiment, people given a casino chip preferred to spend it at a table with two identical roulette-style wheels rather than at a table with a single wheel, even though they could bet on only one of the wheels, and all three wheels were identical.

The desire to choose is thus a natural drive, and though it most likely developed because it is a crucial aid to our survival, it often operates independently of any concrete benefits. In such cases, the power of choice is so great that it becomes not merely a means to an end but something intrinsically valuable and necessary. So what happens when we enjoy the benefits that choice is meant to confer but our need for choice itself is not met?

IV. THE PANTHER IN THE GILDED CAGE

Imagine the ultimate luxury hotel. There’s gourmet food for breakfast, lunch, and dinner. During the day, you do as you please: lounge by the pool, get a spa treatment, romp in the game room. At night, you sleep in a king-size bed with down pillows and 600-thread-count sheets. The staff is ever present and ever pleasant, happy to fulfill any requests you might have, and the hotel even boasts state-of-the-art medical services. You can bring your whole family and socialize with lots of new people. If you’re single, you might find that special someone among all the attractive men and women around. And the best part is that it’s free. There’s just one small catch: Once you check in, you can never leave.

No, it’s not the famous Hotel California. Such luxurious imprisonment is the norm for animals in zoos across the world. Since the 1970s and 1980s, zoos have strived to reproduce the natural habitats of their animals, replacing concrete floors and steel bars with grass, boulders, trees, and pools of water. These environments may simulate the wild, but the animals don’t have to worry about finding food, shelter, or safety from predators; all the necessities of life seem to be provided for them. While this may not seem like such a bad deal at first glance, the animals experience numerous complications. The zebras live constantly under the sword of Damocles, smelling the lions in the nearby Great Cats exhibit every day and finding themselves unable to escape. There’s no possibility of migrating or of hoarding food for the winter, which must seem to promise equally certain doom to a bird or bear. In fact, the animals have no way of even knowing whether the food that has magically appeared each day thus far will appear again tomorrow, and no power to provide for themselves. In short, zoo life is utterly incompatible with an animal’s most deeply ingrained survival instincts.

In spite of the dedication of their human caretakers, animals in zoos may feel caught in a death trap because they exert minimal control over their own lives. Every year, undaunted by the extensive moats, walls, nets, and glass surrounding their habitats, many animals attempt escape, and some of them even succeed. In 2008, Bruno, a 29-year-old orangutan at the Los Angeles Zoo, punched a hole in the mesh surrounding his habitat, only to find himself in a holding pen. No one was hurt, but 3,000 visitors were evacuated before Bruno was sedated by a handler. A year earlier, a four-year-old Siberian tiger known as Tatiana had jumped the 25-foot moat at the San Francisco Zoo, killing one person and injuring two others before she was shot dead. And in 2004, at the Berlin Zoo, the Andean bespectacled bear Juan used a log to “surf” his way across the moat surrounding his habitat before climbing a wall to freedom. After he had taken a whirl on the zoo’s merry-go-round and a few trips down the slide, he was shot with a tranquilizer dart by zoo officials.

These and countless other stories reveal that the need for control is a powerful motivator, even when it can lead to harm. This isn’t only because exercising control feels good, but because being unable to do so is naturally unpleasant and stressful. Under duress, the endocrine system produces stress hormones such as adrenaline that prepare the body for dealing with immediate danger. We’ve all felt the fight-or-flight response in a dangerous situation or when stressed, frustrated, or panicked. Breathing and heart rates increase and the blood vessels narrow, enabling oxygen-rich blood to be pumped quickly to the extremities. Energy spent on bodily processes such as digestion and maintaining the immune system is temporarily reduced, freeing more energy for sudden action. Pupils dilate, reflexes quicken, and concentration increases. Only when the crisis has passed does the body resume normal function.

Such responses are survival-enhancing for short-term situations in the wild because they motivate an animal to terminate the source of stress and regain control. But when the source of stress is unending—that is, when it can’t be fled or fought—the body continues its stressed response until it is exhausted. Animals in a zoo still experience anxiety over basic survival needs and the possibility of predator attacks because they don’t know that they’re safe. Physically, remaining in a constant state of heightened alert can induce a weakened immune system, ulcers, and even heart problems. Mentally, this stress can cause a variety of repetitive and sometimes self-destructive behaviors known as stereotypies, the animal equivalent of wringing one’s hands or biting one’s lip, which are considered a sign of depression or anxiety by most biologists.

Gus, the 700-pound polar bear at the Central Park Zoo, exhibited such behavior back in 1994 when, to the dismay of zoo-goers and his keepers, he spent the bulk of his time swimming an endless series of short laps. In order to address his neuroses, Gus—a true New Yorker—was set up with a therapist: animal behaviorist Tim Desmond, known for training the whale in Free Willy. Desmond concluded that Gus needed more challenges and opportunities to exercise his instincts. Gus wanted to feel as if he still had the ability to choose where he spent his time and how—he needed to reassume control of his own destiny. Similarly, the frequent grooming that pet hamsters and lab mice engage in isn’t due to their fastidious natures; it’s a nervous habit that can continue until they completely rub and gnaw away patches of their fur. If administered fluoxetine, the anti-depressant most commonly known as Prozac, the animals reduce or discontinue these behaviors.

Due to these physically and psychologically harmful effects, captivity can often result in lower life expectancies despite objectively improved living conditions. Wild African elephants, for example, have an average life span of 56 years as compared to 17 years for zoo-born elephants. Other deleterious effects include fewer births (a chronic problem with captive pandas) and high infant mortality rates (over 65 percent for polar bears). Though this is bad news for any captive animal, it is especially alarming in the case of endangered species.

For all the material comforts zoos provide and all their attempts to replicate animals’ natural habitats as closely as possible, even the most sophisticated zoos cannot match the level of stimulation and exercise of natural instincts that animals experience in the wild. The desperation of a life in captivity is perhaps conveyed best in Rainer Maria Rilke’s poem “The Panther”: As the animal “paces in cramped circles, over and over,” he seems to perform “a ritual dance around a center / in which a mighty will stands paralyzed.” Unlike the dogs in the Seligman experiment, the panther displays his paralysis not by lying still, but by constantly moving. Just like the helpless dogs, however, he cannot see past his confinement: “It seems to him there are / a thousand bars; and behind the bars, no world.” Whether the bars are real or metaphorical, when one has no control, it is as if nothing exists beyond the pain of this loss.

V. CHOOSING HEALTH, HEALTHY CHOOSING

While we may not face the threat of captivity like our animal counterparts, humans voluntarily create and follow systems that restrict some of our individual choices to benefit the greater good. We vote to create laws, enact contracts, and agree to be gainfully employed because we recognize that the alternative is chaos. But what happens when our ability to rationally recognize the benefits of these restrictions conflicts with an instinctive aversion to them? The degree to which we are able to strike a balance of control in our lives has a significant bearing on our health.

A decades-long research project known as the Whitehall Studies, conducted by Professor Michael Marmot of University College London, provides a powerful demonstration of how our perceptions of choice can affect our well-being. Beginning in 1967, researchers followed more than 10,000 British civil servants aged 20 to 64, comparing the health outcomes of employees from different pay grades. Contradicting the stereotype of the hard-charging boss who drops dead of a heart attack at 45, the studies found that although the higher-paying jobs came with greater pressure, employees in the lowest pay grade, such as doormen, were three times more likely to die from coronary heart disease than the highest-grade workers were.

In part, this was because lower-grade employees were more likely to smoke and be overweight, and less likely to exercise regularly, than their higher-grade counterparts. But when scientists accounted for the differences in smoking, obesity, and exercise, the lowest-grade employees were still twice as likely to die from heart disease. Though the higher income that comes with being at the top of the ladder obviously enhances the potential for control in one’s life, this isn’t the sole explanation for the poorer health of the lower-grade employees. Even employees from the second-highest grade, including doctors, lawyers, and other professionals considered well-off by society’s standards, were at notably higher risk than their bosses.

As it turned out, the chief reason for these results was that pay grades directly correlated with the degree of control employees had over their work. The boss took home a bigger paycheck, but more importantly, he directed his own tasks as well as those of his assistants. Although a CEO’s shouldering of responsibility for his company’s profit is certainly stressful, it turns out that his assistant’s responsibility for, say, collating an endless number of memos is even more stressful. The less control people had over their work, the higher their blood pressure during work hours. Moreover, blood pressure at home was unrelated to the level of job control, indicating that the spike during work hours was specifically caused by lack of choice on the job. People with little control over their work also experienced more back pain, missed more days of work due to illness in general, and had higher rates of mental illness—the human equivalent of stereotypies, resulting in the decreased quality of life common to animals reared in captivity.

Unfortunately, the news only gets worse. Several studies have found that apart from the stressors at work, we suffer greatly due to elements of the daily grind that are beyond our control, such as interruptions, traffic jams, missing the bus, smog, and noisy or flickering fluorescent lights. The very agitation and muscle tension that enable quick, lifesaving movement in the wild can lead to frustration and backache in the modern world. Fight or flight was never intended to address 6:30 a.m. wake-up calls or the long commute to a dead-end job. Because these continuous low-grade stressors never let up long enough for us to recover, they can actually damage our health more than infrequent calamities like getting fired or going through a divorce. When it comes to lack of control, often the devil is indeed in the details.

Is there any hope, then, for those who can’t or choose not to climb the corporate ladder? The Whitehall Studies, though disturbing, suggest there is. What affected people’s health most in these studies wasn’t the actual level of control that people had in their jobs, but the amount of control they perceived themselves as having. True, the lower-ranked employees perceived less control on average than those higher up because their jobs actually offered less control, but within each position there was considerable variation in people’s perceptions of their control and their corresponding measures of health. Thus, a well-compensated executive who feels helpless will suffer the same type of negative physiological response as a low-paid mailroom clerk.

Unlike those of captive animals, people’s perceptions of control or helplessness aren’t entirely dictated by outside forces. We have the ability to create choice by altering our interpretations of the world. Callahan’s choice to live rather than die is an extreme example, but by asserting control in seemingly uncontrollable situations, we can improve our health and happiness. People who perceive the negative experiences in their lives as the result of uncontrollable forces are at a higher risk for depression than those who believe they have control. They are less likely to try to escape damaging situations such as drug addiction or abusive relationships. They are also less likely to survive heart attacks and more likely to suffer weakened immune systems, asthma, arthritis, ulcers, headaches, or backaches. So what does it take to cultivate “learned optimism,” adjusting our vision to see that we have control rather than passively suffering the shocks of life?

We can find some clues in a 1976 study at Arden House, a nursing home in Connecticut, where scientists Ellen Langer and Judy Rodin manipulated the perception of control among residents aged 65 to 90. To begin, the nursing home’s social coordinator called separate meetings for the residents of two different floors. At the first floor’s meeting he handed out a plant to each resident and informed them that the nurses would take care of their plants for them. He also told them that movies were screened on Thursdays and Fridays, and that they would be scheduled to see the movie on one of those days. He assured residents that they were permitted to visit with people on other floors and engage in different types of activities, such as reading, listening to the radio, and watching TV. The focus of his message was that the residents were allowed to do some things, but the responsibility for their well-being lay in the competent hands of the staff, an approach that was the norm for nursing homes at that time (and still is). As the coordinator said, “We feel it is our responsibility to make this a home you can be proud of and happy in, and we want to do all we can to help you.”

Then the coordinator called a meeting for the other floor. This time he let each resident choose which plant he or she wanted, and told them that taking care of the plants would be their responsibility. He likewise allowed them to choose whether to watch the weekly movie screening on Thursday or Friday, and reminded them of the many ways in which they could choose to spend their time, such as visiting with other residents, reading, listening to the radio, and watching TV. Overall he emphasized that it was the residents’ responsibility to make their new home a happy place. “It’s your life,” he said. “You can make of it whatever you want.”

Despite the differences in these messages, the staff treated the residents of the two floors identically, giving them the same amount of attention. Moreover, the additional choices given to the second group of residents were seemingly trivial, since everyone got a plant and saw the same movie each week, whether on Thursday or Friday. Nevertheless, when examined three weeks later, the residents who had been given more choices were happier and more alert, and they interacted more with other residents and staff than those who hadn’t been given the same choices. Even within the short, three-week time frame of the study, the physical health of over 70 percent of the residents from the “choiceless” group deteriorated. By contrast, over 90 percent of the people with choice saw their health improve. Six months later, researchers even found that the residents who’d been given greater choice—or, indeed, the perception of it—were less likely to have died.

The nursing home residents benefited from having choices that were largely symbolic. Being able to exercise their innate need to control some of their environment prevented the residents from suffering the stress and anxiety that caged zoo animals and lower-pay-grade employees often experience. The study suggests that minor but frequent choice making can have a disproportionately large and positive impact on our perception of overall control, just as the accumulation of minor stresses is often more harmful over time than the stress caused by a few major events. More profoundly, this suggests that we can give choice to ourselves and to others, along with the benefits that accompany choice. A small change in our actions, such as speaking or thinking in a way that highlights our agency, can have a big effect on our mental and physical state.

According to various studies examining mind-over-matter attitudes in medical patients, even for people struggling against the most malignant illnesses, such as cancer and HIV, refusing to accept the situation as hopeless can increase the chances of survival and reduce the chance of relapse, or at least postpone death. For example, in one study at Royal Marsden Hospital in the United Kingdom (the first hospital in the world to be dedicated solely to the study and treatment of cancer), breast cancer patients who scored higher on helplessness and hopelessness had a significantly increased risk of relapse or death within five years compared with study participants who scored lower on those measures. Numerous studies also found this to be the case for patients with HIV in the years before effective treatments were available; those who reported more feelings of helplessness were more likely to progress from HIV to full-blown AIDS, and died more quickly after developing AIDS. Is it really possible that the way someone thinks about their illness can directly affect their physical well-being?

The debate in the medical community rages on, but what’s clear is that, whenever possible, people reach for choice—we want to believe that seeing our lives in these terms will make us better off. And even if it doesn’t make us better off physically, there is certainly reason to believe that it makes us feel better. For example, in one study conducted at UCLA, two-thirds of breast cancer patients reported that they believed they could personally control the course of their illness, and of these, more than a third believed they had a lot of control. This perception often led to behavioral changes, for example eating more fruits and vegetables. However, more often than not, the control manifested as purely mental action, such as picturing chemotherapy as a cannon blasting away pieces of the cancer dragon. Patients also told themselves, “I absolutely refuse to have any more cancer.” However implausible these beliefs may have been, the greater the control the patients felt they had over their disease, the happier they were. Indeed, the patients’ need to believe in their power over their illnesses echoes the craving for control that all people, healthy or sick, young or old, instinctively need to exert over their lives. We wish to see our lives as offering us choice and the potential for control, even in the most dismal of circumstances.

VI. TELLING STORIES

Here’s the disclaimer: There is no guarantee that choosing to live will actually help you survive. Stories about “the triumph of the human spirit” often highlight the crucial point at which the hero/survivor said, “I knew now that I had a choice,” or “A difficult choice lay before me.” Frequently, what follows is purple prose about the inspirational journey from darkness to light and a platitude-filled explanation of the lessons to be learned. But Richter’s rats seemed to “believe” as hard as any creature could that they would reach safety, and we have never heard the stories of the many sailors and mountaineers and terminally ill people who died even though they, too, had chosen to live. So survivor stories can be misleading, especially if they emphasize the individual’s “phenomenal strength of character” above all else. At other times, they can seem too familiar, as though read from the same script handed out to all survivors before they face TV cameras.

Nevertheless, such stories do help people withstand the fear and suffering that accompany serious illness and tragedy. Even beliefs that are unrealistically optimistic according to medical consensus are more beneficial for coping than a realistic outlook. And though one might expect a backlash from patients who suffer a relapse after having fervently believed that they were cured, studies show that this is not the case. If you’re healthy, you might reject such optimism as delusion, but if the tables were turned, perhaps you would also reach for anything that could jigger the odds ever so slightly in your favor.

Joan Didion begins her essay “The White Album” with the following phrase: “We tell ourselves stories in order to live.” It is a simple but stunning claim. A few sentences later, she writes, “We look for the sermon in the suicide, for the social or moral lesson in the murder of five. We interpret what we see, select the most workable of the multiple choices. We live entirely, especially if we are writers, by the imposition of a narrative line upon disparate images, by the ‘ideas’ with which we have learned to freeze the shifting phantasmagoria which is our actual experience.” The imposed narrative, even if it is trite or sentimental, serves an important function by allowing us to make some sense of our lives. When that narrative is about choice, when it is the idea that we have control, we can tell it to ourselves—quite literally—“in order to live.”

One could even argue that we have a duty to create and pass on stories about choice because once a person knows such stories, they can’t be taken away from him. He may lose his possessions, his home, his loved ones, but if he holds on to a story about choice, he retains the ability to practice choice. The Stoic philosopher Seneca the Younger wrote, “It is a mistake to imagine that slavery pervades a man’s whole being; the better part of him is exempt from it: the body indeed is subjected and in the power of a master, but the mind is independent, and indeed is so free and wild, that it cannot be restrained even by this prison of the body, wherein it is confined.” For animals, the confinement of the body is the confinement of the whole being, but a person can choose freedom even when he has no physical autonomy. In order to do so, he must know what choice is, and he must believe that he deserves it. By sharing stories, we keep choice alive in the imagination and in language. We give each other the strength to perform choice in the mind even when we cannot perform it with the body.

It is no wonder, then, that the narrative of choice keeps growing, spreading, and acquiring more power. In America, it fuels the American Dream founded upon the “unalienable Rights” of “Life, Liberty and the pursuit of Happiness” promised in the Declaration of Independence. Its origins extend much further back since it is implicit in any discussion of freedom or self-determination. Indeed, we can sense its comforting presence even when the word “choice” is absent. When we act out this narrative, often by following scripts written by others, we claim control no matter what our circumstances. And though our scripts and performances vary, as we will see next, the desire and need for choice is universal. Whatever our differences—in temperament, culture, language—choice connects us and allows us to speak to one another about freedom and hope.

I. A BLESSED UNION

On an August morning over 40 years ago, Kanwar Jit Singh Sethi woke at dawn to prepare for the day. He began with a ceremonial bath; wearing only his kachchha, the traditional Sikh undergarment of white drawstring shorts, he walked into the bathing room of his family’s Delhi home. In the small space lit by a single window, he sat on a short wooden stool, the stone floor cold beneath his bare feet. His mother and grandmother entered the bathing room and anointed him with vatna, a fragrant paste of turmeric, sandalwood, milk, and rosewater. Then they filled a bucket with water and poured cupfuls over his head and shoulders.

Kanwar Jit’s mother washed his hair, which fell to the middle of his back, and his beard, which reached to his breastbone; in accordance with Sikh tradition, they had never been cut. After his hair was clean, she vigorously massaged it with fragrant oil and rolled it into tight knots, tying his hair atop his head and his beard beneath his chin. After donning his best suit, Kanwar Jit cut an impressive figure: 28 years old, 160 pounds, six feet tall in his bright red turban. One could not help but be drawn to his appearance and jolly demeanor, his soft eyes and easy way. He walked through the doors into the courtyard, where nearly a hundred friends and relatives had gathered, to begin the celebrations.

Several blocks away, 23-year-old Kuldeep Kaur Anand started her morning in much the same way, though she was, in many respects, Kanwar Jit’s opposite. At a diminutive five foot one and 85 pounds, and as shy as Kanwar Jit was outgoing, she didn’t call attention to herself, instead focusing her keen eyes on others. After the ceremonial bath, she dressed in an orange sari that matched the one worn by Mumtaz, her favorite actress, in that year’s hit film Brahmachari. She welcomed the many guests now arriving at the house, all of them smiling and wishing her the best for the future.

In both homes, the festivities continued throughout the day with platters of cheese and vegetable pakoras providing sustenance for all the meeting and greeting. At dusk, each household began to prepare for the Milni, the ceremony in which the two families would come together. At Kanwar Jit’s home, a band arrived, playing a traditional song on the shehnai, a reed instrument thought to bring good luck. A white horse covered with a brown embroidered rug came too; Kanwar Jit would ride it to Kuldeep’s home. But before he set off, his sister covered his face with a sehra, several tassels of gold entwined with flowers that hung from his turban. Then Kanwar Jit mounted the horse and, flanked by his family, rode to his destination, the band leading the way.

At her home, Kuldeep stood at the front door singing hymns with her family. Her face was covered by an ornately embroidered veil given to her by Kanwar Jit’s mother. When the procession arrived, shehnai blaring and tabla beating, Kanwar Jit and Kuldeep exchanged garlands of roses and jasmine. At the same time, each member of one family specially greeted his or her counterpart in the other family. Mother greeted mother, sister greeted sister, and so on. These familial “couples” also exchanged garlands. The families then celebrated by singing and dancing until it was time for Kanwar Jit’s family to depart.

The next day at dawn, Kuldeep’s and Kanwar Jit’s families traveled to a nearby temple for the ceremony of Anand Karaj, or Blessed Union. Kanwar Jit, again wearing a red turban and dark suit, knelt in front of the wooden altar that held the Guru Granth Sahib, the Sikh holy book. Kuldeep, wearing a pink salwar kameez, an outfit of loose pants and a long tunic, knelt beside him, an opaque veil with gold tassels covering her almost to the waist. After singing hymns and saying prayers, Kanwar Jit’s grandfather tied one end of a long scarf to his grandson’s hand and the other end to Kuldeep’s hand. Connected in this way, the couple circled the Guru Granth Sahib four times. They paused after each circuit to hear the Sant, or holy man, read a prayer related to their union: karma, dharma, trust, and blessings. Afterward, in celebration, both families tossed money and garlands at the couple’s feet. Then Kanwar Jit lifted the veil and, for the first time, saw his wife’s face.

This is how my parents were married. Every detail of the ceremony was decided for them, from whom to marry to what to wear to what to eat. It was all part of a closely followed cultural script that had evolved over time into the Sikh traditions that they and their families adhered to on that day. Whenever I mention to people that my parents met for the first time on their wedding day, the most common reaction is shock: “Their families decided on the match? How could your parents let that happen to them?” Simply explaining to people that this is the way marriages were decided upon in my family—in most Indian families—does not seem to satisfy their curiosity or diminish their incredulity. On the surface, people understand that there are cultural differences in the way marriages come about. But the part that really doesn’t sit well, the part that they simply can’t wrap their heads around, is that my parents allowed such an important choice to be taken out of their hands. How could they do such a thing, and why?

II. A MATTER OF FAITH

Remember Martin Seligman, the psychologist who ran those unsettling experiments with dogs? His compelling studies with both humans and animals, as well as the other studies we learned about in the previous chapter, demonstrate just how much we need to feel in control of what happens to us. When we can’t maintain control, we’re left feeling helpless, bereft, unable to function. I first learned about these experiments when taking a course with Seligman as an undergraduate at the University of Pennsylvania. The findings from such research made me start to question whether my own Sikh tradition, rather than empowering or uplifting its followers, could actually engender a sense of helplessness. As a member of the Sikh faith, I was constantly keeping track of so many rules: what to wear, what to eat, forbidden behaviors, and my duties to family. When I added it all up, there wasn’t much left for me to decide—so many of my decisions had been made for me. This was true not only for Sikhism but for many other religions. I brought my questions to Seligman, hoping he could help shed some light on whether members of religious faiths were likely to experience greater helplessness in their lives. But he, too, was unsure, as there were no scientific investigations into this subject. So we decided to embark on a study examining the effects of religious adherence on people’s health and happiness.

For the next two years, anyone glancing at my social calendar might have assumed I was trying to atone for a lifetime of sin. Each week my research began at sundown on Friday with a visit to a mosque, immediately followed by a visit to a synagogue. On Saturdays I visited more synagogues and mosques, and on Sundays I went church-hopping. In total, I interviewed over 600 people from nine different religions. These faiths were categorized as fundamentalist (Calvinism, Islam, and Orthodox Judaism), which imposed many day-to-day regulations on their followers; conservative (Catholicism, Lutheranism, Methodism, and Conservative Judaism); or liberal (Unitarianism and Reform Judaism), which imposed the fewest restrictions. In fact, some branches of the liberal religions don’t even require their practicing members to believe in God, and the largest percentage of Unitarian Universalists described themselves as secular humanists, followed by those with an earth- or nature-centered spirituality.

The worshippers were asked to fill out three surveys. The first contained questions regarding the impact of religion in their lives, including the extent to which it affected what they ate, drank, wore, whom they would associate with, and whom they would marry. Members of the fundamentalist faiths indeed scored the highest on these questions and members of the liberal faiths scored the lowest. The survey also asked about religious involvement (how often they attended services or prayed) and religious hope (“Do you believe there is a heaven?” and “Do you believe your suffering will be rewarded?”). A second survey measured each individual’s level of optimism by examining their reactions to a series of hypothetical good and bad life events. When asked how they would react to being fired, optimists gave answers like, “If I was fired from my job it would be for something specific that would be easy to fix,” while pessimists said things like, “If I was fired from my job it would be because there’s something wrong with me that I’ll never be able to fix.” In essence, they were describing how much control they believed they had over their lives. Last, they filled out a commonly used mental health questionnaire to determine if they had any symptoms of depression, such as weight loss or lack of sleep. To my surprise, it turned out that members of more fundamentalist faiths experienced greater hope, were more optimistic when faced with adversity, and were less likely to be depressed than their counterparts. Indeed, the people most susceptible to pessimism and depression were the Unitarians, especially those who were atheists. The presence of so many rules didn’t debilitate people; instead, it seemed to empower them. Many of their choices were taken away, and yet they experienced a sense of control over their lives.

This study was an eye-opener: Restrictions do not necessarily diminish a sense of control, and freedom to think and do as you please does not necessarily increase it. The resolution of this seeming paradox lies in the different narratives about the nature of the world—and our role within it—that are passed down from generation to generation. We all want and need to be in control of our lives, but how we understand control depends on the stories we are told and the beliefs we come to hold. Some of us come to believe that control comes solely through the exercise of personal choice. We must find our own path to happiness because no one will (or can) find it for us. Others believe that it is God who is in control, and only by understanding His ways and behaving accordingly will we be able to find happiness in our own lives. We are all exposed to different narratives about life and choice as a function of where we’re born, who our parents are, and numerous other factors. In moving from culture to culture and country to country, then, we encounter remarkable variations in people’s beliefs about who should make choices, what to expect from them, and how to judge the consequences.

Since beginning my formal study of choice as an undergraduate, I have interviewed, surveyed, and run experiments with people from all walks of life: old and young, secular and religiously observant, members of Asian cultures, veterans of the communist system, and people whose families have been in the United States for generations. In the rest of this chapter, I’ll share with you my own research and also the observations of a growing number of researchers who have been looking at the ways in which geography, religion, political systems, and demographics can fundamentally shape how people perceive themselves and their roles. The stories of our lives, told differently in every culture and every home, have profound implications for what and why we choose, and it is only by learning how to understand these stories that we can begin to account for the wonderful and baffling differences among us.

III. THE INDIVIDUAL AND THE COLLECTIVE

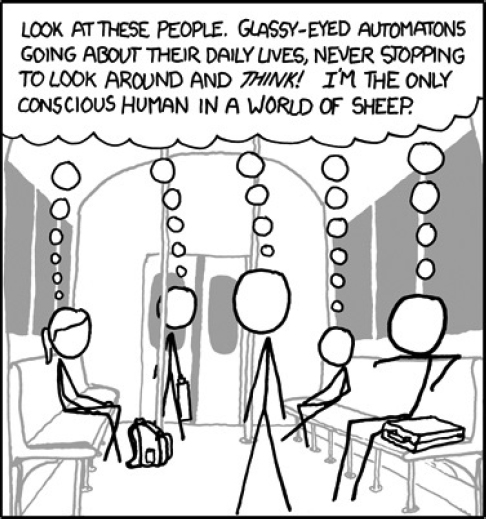

In 1995, I spent several months in Kyoto, Japan, living with a local family while I did research for my PhD dissertation with Shinobu Kitayama, one of the founders of the field of cultural social psychology. I knew I would experience cultural differences, even misunderstandings, but they often popped up where I least expected them. The most surprising might have been when I ordered green tea with sugar at a restaurant. After a pause, the waiter politely explained that one does not drink green tea with sugar. I responded that yes, I was aware of this custom, but I liked my tea sweet. My request was met with an even more courteous version of the same explanation: One does not drink green tea with sugar. While I understood, I told him, that the Japanese do not put sugar in their green tea, I would still like to put some in my green tea. Thus thwarted, the waiter took up the issue with the manager, and the two of them began a lengthy conversation. Finally, the manager came over to me and said, “I am very sorry. We do not have sugar.” Since I couldn’t have the green tea as I liked it, I changed my order to a cup of coffee, which the waiter soon brought over. Resting on the saucer were two packets of sugar.

My failed campaign for a cup of sweet green tea makes for an amusing story, but it also serves as shorthand for how views on choice vary by culture. From the American perspective, when a paying customer makes a reasonable request based on her personal preferences, she has every right to have those preferences met. From the perspective of the Japanese, however, the way I liked my tea was terribly inappropriate according to accepted cultural standards, and the waitstaff was simply trying to prevent me from making such an awful faux pas. Looking beyond the trappings of the situation, similar patterns of personal choice or social influence can be seen in family life, at work, and in potentially every other aspect of life when comparing American and Japanese cultures. While there are numerous differences between these two cultures, or indeed any two cultures, one particular cultural feature has proved especially useful for understanding how the ideas and practice of choice vary across the globe: the degree of individualism or collectivism.

Ask yourself: When making a choice, do you first and foremost consider what you want, what will make you happy, or do you consider what is best for you and the people around you? This seemingly simple question lies at the heart of major differences between cultures and individuals, both within and between nations. Certainly, most of us would not be so egocentric as to say that we would ignore all others or so selfless as to say that we would ignore our own needs and wants entirely—but setting aside the extremes, there can still be a great deal of variation. Where we fall on this continuum is very much a product of our cultural upbringing and the script we are given for how to choose—in making decisions, are we told to focus primarily on the “I” or on the “we”? Whichever set of assumptions we’re given, these cultural scripts are intended not only to help us successfully navigate our own lives but also to perpetuate a set of values regarding the way in which society as a whole functions best.

Those of us raised in more individualist societies, such as the United States, are taught to focus primarily on the “I” when choosing. In his book Individualism and Collectivism, cultural psychologist Harry Triandis notes that individualists “are primarily motivated by their own preferences, needs, rights, and the contracts they have established with others” and “give priority to their personal goals over the goals of others.” Not only do people choose based on their own preferences, which is itself significant given how many choices we make in life and how much they matter, but they also come to see themselves as defined by their individual interests, personality traits, and actions: for example, “I am a film buff” or “I am environmentally conscious.” In this worldview, it’s critical that one be able to determine one’s own path in life in order to be a complete person, and any obstacle to doing so is seen as patently unjust.

Modern individualism has its most direct roots in the Enlightenment of seventeenth- and eighteenth-century Europe, which itself drew on a variety of influences: the works of Greek philosophers, especially Socrates, Plato, and Aristotle; René Descartes’ attempt to derive all knowledge from the maxim “I think, therefore I am”; the Protestant Reformation’s challenge to the central authority of the Catholic Church with the idea that every individual had a direct line to God; and scientific advances by such figures as Galileo and Isaac Newton that provided ways to understand the world without recourse to religion. These led to a new worldview, one that rejected the traditions that had long ruled society in favor of the power of reason. Each person possessed the ability to discover for himself what was right and best instead of depending on external sources like kings and clergy.

The founding fathers of the United States were heavily influenced by Enlightenment philosophy, in particular John Locke’s arguments for the existence of universal individual rights, and in turn incorporated these ideas into the U.S. Constitution and Bill of Rights. The signing of the Declaration of Independence coincided with another milestone in the history of individualism: Adam Smith’s The Wealth of Nations, published in 1776, which argued that if each person pursued his own economic self-interest, society as a whole would benefit as if guided by an “invisible hand.” Central to individualist ideology is the conceiving of choice in terms of opportunity—promoting an individual’s ability to be or to do whatever he or she desires. The cumulative effect of these events on people’s expectations about the role choice should play in life and its implications for the structure of society was eloquently expressed by the nineteenth-century philosopher and economist John Stuart Mill, who wrote, “The only freedom deserving the name is that of pursuing our own good in our own way, so long as we do not attempt to deprive others of theirs, or impede their efforts to obtain it…. Mankind are greater gainers by suffering each other to live as seems good to themselves, than by compelling each to live as seems good to the rest.”

This way of thinking has become so ingrained that we rarely pause to consider that it may not be a universally shared ideal—that we may not always want to make choices, or that some people prefer to have their choices prescribed by another. But in fact the construct of individualism is a relatively new one that guides the thinking of only a small percentage of the world’s population. Let’s now turn to the equally rich tradition of collectivism and how it impacts people’s notions of choice across much of the globe.

Members of collectivist societies, including Japan, are taught to privilege the “we” in choosing, and they see themselves primarily in terms of the groups to which they belong, such as family, coworkers, village, or nation. In the words of Harry Triandis, they are “primarily motivated by the norms of, and duties imposed by, those collectives” and “are willing to give priority to the goals of these collectives over their own personal goals,” emphasizing above all else “their connectedness to members of these collectives.” Rather than everyone looking out for number one, it’s believed that individuals can be happy only when the needs of the group as a whole are met. For example, the Japanese saying makeru ga kachi (literally “to lose is to win”) expresses the idea that getting one’s way is less desirable than maintaining peace and harmony. The effects of a collectivist worldview go beyond determining who should choose. Rather than defining themselves solely by their personal traits, collectivists understand their identities through their relationships to certain groups. People in such societies, then, strive to fit in and to maintain harmony with their social in-groups.

Collectivism has, if anything, been the more pervasive way of life throughout history. The earliest hunter-gatherer societies were highly collectivist by necessity, as looking out for one another increased everyone’s chances of survival, and the value placed on the collective grew after humans shifted to agriculture as a means of sustenance. As populations increased and the formerly unifying familial and tribal forces became less powerful, other forces such as religion filled the gap, providing people with a sense of belongingness and common purpose.

Whereas individualism as a value solidified mainly during the Enlightenment, multiple manifestations of collectivism have emerged over time. The first can be traced directly back to the cultural emphasis on duty and fate that gradually developed in Asia—essentially independent of the West—thousands of years ago and is still influential today. Hinduism and those religions that succeeded it, including Buddhism, Sikhism, and Jainism, place a strong emphasis on some form of dharma, which defines each person’s duties as a function of his caste or religion, as well as on karma, the universal law of cause and effect that transcends even death. Another significant influence is Confucianism, a codification of preexisting cultural practices that originated in China but later also spread to Southeast Asia and Japan. In a passage from the Zhuangzi, Confucius is quoted as saying, “In the world, there are two great decrees: one is fate and the other is duty. That a son should love his parents is fate—you cannot erase this from his heart. That a subject should serve his ruler is duty—there is no place he can go and be without his ruler, no place he can escape to between heaven and earth.” The ultimate goal was to make these inevitable relationships as harmonious as possible. This form of collectivism remains foremost in the East today; in these cultures, individuals tend to understand their lives relatively more in terms of their duties and less in terms of personal preferences.

A second major strain of collectivism emerged in nineteenth-century Europe, in many ways as a response to individualism. Political theorists like Karl Marx criticized the era’s capitalist institutions, arguing that the focus on individual self-interest perpetuated a system in which a small upper class benefited at the expense of the larger working class. They called for people to develop “class consciousness,” to identify with their fellow workers and rise up to establish a new social order in which all people were equal in practice as well as in principle, and this rallying cry often received considerable support. In contrast to individualism, this more populist ideology focused on guaranteeing each and every person’s access to a certain amount of resources rather than on maximizing the overall number of opportunities available. This philosophy’s most significant effect on the world occurred when the communist Bolshevik faction came to power in Russia as a result of the October Revolution in 1917, which led to the eventual formation of the Soviet Union and offered an alternative model of government to emerging nations around the world.

So where do the borders between individualism and collectivism lie in the modern world? Geert Hofstede, one of the best-known researchers in this field, has created perhaps the most comprehensive ranking system for a country’s level of individualism based on the results of his work with the employees of IBM branches across the globe. Not surprisingly, the United States consistently ranks as the most individualist country, scoring 91 out of 100. Australia (90) and the United Kingdom (89) are close behind, while Western European countries primarily fall in the 60 to 80 range. Moving across the map to Eastern Europe, rankings begin to fall more on the collectivist end, with Russia at 39. Asia as a whole also tends to be more collectivist, with a number of countries hovering around 20, including China, though Japan and India are somewhat higher with scores of 46 and 48, respectively. Central and South American countries tend to rank quite high in collectivism, generally between 10 and 40, with Guatemala rated the most collectivist country of all, with a 6 out of 100 on the scale. Africa is understudied, though a handful of countries in East and West Africa are estimated to score between 20 and 30. Subsequent studies have consistently found a similar pattern of results around the world, with individualists tending to endorse statements like, “I often ‘do my own thing,’ ” or “One should live one’s life independently of others,” while collectivists endorse, “It is important to maintain harmony within my group,” or “Children should be taught to place duty before pleasure.”

It’s important to note that a country’s score on scales like these is nothing more than the average of its citizens’ scores, which aren’t solely dependent on the prevailing culture and can cover a significant range. Many of the same factors that affect the culture of a nation or a community can have an effect on the individual as well. Greater wealth is associated with greater individualism at all levels, whether we compare nations by GDP, or blue-collar and upper-middle-class Americans by annual income. Higher population density is associated with collectivism, as living in close proximity to others requires more restrictions on behavior in order to keep the peace. On the other hand, greater exposure to other cultures and higher levels of education are both associated with individualism, so cities, despite their density, aren’t necessarily more collectivist than rural areas. People become slightly more collectivist with age as they develop more numerous and stronger relationships with others, and just as important, they become more set in their views over time, meaning they will be less affected than the younger generations by broad cultural changes. All these factors, not to mention personality and incidental experiences in life, combine and interact to determine each person’s position on the individualism-collectivism spectrum.

IV. A TALE OF TWO WEDDINGS

So why did my parents let others decide whom they would be spending the rest of their lives with? Perhaps we can find an answer to this question by using the concepts of individualism and collectivism. If you look at the narratives of love and arranged marriage, it seems clear that a love marriage is a fundamentally individualist endeavor, while an arranged marriage is quintessentially collectivist. Let’s examine how these narratives unfold and the different messages that they convey.

Consider the fairy tale of Cinderella, the kind and lovely young maiden forced to work as a servant by the evil stepmother and the two ugly stepsisters. Aided by a magical fairy godmother, she manages to attend the royal ball despite her stepmother’s forbidding her to do so, and steals the spotlight when she arrives in a carriage, wearing a beautiful gown and stunning glass slippers. She also manages to steal the heart of the prince himself—he falls in love with her at first sight—but she must leave before the spell that transformed her from a servant girl into a lovely maiden wears off at midnight. In spite of her stepfamily’s attempts to sabotage her love, she finally succeeds in proving herself the wearer of the glass slipper and marries the prince, and the story ends with the declaration that they “lived happily ever after.”

Now let me share with you a very different story, about a real princess who lived long, long ago. In the seventeenth century, a beautiful 14-year-old girl was chosen to become the second wife of the future Mughal emperor Shah Jahan. They were said to have fallen in love at first sight but had to wait five years for their marriage to be consecrated. The real story begins after their lives were joined as one, as Mumtaz Mahal (meaning “Chosen One of the Palace”) accompanied her husband everywhere he went on his travels and military campaigns throughout the Mughal Empire, bearing 13 children along the way.

Court chroniclers dutifully documented their intimate and loving marriage, in which Mumtaz acted not only as a wife and companion but also frequently as a trusted adviser and a benevolent influence upon her powerful husband. She was widely considered to be the perfect wife and was celebrated by poets even during her lifetime for her wisdom, beauty, and kindness. When she died while bearing her fourteenth child, it was rumored that the emperor had made a promise to her on her deathbed that he would build a monument to their loving life together. After her death and a period of deep grief and mourning, Shah Jahan set about designing the mausoleum and gardens that would do justice to the beauty and incredible life of his late spouse. The result, the Taj Mahal, remains standing in Agra, India, as one of the New Seven Wonders of the World and as a testament to a legendary marriage.