Acclaim for Henry Petroski’s

THE EVOLUTION OF USEFUL THINGS

“[It] offers the reader many fascinating data about human artifacts.… Petroski is an amiable and lucid writer.… He belong[s] with the poets, extending the Romantic embrace of nature to the invented, manufactured world that has become man’s second nature.”

—John Updike, The New Yorker

“Petroski weaves wonderfully odd facts into this book.… [He] makes us pay attention to ordinary objects … just the ticket for the technophile.”

—Los Angeles Times

“A constellation of little marvels [that] all make good stories.… [A] paean to Yankee ingenuity … an informative, entertaining book.”

—Boston Globe

“One has to admire a man who delivers an intelligent tirade on a garbage bag.… Readable and entertaining … Petroski liberally mixes biography, social history, design theory and even word derivations into these affectionately told tales.”

—Chicago Tribune

“This book is a monument to the historian’s curiosity and the engineer’s tenacity. It is a treasure trove of fascinating facts and amusing anecdotes.”

—New Criterion

BOOKS BY HENRY PETROSKI

The Book on the Bookshelf

Remaking the World

Invention by Design

Engineers of Dreams

Design Paradigms

The Evolution of Useful Things

The Pencil

Beyond Engineering

To Engineer Is Human

Paperboy

Small Things Considered

Henry Petroski

THE EVOLUTION OF USEFUL THINGS

Henry Petroski is the Aleksandar S. Vesic Professor of Civil Engineering and a professor of history at Duke University. He is the author of more than ten books.

FIRST VINTAGE BOOKS EDITION, FEBRUARY 1994

Copyright © 1992 by Henry Petroski

All rights reserved under International and Pan-American Copyright Conventions. Published in the United States by Vintage Books, a division of Random House, Inc., New York, and simultaneously in Canada by Random House of Canada Limited, Toronto. Originally published in hardcover by Alfred A. Knopf, Inc., New York, in 1992.

Some of this material first appeared, in different form, in American Heritage of Invention and Technology, Technology Review, Wigwag, and Wilson Quarterly.

Grateful acknowledgment is made to the following for permission to reprint previously published material:

Barrie & Jenkins: Excerpts from The Nature and Aesthetics of Design by David Pye (Barrie & Jenkins, London, 1978). Reprinted by permission of Barrie & Jenkins, a division of The Random Century Group Limited.

Caliban Books: Excerpt from William Smith, Potter and Farmer, 1790–1858 by George Sturt (Caliban Books, London, 1978). Reprinted by permission.

HarperCollins Publishers Inc.: Excerpts from Etiquette: The Blue Book of Social Usage by Emily Post. Copyright © 1965 by Funk and Wagnalls Co., Inc. Reprinted by permission of HarperCollins Publishers Inc.

Harvard University Press: Excerpts from Notes on the Synthesis of Form by Christopher Alexander (Harvard University Press, 1964). Reprinted by permission.

Library of Congress Cataloging-in-Publication Data

Petroski, Henry.

The evolution of useful things / Henry Petroski.—1st Vintage Books ed.

p. cm.

Originally published: New York: A. Knopf, 1992.

eISBN: 978-0-307-77305-0

1. Inventions. 2. Patents. I. Title.

T212.P465 1994

609—dc20 93-6351

Author photograph © Catherine Petroski

Contents

1 How the Fork Got Its Tines

2 Form Follows Failure

3 Inventors as Critics

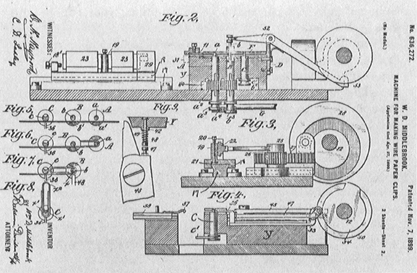

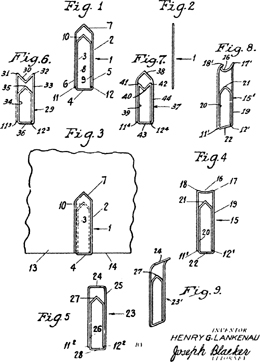

4 From Pins to Paper Clips

5 Little Things Can Mean a Lot

6 Stick Before Zip

7 Tools Make Tools

8 Patterns of Proliferation

9 Domestic Fashion and Industrial Design

10 The Power of Precedent

11 Closure Before Opening

12 Big Bucks from Small Change

13 When Good Is Better Than Best

14 Always Room for Improvement

Preface

Other than the sky and some trees, everything I can see from where I now sit is artificial. The desk, books, and computer before me; the chair, rug, and door behind me; the lamp, ceiling, and roof above me; the roads, cars, and buildings outside my window, all have been made by disassembling and reassembling parts of nature. If truth be told, even the sky has been colored by pollution, and the stand of trees has been oddly shaped to conform to the space allotted by development. Virtually all urban sensual experience has been touched by human hands, and thus the vast majority of us experience the physical world, at least, as filtered through the process of design.

Given that so much of our perception involves made things, it is reasonable to ask how they got to look the way they do. How is it that an artifact of technology has one shape rather than another? By what process do the unique, and not-so-unique, designs of manufactured goods come to be? Is there a single mechanism whereby the tools of different cultures evolve into distinct forms and yet serve the same essential function? To be specific, can the development of the knife and fork of the West be explained by the same principle that explains the chopsticks of the East? Can any single theory explain the shape of a Western saw, which cuts on the push stroke, as readily as an Eastern one, which cuts on the pull? If form does not follow function in any deterministic way, then by what mechanism do the shapes and forms of our made world come to be?

Such are the questions that have led to this book. It extends an exploration of engineering that I began in To Engineer Is Human, which dealt mainly with understanding why made things break, and that I continued in The Pencil, which traced the evolution of a single artifact through the cultural, political, and technological vicissitudes of history. Here I have focused not on the physical failings of any single thing but, rather, on the implications of failure—whether physical, functional, cultural, or psychological—for form generally. This extended essay, which may be read as a refutation of the design dictum that “form follows function,” has led to considerations that go beyond things themselves to the roots of the often ineffable creative processes of invention and design.

As artifacts evolve from artifacts, so do books from books. In writing this one, I have once again benefited from the physical and intellectual resources of many libraries and librarians. As always, I acknowledge Eric Smith, head of Duke University’s Vesic Engineering Library, who remains ever-patient in the face of my frequently vague requests for often obscure sources, and even pursues avenues of information I would never have dreamed of following. Stuart Basefsky, of the Public Documents Department in Duke’s Perkins Library, helped me get oriented in patent literature, which proved to be so important for my case, and the patent repository of the D. H. Hill Library of North Carolina State University graciously filled my numerous requests for documents. Several manufacturers, by freely providing their company histories, catalogues, and ephemera, enabled me to read beyond library walls and find invaluable documentation of things as they have been and are. Also, many friends, readers, and collectors generously shared with me art, facts, and artifacts that have found their way into my work. Where I have remembered my debts, I have acknowledged them in the notes at the end of this volume.

Correspondence and conversations with inventors and designers over the years have certainly shaped the ideas in this book, but, as in so much invention and design, individual contributions must necessarily remain largely anonymous, because they have become so threaded into the fabric of the work that to try to pick out even the most conspicuous of them would but lead to a lot of loose ends. Where practitioners have written or spoken for the record, their works are referenced in my bibliography, as are all those in which I can recall having read support for my thesis. By their example and encouragement, certain writers, engineers, and historians of technology have been especially instrumental in influencing this book, and I must single out Freeman Dyson, Eugene Ferguson, Melvin Kranzberg, and Walter Vincenti for their support.

A book naturally takes time and space to write, and I am indebted to a fellowship from the John Simon Guggenheim Memorial Foundation for the former and to a carrel in Perkins Library for the latter. I am grateful to my supportive editor, Ashbel Green, and to the many others at Alfred A. Knopf who have read the manuscript with pencils of various colors and in other ways prepared it for the press. Whatever shortcomings remain are naturally my responsibility. Finally, my family once again understood my need to think and read at home each evening, and they quietly and constantly added to my store of examples by leaving interesting thing after interesting thing, from the broken to the bizarre, on my desk. I am, as always, grateful to Stephen, to Karen, who indexed this book, and especially to Catherine, who read the book for me at each stage of its evolution.

William R. Perkins Library

Duke University

April 1992

1

How the Fork Got Its Tines

The eating utensils that we use daily are as familiar to us as our own hands. We manipulate knife, fork, and spoon as automatically as we do our fingers, and we seem to become conscious of our silverware only when right- and left-handers cross elbows at a dinner party. But how did these convenient implements come to be, and why are they now so second-nature to us? Did they appear in some flash of genius to one of our ancestors, who yelled “Eureka!,” or did they evolve as naturally and quietly as did the parts of our bodies? Why is Western tableware so alien to Eastern cultures, and why do chopsticks make our hands all thumbs? Are our eating utensils really “perfected,” or is there room for improvement?

Such questions that arise out of table talk can serve as paradigms for questions about the origins and evolution of all made things. And seeking answers can provide insight into the nature of technological development generally, for the forces that have shaped place settings are the same that have shaped all artifacts. Understanding the origins of diversity in pieces of silverware makes it easier to understand the diversity of everything from bottles, hammers, and paper clips to bridges, automobiles, and nuclear-power plants. Delving into the evolution of the knife, fork, and spoon can lead us to a theory of how all the things of technology evolve. Exploring the tableware that we use every day, and yet know so little about, provides as good a starting point for a consideration of the interrelated natures of invention, innovation, design, and engineering as we are likely to find.

Some writers have been quite unequivocal about the origins of things. In their Picture History of Inventions, Umberto Eco and G. B. Zorzoli state flatly that “all the tools we use today are based on things made in the dawn of prehistory.” And in his Evolution of Technology, George Basalla posits as fundamental that “any new thing that appears in the made world is based on some object already there.” Such assertions appear to be borne out in the case of eating utensils.

Certainly our earliest ancestors ate food, and it is reasonable to ask how they ate it. At first, no doubt, they were animals as far as their table manners were concerned, and so we can assume that the way we see real animals eat today gives us clues as to how the earliest people ate. They would use their teeth and nails to tear off pieces of fruits, vegetables, fish, and meat. But teeth and nails can only do so much; they alone are generally not strong enough or sharp enough to render easily all things edible into bite-sized pieces.

The knife is thought to have had its origins in shaped pieces of flint and obsidian, very hard stone and rock whose fractured edges can be extremely sharp and thus suitable to scrape, pierce, and cut such things as vegetable and animal flesh. How the efficacious properties of flints were first discovered is open to speculation, but it is easy to imagine how naturally fractured specimens may have been noticed by early men and women to be capable of doing things their hands and fingers could not. Such a discovery could have occurred, for example, to someone walking barefoot over a field and cutting a foot on a shard of flint. Once the connection between accident and intention was made, it would have been a matter of lesser innovation to look for other sharp pieces of flint. Failing to find an abundance of them, early innovators might have engaged in the rudiments of knapping, perhaps after noticing the naturally occurring fracture of falling rocks.

In time, prehistoric people must have come to be adept at finding, making, and using flint knives, and they would naturally also have discovered and developed other ingenious devices. With fire came the ability to cook food, but even meat that had been delicately cut into small pieces could barely be held over a fire long enough to warm it, let alone cook it, and sticks may have come to be used in much the same way as children today roast marshmallows. Pointed sticks, easily obtained in abundance from nearby trees and bushes, could have been used to keep an individual’s fingers from being cooked with dinner. But larger pieces of meat, if not the whole animal, would more likely first have been roasted on a larger stick. Upon being removed from the fire, the roast could be divided among the diners, perhaps by being scored first with a flint knife. Those around the fire could then pick warm pieces of tender meat off the bone with pointed sticks, or resort to their fingers.

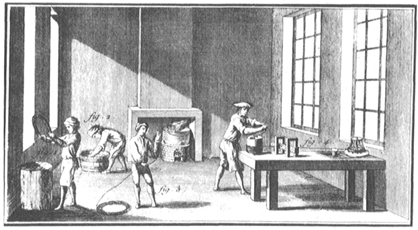

This damascened blade of a thousand-year-old Saxon scramasax is inscribed, “Gebereht owns me.” Early knives were proud personal possessions and they served many functions; the pointed blade not only could pierce the flesh of an enemy but also could spear pieces of food and convey them to the mouth. This knife’s long-missing handle may have been made of wood or bone. (photo credit 1.1)

From the separate implements of sharp-edged flint for cutting and sharp-pointed stick for spearing evolved the single implement of a knife that would be easily recognized as such today. By ancient times, knives were being made of bronze and iron, with handles of wood, shell, and horn. The applications of these knives were multifarious, as tools and weapons as well as dining implements, and in Saxon England a knife known as a “scramasax” was the constant companion of its owner. Whereas common folk still ate mostly with their teeth and fingers, tearing meat from the bone with abandon, more refined people came to employ their knives in some customary ways. In the politest of circumstances, the dish being sliced might have been held steady by a crust of bread, with the knife being used also to spear the morsel and convey it to the mouth, thus keeping the fingers of both hands clean.

I first experienced what it is like to eat with only a single knife some years ago in Montreal, in a setting that might best be described as participatory dinner theater. The Festin du Gouverneur took place in an old fort, and a hundred or so of us sat at long bare wooden tables set parallel to three sides of a small stage. At each place were a napkin and a single knife, with which we were expected to eat our entire meal, which consisted of roast chicken, potatoes, carrots, and a roll. It was relatively easy to deal with the firm carrots and potatoes, for pieces of them could be sheared off with the knife blade, speared on its point, and put neatly in the mouth. However, I had considerable trouble just cutting off pieces of chicken. At first I tried to steady it with my roll, but it was soft to begin with and soon became crumbly and soggy. I had to resort to eating the chicken with my fingers. What I remember most about the experience was how greasy my fingers felt for the rest of the evening. How convenient and more civilized it would have been at least to have had a second knife.

My only other experience eating with a single knife occurred at a barbecue restaurant popular with the students and faculty of Texas A & M University. I had been visiting the campus, and for a light dinner before I caught my plane back to North Carolina one of my hosts thought I might enjoy trying what he described as real barbecue—Texas beef instead of the pork variety I had come to know and love in the Southeast. I ordered a small portion of the house specialty, and the waitress brought me several slices of beef brisket, two whole cooked onions, a fat dill pickle, a good-sized wedge of cheddar cheese, and two slices of white bread, all wrapped in a large piece of white butcher paper, which when opened up served as both plate and place mat. On the paper was set a very sharply pointed butcher knife with a bare wooden handle.

I followed the lead of the Aggies I was with and picked up a piece of brisket with the point of the knife and laid it atop a piece of bread. (In medieval times, the piece of bread, called a “trencher,” would have been four days old to give it some stiffness and body, the better to hold the meat and sauce.) We proceeded to cut off bite-sized pieces of this open-faced sandwich, and everything else set before us, and it all was delicious. The single knife worked well, because it was very sharp and could be pressed through the firm food, which itself did not slip much on the paper. However, I was quite distracted throughout the meal by my host, who used his knife so casually that I feared any minute he would cut his lip or worse. He also kept me a bit uneasy with his jokingly expressed hope that no one would come up behind us and give us a good pat on the back just as we were putting our knives into our mouths.

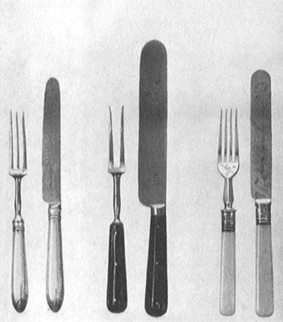

Eating a meal with two knives might seem to have been doubly crude and dangerous, but in its time it was thought of as the height of refinement. For the most formal dining in the Middle Ages, a knife was grasped in each hand. For a right-handed person the knife in the left hand held the meat steady while the knife in the right hand sliced off an appropriately sized piece. This piece was then speared and conveyed to the mouth on the knife’s tip. Eating with two knives represented a distinct advance in table manners, and the adept diner must have manipulated a pair of knives as readily as we do a knife and fork today.

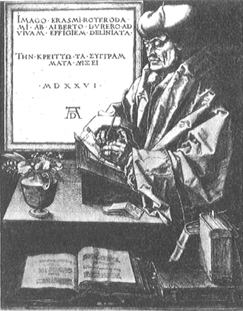

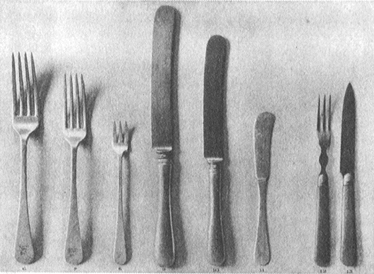

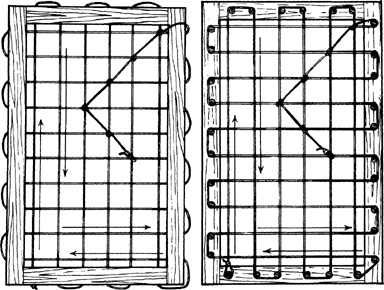

Knives, like all artifacts, have over time been subject to the vagaries of style and fashion, especially in the more decorative aspects of their handles. These English specimens date (left to right) from approximately 1530, 1530, 1580, 1580, 1630, and 1633, and they show that in one form or another the functional tip of the knife remained a constant feature until the introduction of the fork provided an alternate means of spearing food. (photo credit 1.2)

By using one knife to steady the roast in the middle of the table while the other knife cut off a slice, the diners could help themselves without touching the common food. But a sharp, pointed knife is not a very good holding device, as we can easily learn by trying to eat a T-bone with a steak knife in each hand. If the holding knife is to press the steak against the plate, we must use considerable effort to keep it in place, and this can become tiring; if the holding knife is to spear the steak, we will soon find it rotating in place like a wheel on an axle. As a result, using the fingers to steady food being cut was not uncommon.

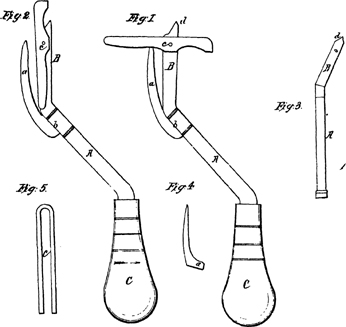

Frustrations with knives, especially their shortcomings in holding meat steady for cutting, led to the development of the fork. While ceremonial forks were known to the Greeks and Romans, they apparently had no names for table forks, or at least did not use them in their writings. Greek cooks did have a “flesh-fork … to take meat from a boiling pot,” and this kitchen utensil “had a resemblance to the hand, and was used to prevent the fingers from being scalded.” Ancient forklike tools also included the likes of hay forks and Neptune’s trident, but forks are assumed not to have been used for dining in ancient times.

The first utilitarian food forks had two prongs or tines, and were employed principally in the kitchen and for carving and serving. Such forks pierced the meat like a pointed knife, but the presence of two tines kept the meat from moving and twisting too easily when a piece was being sliced off. Although this advantage must also have been recognized in prehistoric eras, when forked sticks were almost as easy to come by as straight ones for skewering meat over the fire, the fork as an eating utensil was a long time in coming. It is believed that forks were used for dining in the royal courts of the Middle East as early as the seventh century and reached Italy around the year 1100. However, they did not come into any significant service there until about the fourteenth century. The inventory of Charles V of France, who reigned from 1364 to 1380, listed silver and gold forks, but with an explanation “that they were only used for eating mulberries and foods likely to stain the fingers.” Table forks for conveying a variety of foods to the mouth moved westward to France with Catherine de Médicis in 1533, when she married the future King Henry II, but the fork was thought to be an affectation, and those who lost half their food as it was lifted from plate to mouth were ridiculed. It took a while for the new implement to gain widespread use among the French.

Not until the seventeenth century did the fork appear in England. Thomas Coryate, an Englishman who traveled in France, Italy, Switzerland, and Germany in 1608, published three years later an account of his adventures in a book entitled, in part, Crudities Hastily Gobbled Up in Five Months. At that time, when a large piece of meat was set on a table in England, the diners were still expected to partake of this main dish by slicing off a portion each while holding the roast steady with the fingers of their free hand. Coryate saw it done differently in Italy:

I observed a custom in all those Italian cities and towns through which I passed, that is not used in any other country that I saw in my travels, neither do I think that any other nation of Christendom doth use it, but only Italy. The Italian, and also most strangers that are commorant in Italy, do always at their meals use a little fork when they cut their meat. For while with their knife which they hold in one hand they cut the meat out of the dish, they fasten the fork, which they hold in their other hand, upon the same dish; so that whatsoever he be that sitting in the company of any others at the meal, should unadvisedly touch the dish of meat with his fingers from which all at the table do cut, he will give occasion of offense unto the company, as having transgressed the laws of good manners, insomuch that for his error he shall be at least brow beaten if not reprehended in words. This form of eating I understand is generally used in all places of Italy; their forks being for the most part made of iron or steel, and some of silver, but those are used only by gentlemen. The reason of this their curiosity is, because the Italian cannot by any means indure to have his dish touched with fingers, seeing all men’s fingers are not alike clean. Hereupon I myself thought to imitate the Italian fashion by this forked cutting of meat, not only while I was in Italy, but also in Germany, and oftentimes in England since I came home.

Coryate was jokingly called “Furcifer,” which meant literally “fork bearer,” but which also meant “gallows bird,” or one who deserved to be hanged. Forks spread slowly in England, for the utensil was much ridiculed as “an effeminate piece of finery,” according to the historian of inventions John Beckmann. He documented further the initial reaction to the fork by quoting from a contemporary dramatist who wrote of a “fork-carving traveller” being spoken of “with much contempt.” Furthermore, no less a playwright than Ben Jonson could get laughs for his characters by questioning, in The Devil Is an Ass, first produced in 1616,

The laudable use of forks,

Brought into custom here as they are in Italy,

To the sparing of napkins.

But the new fashion was soon being taken more seriously, for Jonson could also write, in Volpone, “Then must you learn the use and handling of your silver fork at meals.”

Putting aside acceptance and custom, what makes the fork work, of course, are its tines. But how many tines make the best fork, and why? Something with a single tine is hardly a fork, and would be no better than a pointed knife for spearing and holding food. The toothpicks at cocktail parties may be considered, like sharpened sticks, rudimentary forks, but most of us have experienced the frustrations of manipulating a toothpick to pick up a piece of shrimp and dip it in sauce. If the shrimp does not fall off, it rotates in the sauce cup. If the shrimp does not drop into the cup, we must contort our hand to hold the toothpick, shrimp, and dripping sauce toward the vertical while trying to put the hors d’oeuvre on our horizontal tongue. The single-tined fork is not generally an instrument of choice, but that is not to say it does not have a place. Butter picks are really single-tined forks, but, then, we do want a butter pick to release the butter easily. Escargot and nut picks might also be classified as single-pronged forks, but, then, there is hardly room for a second tine in a snail’s snug spiral or a pecan shell’s interstices.

The two-pronged fork is ideal for carving and serving, for a roast can be held in place without rotating, and the fork can be slid in and out of the meat relatively easily. The implement can be moved along the roast with little difficulty and can also convey slices of meat from carving to serving platter with ease. The carving fork functions as it was intended, leaving little to be desired, and so it has remained essentially unchanged since antiquity. But the same is not true of the table fork.

As the fork grew in popularity, its form evolved, for its shortcomings became evident. The earliest table forks, which were modeled after kitchen carving forks, had two straight and longish tines that had developed to serve the principal function of holding large pieces of meat. The longer the tines, the more securely something like a roast could be held, of course, but longish tines are unnecessary at the dining table. Furthermore, fashion and style dictated that tableware look different from kitchenware, and so since the seventeenth century the tines of table forks have been considerably shorter and thinner than those of carving forks.

In order to prevent the rotation of what was being held for cutting, the two tines of the fork were necessarily some distance apart, and this spacing was somewhat standardized. However, small loose pieces of food fell through the space between the tines and thus could not be picked up by the fork unless speared. Furthermore, the very advantage of two tines for carving meat, their ease of removal, made it easy for speared food to slip off early table forks. Through the introduction of a third tine, not only could the fork function more efficiently as something like a scoop to deliver food to the mouth, but also food pierced by more tines was less likely to fall off between plate and mouth.

If three tines were an improvement, then four were even better. By the early eighteenth century, in Germany, four-tined forks looked as they do today, and by the end of the nineteenth century the four-tined dinner fork became the standard in England. There have been five- and six-tined forks, but four appears to be the optimum. Four tines provide a relatively broad surface and yet do not feel too wide for the mouth. Nor does a four-tined fork have so many tines that it resembles a comb, or function like one when being pressed into a piece of meat. Wilkens, the German silversmith, does make a modern five-tined dinner fork, but it appears to have been designed more for fashion than function, since the pattern (called Epoca) is marketed as being “unique in its entirety and in every detail” and “full of generous, massive strength.” The fork’s selling point seems to be its unusual appearance rather than its effectiveness for eating. Many contemporary silverware patterns have three-tined dinner forks for similar reasons, but some go so far in rounding and tapering the tines, thus softening the lines of the fork, that it is almost impossible to pick up food with it.

The evolution of the fork in turn had a profound impact on the evolution of the table knife. With the introduction of the fork as a more efficient spearer of food, the pointed knife tip became unnecessary. But many artifacts retain nonfunctional vestiges of earlier forms, and so why did not the knife? The reason appears to be at least as much social as technical. When everyone carried a personal knife not only as a singular eating utensil but also as a tool and a defensive weapon, the point had a purpose well beyond the spearing of food. Indeed, many a knife carrier may have preferred to employ his fingers for lifting food to his mouth rather than the tip of his most prized possession. According to Erasmus’s 1530 book on manners, it was not impolite to resort to fingers to help yourself from the pot as long as you “use only three fingers at most” and you “take the first piece of meat or fish that you touch.” As for the knife, the young were admonished, “Don’t clean your teeth with your knife.” A French book of advice to students recognized the implicit threat involved in using a weapon at the table, and instructed its readers to place the sharp edge of their knife facing toward themselves, not their neighbor, and to hold it by its point in passing it to someone else. Such customs have influenced how today’s table is set and how we are expected to behave at it. In Italy, for example, when one is eating with a fork alone, it is correct to rest the free hand in full view on the table edge. Though this might be considered poor manners in America, the custom is believed to have originated in the days when the visible hand showed one’s fellow diners that no weapon was being held in the lap.

It is said to have been Cardinal Richelieu’s disgust with a frequent dinner guest’s habit of picking his teeth with the pointed end of his knife that drove the prelate to order all the points of his table knives ground down. In 1669, as a measure to reduce violence, King Louis XIV made pointed knives illegal, whether at the table or on the street. Such actions, coupled with the growing widespread use of forks, gave the table knife its now familiar blunt-tipped blade. Toward the end of the seventeenth century, the blade curved into a scimitar shape, but this contour was to be modified over the next century to become less weaponlike. The blunt end became more prominent, not merely to emphasize its bluntness but, since the paired fork was likely to be two-tined and so not an efficient scoop, to serve as a surface onto which food might be heaped for conveying to the mouth. Peas and other small discrete foods, which had been eaten by being pierced one by one with a knife point or a fork tine, could now be eaten more efficiently by being piled on the knife blade, whose increasingly backward curve made it possible to insert the food-laden tip into the mouth with less contortion of the wrist. During this time, the handles on some knife-and-fork sets became pistol-shaped, thus complementing the curve of the knife blade but making the fork look curiously asymmetrical.

With the beginning of the nineteenth century, English table-knife blades came to be made with nearly parallel straight sides, perhaps in part as a consequence of the introduction of steam power during the Industrial Revolution and the economy of process in forming this shape out of ingots, but perhaps even more because the fork had evolved into the scooper and shoveler of food, and the knife was to be reserved for cutting. The blunt-nosed straight-bladed knife, which was often more efficient as a spreading than a cutting utensil, remained in fashion throughout the nineteenth century. However, unless the cutting edge of the blade extended some distance below the line of the handle around which the fingers curled, only the tip of the blade was fully practical for cutting and slicing. This shortcoming caused the knife’s bottom edge to evolve into the convex shape of most familiar table knives of today. The top edge serves no purpose other than stiffening the blade against bending, and since this has not been found to be wanting, there has been essentially no change in the shape of that edge of the knife for two centuries.

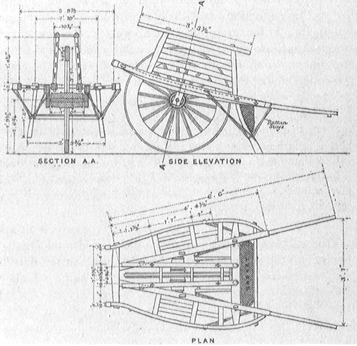

Early two-tined forks worked well for holding meat being cut but were not useful for scooping up peas and other loose food. The bulbous tip of the knife blade evolved to provide an efficient means of conveying food to the mouth, with the curve of the blade reducing the amount of wrist contortion needed to use the utensil thus. These English sets date (left to right) from approximately 1670, 1690, and 1740. (photo credit 1.3)

With the introduction of three- and four-tined forks, the latter sometimes called “split spoons,” it was no longer necessary or fashionable to use the knife as a food scoop, and so its bulbous curved blade reverted to more easily manufactured shapes. However, habit and custom persisted at the dinner table, and the functionally inefficient knife was used throughout the nineteenth century by less refined diners for putting food in the mouth. Left to right, these sets date from about 1805, 1835, and 1880. (photo credit 1.4)

Whereas the shapes of table knives have evolved to remove their existing failings and shortcomings, kitchen knives have changed little over the centuries. Their blades have remained pointed, the shape into which they naturally evolved by successive correction of faults from flint shards. The inadequacy of the common table knife to be all things to all people is emphasized when we eat a food like steak. Since the table knife is generally not sharp-pointed enough to work its way in tight curves around pieces of gristle and bone, we are brought special implements that are more suited to the task at hand. Cutting up a steak is very much like kitchen work, and so the steak knife has evolved back from the table knife to look like a kitchen knife.

The modern table knife and fork have evolved through a kind of symbiotic relationship, but the general form of the spoon has developed more or less independently. The spoon is sometimes claimed to be the first eating utensil, since solid food could easily be eaten with the bare fingers and the knife is thought to have had its beginnings as a tool or weapon rather than as an eating utensil per se. It is reasonable to assume that the cupped hand was the first spoon, but we all know how inefficient it can be. Empty clam, oyster, or mussel shells can be imagined to have been spoons, with distinct advantages over the cupped hand or hands. Shells could hold liquid longer than cramping hands, and they enabled the latter to be kept clean and dry. But shells have their own shortcomings. In particular, it is not easy to fill a shell from a bowl of liquid without getting the fingers wet, and so a handle would naturally have been added. Spoons formed out of wood could incorporate a handle integrally, and the very word “spoon” comes from the Anglo-Saxon “spon,” which designated a splinter or chip of wood. With the introduction of metal casting to make spoons, the shape of bowls was not limited to those occurring in nature and thus could evolve freely in response to real or perceived shortcomings, and to fashion. But even having been shaped, from the fourteenth century to the twentieth, successively round, triangular (with the handle at the apex, sometimes said to be fig-shaped), elliptical, elongated triangular (with the handle at the base), ovoid, and elliptical, the bowl of the spoon has never been far from the shape of a shell.

The use of the knife, fork, and spoon in late-seventeenth- and early-eighteenth-century Europe has influenced some persistent differences in their use by Europeans and Americans today. The introduction of the fork produced an asymmetry in tableware, and the question of which implement a diner’s right and left hand held could no longer be considered moot. With identical knives in each hand, the diner was able to cut and carry food to the mouth with either knife, but, whether by custom or natural inclination, right-handedness may be assumed always to have prevailed, and so the knife in the right hand not only performed the cutting, which took much more dexterity than merely holding the meat steady on the plate, but also speared the cut-off morsel to convey it to the mouth. Because it did not need to be pointed, the left-hand knife was sometimes blunt-ended and used as a spatula to scoop up looser food or slices of meat. When the fork gained currency, it displaced the noncutting and relatively passive knife in the left hand, and in time the function of the knife in the right hand changed. With its point blunted, it was used only as a cutter and shoveler, and the fork held meat that was being cut and speared it for lifting to the mouth, a relatively easy motion with the left hand, even for a right-handed person.

By the eighteenth century, the European style of using utensils had become somewhat standardized, with the knife in the right hand cutting off food and sometimes also pushing pieces of it onto the fork, which conveyed it to the mouth. Since the first forks were straight-tined, there was no front or back to them, but shortcomings of this ambiguous design soon became evident. Whether food was skewered on or placed across the tines of the fork, the fork had to be brought to a near-horizontal position to enter the mouth with the least chance of its tines’ piercing the roof of the mouth or the food’s falling off. With slightly curved tines, and with food placed on their convex side, the fork handle did not have to be lifted so high to convey the food quickly and safely to the mouth. Furthermore, the arching tines enabled the fork to pierce a piece of meat squarely, yet curved out of the way so that diners could see clearly what they were cutting. By the middle of the eighteenth century, gently curving tines were standard on English forks, thus giving them distinct fronts and backs.

But the fork was a rare item in colonial America. According to one description of everyday life in the Massachusetts Bay Colony, the first and only fork in the earliest days, carefully preserved in its case, had been brought over in 1630 by Governor Winthrop. In seventeenth-century America, “knives, spoons, and fingers, with plenty of napery, met the demands of table manners.” As the eighteenth century dawned, there were still few forks. Furthermore, since knives imported from England had ceased to come with pointed tips, they could not be employed to spear food and convey it to the mouth.

How the present American use of the knife and fork evolved does not seem to be known with certainty, but it has been the subject of much speculation. Without forks, the more refined colonists can be assumed to have handled a knife and spoon at the dinner table. Indeed, using an older, pointed knife and spoon, a “spike and spon,” to keep the fingers from touching food may have given us the phrase “spic and span” to connote a high standard of cleanliness. How the blunted spike and spon influenced today’s knife and fork has been suggested by the archaeologist James Deetz, who has written of Early American life in his evocative In Small Things Forgotten. (The phrase is taken from colonial probate records, where it referred to the completion of an accounting of an estate’s items by grouping together the small and trivial things whose individual intrinsic value did not warrant a separate accounting. Forks themselves would never have been lumped with “small things forgotten,” but still the way knives, forks, or spoons were actually used seems not to have been recorded.)

According to Deetz, in the absence of forks some colonists took to holding the spoon in the left hand, bowl down, and pressing a piece of meat against the plate so that they could cut off a bite with the knife in the right hand. Then the knife was laid down and the spoon transferred from the left to the generally preferred hand, being turned over in the process, to scoop up the morsel and transfer it to the mouth (the rounded back of a spoon being ill suited to pile food upon). When the fork did become available in America, its use replaced that of the spoon, and so the customary way of eating with a knife and spoon became the way to eat with a knife and fork. In particular, after having used the knife to cut, the diner transferred the fork from the left to the right hand, turning it over in the process, to scoop up the food for the mouth, for the spoonlike scooping action dictated that the fork have the tines curving upward. This theory is supported by the fact that when the four-tined fork first appeared in America it was sometimes called a “split spoon.” The action of passing the fork back and forth between hands, a practice that Emily Post termed “zigzagging” and contrasted to the European “expert way of eating,” persists to this day as the American style.

In America as elsewhere, however, well into the nineteenth century table manners and tableware remained far from uniform. Though “etiquette manuals appeared in unprecedented numbers,” as late as 1864 Eliza Leslie could still declare in her Ladies’ Guide to True Politeness and Perfect Manners that “many persons hold silver forks awkwardly, as if not accustomed to them.” Frances Trollope described among the diners on a Mississippi River steamboat in 1828 some “generals, colonels, and majors” who had “the frightful manner of feeding with their knives, till the whole blade seemed to enter the mouth.” And since the feeding knife was apparently blunt-tipped, the diners had to clean their teeth with pocket knives afterward. Just a generation later, the experiences of Mrs. Trollope’s son, Anthony, were quite different. Dining in a Lexington, Kentucky, hotel in 1861, he observed not officers but “very dirty” teamsters who nevertheless impressed him by being “less clumsy with their knives and forks … than … Englishmen of the same rank.”

On an American tour in 1842, Charles Dickens noted that fellow passengers on a Pennsylvania canal boat “thrust the broad-bladed knives and the two-pronged forks further down their throats than I ever saw the same weapons go before, except in the hands of a skilled juggler.” The growing use of the fork displaced the knife from the mouth, but the new fashion was not without its dissenters, who likened eating peas with a fork to “eating soup with a knitting needle.” With its multiplying tines and uses, however, the fork was to become the utensil of choice, and by the end of the nineteenth century a refined person could eat “everything with it except afternoon tea.” It was just such a menu of applications for a single utensil that led to specialized descendants like fish and pastry forks, as we shall see later in this book.

European and American styles of eating with knife and fork are not the only ways civilized human beings have solved the design problem of getting food from the table to the mouth. Indeed, as Jacob Bronowski pointed out, “A knife and fork are not merely utensils for eating. They are utensils for eating in a society in which eating is done with a knife and fork. And that is a special kind of society.” To this day, some Eskimos, Africans, Arabs, and Indians eat with their fingers, observing ages-old customs of washing before and after the meal. But even Westerners sometimes eat with their fingers. The American hamburger and hot dog are consumed without the aid of utensils, with the bun keeping the fingers from becoming greasy. Tacos may be less easy to eat, but the shell—reminiscent of the first food containers—keeps the greasier food from soiling the fingers, at least in principle. Such foods demonstrate alternative technological ways of achieving the same cultural objective.

In the Far East, chopsticks developed about five thousand years ago as extensions of the fingers. According to one theory of their origin, food was cooked in large pots, which held the heat long after everything was ready to be eaten. Hungry people burned their fingers reaching into the pot early to pull out the choicest-looking morsels, and so they sought alternatives. Grasping the morsels with a pair of sticks protected the fingers, or so one tradition has it. Another version credits Confucius with advising against the use of knives at the table, for they would remind the diners of the kitchen and the slaughterhouse, places the “honorable and upright man keeps well away from.” Thus Chinese food has traditionally been prepared in bite-sized pieces or cooked to sufficient tenderness so that pieces could be torn apart with the chopsticks alone.

Just as Western eating utensils evolved in response to real and perceived shortcomings, so a characteristic form of modern chopsticks, rounded at the food end and squarish at the end that fits in the hand, no doubt evolved over the course of time because rounded sticks taken from nature left something to be desired. Whereas any available twigs may have served well the function of grasping food from a common pot, they would not have seemed so appropriate for dining in more formal settings. The obvious way to imitate twigs to make better chopsticks would be to form wood into straight, round rods of the desired size. But such an apparent improvement might also have highlighted shortcomings overlooked in the cruder implements. Finely shaped chopsticks that were of the same diameter at both the food and the finger ends might prove to be too thick to tear apart certain foods easily, or too thin to be comfortable during a longish meal. Thus, it would have been an obvious further improvement to make the sticks tapered, with the different ends becoming fixed at compromise sizes that made them function better for both food and hand. Whether uniform or tapered, however, round chopsticks would tend to twist in the fingers and roll off the table, and so squaring one end eliminated two annoyances in what is certainly a brilliant design.

Putting implements as common as knife and fork and chopsticks into an evolutionary perspective, tentative as it necessarily must be, gives a new slant to the concept of their design, for they do not spring fully formed from the mind of some maker but, rather, become shaped and reshaped through the (principally negative) experiences of their users within the social, cultural, and technological contexts in which they are embedded. The formal evolution of artifacts in turn has profound influences on how we use them.

Imagining how the form of things as seemingly simple as eating utensils might have evolved demonstrates the inadequacy of a “form follows function” argument to serve as a guiding principle for understanding how artifacts have come to look the way they do. Reflecting on how the form of the knife and fork has developed, let alone how vastly divergent are the ways in which Eastern and Western cultures have solved the identical design problem of conveying food to mouth, really demolishes any overly deterministic argument, for clearly there is no unique solution to the elementary problem of eating.

What form does follow is the real and perceived failure of things as they are used to do what they are supposed to do. Clever people in the past, whom we today might call inventors, designers, or engineers, observed the failure of existing things to function as well as might be imagined. By focusing on the shortcomings of things, innovators altered those items to remove the imperfections, thus producing new, improved objects. Different innovators in different places, starting with rudimentary solutions to the same basic problem, focused on different faults at different times, and so we have inherited culture-specific artifacts that are daily reminders that even so primitive a function as eating imposes no single form on the implements used to effect it.

The evolution of eating utensils provides a strong paradigm for the evolution of artifacts generally. There are clearly technical components to the story, for even the kind of wood in chopsticks or the kind of metal in knives and forks will have a serious impact on the way the utensils can be formed and can carry out their functions. Technological advances can have far-reaching implications for the manner of manufacture and use of utensils, as the introduction of stainless steel did for tableware, which in turn can affect their price and availability across broad economic classes of people. But the stories associated with knives, forks, and spoons also illustrate well how interrelated are technology and culture generally. The form, nature, and use of all artifacts are as influenced by politics, manners, and personal preferences as by that nebulous entity, technology. And the evolution of the artifacts in turn has profound influences on manners and social intercourse.

But how do technology and culture interact to shape the world beyond the dinner table? Are there general principles whereby all sorts of things, familiar and unfamiliar, evolve into their shapes and sizes and systems? If not in tableware, does form follow function in the genesis and development of our more high-tech designs, or is the alliterative phrase just an alluring consonance that lulls the mind to sleep? Is the proliferation of made things, such as the seemingly endless line of serving pieces that complement a table service, merely a capitalist trick to sell consumers what they do not need? Or do artifacts multiply and diversify in an evolutionary way as naturally as do living organisms, each having its purpose in some wider scheme of things? Is it true that necessity is the mother of invention, or is that just an old wives’ tale? These are among the questions that have prompted this book. In order to begin to answer them, it will be helpful first to look beyond a place setting of examples to rules, and then to illustrate them by an omnivorous selection of further examples. Such is the design problem of this literary artifact.

2

Form Follows Failure

The evolution of the modern knife and fork from flint and stick, and the evolution of the spoon from the cupped hands and shells of eons ago, seem thoroughly reasonable stories. But they are more than stories, constructed after the fact by imaginative social scientists; the way our common tableware has developed to its present form is but a single example of a fundamental principle by which all made things come to look and function the way they do. That principle revolves about our perception of how existing things fail to do what we expect them to do as well and conveniently and economically as we think they should or wish they would. In short, they leave something to be desired.

But whereas the shortcomings of an existing thing may be expressed in terms of a need for improvement, it is really want rather than need that drives the process of technological evolution. Thus we may need air and water, but generally we do not require air conditioning or ice water in any fundamental way. We may find food indispensable, but it is not necessary to eat it with a fork. Luxury, rather than necessity, is the mother of invention. Every artifact is somewhat wanting in its function, and this is what drives its evolution.

Here, then, is the central idea: the form of made things is always subject to change in response to their real or perceived shortcomings, their failures to function properly. This principle governs all invention, innovation, and ingenuity; it is what drives all inventors, innovators, and engineers. And there follows a corollary: Since nothing is perfect, and, indeed, since even our ideas of perfection are not static, everything is subject to change over time. There can be no such thing as a “perfected” artifact; the future perfect can only be a tense, not a thing.

If this hypothesis is universally valid and can explain the evolution of all made things, it must apply to any artifact of which we can think. It must explain the evolution of the zipper no less than the pin; the aluminum can no less than the hamburger package; the suspension bridge no less than Scotch tape. The hypothesis fleshed out must also have the potential for explaining why some of our most everyday things continue to look the way they do in spite of all their obvious shortcomings. It must explain why some things change for the worse, and why those things aren’t made in the good old way. Some background from the writings of inventors and designers and those who think about invention and design can set the stage for the case studies that will test the hypothesis.

The large number of things that have been devised and made by humans throughout the ages has been estimated in some recently published books on the design and evolution of artifacts. Donald Norman, in The Design of Everyday Things, describes sitting at his desk and seeing about him a host of specialized objects, including various writing devices (pencils, ballpoint pens, fountain pens, felt-tip markers, highlighters, etc.), desk accessories (paper clips, tape, scissors, pads of paper, books, bookmarks, etc.), fasteners (buttons, snaps, zippers, laces, etc.), etc. In fact, Norman counted over one hundred items before he tired of the task. He suggests that there are perhaps twenty thousand everyday things that we might encounter in our lives, and he quotes the psychologist Irving Biederman as estimating that there are probably “30,000 readily discriminable objects for the adult.” The number was arrived at by counting the concrete nouns in a dictionary.

George Basalla, in The Evolution of Technology, suggests the great “diversity of things made by human hands” over the past two hundred years by pointing out that five million patents have been issued in America alone. (Not every new thing is patented, of course, and we can get some idea of the enormity of our rearrangement and processing of things by noting that over ten million new chemical substances were registered in the American Chemical Society’s computer data base between 1957 and 1990.) Basalla also notes that, in support of Darwin’s evolutionary theory, biologists have identified and named over one and a half million species of flora and fauna, and thus he concludes that, if each American patent were “counted as the equivalent of an organic species, then the technological can be said to have a diversity three times greater than the organic.” He then introduces the fundamental questions of his study:

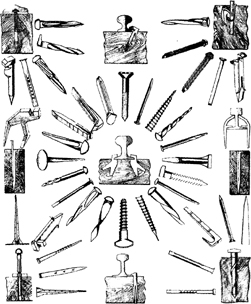

The variety of made things is every bit as astonishing as that of living things. Consider the range that extends from stone tools to microchips, from waterwheels to spacecraft, from thumbtacks to skyscrapers. In 1867 Karl Marx was surprised to learn … that five hundred different kinds of hammers were produced in Birmingham, England, each one adapted to a specific function in industry or the crafts. What forces led to the proliferation of so many variations of this ancient and common tool? Or more generally, why are there so many different kinds of things?

Basalla dismisses the “traditional wisdom” that attributes technological diversity to necessity and utility, and looks for other explanations, “especially ones that can incorporate the most general assumptions about the meaning and goals of life.” He finds that his search “can be facilitated by applying the theory of organic evolution to the technological world,” but he acknowledges that the “evolutionary metaphor must be approached with caution,” because fundamental differences exist between the made world and the natural world. In particular, Basalla admits that, whereas natural things arise out of random natural processes, made things come out of purposeful human activity. Such activity, manifested in psychological, economic, and other social and cultural factors, is what creates the milieu in which novelty appears among continuously evolving artifacts.

Adrian Forty has also reflected on the multitude of made objects. In Objects of Desire, he notes that historians have generally accounted for the diversification of designs in one of two ways. The first explanation, albeit a rather circular one, is that there is an ongoing evolution of new needs created by the development of new designs, such as machinery and appliances that are increasingly complicated and compact. The new designs require new tools for assembly and disassembly, and these new tools in turn enable still further new designs to be realized. The second explanation for the diversity of artifacts is “the desire of designers to express their ingenuity and artistic talent.” Both theories were used by Siegfried Giedion in Mechanization Takes Command, but neither theory, convincing though it may be in explaining particular cases of diversity, covers all cases, as Forty admits.

In mid-nineteenth-century America, for example, there developed a new piece of furniture, the adjustable chair. Giedion’s explanation for the proliferation of designs for such a chair was that it was prompted by the posture of the times, which was based on relaxation, “found in a free, unposed attitude that can be called neither sitting nor lying.” He argued that the development of the new patent furniture was thus in response to a new need, which happily coincided with a concentration of creativity among ingenious designers. But Forty rejects Giedion’s reasoning as overly dependent on coincidence, arguing that “it is most unlikely that after several millennia mankind should suddenly have discovered a new way of sitting in the nineteenth century,” when “designers were no more inventive and ingenious than people at other times.”

Forty dismisses the “functionalist” theory as inadequate to explain the diversity of a less adjustable but more recent example: “Could Montgomery Ward’s 131 different designs of pocket knife be said to be the result of the discovery of new ways of cutting?” And he does not allow that nineteenth-century designers, no matter how ingenious, had the power or autonomy to influence “how many or what type of articles should be made,” although he does agree that designers could determine the form of individual articles. Forty’s own arguments for the multiplication of things like adjustable chairs “place the products of design in a direct relationship to the ideas of the society in which they are made.” In particular, he identifies the capitalists as the proliferators of diversity: “The evidence is that manufacturers themselves made distinctions between designs on the basis of different markets.” Thus there exists a dictionary situation for everyone: designers design, manufacturers manufacture, and diverse consumers consume diversity. This is or is not a nefarious arrangement, depending upon one’s ideology.

Whether or not the world should have diversity, it does, and the question remains as to how individual designs come to be distinguished from related designs. Even if manufacturers are the primary driving force for diversity, what underlying idea governs how a particular product looks? Certainly it was more than economic considerations alone that distinguished one from the other among those 131 knives in the Montgomery Ward’s catalogue, one from the other among those five hundred specialized hammers made in Birmingham. Certainly there were distinctions, but what forces created them?

Neither Norman, Basalla, nor Forty has much to say about a relationship between form and function. The words do not appear in any of their indexes, and we can confidently assume that these authors do not subscribe to the formula “form follows function,” which Forty calls an “aphorism.” Nor does David Pye, who has written very cogently about design. Pye’s books are especially rewarding reading because he lets the reader see how he thinks. He does not just give us the polished fruits of his thought; he also gives us the pits and seeds and cores, so that we may observe what is at the heart of his thinking through a design problem. Not only does he dismiss “form follows function” as “doctrine,” he also ridicules the dictionary definition that function is “the activity proper to a thing.”

According to Pye, “function is a fantasy,” and he italicizes his further assertion that “the form of designed things is decided by choice or else by chance; but it is never actually entailed by anything whatever.” He ridicules the idea that something “looks like that because it has got to be like that,” and equates “purely functional” with terms that to him are pejoratives, such as “cheap” and “streamlined.” He elaborates on his disdain for the idea that “form follows function”:

The concept of function in design, and even the doctrine of functionalism, might be worth a little attention if things ever worked. It is, however, obvious that they do not. Indeed, I have sometimes wondered whether our unconscious motive for doing so much useless work is to show that if we cannot make things work properly we can at least make them presentable. Nothing we design or make ever really works. We can always say what it ought to do, but that it never does. The aircraft falls out of the sky or rams the earth full tilt and kills the people. It has to be tended like a new born babe. It drinks like a fish. Its life is measured in hours. Our dinner table ought to be variable in size and height, removable altogether, impervious to scratches, self-cleaning, and having no legs.… Never do we achieve a satisfactory performance.… Every thing we design and make is an improvisation, a lash-up, something inept and provisional.

Pye is engaging in hyperbole, of course, but all hyperbole has its roots in truth. What is at the root of Pye’s ranting is that nothing is perfect: If a malfunction occurs in one out of a million airline flights, then the aircraft is not perfected in the strictest sense of the word. Only tending to airplanes as if they were newborn babes keeps them well enough maintained to hold accident rates down. The truly perfected airplane would not need maintenance, would fly on little fuel, and would last for centuries, if not longer. And what is wrong with the dinner table? Well, we do have to insert and remove leaves to accommodate our variable-sized dinner parties. We have to situate telephone books to bring the latest generation up to table height. The table does just sit there when we are not using it. Its finish gets scratched, and it gets dirty. And it has legs that restrict our movement up to and away from it. In short, the table, like all designed objects, leaves room for improvement.

In fact, it is just this ubiquitous imperfection that Pye so exaggerates that is the single common feature of all made objects. And it is exactly this feature that drives the evolution of things, for the coincidence of a perceived problem with an imagined solution enables a design change. But such a scenario for the evolution of artifacts should give us ever better designs, yet it does not seem to do so. A resolution of the paradox lies in Pye’s observation that design requirements are always in conflict and hence “cannot be reconciled”:

All designs for devices are in some degree failures, either because they flout one or another of the requirements or because they are compromises, and compromise implies a degree of failure.…

It follows that all designs for use are arbitrary. The designer or his client has to choose in what degree and where there shall be failure. Thus the shape of all things is the product of arbitrary choice. If you vary the terms of your compromise—say, more speed, more heat, less safety, more discomfort, lower first cost—then you vary the shape of the thing designed. It is quite impossible for any design to be “the logical outcome of the requirements” simply because, the requirements being in conflict, their logical outcome is an impossibility.

Thus the common dinner table that Pye had described earlier is a failure because it cannot meet simultaneously all the competing terms of seating two and twelve, seating small children and large adults, possessing an aesthetically pleasing finish that does not scratch or soil, and having legs that hold it up without getting in the way. We can find fault with any common object if we look hard enough at it. But that is not Pye’s goal, nor is it this book’s intention. Rather, the objective here is to celebrate the clever and everyday things of an imperfect world as triumphs in the face of design adversity. We will come to understand why we can speak of “perfected” designs in such an environment, and why one thing follows from another through successive changes, all intended to be for the better.

Few writers have been more explicit about the role of failure in the evolution of artifacts than the architect Christopher Alexander in his Notes on the Synthesis of Form. He makes it abundantly clear that we must look to failure if we ever hope to declare success, and he illustrates the principle with the example of how a metal face can be declared “perfectly” smooth and level. We can ink the face of a standard block that is known to be level and rub it against the face being machined:

If our metal face is not quite level, ink marks appear on it at those points which are higher than the rest. We grind these high spots, and try to fit it against the block again. The face is level when it fits the block perfectly, so that there are no high spots which stand out any more.

A dentist fitting a crown employs a similar technique. Although he does not seek a totally level surface, the dentist does want the new tooth surface to conform to its mate. This is done by having the patient grind something like carbon paper between the teeth to mark those high spots where the crown fails to fit. It is clear from Alexander’s paradigm for realizing the design of an artifact, which to him consists of fitting form to context, that we are able to declare success only when we can find no more points that fail to conform to the standard against which we judge. In general, a successful design, which Alexander terms a good fit between form and context, can be declared only when we can detect no more differences. It is “departures from the norm which stand out in our minds, rather than the norm itself. Their wrongness is somehow more immediate than the rightness.”

Alexander also gives a more everyday example, one that does not require a machine shop or a dentist’s office to re-enact. All we need is a box of buttons that have collected over the years:

Suppose we are given a button to match, from among a box of assorted buttons. How do we proceed? We examine the buttons in the box, one at a time; but we do not look directly for a button which fits the first. What we do, actually, is to scan the buttons, rejecting each one in which we notice some discrepancy (this one is larger, this one darker, this one has too many holes, and so on), until we come to one where we can see no differences. Then we say that we have found a matching one.

This is essentially how a word processor’s spelling-checker program can work. It takes each word in a document in turn and compares it with the words in its dictionary. The logic or software of the program can find a matching word, if any exists, by successively eliminating those that do not match. Words of different length from the one being checked can be eliminated first because they obviously can’t fit letter for letter. Then the remaining words in the dictionary that do not have the same first letter as the word being checked can be eliminated. Of those words remaining, those that do not have the same second letter can be eliminated, and so forth until the last letter in the word being checked is reached. If there remains a word in the dictionary that produces no misfits, then the word whose spelling is being checked can be declared correctly spelled. If all the words in the dictionary are found to be misfits, then the word being checked can be declared misspelled. The success of the program depends fundamentally on the concept of failure. (The logic has several shortcomings, of course, which if not dealt with separately will not catch certain misspelled words and will declare some correctly spelled words misspelled. For example, it will not catch such misspellings as “their” for “there,” because both are valid words in the dictionary.)
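The elimination logic just described can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not a description of how any actual word processor works: the function name `is_spelled_correctly` and the tiny word list are hypothetical, and all words are assumed to be lower-case.

```python
def is_spelled_correctly(word, dictionary):
    """Return True if some dictionary entry survives every elimination step."""
    # First eliminate entries of a different length; they cannot
    # fit the word letter for letter.
    candidates = [entry for entry in dictionary if len(entry) == len(word)]

    # Then eliminate by first letter, second letter, and so on,
    # keeping only the entries that show no misfit so far.
    for position, letter in enumerate(word):
        candidates = [entry for entry in candidates if entry[position] == letter]
        if not candidates:
            # Every entry has been found to be a misfit.
            return False

    # Any entry still remaining matches the word exactly.
    return bool(candidates)


# Hypothetical dictionary, far smaller than a real one.
WORDS = ["their", "there", "these", "fork", "knife"]

print(is_spelled_correctly("there", WORDS))  # True
print(is_spelled_correctly("thier", WORDS))  # False
print(is_spelled_correctly("their", WORDS))  # True, even where "there" was meant
```

The last call shows the shortcoming noted in the parenthesis above: the sketch finds no misfit for “their,” so it cannot tell that “there” was intended.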

Alexander generalizes from his examples to recommend that “we should always expect to see [design] as a negative process of neutralizing the incongruities, or irritants, or forces, which cause misfit” between form and context. This is also how artifacts change over time and evolve with use. The manipulation of two pointed knives to eat a piece of meat might frequently have irritated medieval diners as the meat rotated about the stationary knife. Diners who chose not to touch the meat with their fingers would generally have been able to neutralize the irritant by pressing the noncutting knife flatter onto the meat, thus altering its use. And in time this might change the form of the knife blade to give it a better bearing surface. Knife makers are also diners, of course, and a particularly reflective or imaginative one might have come up with a more radical way of neutralizing the irritant—developing an entirely different eating implement, one with two prongs to stab the meat in order to prevent it from rotating while being sliced.

“Misfit provides an incentive to change; good fit provides none,” declares Alexander, and even if we ourselves do not have the material, tools, or ability to work up a new artifact to remove an irritant in one we use, we might at least call the irritant to the attention of someone who can. That someone who can effect changes can be a craftsperson, for whom Alexander uses the masculine to include the feminine, and whom he describes as “an agent simply” through whom artifacts can evolve in an almost organic way:

Even the most aimless changes will eventually lead to well-fitting forms, because of the tendency to equilibrium inherent in the organization of the process. All the agent need do is recognize failures when they occur, and to react to them. And this even the simplest man can do. For although only few men have sufficient integrative ability to invent form of any clarity, we are all able to criticize existing forms. It is especially important to understand that the agent in such a process needs no creative strength. He does not need to be able to improve the form, only to make some sort of change when he notices a failure. The changes may not be always for the better; but it is not necessary that they should be, since the operation of the process allows only the improvements to persist.

This evolutionary process has worked throughout civilization and continues to work today even as craftsmen have become scientifically savvy engineers, and even as artifacts have grown to the complexity of nuclear-power plants, space shuttles, and computers. However, unlike Alexander’s agent, who does not necessarily have to make changes for the better, when the modern designer or inventor makes a change in an artifact, he or she must definitely think it is for the better in some sense. Nevertheless, actual incidents as well as mere perceptions of misfit and failure do continue to drive the evolution of artifacts, and we can expect that they always will. And it need not be only the likes of engineers, politicians, and entrepreneurs who have a hand in shaping the world and its things, for we are all specialists in at least a small corner of the world of things. We are all perfectly capable of seeing what fails to live up to the promise of its designers, makers, sellers, or licensers. Such ideas should be as evident to users of artifacts today as they were to the governed in the days of the Athenian statesman Pericles, who observed that “although only a few may originate a policy, we are all able to judge it.”

Understanding how and why our physical surroundings come to look and work the way they do provides considerable insight into the nature of technological change and the workings of even the most complex of modern technology. Basalla takes the artifact as “the fundamental unit for the study of technology,” and argues convincingly that “continuity prevails throughout the made world.” Thus the cover illustration for The Evolution of Technology depicts “the evolutionary history of the hammer, from the first crudely shaped pounding stone to James Nasmyth’s gigantic steam hammer,” with which steel forgings of unprecedented size could be made at the culmination of the Industrial Revolution. Basalla asserts that the existence of such continuity in all things “implies that novel artifacts can only arise from antecedent artifacts—that new kinds of made things are never pure creations of theory, ingenuity, or fancy.” If this be so, then the history of artifacts and technology becomes more than a cultural adjunct to the business of engineering and invention. It becomes a means of understanding the elusive creative process itself, whereby the intellectual capital of nations is generated.

The same purposeful human activity that has shaped such common objects as the knife, fork, and spoon shapes all objects of technology, “from stone tools to microchips,” and also accounts for the diversity of things, from the hundreds of hammers made in nineteenth-century Birmingham to the multitude of specialized pieces of silverware that came to constitute a table service. The distinctly human activities of invention, design, and development are themselves not so distinct as the separate words for them imply, and in their use of failure these endeavors do in fact form a continuum of activity that determines the shapes and forms of every made object.

Whereas shape and form are the fundamental subjects of this book, the aesthetic qualities of things are not among its primary concerns. Aesthetic considerations may certainly influence, and in some cases even dominate, the process whereby a designed object comes finally to look the way it does, but they are seldom the first causes of shape and form, with jewelry and objets d’art being notable exceptions. Utilitarian objects can be streamlined and in other ways made more pleasing to the eye, but such changes are more often than not cosmetic to a mature or aging artifact. Tableware, for example, has clearly evolved for useful purposes, and, no matter what pattern of silver we may have set before us on a table, we do not confuse knife, fork, and spoon. But when aesthetic considerations dominate the design of a new silverware pattern, the individual implements, no matter how striking and well balanced they may look on the table, can often leave much to be desired in their feel and use in the hand. Chess pieces constitute another example of a set of objects that have long-established and fixed requirements. There is no leeway as to how many pawns or rooks a set must have, and there is no getting around the fact that the pieces must be distinguishable one from another and must be provided in two equal but easily separated groups of sixteen. To design or “redesign” a chess set may involve some minor considerations of weight and balance in the pieces, but more often than not it is taken as a problem in aesthetics. And in the name of aesthetics many a chess set has been made more modern- or abstract-looking, if not merely different-looking, at the expense of chess players’ ability to tell the queen from the king or the knight from the bishop. Such design games are of little concern in this book.

We shall, however, be concerned with what is variously called “product design” or “industrial design.” Though this activity often appears to have aesthetics as its principal consideration, the best of industrial design does not have so narrow a focus. Rather, the complete industrial designer seeks to make objects easier to assemble, disassemble, maintain, and use, as well as to look at. And the best of industrial designers will have the ability to see into the future of a product so that what might have been a damning shortcoming of an otherwise wonderful-looking and beautifully functioning artifact will be nipped in the bud. Considerations that go variously under the name “human-factors engineering” or, especially in England, “ergonomics” are closely related to those of industrial design, but the human-factors engineer is especially concerned with how anything from the simplest kitchen gadget to the most advanced technological system will behave at the hands of its intended, and perhaps unintended, users.

The childproof bottle for prescription medicine is something that many people, especially older folks with arthritis, would agree could benefit from some industrial redesign, but most would also no doubt concur that the focus should be on the human-engineering aspects of getting the top off before the container gets an aesthetic treatment, although that would also be welcome. The ideal prescription-medicine container would be human-engineered to perfection and yet attractive enough to displace a bowl of fruit on the kitchen table. Such pretty things may not be designed in this book, but the intention is to go at least some way toward developing an understanding of why such things do not exist among the myriad ones that do. Just as there are many ways in which an artifact can fail, so there are many paths that a corrective form can follow.

3

Inventors as Critics

If the shortcomings of things are what drive their evolution, then inventors must be among technology’s severest critics. They are, and it is the inventor’s unique ability not only to realize what is wrong with existing artifacts but also to see how such wrongs may be righted in order to provide increasingly sophisticated gadgets and devices. These contentions are not just the wishful thinking of a theorist seeking order in the made world; they are grounded in the words of reflective inventors themselves, who come from all walks of life.