The Fallacy of Fine-Tuning: Why the Universe Is Not Designed for Us

Published 2011 by Prometheus Books

The Fallacy of Fine-Tuning: Why the Universe Is Not Designed for Us. Copyright © 2011 Victor J. Stenger. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, digital, electronic, mechanical, photocopying, recording, or otherwise, or conveyed via the Internet or a website without prior written permission of the publisher, except in the case of brief quotations embodied in critical articles and reviews.

Inquiries should be addressed to

Prometheus Books

59 John Glenn Drive

Amherst, New York 14228–2119

VOICE: 716–691–0133

FAX: 716–691–0137

WWW.PROMETHEUSBOOKS.COM

15 14 13 12 11 5 4 3 2 1

Library of Congress Cataloging-in-Publication Data

Stenger, Victor J., 1935–

The fallacy of fine-tuning : why the universe is not designed for us / by Victor J. Stenger.

p. cm.

Includes bibliographical references and index.

ISBN 978–1–61614–443–2 (alk. paper)

ISBN 978–1–61614–444–9 (e-book)

1. Causality (Physics) 2. Cosmology. 3. Equilibrium. 4. Religion and science. 5. God—Proof. 6. Atheism. I. Title.

QC6.4.C3 S74 2011

530.01—dc22

2010049901

Printed in the United States of America

ACKNOWLEDGMENTS

PREFACE

1. SCIENCE AND GOD

1.1. NOMA

1.2. Natural Theology

1.3. Darwinism

1.4. Intelligent Design

2. THE ANTHROPIC PRINCIPLES

2.1. Fine-Tuning

2.2. History

2.3. Hoyle's Prediction

2.4. The Anthropic Principles

2.5. Fine-Tuning Today

3. THE FOUR DIMENSIONS

3.1. Models

3.2. Observations

3.3. Space, Time, and Reality

3.4. Parameters

3.5. Definitions

3.6. Trivial and Arbitrary Parameters

3.7. Space-Time and Four-Momentum

3.8. Criteria for Fine-Tuning

3.9. Geological and Biological Parameters

4. POINT-OF-VIEW INVARIANCE

4.1. The Conservation Principles

4.2. Lorentz Invariance

4.3. Classical Mechanics

4.4. General Relativity and Gravity

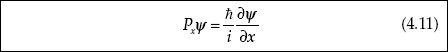

4.5. Quantum Mechanics

4.6. Gauge Theory

4.7. The Standard Model

4.8. Atoms and the Void

5. COSMOS

5.1. Some Basic Cosmology

5.2. A Semi-Newtonian Model

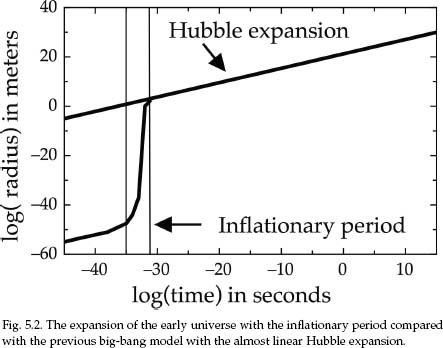

5.3. Inflation, Past and Present

5.4. Phase Space

5.5. Entropy

6. THE ETERNAL UNIVERSE

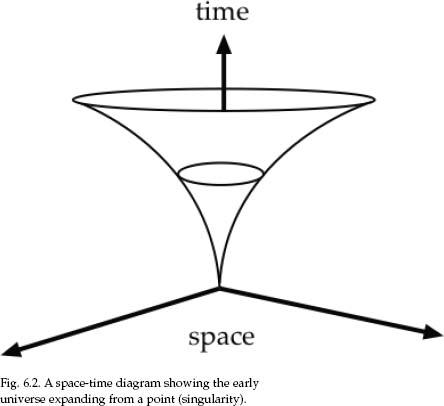

6.1. Did the Universe Begin?

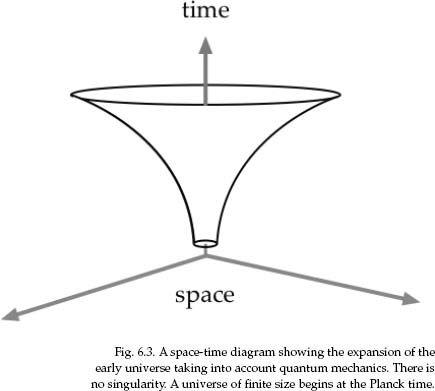

6.2. The Missing Singularity

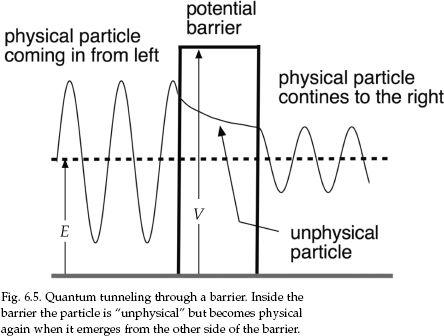

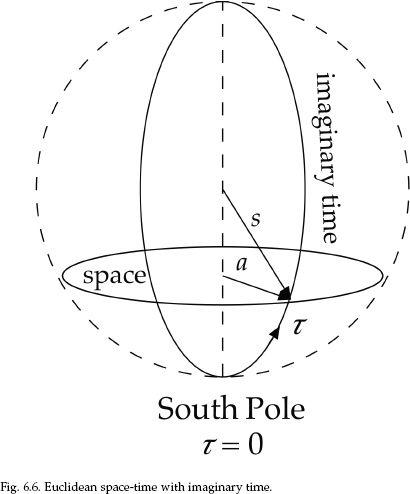

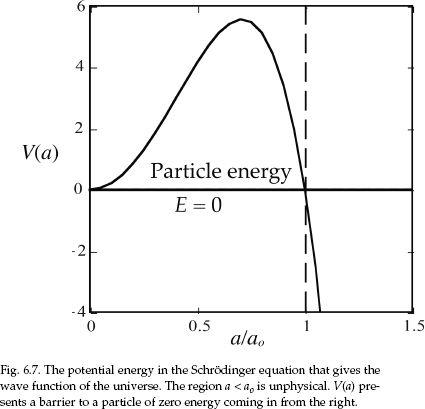

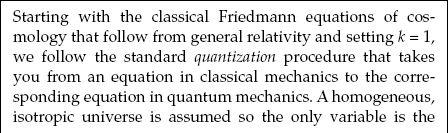

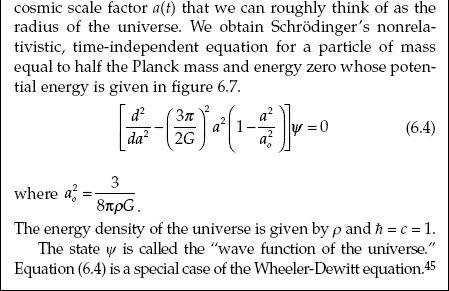

6.3. Quantum Tunneling

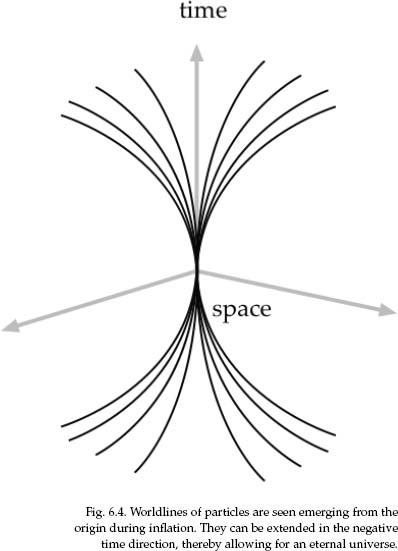

6.4. Imaginary Time

6.5. A Scenario for a Natural Origin of the Universe

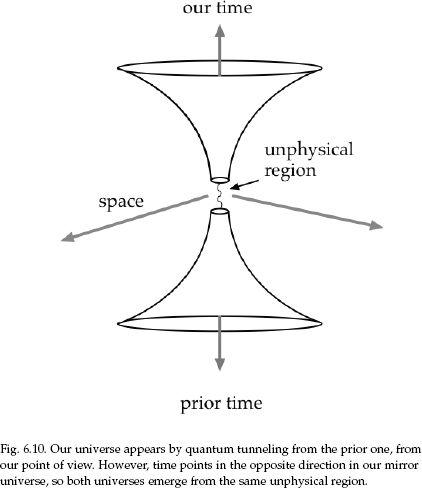

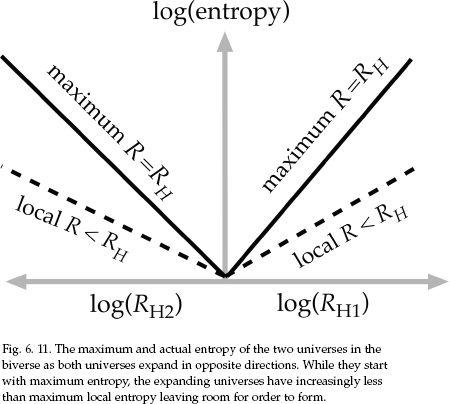

6.6. The Biverse

7. GRAVITY IS FICTION

7.1. Gravity and Electromagnetism

7.2. Not Universal

7.3. Why Are Masses So Small?

7.4. How to Get Mass

7.5. Another Way to Get Mass

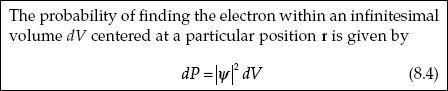

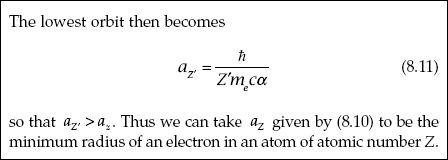

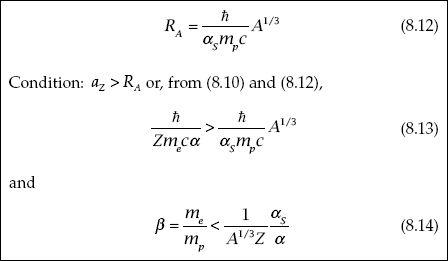

8. CHEMISTRY

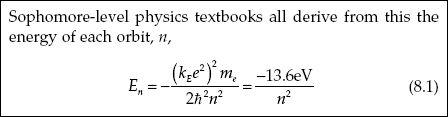

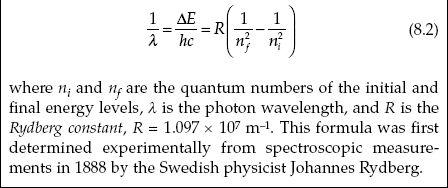

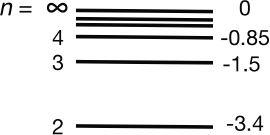

8.1. The Hydrogen Atom

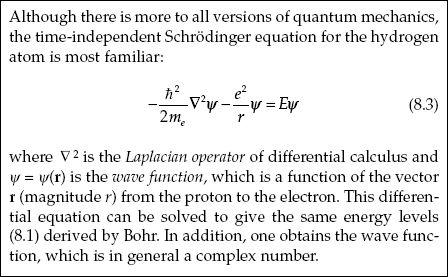

8.2. The Quantum Theory of Atoms

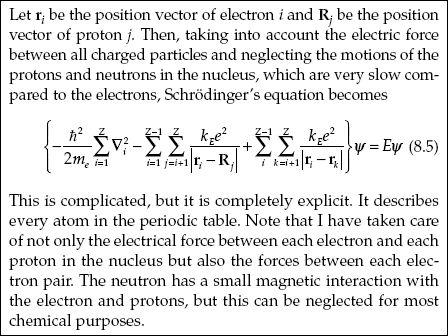

8.3. The Many-Electron Atom

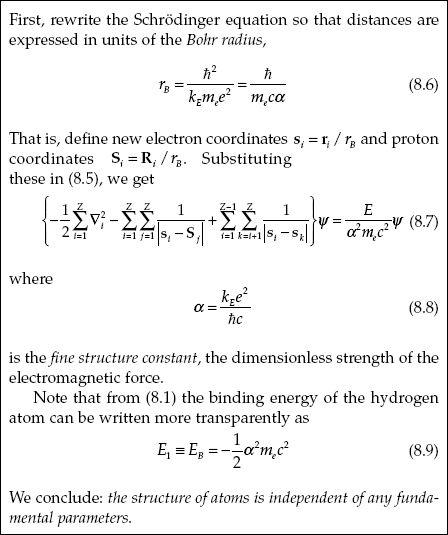

8.4. The Scaling of the Schrödinger Equation

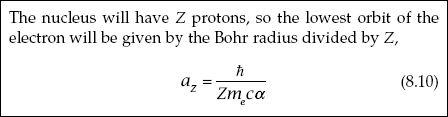

8.5. Range of Allowed Parameters

9. THE HOYLE RESONANCE

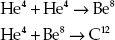

9.1. Manufacturing Heavier Elements in Stars

9.2. The Hoyle Prediction

10. PHYSICS PARAMETERS

10.1. Are the Relative Masses of Particles Fine-Tuned?

10.2. Mass of Electron

10.3. Masses of Neutrinos

10.4. Strength of Weak Nuclear Force

10.5. Strength of the Strong Interaction

10.6. The Relative Strengths of the Forces

10.7. Proton Decay

10.8. Baryogenesis

11. COSMIC PARAMETERS

11.1. Mass Density of the Universe

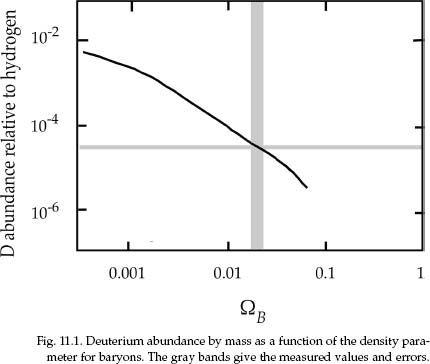

11.2. Deuterium

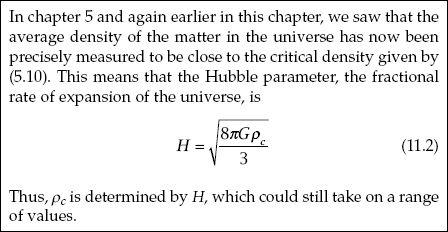

11.3. The Expansion Rate of the Universe

11.4. Protons and Electrons in the Universe

11.5. Big Bang Ripples

11.6. Parameters of the Concordance Model

11.7. The Cold Big Bang

12. THE COSMOLOGICAL CONSTANT

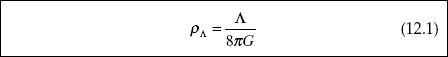

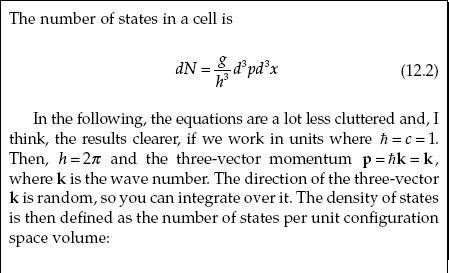

12.1. Vacuum Energy

12.2. Holographic Cosmology

12.3. Ghost Particles

12.4. Is the Cosmological Constant Zero by Definition?

12.5. Acceleration with Zero Cosmological Constant

12.6. The Multiverse and the Principle of Mediocrity

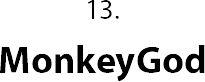

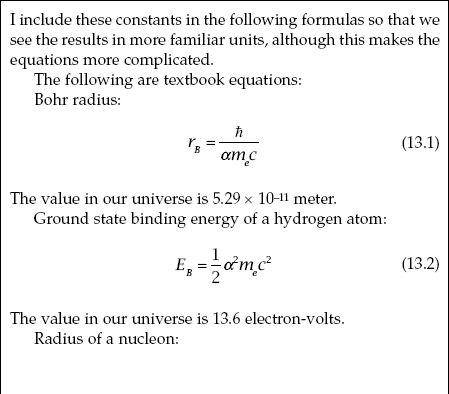

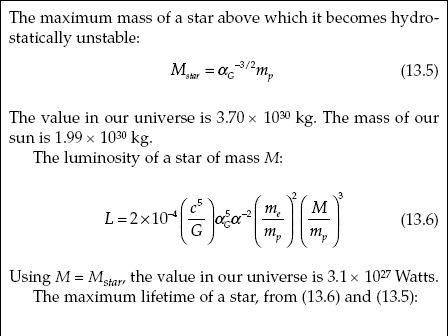

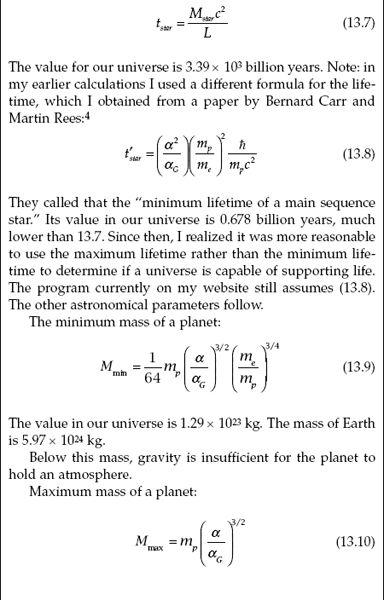

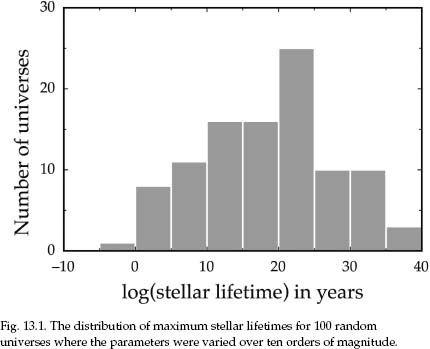

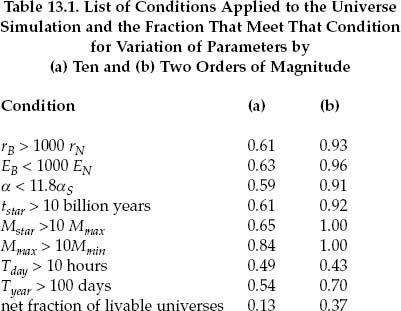

13. MONKEYGOD

13.1. Principles and Parameters

13.2. Simulating Universes

14. PROBABILITY

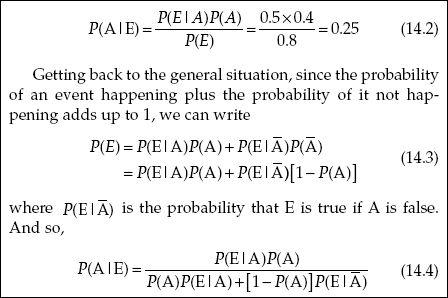

14.1. Probability Arguments

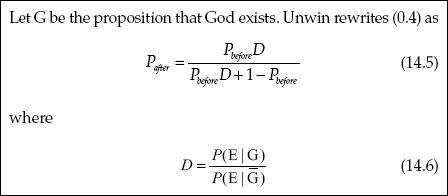

14.2. Bayesian Arguments

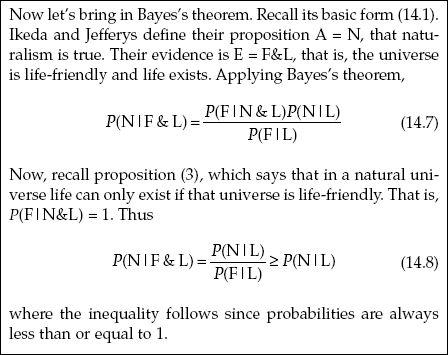

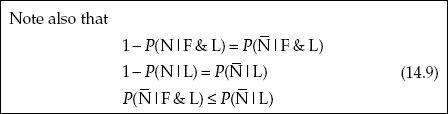

14.3. Another Bayesian Argument

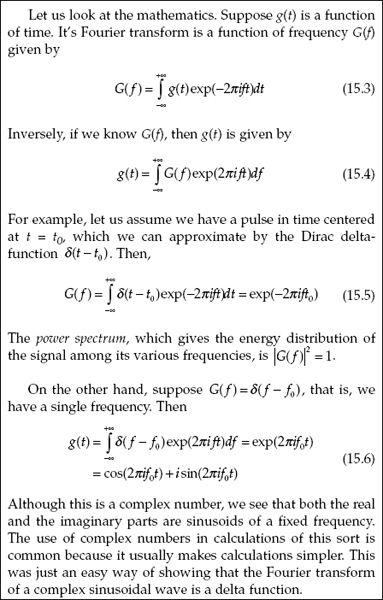

15. QUANTUM AND CONSCIOUSNESS

15.1. The New Spirituality

15.2. Making Your Own Reality

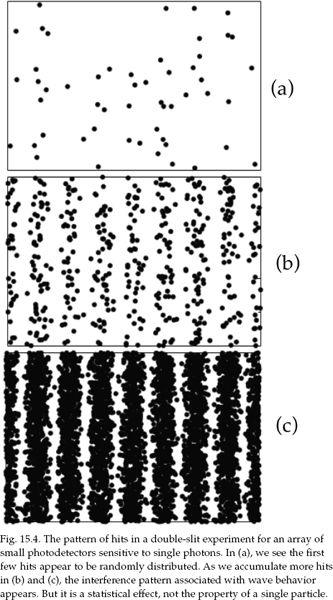

15.3. Waves and Particles

15.4. Human Inventions

15.5. A Single Reality

15.6. The Statistical Interpretation

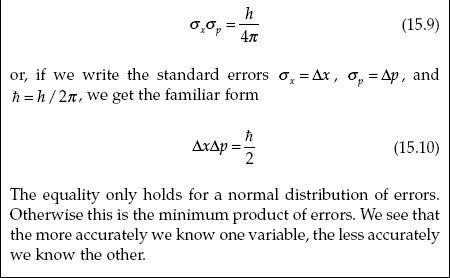

15.7. Deriving the Uncertainty Principle

16. SUMMARY AND REVIEW

16.1. The Parameters

16.2. Probability Arguments

16.3. Status of the Strong Anthropic Principle

16.4. A Final Conclusion

NOTES

BIBLIOGRAPHY

INDEX

ABOUT THE AUTHOR

OTHER BOOKS BY VICTOR J. STENGER

ACKNOWLEDGMENTS

As with my previous books, I have relied heavily on the advice of others. Once again I have had superb commentary from people with a wide range of expertise, not just in physics but also in astronomy, biology, neuroscience, philosophy, history, and computer science. I want to especially thank physicist Bob Zannelli for his help on all aspects of this work, and for managing the e-mail discussion list avoid-L, on which much of the discussion is carried out. Once again, physicist Brent Meeker has been invaluable in detecting errors of science and logic and in suggesting rewrites for sections that were not adequately clear.

Other members of avoid-L who have made substantial contributions include Richard Branham, Lawrence Crowell, Bill Jefferys, Don McGee, Steven Nunes, Christopher Savage, Sydney Shall, Brian Stilston, and Michael Tutkowski.

I also benefited immensely from the replies to my questions from the following notable physicists and cosmologists: Anthony Aguirre, Sean Carroll, Paul Davies, Roni Harnik, Mario Livio, Martin Rees, and Michael Salem. Of course, any errors in this manuscript are mine alone, and furthermore it should not be assumed that each of the people named agrees with all or any of my conclusions.

I am also very grateful to Paul Kurtz, Jonathan Kurtz, Steven L. Mitchell, and their talented and dedicated staff at Prometheus Books for their continued support of my work. I would like to single out Jade Zora Ballard for her tireless efforts at what must have been a demanding job of copyediting.

Finally, I could never carry out a difficult and time-consuming work such as this without the love and steadfast support of my wife, Phylliss, and our wonderful family.

PREFACE

In the late nineteenth century in Lithuania, when my paternal grandfather, Anthony Stungaris, was a sixteen-year-old boy, he became gravely ill with diphtheria.1 His family members laid him out as comfortably as they could in the barn to die. A strong-willed neighbor girl named Benigna, who was two years older, nursed him back to health. They would marry and raise a family. Their first two daughters died in childhood, but they would eventually have six more children. My father, Vytautis, was the third of these, the first son and the last born in Lithuania.

At the time, Lithuania was under the control of the Russian Czar. Anthony worked as a caretaker in a royal forest preserve where no logging was allowed. One winter was so bitterly cold that nearby townspeople were dying, so grandfather allowed them into the forest to gather up dead wood lying on the ground. They did not stop there but cut down some trees as well.

So, as the story goes, my grandfather was sent to Siberia. When he returned a year or so later and could not find work, he decided to emigrate to the United States. He left Benigna and three children behind, promising to send for them once he was settled.

After two years of hearing almost nothing from Anthony, Benigna set out on her own for America, children in tow. Despite not having had a day of schooling (although she spoke several languages, not including English), she managed to get to America and to find her husband in Bayonne, New Jersey. In my house in Colorado I still have the small chest in which they carried all their belongings onboard the ship. My father was only three years old. The year was about 1906.

My grandfather had found a good job with the General Cable Company in Bayonne, and he and my grandmother eventually bought a four-family house on Avenue A, a block from Newark Bay, which in those years was unpolluted. Right down the street from the house was a rocky beach full of broken glass and the decayed Pavonia Yacht Club. When my father and mother married, they moved into one of the cold-water flats on Avenue A. I lived in that house until leaving for graduate school in Los Angeles at age twenty-one, a move my grandmother deeply disapproved of. She had taken my father out of school in the sixth grade so he could work and make money for the family.

My mother was born in Bayonne, her parents emigrating from Hungary. She was one of eleven children and left high school in the tenth grade.

Now, every human being on this planet can tell an interesting story about the events of previous generations that eventually led to their existence. In my case, if my grandmother had not taken the enormous risk of nursing my grandfather, I would not exist. And neither would my daughter, my son, and my four grandchildren. None of us would exist if my grandmother had contracted the disease herself and died. We would not exist if she had not embarked on that complicated journey to America, or if upon getting there she could not find her husband. And what about the many contingencies that led my father and my mother to meet? Carry the story back in time, generation by generation, species by species, until we reach that primordial accident that resulted in the origin of life, and you will realize how lucky each of us is to be here. If we attempt to calculate the a priori probability for all these events happening exactly as they did, we would get an infinitesimally small number.
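
The arithmetic behind that last sentence can be sketched in a few lines of Python. This is an illustration only: it assumes, for simplicity, that the contingencies are independent so that their probabilities multiply, and the count of 1,000 events with coin-flip odds for each is hypothetical. Summing base-10 logarithms avoids the floating-point underflow that multiplying the raw probabilities would eventually cause.

```python
import math

def log10_joint_probability(probabilities):
    """Return log10 of the product of independent event probabilities.

    Working in log space keeps the result representable even when the
    product itself is astronomically small.
    """
    return sum(math.log10(p) for p in probabilities)

# Hypothetical chain: 1,000 contingencies, each as likely as a coin flip.
chain = [0.5] * 1000
print(f"joint probability ~ 10^{log10_joint_probability(chain):.1f}")
# prints: joint probability ~ 10^-301.0
```

No single event in this chain is improbable, yet the product comes to about one chance in 10³⁰¹.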

Many people find it difficult to comprehend how events with very low probabilities can ever happen naturally. The fact of my existence and that of every other human, plant, and animal on Earth is so incredibly unlikely that in many minds it must be the result of some supernatural plan.

In his 1989 tome The Emperor's New Mind, mathematician Roger Penrose calculated that the state of the observed universe is one out of ten raised to the power of 10¹²³ possible states.2 We could not even write this number out using every particle in the universe as a zero.
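
A quick check of that last claim, written as a Python sketch: the decimal expansion of ten raised to the power 10¹²³ is a 1 followed by 10¹²³ zeros, so we only need to count zeros, never build the number. The particle count of 10⁸⁰ used below is an assumption, a commonly quoted order-of-magnitude estimate for the observable universe, and not a figure taken from Penrose.

```python
# Penrose's figure: one state out of 10**(10**123).
# Written in decimal, 10**k is a 1 followed by k zeros, so this number
# needs 10**123 zeros. Python integers handle 10**123 exactly.
ZEROS_NEEDED = 10**123

# Assumption: a commonly quoted order-of-magnitude estimate for the
# number of particles in the observable universe.
PARTICLES_AVAILABLE = 10**80

shortfall = ZEROS_NEEDED // PARTICLES_AVAILABLE
print(f"zeros needed per available particle: 10^{len(str(shortfall)) - 1}")
# prints: zeros needed per available particle: 10^43
```

Under that assumption, each available particle would have to stand for roughly 10⁴³ zeros.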

In physics, the state of a multibody system is represented as a point in an abstract multidimensional space called phase space where each axis corresponds to one of the degrees of freedom of the system, such as the spatial x-coordinate or y-component of the momentum of a particle. Penrose shows a cartoon of the “Creator” pointing to an absurdly tiny volume in the phase space of possible universes. This is our universe, and while Penrose has remained neutral, the implication drawn by others is that our universe is so wildly unlikely that our very existence provides irrefutable proof of a creator God.
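
To make the idea of phase space concrete, here is a minimal Python sketch of how a multibody state maps to a single point. It assumes point particles in three spatial dimensions with no internal degrees of freedom such as spin; the `Particle` class and `phase_space_point` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class Particle:
    position: tuple[float, float, float]  # (x, y, z)
    momentum: tuple[float, float, float]  # (p_x, p_y, p_z)

def phase_space_point(particles):
    """Flatten a multibody state into one point in phase space.

    Each particle contributes three position and three momentum
    coordinates, so N particles give a point in a 6N-dimensional space.
    """
    point = []
    for p in particles:
        point.extend(p.position)
        point.extend(p.momentum)
    return tuple(point)

state = [
    Particle((0.0, 1.0, 2.0), (0.5, 0.0, -0.5)),
    Particle((3.0, 0.0, 1.0), (0.0, 1.0, 0.0)),
]
point = phase_space_point(state)
print(len(point))  # prints: 12
```

Two particles already need 12 coordinates, and the dimension grows as 6N with the number of particles.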

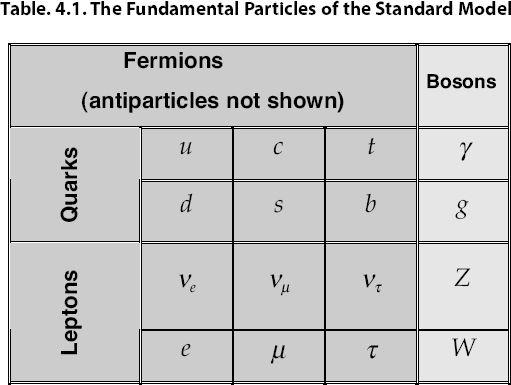

Our current understanding of physics and cosmology allows us to describe the fundamental physical properties of our universe back to as early as a trillionth of a second after it began. At this writing we have two complementary models—the standard model of particles and forces and the concordance model of cosmology (also referred to as the standard model of cosmology)—that successfully describe all the observations made to date of the submicro world (using our highest-energy particle accelerators) and of the supermacro world (using our best space-borne and earthbound telescopes). More advanced instruments, notably the Large Hadron Collider (LHC) now going into operation near Geneva, are expected to extend our description of the universe even further back in time.

The standard models of physics and cosmology depend on a few dozen parameters, such as the masses of the elementary particles, the relative strengths of the various forces, and the cosmological constant. Many of these parameters are very tightly constrained by existing data, which should not be surprising since the models fit the data with good and in some cases great precision. These parameters specify the infinitesimal volume of phase space pointed to by Penrose's “Creator” that defines the state of the universe.

In recent years, people have wondered what the universe would be like if the parameters of the models that describe it were slightly different. Clearly this new set of parameters would specify a different volume in phase space and a different state of the universe. Again, it should come as no surprise that the models describe a universe that would lead to a different set of observations.

One set of observations concerns life on Earth. A number of authors have noted that the universe described when some parameters are slightly changed can no longer support life as we know it. This implies that life, as we know it, depends sensitively on the parameters of our universe, which is unarguable. A more dubious conclusion, which has attracted much theological attention for over two decades now, asserts that the parameters of our universe are “fine-tuned” to produce life as we know it. This is often referred to as the anthropic principle, although, as we will see, there are several versions. What could possibly be doing the fine-tuning? Clearly, according to the proponents, it has to be an entity outside the universe, and such an entity is what most people identify as the creator God.

Let us look at a few quotations selected from the vast literature on the subject. Back in 1985, astronomer Edward Robert Harrison wrote:

Here is the cosmological proof of the existence of God—the design argument of Paley—updated and refurbished. The fine-tuning of the universe provides prima facie evidence of deistic design. Take your choice: blind chance that requires multitudes of universes, or design that requires only one.3

Geneticist Francis Collins was the head of the Human Genome Project and at this writing directs the United States National Institutes of Health. In his 2006 bestseller, The Language of God: A Scientist Presents Evidence for Belief, Collins argues for the following interpretation of the data:

The precise tuning of all the physical constants and physical laws to make intelligent life possible is not an accident, but reflects the action of the one who created the universe in the first place.4

Physician Michael Anthony Corey writes:

The stupendous degree of fine-tuning that instantly existed between these fundamental parameters following the Big Bang reveals a miraculous level of micro-engineering that is simply inconceivable in the absence of a “supercalculating” Designer.5

Astronomer George Greenstein asserts:

As we survey the evidence, the thought insistently arises that some supernatural agency—or rather Agency—must be involved. Is it possible that suddenly, without intending to, we have stumbled upon scientific proof of the existence of a Supreme Being? Was it God who stepped in and so providentially crafted the cosmos for our benefit?6

And theoretical physicist Tony Rothman adds,

The medieval theologian who gazed at the night sky through the eyes of Aristotle and saw angels moving the spheres in harmony has become the modern cosmologist who gazes at the same sky through the eyes of Einstein and sees the hand of God not in angels but in the constants of nature…. When confronted with the order and beauty of the universe and the strange coincidences of nature, it's very tempting to take the leap of faith from science to religion. I am sure many physicists want to. I only wish they would admit it.7

Christian philosopher and apologist William Lane Craig has been debating the existence of God and other theological issues for decades. Many transcripts of his debates can be found on his website.8 I have debated him twice myself, in 2003 at the University of Hawaii in Honolulu and in 2010 at Oregon State University in Corvallis, Oregon. In chapter 6 I will discuss in detail some of Craig's cosmological arguments for a divine creation of the universe.

Craig uses the fine-tuning argument in many of his debates. Here's how he presented it in his 1998 debate with philosopher/biologist Massimo Pigliucci (and in other debates):9

During the last thirty years, scientists have discovered that the existence of intelligent life depends upon a complex and delicate balance of initial conditions given in the Big Bang itself. We now know that life-prohibiting universes are vastly more probable than any life-permitting universe like ours. How much more probable?

The answer is that the chances that the universe should be life-permitting are so infinitesimal as to be incomprehensible and incalculable. For example, Stephen Hawking has estimated that if the rate of the universe's expansion one second after the Big Bang had been smaller by even one part in a hundred thousand million million, the universe would have re-collapsed into a hot fireball.10 P. C. W. Davies has calculated that the odds against the initial conditions being suitable for later star formation (without which planets could not exist) is one followed by a thousand billion billion zeroes, at least.11 John Barrow and Frank Tipler estimate that a change in the strength of gravity or of the weak force by only one part in 10¹⁰⁰ would have prevented a life-permitting universe.12 There are around fifty such quantities and constants present in the Big Bang which must be fine-tuned in this way if the universe is to permit life. And it's not just each quantity which must be exquisitely fine-tuned; their ratios to one another must be also finely-tuned. So improbability is multiplied by improbability by improbability until our minds are reeling in incomprehensible numbers.

Craig also quotes physicist and prolific author Davies, winner of the 1995 Templeton Prize for Progress in Religion, as saying: “Through my scientific work I have come to believe more and more strongly that the physical universe is put together with an ingenuity so astonishing that I cannot accept it merely as a brute fact.”13

Craig and many other theist authors frequently cite the late Robert Jastrow, former head of NASA's Goddard Institute for Space Studies, who in 1984 called this the most powerful evidence for the existence of God ever to come out of science. Craig concludes:

So once again, the view that Christian theists have always held, that there is an intelligent Designer of the universe, seems to make much more sense than the atheistic view that the universe, when it popped into being uncaused out of nothing, just happened to be by chance fine-tuned to an incomprehensible precision for the existence of intelligent life.14

As we will see, a lot of science has been done since 1984, and if Jastrow were still alive, I wonder whether he would still feel this way. I hope that other physicists and astronomers who may have felt this way a generation ago will take a look at the arguments in this book, many of which have not appeared before.

As a physicist, I cannot go wherever I want; I must go wherever the data take me. If they take me to God, so be it. I have examined the data closely over many years and have come to the opposite conclusion: the observations of science and our naked senses not only show no evidence for God but also provide evidence beyond a reasonable doubt that a God who plays an important, everyday role in the universe, such as the Judeo-Christian-Islamic God, does not exist.15

I will devote most of this book to showing why the evidence does not require the existence of a creator of the universe who has designed it specifically for humanity. I will show that the parameters of physics and cosmology are not particularly fine-tuned for life, especially human life. I will present detailed new information not previously published in any book or scientific article that demonstrates why the most commonly cited examples of apparent fine-tuning can be readily explained by the application of well-established physics and cosmology. I will provide references to recent work on the subject by others besides myself that shows it to be very likely that some form of life would have occurred in most universes that could be described by the same physical models as ours, with parameters whose values vary over ranges consistent with those models. And I will show why we can expect to be able to describe any uncreated universe with the same models and laws with at most slight, accidental variations. Plausible natural explanations can be found for those parameters that are the most crucial for life. I will show that the universe looks just like it should if it were not fine-tuned for humanity.

Cosmologists have proposed a very simple solution to the fine-tuning problem. Their current models strongly suggest that ours is not the only universe but part of a multiverse containing an unlimited number of individual universes extending an unlimited distance in all directions and for an unlimited time in the past and future. If that's the case, we just happen to live in the universe that is suited for our kind of life. The universe is not fine-tuned to us; we are fine-tuned to our particular universe.

Now, theists and many nonbelieving scientists object to this solution as being “nonscientific” because we have no way of observing a universe outside our own, which we will see is disputable. In fact, a multiverse is more scientific and parsimonious than hypothesizing an unobservable creating spirit and a single universe. I would argue that the multiverse is a legitimate scientific hypothesis, since it agrees with our best knowledge.

In this regard, I should mention that modern string theory is used as a possible basis for a multiverse. This view has been promoted by physicist Leonard Susskind, one of the founders of the subject.16 String theory is the idea that the fundamental “atoms” (uncuttable objects) of the universe are not zero-dimensional particles but one-dimensional vibrating strings.17 In this theory, the universe has six dimensions beyond the usual four of space and time. The extra dimensions are curled up on such a small scale that we can't detect them, but they are responsible for the “inner” degrees of freedom carried by the atoms, such as spin and electric charge.

Since gravity is included along with the other forces, string theory has been a major candidate for an ultimate unified “Theory of Everything” (TOE). For years, string theorists have been seeking a unique solution to the elegant but enormously complex equations of string theory that would correspond to the universe as we know it, with no adjustable parameters. They have found solutions all right, but no unique one. Instead, the number of vacuum states alone in string theory has been estimated at 10⁵⁰⁰. Susskind got the brilliant idea that each of these 10⁵⁰⁰ solutions is a different possible universe with different parameters. He pictures these solutions as a landscape with 10⁵⁰⁰ valleys, each valley corresponding to a universe with a particular set of parameters. Assuming a theory, such as inflationary cosmology, in which universes are constantly generated by natural quantum processes and fall into a random valley, there is bound to be one universe that has parameters such as ours suitable for life.
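
The step “there is bound to be one universe” rests on a simple probability formula. If N universes each land independently in a random valley, and a fraction f of the valleys permit life, the chance that at least one universe permits life is 1 - (1 - f)^N, which for small f is close to 1 - exp(-Nf). The Python sketch below assumes those conditions; the values of N and f are hypothetical, `prob_at_least_one` is an illustrative name, and the exponential form is the standard small-f (Poisson) approximation rather than anything taken from Susskind.

```python
import math

def prob_at_least_one(log10_n: float, log10_f: float) -> float:
    """P(at least one of N random universes is life-permitting).

    Exact form: 1 - (1 - f)**N. For f << 1 this is well approximated by
    1 - exp(-N * f), where N * f is the expected number of
    life-permitting universes. Inputs are base-10 logarithms because
    N (e.g. 10**500) is far beyond the range of a float.
    """
    log10_expected = log10_n + log10_f
    # Once N*f exceeds ~1000, exp(-N*f) is 0.0 in double precision and the
    # result is already 1.0; clamping here avoids float overflow.
    expected = 10.0 ** min(log10_expected, 3.0)
    return -math.expm1(-expected)

# Hypothetical inputs: 10**500 universes, with an assumed
# life-permitting fraction of one valley in 10**120.
print(prob_at_least_one(500, -120))  # prints: 1.0
# A fraction far smaller than 1/N makes success unlikely.
print(prob_at_least_one(500, -600))  # prints: 1e-100
```

Whenever Nf is large, the result is effectively certain; the argument fails only if the life-permitting fraction is small compared with 1/N.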

Now, I mention this only for completeness. Although I believe it is adequate to refute fine-tuning, it remains an untested hypothesis. My case against fine-tuning will not rely on speculations beyond well-established physics nor on the existence of multiple universes. I will show that fine-tuning is a fallacy based on our knowledge of this universe alone.

I will also address a number of issues in cosmology and physics that are only indirectly related to fine-tuning but represent major misinterpretations of science by theologians, Christian apologists, and the many layperson authors who are part of the great, richly financed Christian media machine in the United States that promulgates much misinformation about science to the masses. These are necessary to complete my story that not only is there no evidence for God, but also there is strong evidence for his nonexistence beyond a reasonable doubt.

Since the fine-tuning question is basically one of physics, it obviously cannot be understood satisfactorily without some knowledge of physics. I will attempt to elucidate the physics in words that should be understandable to the average reader, although some grasp of scientific terms and methods will be helpful. The boxed equations can be skipped and the general arguments followed from the text. However, to make my case precise I cannot escape some use of mathematics at about the college freshman level or, occasionally, slightly above. Certainly anyone with sufficient knowledge to write authoritatively on fine-tuning should have no trouble following my mathematical arguments.

At the same time, highly trained physicists and cosmologists may not be totally satisfied by my lack of complete mathematical rigor. I will be using what are sometimes called “semiclassical” arguments. For example, at this writing we have no quantum theory of gravity. Instead we have Einstein's general theory of relativity, which still describes all gravitational phenomena almost a century after it was introduced but is surely not complete. As a separate theory, we have quantum mechanics and its extension to the standard model of particles and forces, which describes all other phenomena observed up to the arrival of data from the LHC. Both of these replace the classical, Newtonian physics that went before. In a semiclassical argument we use classical physics, with some modifications here and there to account for relativistic and quantum effects. I claim this is adequate for my purposes, which only require that I provide a plausible explanation for observations.

I do not have the burden of disproving that God fine-tuned physics and cosmology so that humans formed in his image would evolve. Anyone making such an awesome claim carries the burden of proof. I regard my task, as a devil's advocate, as simply to find a plausible explanation within existing knowledge for the parameters having the values they do. In doing so I will avoid speculating much beyond currently established knowledge, using only the standard models of physics and cosmology and, rarely, slight extrapolations to what can be expected in the next step. For example, I do not use any arguments based on string theory.

I described above Roger Penrose's characterization of fine-tuning in terms of a tiny region of phase space that specifies the parameters of our universe. We saw that the region is an infinitesimal volume, one part in ten raised to the power 10¹²³. Those who claim fine-tuning assert that it is demonstrated with that degree of certitude. I will refute this by showing that some form of life would be possible for a wide range of parameters inside a finite volume of phase space.

Soon after finishing the first draft of this manuscript, I learned that philosopher Robin Collins was preparing a book arguing for the existence of God based on fine-tuning. An abridged but still eighty-two-page version was published in 2009.18 While Collins has a far better understanding of physics than the typical Christian apologist, I think he still exhibits some of the misunderstandings and narrow vision that we will see are common among the proponents of fine-tuning. I will point out a few of these when the subject arises. Also, in a later chapter I will refer to Collins's specific objections to my previously published work.

This also prompts me to make a general observation that is not widely understood by the general public. It is impossible to prove the reality of any god, or anything else for that matter, by deductive logic alone. Any conclusion one makes by deduction is already embedded in the premises made as the first step of that procedure. Thus one has to be very careful about any philosophical argument for God. Unless that argument brings in observational data at some point, the process may be nothing more than the rearranging of words. Since I have spent a lifetime looking at observational data, you can expect my arguments to be based on science and not philosophical disputation.

On the other hand, it is possible to logically disprove the existence of gods with certain attributes, by showing an inconsistency between those attributes and either the definition of the god or other established facts. For many examples of this, see The Impossibility of God by Michael Martin and Ricki Monnier.19

1. SCIENCE AND GOD

1.1. NOMA

A widespread belief exists that science has nothing to say about God—one way or another. I must have heard it said a thousand times that “science can neither prove nor disprove the existence of God.”

However, in the past two decades believers and nonbelievers alike have convinced themselves in significant numbers, and their opponents in negligible numbers, that the scientific basis for each position is virtually unassailable.

Atheists look at the world around them, with their naked eyes and with the instruments of science, and see no sign of God. Even the most devout theist must admit that the existence of God is not an accepted scientific fact in the same way as, for example, the existence of quarks or black holes. As is the case with God, no one has directly observed these objects. But the indirect empirical evidence is ample for them to be considered to have some relation to reality with a high degree of probability, always with the caveat that future developments could still find a better explanation for this evidence.

Now, the theist will retort that this does not prove that God does not exist. If she is a Christian, she will of course be thinking of the Christian God. But the argument also does not prove that Zeus and Vishnu do not exist, nor Santa Claus and the Tooth Fairy. Still, one can easily imagine scientific experiments to test for the existence of Santa Claus and the Tooth Fairy. Just post lookouts on rooftops around the world on Christmas Eve, and at the bedsides of children who just lost baby teeth.

As I pointed out in my 2007 book, God: The Failed Hypothesis—How Science Shows That God Does Not Exist, it is possible to scientifically test the hypothesis of the existence of a god who plays such an active role in the universe as the traditional God of Judaism, Christianity, and Islam.1 Zeus and Vishnu might be a little tougher to rule out scientifically, but the Judeo-Christian-Islamic God is surprisingly easy to test for by virtue of his assumed participation in every event in the universe, from atomic transitions in distant galaxies to keeping watch that evolution on Earth does not stray from his divine plan.

While the majority of scientists in Western and non-Islamic nations do not believe in God, many prefer to adopt the stance advocated by famed paleontologist Stephen Jay Gould in his 1999 book Rocks of Ages: Science and Religion in the Fullness of Life.2 Gould proposed that we redefine science and religion so that they are “two nonoverlapping magisteria” (NOMA), leaving science to deal with studying nature and religion to deal with morality. Or, to paraphrase Galileo, science tells us the way the heavens go and religion tells us the way to go to heaven.3

Gould's NOMA especially appeals to believing scientists. The many I have known in fifty years of academic life place their religion and science in separate compartments that never interact with one another. Most nonbelieving scientists go along with NOMA as well, since they would prefer, as a social and political strategy, to avoid getting into battles over religion. However, philosophers, theologians, and many atheist scientists have not found NOMA practical. Notice I referred to Gould's proposal as a “redefinition.” Gould, an avowed atheist who has since died, was trying with good intentions to carve out distinct, “nonoverlapping” areas for both religion and science. He sought to eliminate conflicts, which had increased in recent times, by basically redefining religion as moral philosophy. However, existing religions, while claiming to tell us how to go to heaven, also try to tell us how the heavens go. Moreover, science is not proscribed from observing human behavior and providing observational data on matters of morality.

NOMA simply does not accurately describe either the history or the current status of the relationship between religion and science. Neither is likely to agree to any limitations on its zone of activity. So they overlap and are going to continue to do so, and they will continue to battle over their common ground where differences are, in many cases, irreconcilable.

1.2. NATURAL THEOLOGY

During the Enlightenment in the eighteenth century, when the rise of science increasingly influenced thinking, religion was deeply questioned in Europe and America. Perhaps for the first time in history, it became possible to be openly atheistic or at least critical of established religion. But it was not a one-way street. Western Christian theology, which by then already had a proud history of logical thinking on the problem of God, found a place for science in what was called natural theology.

Natural theology provided several excellent scientific arguments for the existence of God that, when first introduced, were irrefutable with existing scientific knowledge. They only became refutable with further scientific developments.4

The premier figure in natural theology was William Paley (d. 1805), archdeacon of Carlisle, whose 1802 book, Natural Theology; or, Evidences of the Existence and Attributes of the Deity, was the first serious attempt to use scientific arguments to prove that the world was designed and sustained by God.5 While design arguments for God had been proposed since antiquity, and argued against—notably by the great Scottish philosopher David Hume (d. 1776) in his Dialogues concerning Natural Religion6 and An Enquiry concerning Human Understanding7—Paley went beyond the typical theological emphasis on logical deduction to a direct appeal to empirical observation and its interpretation.

This was important because every one of the endless series of “proofs” of the existence of God that has been proposed, from antiquity to the present day, is automatically a failure because, as I have mentioned, a logical deduction tells you nothing that is not already embedded in its premises. All logic can do for you is test the self-consistency of those premises. There is only one reliable way that humans have discovered so far to obtain knowledge they do not already possess—observation. And science is the methodical collecting of observations and the building and testing of models to describe those observations.

Paley's main argument was based on the “watchmaker analogy,” which had been used by others in the past to illustrate divine order in the world. In his opening paragraph he talks about crossing a heath and pitching his foot against a stone. He sees no problem thinking that the stone had not lain there forever. On the other hand, if he had found a watch upon the ground and saw that its several parts were put together for a purpose, the inference would be inevitable that the watch must have had a maker, an artificer who had formed it for a purpose. He then proceeds to make an analogy between the watch and living creatures, with their eyes and limbs so intricately designed as to defy any imaginable possibility that they could have come about by any natural process.

1.3. DARWINISM

This argument convinced almost everyone, even the young Charles Darwin (d. 1882) when he was a student, coincidentally occupying the same rooms at Cambridge as Paley had a generation earlier. But Darwin would eventually change many minds besides his own. During his voyage around the world on HMS Beagle from December 27, 1831, to October 2, 1836, Darwin accumulated a wealth of data that he analyzed meticulously during the next twenty-three years. In 1859, he published On the Origin of Species: By Means of Natural Selection, or the Preservation of Favoured Races in the Struggle for Life, which demonstrated how, over great lengths of time, complex life-forms evolve by a combination of random mutations and natural selection.8 Living organisms not only develop without the need for the intervention of an intelligent designer but also provide ample evidence for the lack of such divine action.9

While it should not be forgotten that Alfred Russel Wallace announced his independent discovery of natural selection simultaneous with Darwin (by mutual agreement), and others had also toyed with the notion, Darwin deserves the lion's share of the credit by virtue of providing the great bulk of supporting evidence and his brilliant insights in interpreting that evidence.

I need not relate the familiar history of the 150-year battle between science and religion over the theory of evolution, especially the attempts by Christians to have public schools in the United States teach the biblical creation myth as a legitimate “scientific” alternative.10 While heroic attempts have been made by theists and atheists alike to show that evolution need not conflict with traditional beliefs, the fact remains that the majority of believers in the United States refuse to accept a scientific theory that is as well established as the theory of gravity because of its gross conflict with the biblical account of the creation of life.

A series of Gallup polls of Americans from 1982 to 2008 asked respondents to choose from three options: (1) Humans developed over millions of years, God-guided, (2) Humans developed over millions of years, God had no part, (3) God created humans as is within ten thousand years. The results were fairly consistent over the years, the 2008 results giving 36 percent for God-guided but over millions of years, 14 percent for the long period with God having no part, and 44 percent with creation as is within the last ten thousand years.11

Another recent poll was conducted by Vision Critical, a UK organization, on the question of whether human beings evolved from less-advanced forms over millions of years or whether they were created by God in their present form within the last ten thousand years. The result for Great Britain was that 68 percent supported evolution, 16 percent supported creation, and 15 percent were unsure. The result for Canada was 61 percent for evolution, 24 percent for creation, and 15 percent unsure. The result for the United States was 35 percent for evolution, 47 percent for creation, and 18 percent unsure.12 The difference between the United States and the two nations closest to it in culture is striking.

Only the Gallup poll considered the question of divine guidance. While it is true that there were people before Darwin, including his own grandfather, who had speculated about evolution, today the term is understood to include the Darwin-Wallace mechanism of random mutations and natural selection. There is no crying in baseball, and there is no guidance, God or otherwise, in Darwinian evolution. Only the 14 percent of Americans who accept that God had no part in the process can be said to believe in the theory of evolution as the vast majority of biologists and other scientists understand it today. God-guided development is possible, but it is unnecessary and just another form of intelligent design.

Just because the Catholic Church and moderate Protestant congregations say they have no problem with evolution, that doesn't mean they don't. A statement by Pope John Paul II in 1996 seemed to support biological evolution. However, he made it clear that in his opinion it was still one of several hypotheses still under dispute. That opinion sharply disagrees with that of the vast majority of biologists. Furthermore, the pope unambiguously excluded the evolution of mind, saying that “the spiritual soul is immediately created by God” and that theories of evolution that consider mind as emerging from living matter “are incompatible with the truth about man.”13 No doubt the pope has never considered the possibility that the evolution of the human species was not controlled by God.

In the theory of evolution accepted by an almost unanimous consensus of scientists, humans with fully material bodies evolved by accident and natural selection only, with no further mechanisms or agents involved, and simply were not designed by God or natural law.14 The evolution of mind is currently more contentious, but the evidence piles up daily that mind is also purely the product of the same natural processes with no need to introduce anything beyond matter. This conclusion is unacceptable to anyone who has been raised to think he was made in the image of God with an immortal, immaterial soul that is responsible for his conscious thinking.

It is important to recognize that when evolution by natural selection was first proposed in 1859, it was not in agreement with all scientific knowledge and was potentially falsifiable. According to calculations by the great physicist William Thomson, Lord Kelvin, the sun did not have enough stored energy to last the hundreds of millions of years needed for biological evolution. It was not until the early twentieth century that nuclear energy was discovered and was shown to be the highly efficient source of energy of the sun and other stars that allows them to shine for billions of years, thus providing ample time for life to evolve.
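Kelvin's objection can be made concrete with an order-of-magnitude estimate. The sketch below assumes, as Kelvin and Hermann von Helmholtz did, that the sun's only energy source is gravitational contraction, and divides the gravitational energy $GM^2/R$ by the present solar luminosity. The solar constants are standard modern values supplied for illustration, not figures from the text, and a numerical prefactor of order unity (which depends on the sun's internal structure) is ignored.

```python
# Order-of-magnitude Kelvin-Helmholtz timescale: how long could the sun
# shine at its present luminosity if gravitational contraction were its
# only energy source? (Assumed modern solar values; prefactor ~1 omitted.)

G = 6.674e-11              # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30           # solar mass, kg
R_SUN = 6.957e8            # solar radius, m
L_SUN = 3.828e26           # solar luminosity, W
SECONDS_PER_YEAR = 3.156e7


def kelvin_helmholtz_years(mass=M_SUN, radius=R_SUN, luminosity=L_SUN):
    """Gravitational energy G M^2 / R divided by luminosity, in years."""
    energy_joules = G * mass**2 / radius
    return energy_joules / luminosity / SECONDS_PER_YEAR


if __name__ == "__main__":
    t = kelvin_helmholtz_years()
    print(f"Contraction lifetime ~ {t:.1e} years")  # a few tens of millions
```

The result, a few tens of millions of years, falls far short of the billions of years that nuclear fusion turned out to make available.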

1.4. INTELLIGENT DESIGN

In recent years we have seen Paley's argument exhumed with an attempt to place it on a sounder scientific basis, or, at least, to make it seem so. In 1996, biochemist Michael Behe published Darwin's Black Box: The Biochemical Challenge to Evolution, which claimed that some biological structures are “irreducibly complex.”15 That is, living systems possess parts that could not have evolved from simpler forms since they had no function outside of the system of which they were part. His examples included bacterial flagella and blood clotting. Evolutionary biologists, of whom Behe is not one, easily demonstrated the flaw in this argument. Parts of biological structures often evolve with one function and then change function when joining up with another system. This was well known before Behe wrote his book, and many examples have since been described.

In 1999, theologian William Dembski published a book called Intelligent Design: The Bridge between Science & Theology, claiming that he could mathematically demonstrate that living systems contained more information than could be generated by natural means alone.16 While he had a number of other arguments based on information theory, they all boiled down to what he called the “law of conservation of information.” This law, Dembski asserted, required that the amount of information output by a physical system could never exceed the amount of information input. Thus it followed that the large amounts of information contained in living systems must have had an external input of information provided by an intelligent designer outside nature, who shall remain nameless.17

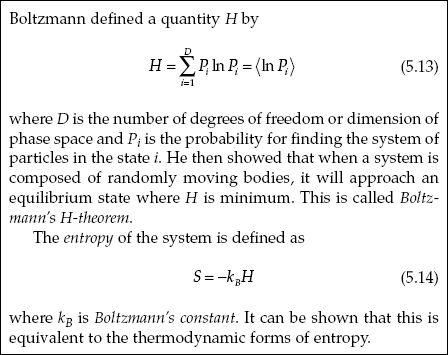

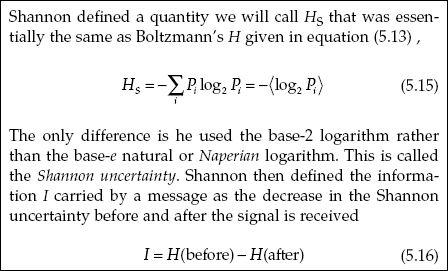

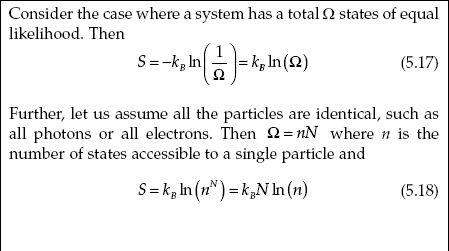

Hundreds of papers and dozens of books have refuted Dembski (as well as Behe), and I need not refer to them all. I will just mention his misuse of the concept of information.18 Dembski used the definition of information provided by the father of communication theory, Claude Shannon, in 1948.19 Shannon defined the information transferred in a communication process to be equal, within a constant, to the decrease in the entropy of the system. Here he used the conventional definition of entropy in statistical mechanics that was provided by Ludwig Boltzmann in the late nineteenth century.

Now, it is well known that entropy is not a conserved quantity such as energy. The second law of thermodynamics allows for the entropy of a closed system to increase with time. It follows that information is not a conserved quantity and Dembski's law of conservation of information is provably wrong. On the empirical side, many examples can be given of physical systems creating information. A spinning compass needle provides no information on direction. When it slows to a stop, it “creates” the information of the direction North.
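The compass example can be put in Shannon's terms: information gained equals the decrease in entropy, $H = -\sum_i p_i \log_2 p_i$, of the probability distribution over possible directions. The sketch below is a minimal illustration that assumes, hypothetically, that the needle's direction is resolved into 360 one-degree bins. It tracks only the Shannon entropy of our uncertainty about the pointing direction, not the thermodynamic entropy released as friction stops the needle.

```python
import math


def shannon_entropy_bits(probs):
    """Shannon entropy H = -sum p log2 p, in bits; p = 0 terms contribute 0."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


N_BINS = 360  # hypothetical resolution: one-degree bins for the needle direction

# Spinning needle: every direction is equally likely.
spinning = [1.0 / N_BINS] * N_BINS
# Stopped needle: all probability sits in the bin pointing North (bin 0).
stopped = [1.0] + [0.0] * (N_BINS - 1)

h_before = shannon_entropy_bits(spinning)
h_after = shannon_entropy_bits(stopped)
information_gained = h_before - h_after  # decrease in entropy, in bits

print(f"H before = {h_before:.2f} bits, H after = {h_after:.2f} bits")
print(f"information gained = {information_gained:.2f} bits")
```

With 360 bins the needle "creates" $\log_2 360 \approx 8.49$ bits as it comes to rest; a finer hypothetical resolution would give a larger number, but in every case the output information exceeds the zero bits available while the needle was spinning.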

The particular form of intelligent design proposed by Behe and Dembski received a deadly blow in December 2005, when a federal court in Dover, Pennsylvania, ruled that it was motivated by religion and thus would violate the Establishment Clause of the US Constitution if taught as science in public schools.20 There can be no doubt that intelligent design claims are motivated by religion. However, in his ruling the judge went further than necessary by declaring that intelligent design is not science. It is my professional opinion and that of several philosophers that intelligent design is in fact science, just wrong science. That should be sufficient to keep it out of classrooms along with phlogiston and the theory that Earth is flat, except as historical references.

2.1. FINE-TUNING

For years now theists have thought they have the final, killer scientific argument for the existence of God. They have claimed that the physical parameters of the universe are delicately balanced (“fine-tuned”) so that any infinitesimal change would make life as we know it impossible. Even atheist physicists find this so-called “anthropic principle” difficult to explain naturally, and many think they need to invoke multiple universes to do so.

2.2. HISTORY

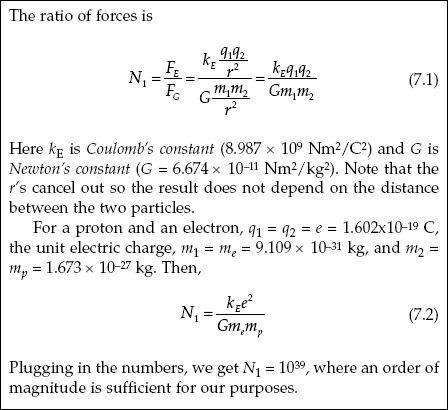

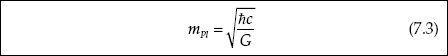

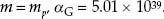

Let us review the history of the notions of fine-tuning and the anthropic principles. In 1919, physicist Hermann Weyl expressed his puzzlement that the ratio of the electromagnetic force to the gravitational force between an electron and a proton is such a huge number, $N_1 = 10^{39}$.1 Weyl wondered why this should be the case, expressing his intuition that “pure” numbers occurring in the description of physical properties, such as π, which do not depend on any system of units, should most naturally occur within a few orders of magnitude of unity. Unity, or zero, you can expect “naturally.” But why $10^{39}$? Why not $10^{57}$ or $10^{-123}$? Some principle must select out $10^{39}$. This is called the large number puzzle.

In 1923 Arthur Eddington commented: “It is difficult to account for the occurrence of a pure number (of order greatly different from unity) in the scheme of things; but this difficulty would be removed if we could connect it to the number of particles in the world—a number presumably decided by accident.”2 He estimated that number, now called the “Eddington number,” to be $N = 10^{79}$. Well, $N$ is not too far from the square of $N_1$. This coincidence was no doubt accidental, since we now know that it corresponds just to the number of atoms in the visible universe, which contains a billion times as many photons and neutrinos and many billions of times more beyond our horizon.3
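Weyl's number is easy to check. Both the electric and the gravitational attraction between an electron and a proton fall off as $1/r^2$, so the separation cancels and $N_1 = e^2/(4\pi\varepsilon_0 G m_e m_p)$ is a pure number. The sketch below evaluates it in SI units and again in Gaussian (cgs) units to show that the result does not depend on the system of units, then compares Eddington's $N = 10^{79}$ with $N_1^2$. The constants are CODATA 2018 values supplied for illustration; they do not appear in the text.

```python
import math

# CODATA 2018 values, SI units (supplied for illustration)
E_CHARGE = 1.602176634e-19      # elementary charge, C
EPSILON_0 = 8.8541878128e-12    # vacuum permittivity, F/m
G_SI = 6.67430e-11              # gravitational constant, m^3 kg^-1 s^-2
M_E = 9.1093837015e-31          # electron mass, kg
M_P = 1.67262192369e-27         # proton mass, kg


def force_ratio_si():
    """Coulomb / Newtonian attraction between an electron and a proton (SI)."""
    coulomb = E_CHARGE**2 / (4 * math.pi * EPSILON_0)   # F * r^2
    gravity = G_SI * M_E * M_P                          # F * r^2
    return coulomb / gravity


def force_ratio_gaussian():
    """The same ratio in Gaussian units, e^2 / (G m_e m_p), masses in grams."""
    e_esu = 4.80320471e-10      # elementary charge, statcoulomb
    g_cgs = 6.67430e-8          # gravitational constant, cm^3 g^-1 s^-2
    return e_esu**2 / (g_cgs * (M_E * 1e3) * (M_P * 1e3))


n1 = force_ratio_si()
print(f"N1 (SI)       = {n1:.3e}")
print(f"N1 (Gaussian) = {force_ratio_gaussian():.3e}")

EDDINGTON_N = 1e79  # Eddington's estimate, as quoted in the text
print(f"N / N1^2 = {EDDINGTON_N / n1**2:.2f}")
```

Both unit systems give $N_1 \approx 2.3 \times 10^{39}$, and $N/N_1^2$ comes out at about 2, which is the sense in which $N$ is "not too far" from $N_1^2$.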

These musings may bring to mind the measurements made on the Great Pyramid of Egypt in 1864 by the Astronomer Royal for Scotland, Charles Piazzi Smyth. He found accurate estimates of π and the distance from Earth to the sun, and other strange “coincidences” buried in his measurements.4 However, further analysis revealed that these were simply the result of Smyth's selective toying with the numbers.5 Still, even today some people believe that the pyramids hold secrets about the universe. Ideas like this never seem to die, no matter how deep in the Egyptian sand they may be buried.

Look around at enough numbers and you are bound to find some that appear connected. Most physicists, therefore, did not seriously regard the large number puzzle until one of their most brilliant members, Paul Dirac, took an interest. Few physicists ignored anything Dirac had to say.

Dirac pointed out that $N_1$ is the same order of magnitude as another pure number, $N_2$, that gives the ratio of a typical stellar lifetime to the time for light to traverse the radius of a proton. That is, he found two seemingly unconnected large numbers to be of the same order of magnitude.6 If one number being large is unlikely, how much more unlikely is another to come along with about the same value?

In 1961 physicist Robert Dicke pointed out that $N_2$ is necessarily large in order that the lifetime of typical stars be sufficient to generate heavy chemical elements such as carbon. Furthermore, he showed that $N_1$ must be of the same order as $N_2$ in any universe with heavy elements.7 This was the first of the anthropic coincidences: if $N_1$ did not approximately equal $N_2$, life as we know it would not exist.

The heavy elements did not get fabricated straightforwardly. According to the big bang theory, only hydrogen, deuterium (the isotope of hydrogen containing one proton and one neutron in its nucleus), helium, and lithium were formed in the early universe. Carbon, nitrogen, oxygen, iron, and the other elements of the chemical periodic table were not produced until billions of years later. These billions of years were needed for stars to form and in their death throes, after burning all their hydrogen, to assemble these heavier elements out of neutrons and protons. When the more massive stars expended their hydrogen fuel, they exploded as supernovae, spraying the manufactured elements into space. Once in space, these elements cooled, mixed with the interstellar medium, and eventually formed newer stars accompanied in many instances by planets.

Billions of additional years were needed for our home star, the sun, to provide a stable output of energy so that at least one of its planets could develop highly complex life. But if the gravitational attraction between protons in stars had not been many orders of magnitude weaker than the electric repulsion, as represented by the very large value of $N_1$, stars would have collapsed and burned out long before nuclear processes could build up the periodic table from the original hydrogen and deuterium. The formation of chemical complexity is possible only in a universe of great age in terms of nuclear reaction times, or at least in a universe with other parameters close to the values they have in this one.

2.3. HOYLE'S PREDICTION

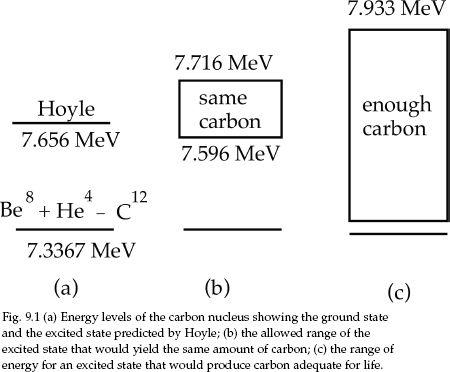

The next important step in the history of fine-tuning occurred in 1952 when astronomer Fred Hoyle used anthropic arguments to predict that an excited carbon nucleus, ${}_{6}\mathrm{C}^{12}$, has an energy level at around 7.7 MeV. Hoyle had looked closely at the nuclear processes involved and found that they appeared to be inadequate to account for the observed abundance of carbon.

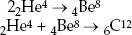

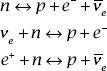

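The basic mechanism for the manufacture of carbon is the fusion of three helium nuclei into a single carbon nucleus:

$$
{}_{2}\mathrm{He}^{4} + {}_{2}\mathrm{He}^{4} + {}_{2}\mathrm{He}^{4} \rightarrow {}_{6}\mathrm{C}^{12}
$$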

The subscript on the chemical symbol tells us how many protons are in the nucleus: 2 for ${}_{2}\mathrm{He}^{4}$, 6 for ${}_{6}\mathrm{C}^{12}$. This is called the atomic number and is the position number in the periodic table. Although it is redundant with the chemical symbol, I have included it for pedagogical purposes. The superscripts give the total number of nucleons, that is, protons plus neutrons in each nucleus. This is called the nucleon number or mass number and relates to the atomic weight in chemistry. The total number of nucleons is conserved, that is, remains constant, in a nuclear reaction. So does the total atomic number, since it measures nuclear electrical charge and this is also conserved. Nuclei with the same atomic number but different nucleon numbers are called isotopes of that element.
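The two conservation rules amount to simple bookkeeping: add up the atomic numbers and the nucleon numbers on each side of a reaction and check that the totals match. The sketch below applies that check to the three-helium fusion and to the two steps of the beryllium route described next; the `Nucleus` record and the function name are illustrative choices, not anything from the text. A deliberately unbalanced reaction is included as a negative control. Photons emitted in these reactions carry neither charge nor nucleons, so they are left out.

```python
from collections import namedtuple

# A nucleus labelled by atomic number Z (protons) and nucleon number A.
Nucleus = namedtuple("Nucleus", ["symbol", "Z", "A"])

HE4 = Nucleus("He", 2, 4)
BE8 = Nucleus("Be", 4, 8)
C12 = Nucleus("C", 6, 12)


def conserves_charge_and_nucleons(reactants, products):
    """True if total Z (nuclear charge) and total A (nucleons) are unchanged."""
    same_z = sum(n.Z for n in reactants) == sum(n.Z for n in products)
    same_a = sum(n.A for n in reactants) == sum(n.A for n in products)
    return same_z and same_a


reactions = {
    "He4 + He4 + He4 -> C12": ([HE4, HE4, HE4], [C12]),
    "He4 + He4 -> Be8": ([HE4, HE4], [BE8]),
    "Be8 + He4 -> C12": ([BE8, HE4], [C12]),
    "He4 + He4 -> C12 (unbalanced control)": ([HE4, HE4], [C12]),
}

for label, (lhs, rhs) in reactions.items():
    print(f"{label}: {conserves_charge_and_nucleons(lhs, rhs)}")
```

The first three reactions balance; the control fails because two helium nuclei carry only 4 protons and 8 nucleons, not the 6 and 12 of carbon.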

The probability of three bodies coming together simultaneously is very low, and some catalytic process in which only two bodies interact at a time must be assisting. An intermediate process in which two helium nuclei first fuse into a beryllium nucleus, which then interacts with the third helium nucleus to give the desired carbon nucleus, had earlier been suggested by astrophysicist Edwin E. Salpeter:8

$$
\begin{aligned}
{}_{2}\mathrm{He}^{4} + {}_{2}\mathrm{He}^{4} &\rightarrow {}_{4}\mathrm{Be}^{8} \\
{}_{4}\mathrm{Be}^{8} + {}_{2}\mathrm{He}^{4} &\rightarrow {}_{6}\mathrm{C}^{12}
\end{aligned}
$$

Hoyle showed that this still was not sufficient unless the carbon nucleus had an excited state at 7.7 MeV to provide for a high reaction probability. A laboratory experiment was undertaken, and, sure enough, a previously unknown excited state of carbon was found at 7.65 MeV.9
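Why an excited state near 7.7 MeV matters can be seen by comparing it with the energy brought in by the helium nuclei. If three helium-4 nuclei merged directly into ground-state carbon-12, the mass difference would release about 7.27 MeV, so a carbon level lying slightly above that energy lets the reaction proceed through a resonance. The sketch below does this arithmetic; the atomic masses and the mass-energy conversion factor are standard values supplied for illustration, not figures from the text.

```python
# Where does the 7.65 MeV excited state of carbon sit relative to the
# combined rest energy of three helium-4 nuclei? (Assumed standard masses.)

U_TO_MEV = 931.49410242        # energy equivalent of one atomic mass unit, MeV
MASS_HE4_U = 4.00260325413     # atomic mass of helium-4, u
MASS_C12_U = 12.0              # atomic mass of carbon-12, u (exact by definition)
HOYLE_STATE_MEV = 7.65         # measured excitation energy quoted in the text

# Energy released if three He-4 atoms merged straight into ground-state C-12.
# Using atomic masses, the electron masses cancel (6 electrons on each side).
threshold_mev = (3 * MASS_HE4_U - MASS_C12_U) * U_TO_MEV

print(f"3-alpha threshold above C-12 ground state: {threshold_mev:.3f} MeV")
print(f"Hoyle state lies {HOYLE_STATE_MEV - threshold_mev:.2f} MeV above it")
```

The excited state sits only about 0.38 MeV above the three-helium threshold, close enough that helium nuclei with ordinary stellar thermal energies can reach it.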

Nothing can gain you more respect in science than the successful prediction of a new phenomenon. Hoyle's prediction provided scientific legitimacy for anthropic reasoning. But he also gave believers (he claimed to be an atheist himself) something to crow about, remarking,

A commonsense interpretation of the facts suggests that a superintellect has monkeyed with physics, as well as with chemistry and biology, and that there are no blind forces worth speaking about in nature. The numbers one calculates from the facts seem to me so overwhelming as to put this conclusion almost beyond question.10

2.4. THE ANTHROPIC PRINCIPLES

In 1974, physicist Brandon Carter introduced the term anthropic principle to describe the anthropic coincidences.11 In the weak form of the principle, the location in the universe in which we live must be compatible with the fact that we are here to observe it. In the strong form, at least at some stage, the universe itself must have been compatible with the existence of observers.

In its weak form, the anthropic principle simply points out the obvious fact that if the laws and parameters of nature were not suitable for life, we would not be here to talk about them. As a simple example, physical constants and the laws that contain them determined that the atmosphere of Earth would be transparent to wavelengths of light from about 350 nanometers to 700 nanometers, where a nanometer is a billionth of a meter. The human eye is sensitive to the same region. The theistic interpretation is that God designed our eyes so we could see in front of us. The natural explanation is that our eyes evolved to be sensitive in that region.

One possible natural explanation for the anthropic coincidences is that multiple universes exist with different physical constants and laws and our life-form evolved in the one suitable for us. Theists vehemently object that we have no evidence for multiple universes and, furthermore, we are violating Occam's razor by introducing multiple entities “beyond necessity.” Even some atheistic physicists criticize the idea as “non-scientific.” However, cosmologist and evangelical Christian Don Page, who was Stephen Hawking's student, finds the idea of multiple universes congenial to theism. He suggests, “God might prefer a multiverse as the most elegant way to create life and the other purposes He has for His Creation.”12

Modern cosmological theories do indicate that ours is just one of an unlimited number of universes, and theists can give no reason for ruling them out. In this book I do not spend a lot of time with philosophical or “commonsense” reasoning. Rather I look directly at the various parameters that have been proposed as being fine-tuned and see if plausible explanations can be found within existing knowledge.

None of the books and articles I have seen that promote the anthropic coincidences as scientific evidence for God, including those written by scientists, present precise scientific arguments. Although many journal articles have been published on special topics, the only place one can find a detailed discussion of the physics of fine-tuning, with equations, is in the 1986 tome by physicists John Barrow and Frank Tipler, The Anthropic Cosmological Principle. This book provides an exhaustive historical, philosophical, and scientific survey of the notion that the universe is fine-tuned for humanity, without taking a stand one way or the other.13

Barrow and Tipler defined three different forms of the anthropic principle, the first of which is as follows:

Weak Anthropic Principle (WAP):

The observed values of all physical and cosmological quantities are not equally probable but they take on values restricted by the requirement that there exist sites where carbon-based life can evolve and by the requirement that the Universe be old enough for it to have already done so.14

This is essentially the same as Carter's definition. As we saw above, all the WAP seems to say is that if the universe were not the way it is, we would not be here talking about it. If the mass of the electron were different, people would look different. However, it does not tell us why the constants have the values they do rather than some other value that would make life impossible.

Barrow and Tipler formulated the strong anthropic principle as follows, which differs from Carter's definition:

Strong Anthropic Principle (SAP):

The universe must have those properties that allow life to develop within it at some stage in its history.15

Barrow and Tipler noted that SAP can have three interpretations:

1. There exists one possible universe “designed” with the goal of generating and sustaining “observers.”

2. Observers are necessary to bring the universe into being.

3. An ensemble of other different universes is necessary for the existence of our universe.

The authors identify a third version of the anthropic principle:

Final Anthropic Principle (FAP):

Intelligent information-processing must come into existence in the universe, and, once it comes into existence, it will never die out.

The late polymath Martin Gardner referred to this as the Completely Ridiculous Anthropic Principle (CRAP).16

2.5. FINE-TUNING TODAY

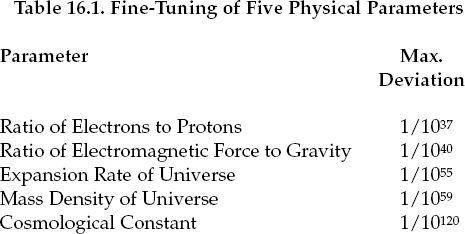

Since Barrow and Tipler, many books and articles have appeared that have made much of the anthropic coincidences. As we have seen from the several quotations I have already presented, believers have little doubt that here is indisputable evidence for a creator. William Lane Craig's mind “reels” at the incomprehensibly small probabilities for any natural explanation. He says there are fifty fine-tuned parameters, but he does not list them. A list of thirty-four parameters that are claimed to be fine-tuned has been assembled by microbiologist Rich Deem on his God and Science website.17 Deem also lists, without details, estimates of the precision at which each parameter had to be tuned to produce our kind of life. These are the numbers that make Craig's mind reel.

Deem's main reference is physicist and Christian apologist Hugh Ross and his popular book The Creator and the Cosmos, first published in 1993.18 Ross is the founder of Reasons to Believe, which describes itself as an “international and interdenominational science-faith think tank providing powerful new reasons from science to believe in Jesus Christ.”19 A list of twenty-six claimed “design evidences” can be found in the book.20 Ross has further developed his arguments in a chapter called “Big Bang Model Refined by Fire” in the anthology Mere Creation: Science, Faith & Intelligent Design.21

Without giving any more quotations, I will just list a few additional books by believers in my private library that call on the anthropic coincidences to make their case for God:

- Atheism Is False, by David Reuben Stone22

- A Case against Accident and Self-Organization, by Dean L. Overman23

- Life after Death, by Dinesh D'Souza24

- The Language of God, by Francis Collins25

These and many more can be found in university and public libraries, and I have studied a sufficient number of these efforts to have a good grasp of the claims being made.

In addition, a number of reputable, nontheistic scientists have written popular books on fine-tuning. They try to keep an open mind on the theological implications but still express puzzlement alongside the enthusiasm typical of science writers anxious to exhibit the wonders of the universe to their readers. Again, in my private library I find:

- The Constants of Nature, by John Barrow26

- Just Six Numbers, by Martin Rees27

- The Goldilocks Enigma, by Paul Davies28

Needless to say, theologians and philosophers have not ignored fine-tuning. Perhaps the best-known theologian arguing for the existence of God is Richard Swinburne. His article, “Argument from the Fine-Tuning of the Universe,”29 and a review, “The Anthropic Principle Today,”30 by philosopher John Leslie can be found in a very useful set of essays, Modern Cosmology & Philosophy, edited by Leslie.31

In this book I will look in detail at all of the most significant claims of fine-tuning. But first I need to establish some basic facts about physics and cosmology that are much misunderstood, or at least misrepresented, especially in the Christian apologetic community.

3.1. MODELS

The process of doing physics is no different from that of any other natural science. We make observations, almost exclusively quantitative measurements, and describe these observations with mathematical models. We attempt to use those models to correlate and predict other observations. When a model's predictions are risky, that is, when they have a good chance of turning out wrong, then the model can be fairly tested by checking those predictions. If the test fails, the model is falsified and we forget about it, or, more frequently, we modify it and try again. Models that cannot be falsified, that explain everything, explain nothing.

At some point, after a model has passed many risky tests, it may be granted the exalted status of “theory.” The two models we will discuss the most in this book, the standard model of particles and forces and the standard (concordance) model of cosmology, have legitimately achieved theory status by their success at making many risky predictions. Nevertheless, they are still referred to as “models.” This may be the unfortunate consequence of creationists' attempt to demean Darwinian evolution in the public's mind by referring to it as “just a theory.” Or perhaps it is just habit.

Scientists and philosophers of science have never been able to agree on a demarcation criterion that precisely distinguishes science from nonscience. The falsification criterion proposed by Karl Popper and Rudolf Carnap is still commonly referred to but has proved unsatisfactory, at least to most philosophers.1 While not everything that is falsifiable, such as astrology, is science, my own experience in physics research has been that the failure of a logically consistent model to agree with observations is the only reason that we have to discard the model. Without falsification science would be an anarchy of logically consistent but still useless models that simply suit someone's fancy. But falsification does not solve the demarcation problem.

As is often pointed out, since we can never know whether a model might be falsified in the future, its current agreement with the data is never sufficient to verify the model with 100 percent certainty. However, the admitted fact that science is always tentative is often overemphasized to the point where laypeople wonder if science can say anything certain. When a model has passed many risky tests, tests that could have falsified it, we can begin to have confidence that it is telling us something about the real world with certainty approaching 100 percent.

It's not likely that we will someday discover that Earth is flat after all, or that it really is only six thousand years old. In cases like these we can say that science has “proved” something “beyond a reasonable doubt.” This still leaves open a tiny possibility for change but assures us we can take these as scientific facts for all practical purposes. If we took the statement that science is tentative too literally, we would never set foot in an airplane nor allow ourselves to be put to sleep for surgery.

A good example of a model that we can accept with some confidence is inflationary cosmology, which will be discussed in chapter 5. This model has passed a number of tests that could have falsified it. It also helps explain much of what is observed in cosmology, including, as we will see, several observations that theists insist are examples of fine-tuning. However, being relatively recent and still having some theoretical problems, inflationary cosmology remains subject to further testing and consideration of alternatives.

3.2. OBSERVATIONS

Even if we cannot precisely distinguish science from nonscience, we can establish several facts about the scientific process. Most important of all, science deals with observations. If you are talking about science, you are talking about data. If you are not talking about data, you are not talking about science.

Now, it is true that string theory and other theoretical attempts to unite general relativity and quantum mechanics have yet to confront empirical tests. However, they must ultimately do so or cease to be considered scientific endeavors.

Any process must start with some definitions. The physical science process starts with operational definitions of the quantities that will be part of the models built to describe observations. The operational definition of a quantity prescribes the precise procedure one follows to measure that quantity. For example, before the atomic theory of matter was established, the absolute temperature of a body was defined as what is measured with a constant-volume gas thermometer. This made it possible for workers in one laboratory to present their results in terms of a quantity that could be unambiguously tested in any other laboratory with the same class of instrument. Other types of thermometers might be used, but these would have to be calibrated against the constant-volume gas thermometer. And that was temperature, by definition. Temperature was not some Platonic form out there in a metaphysical world beyond the laboratory. It was what you measured on a constant-volume gas thermometer.
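As a sketch of what such an operational definition looks like in practice, the function below turns two pressure readings from a constant-volume gas thermometer into a temperature. It assumes the ideal-gas limit and a single reference point, the triple point of water assigned 273.16 K (the convention adopted in 1954, which the text above does not specify); the function name and the sample readings are hypothetical. Because only the ratio of the two pressures enters, two laboratories that read their gauges in different units still report the same temperature.

```python
TRIPLE_POINT_K = 273.16  # assumed reference: triple point of water, in kelvin


def gas_thermometer_temperature(p_measured, p_triple_point):
    """Temperature read from a constant-volume gas thermometer (ideal-gas limit).

    p_measured: gas pressure with the bulb in thermal contact with the body
    p_triple_point: gas pressure, same bulb and volume, at the triple point of water
    Both pressures must be in the same unit; the unit itself cancels out.
    """
    return TRIPLE_POINT_K * p_measured / p_triple_point


# Two labs, same class of instrument, different pressure units (illustrative readings):
lab_a = gas_thermometer_temperature(50.0, 40.0)    # kilopascals
lab_b = gas_thermometer_temperature(375.0, 300.0)  # millimeters of mercury
print(lab_a, lab_b)
```

Both readings have a pressure ratio of 1.25, so both labs report about 341.45 K.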

3.3. SPACE, TIME, AND REALITY

Most people, including most physicists, believe that the models and “laws” of physics directly describe reality. That is, the objects in these models actually exist outside the paper they are written on and the concepts they contain refer directly to true aspects of the world. Consider space and time, the two concepts that form the starting point of all physical models.2 What could be more real than space and time?

Originally, space-time models assumed three dimensions of space obeying Euclidean geometry, with time an independent measure. In 1905, Einstein introduced a model called special relativity that united space and time in a single four-dimensional manifold we call space-time. In 1916, he introduced general relativity, which used a more general non-Euclidean geometry developed by mathematicians in the previous century. The surface of a sphere is an example of non-Euclidean geometry: lines of longitude, which are parallel where they cross the equator, nevertheless meet at the poles. Einstein proposed that in the vicinity of a heavy mass space is “curved” and that bodies naturally follow “geodesics” analogous to the great circles on a sphere. He thereby accounted for gravity without introducing a gravitational force. Just as an airliner takes the shortest available route between two points on Earth's surface, a great circle, a body in curved space-time follows a geodesic, the path of “least action.”
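The airliner analogy can be made concrete. The sketch below treats Earth as a perfect sphere of mean radius 6,371 km (a simplifying assumption) and compares the great-circle distance between two points, computed with the standard haversine formula, with the distance flown due east along their shared line of latitude. The function names are our own. Away from the equator the great circle, the geodesic, is the shorter route; on the equator the two routes coincide, because the equator is itself a great circle.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; Earth treated as a perfect sphere


def great_circle_km(lat1, lon1, lat2, lon2, r=EARTH_RADIUS_KM):
    """Geodesic (great-circle) distance between two points, via the haversine formula."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def along_parallel_km(lat, lon1, lon2, r=EARTH_RADIUS_KM):
    """Distance flown due east or west along a line of constant latitude (not a geodesic)."""
    return r * math.cos(math.radians(lat)) * abs(math.radians(lon2 - lon1))


# Two points at 60 degrees north, 90 degrees of longitude apart:
print(great_circle_km(60, 0, 60, 90))  # about 4,600 km
print(along_parallel_km(60, 0, 90))    # about 5,000 km
```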

Today the most fashionable model being worked on in physics is called M-theory (a generalized form of string theory) in which space-time has ten dimensions of space and one of time. Seven of the ten spatial dimensions are curled up on such a tiny scale as to be invisible to even our most powerful instruments, but they have degrees of freedom that give particles their “internal” properties, such as spin and electric charge.

In his popular book on fine-tuning, Just Six Numbers, the Astronomer Royal of the United Kingdom, Martin Rees, proposes that the dimensionality of the universe is one of six parameters that appear particularly adjusted to enable life.3 He presents arguments known from the time of Newton that three spatial dimensions are special. In particular, in three dimensions gravity obeys an inverse square law, without which stable planetary orbits would not be possible.
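The orbital argument Rees draws on can be checked with a short calculation. The sketch assumes Newtonian gravity extended to n spatial dimensions through Gauss's law, so that the attractive force falls off as 1/r^(n-1), and asks whether a circular orbit sits at a minimum of the effective potential U_eff(r) = L²/(2r²) + V(r) for a unit mass. For a force proportional to 1/r^k, the curvature of U_eff at the circular radius works out to be proportional to 3 − k, so among n ≥ 3 only n = 3 gives stable orbits; the code confirms the sign numerically. The function names and the choice L = C = 1 are illustrative.

```python
def effective_potential(r, k, L=1.0, C=1.0):
    """U_eff for unit mass and attractive force F = -C / r**k (requires k != 1)."""
    return L**2 / (2 * r**2) - C / ((k - 1) * r ** (k - 1))


def circular_orbit_is_stable(n_space_dims, L=1.0, C=1.0, h=1e-4):
    """True if a circular orbit is a minimum of the effective potential."""
    if n_space_dims < 3:
        raise ValueError("sketch handles n >= 3 only (n = 2 gives a logarithmic potential)")
    k = n_space_dims - 1  # Gauss's law in n dimensions: force ~ 1/r^(n-1)
    if k == 3:
        # Centrifugal and gravitational terms both scale as 1/r^3:
        # there is no isolated minimum, hence no stable circular orbit.
        return False
    r0 = (L**2 / C) ** (1.0 / (3 - k))  # radius where dU_eff/dr = 0

    def u(r):
        return effective_potential(r, k, L, C)

    # Central finite-difference estimate of the second derivative at r0.
    curvature = (u(r0 + h) - 2 * u(r0) + u(r0 - h)) / h**2
    return curvature > 0


for n in range(3, 7):
    print(n, circular_orbit_is_stable(n))
```

Only three spatial dimensions print `True`; four is the marginal case, and five or more are unstable.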

Clearly Rees regards the dimensionality of space as a property of objective reality. But is it? I think not. Since the space-time model is a human invention, so must be the dimensionality of space-time. We choose three dimensions of space, plus one of time, because that fits the data. In M-theory we choose eleven dimensions in all. We use whatever works, but that does not mean reality corresponds to our models one-to-one.

The same thing can be said about the geometry of space. That's our invention, too. It happens to be non-Euclidean in Einstein's beautiful model, but perhaps someday a simpler, Euclidean model will be found with gravity treated in a different way.

When physicists talk about space and time, as in general relativity and M-theory, they implicitly assume that they are describing reality. At least, they talk as if they are. Time is “real.” Space is “real” and it “really” has three, ten, or some other number of dimensions still to be determined by experiment. By “real” here I am referring to ontology, not mathematics.

But how do physicists know that space, time, or any other of their invented quantities are real? All they can know is that they have invented some models with which they are trying to describe observations. If they agree with observation, then the models no doubt have something to do with reality. However, do physicists have any basis for assuming that the models and “laws” of physics, which are human inventions, describe reality precisely as it is? Why couldn't reality be something totally different that gives the same observations?

This was certainly Plato's view in his metaphor of the cave. Prisoners are chained inside a cave so they can see only the wall. Their only knowledge of what is going on outside their view is obtained from the shadows on the wall. Are our observations just shadows of what is truly real? We can never know.

The success of quantum mechanics has made the question of whether our models describe reality as it is a lot more profound. Is an object “really” a particle or a wave? Does a particle “really” have a momentum after its position has been measured? Is this particle's wave function a “real” field that collapses instantaneously far out to the edge of the universe and back all the way in the past when the position is measured here and now? If the wave function is not real, then what about the electric and magnetic fields that are associated in quantum mechanics with the wave function of a photon? Few physicists would call them unreal, but no one can prove that they are real.

A common refrain among theoretical physicists is that the fields of quantum field theory are the “real” entities while the particles they represent are images like the shadows in Plato's cave. As one who did experimental particle physics for forty years before retiring in 2000, I say, “Wait a minute!” No one has ever measured a quantum field, or even a classical electric, magnetic, or gravitational field. No one has ever measured a wavicle, the term used to describe the so-called wavelike properties of a particle. You always measure localized particles. The interference patterns you observe when sending light through slits are not seen in the measurements of individual photons, only in the statistical distributions of an ensemble of many photons. To me, it is the particle that comes closest to reality. But then, I cannot prove it is real either. I will expand on this point in chapter 15.
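The statistical character of the interference pattern is easy to simulate. In the sketch below each photon is recorded as a single point on the screen, drawn at random from an idealized two-slit intensity I(x) = cos²(πx). The units make the fringe spacing equal to 1, and the single-slit diffraction envelope is ignored; both are simplifying assumptions, and the function names are hypothetical. A single hit tells you nothing about fringes. Only after many hits do the detections pile up at the bright fringes and avoid the dark ones.

```python
import math
import random


def detect_photons(n, half_width=2.0, seed=0):
    """Return n detection positions on the screen, one localized point per photon.

    Positions are drawn by rejection sampling from the idealized two-slit
    intensity I(x) = cos^2(pi * x), in units where the fringe spacing is 1.
    """
    rng = random.Random(seed)
    hits = []
    while len(hits) < n:
        x = rng.uniform(-half_width, half_width)
        if rng.random() < math.cos(math.pi * x) ** 2:
            hits.append(x)
    return hits


def count_near(hits, center, window=0.1):
    """Count detections within +/- window of a screen position."""
    return sum(1 for x in hits if abs(x - center) < window)


hits = detect_photons(20000)
print(hits[:5])  # a few individual hits: just scattered points
print("near a bright fringe (x = 0):", count_near(hits, 0.0))
print("near a dark fringe (x = 0.5):", count_near(hits, 0.5))
```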

We have to think about what it is we actually do when we make measurements and fit them to models. We do not use the models to build up some metaphysical system. We use the models to predict the outcomes of other measurements and to aid us in putting these to practical use. Consider the example of transmitting an electromagnetic signal from one point to another in empty space. For simplicity, let us try to send a signal of a single frequency. We set up an oscillating electric current in an antenna and tune a receiver attached to a distant antenna to the frequency of that oscillation. We then use Maxwell's equations to calculate the form of the electromagnetic wave that will propagate through space at the speed of light from the first antenna to the second. But we never see that wave. We just measure an oscillating current in the receiver. In other words, it does not matter whether or not the electromagnetic field is “real.” It is part of a model that we use to describe the data but it never enters into the measurement process at either end of the signal.
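Here is a deliberately minimal sketch of that bookkeeping. It assumes an idealized far-field link in which the received signal is the transmitted current delayed by distance/c, with amplitude falling off as 1/distance. The lumped `coupling` factor standing in for both antennas and all function names are hypothetical. The point is structural: what goes in and what comes out are both currents, and the wave enters only as the intermediate step that fixes the delay and the attenuation.

```python
import math

C = 299_792_458.0  # speed of light in a vacuum, m/s


def transmitter_current(t, freq_hz, amplitude=1.0):
    """Measured oscillating current driven into the sending antenna."""
    return amplitude * math.sin(2 * math.pi * freq_hz * t)


def predicted_receiver_current(t, distance_m, freq_hz, coupling=1.0):
    """Current the model predicts in the receiving antenna at time t.

    The propagating wave is never returned here; it is used only to supply
    the delay distance/c and the far-field 1/distance falloff.
    `coupling` is a hypothetical lumped factor for both antennas.
    """
    delay = distance_m / C
    return (coupling / distance_m) * transmitter_current(t - delay, freq_hz)


distance = 30_000.0  # 30 km
print(distance / C)  # delay in seconds, roughly 100 microseconds
```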

Now, none of this should be interpreted as meaning that physics is not to be taken seriously. When I say physical models are human inventions, I mean the same as if I were saying that the camera is a human invention. Like the camera, the models of physics very usefully describe our observations. When they do not, the model or the camera is discarded. I am simply repeating what many philosophers have pointed out over the centuries, that our observations are not pure but are operated on by our cognitive system composed of our senses and the brain that analyzes the data from those senses. Those models need not correspond precisely, or even roughly, to whatever reality is out there—although they probably do at least for large objects. The moon is probably real. But the gravitational field does not have to be.

Now, this may seem like pedantic philosophizing, but it is important when we start talking about God fine-tuning the parameters of our models. Why should quantities that are simply human artifacts used in describing nature have to have external forces setting their values?

Still, I realize that I open myself up to some tough questions by taking this point of view. If the models and parameters are just human inventions, why should they have anything to do with objective reality? Well, they are not arbitrary since they have to agree with observations, not just roughly but with quantitative accuracy. Furthermore, I have already admitted that the moon is probably real. Where do I draw the line? Let's say macroscopic bodies that we see with unaided eyes are real. Does that mean that bacteria we can see only with a microscope are imaginary? No biologist would let me get away with that.

It is not until you get to the “submicroscopic” quantum level that the reality issue comes up. There, our models include things such as “virtual particles” with imaginary mass and wave functions that propagate instantaneously throughout the universe. Later, after we have developed physics ideas further, I will delve a little into speculative metaphysics just to show that a plausible and consistent, if unprovable, picture exists for the reality behind observations.
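To see where an “imaginary mass” comes from, consider the photon exchanged when a charged particle scatters elastically off a much heavier target, with the target's recoil neglected (a simplifying assumption). In natural units (c = 1), the exchanged photon carries no energy but does carry momentum. Its four-momentum squared, q0² − |q|², is therefore negative, and the “mass” obtained by taking the square root is imaginary. The function names are hypothetical.

```python
import cmath
import math


def momentum_transfer(p, theta):
    """Four-momentum (q0, qx, qy) carried by the virtual photon, natural units (c = 1).

    The projectile, with momentum magnitude p along x, is deflected by angle
    theta off a very heavy target whose recoil is neglected, so no energy
    is transferred (q0 = 0) while the momentum changes direction.
    """
    q0 = 0.0
    qx = p - p * math.cos(theta)
    qy = -p * math.sin(theta)
    return q0, qx, qy


def invariant_mass(q0, qx, qy):
    """sqrt(q0^2 - |q|^2): real for timelike, imaginary for spacelike four-momenta."""
    return cmath.sqrt(q0**2 - qx**2 - qy**2)


# A projectile with p = 1 GeV deflected by 90 degrees:
print(invariant_mass(*momentum_transfer(1.0, math.pi / 2)))  # purely imaginary, about 1.414j GeV
```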

3.4. PARAMETERS

The properties of the universe that are supposed to be fine-tuned for life arise from how matter is described in our models, from the tiniest distances at the subnuclear level to the greatest distances in the cosmos. The quantities that theists refer to when they talk about fine-tuning are usually either “constants” in the models of physics, such as the masses of particles and the relative strengths of forces, or physical properties used in the models of cosmology, such as the average mass density of the universe or the cosmological constant. It is also often suggested that the models of physics themselves are fine-tuned: that they might have been different and so needed the special attention of a creator to come out “just right.” But if they are human inventions, then they needed the special attention of a human to come out “just right.”

Now, since we are allowing the quantities of physics, such as the masses of particles, to take on different values, it is confusing to refer to them as “constants” and so I will call them “parameters.” Indeed, unbeknownst to most of the non-physicist authors who write about fine-tuning, some of the quantities, such as the relative strengths of forces, are not even constant in our universe but depend on the energies of the particles interacting with one another. I will discuss this in detail in chapter 10.
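For a rough illustration of a “constant” that is not constant, consider the electromagnetic coupling. At one loop, and keeping only the electron's contribution, quantum electrodynamics predicts that the effective coupling grows with the momentum scale Q as α(Q) = α / [1 − (α/3π) ln(Q²/m_e²)] for Q well above the electron mass m_e. Keeping only the electron is a simplification; the measured running also includes muons, taus, and quarks. The sketch evaluates the formula at the Z-boson mass, about 91.19 GeV. The simplified formula gives 1/α ≈ 134.5 there, already noticeably below the low-energy value of about 137; including the other charged particles brings it down to roughly 128. The function name is our own.

```python
import math

ALPHA_0 = 1 / 137.035999        # fine-structure constant at low energy
M_ELECTRON_GEV = 0.00051099895  # electron mass in GeV


def alpha_one_loop_electron_only(q_gev):
    """Effective QED coupling at momentum scale q (GeV): one loop, electron loop only.

    Intended for q well above the electron mass; at or below it,
    the low-energy value is returned.
    """
    if q_gev <= M_ELECTRON_GEV:
        return ALPHA_0
    log_term = math.log(q_gev**2 / M_ELECTRON_GEV**2)
    return ALPHA_0 / (1 - (ALPHA_0 / (3 * math.pi)) * log_term)


print(1 / alpha_one_loop_electron_only(91.1876))  # about 134.5 at the Z mass
```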

3.5. DEFINITIONS

Space and time are the two concepts that form the starting point of virtually all physical models. Over the centuries, the standard units of distance and time have been steadily refined to be as convenient and stable as possible. In 1960, the meter was defined as 1,650,763.73 wavelengths in a vacuum of the electromagnetic radiation that results from the transition between the 2p₁₀ and 5d₅ energy levels of the krypton-86 atom. In 1967, the second was defined as 9,192,631,770 periods of the radiation corresponding to the transition between the two hyperfine levels of the ground state of the cesium-133 atom.
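The two numbers in these definitions are easy to turn into more familiar quantities. The short calculation below is simple arithmetic on the defining numbers, not part of the definitions themselves. It gives the wavelength of the krypton-86 line implied by the 1960 meter, about 606 nanometers (orange light), and the duration of one cesium period implied by the 1967 second, about 0.109 nanoseconds.

```python
KR86_WAVELENGTHS_PER_METER = 1_650_763.73  # 1960 definition of the meter
CS133_PERIODS_PER_SECOND = 9_192_631_770   # 1967 definition of the second

kr_wavelength_nm = 1e9 / KR86_WAVELENGTHS_PER_METER
cs_period_ns = 1e9 / CS133_PERIODS_PER_SECOND

print(f"Kr-86 line wavelength: {kr_wavelength_nm:.2f} nm")  # 605.78 nm
print(f"Cs-133 period: {cs_period_ns:.4f} ns")              # 0.1088 ns
```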

The quantity conventionally labeled c in physics is called the “speed of light in a vacuum.” In 1905, Einstein proposed that c is a universal constant, and this became one of the axioms of his theory (model) of special relativity. By 1983, special relativity had proved so successful that the universality of c was accepted as a fact by the scientific community and built into the very definitions of space and time.

In the International System of Units (SI), the distance between two points in space is measured in meters. Until 1983, the meter was defined independently of the second. In that year, by international agreement, the meter was redefined as the distance light travels in a vacuum in 1/299,792,458 second. That is, the speed of light in a vacuum is c = 299,792,458 meters per second by definition.

In general, then, time is what you measure on a clock, and distance is the time, measured on the same clock, that light takes to travel between two points. The significance of this agreement has never been fully understood, either by laypeople or by many physicists and philosophers. The quantity c cannot be fine-tuned. It is fixed by definition.
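One way to see that c is now a conversion factor rather than a measured result: once the second is fixed by the cesium clock and the meter by the light travel time, any distance follows from a count of clock ticks by pure arithmetic. The sketch below uses exact rational arithmetic and a hypothetical helper name to convert a tick count into meters. Light traveling for exactly 9,192,631,770 ticks (one second) covers exactly 299,792,458 meters, and by construction no measurement can make it come out otherwise.

```python
from fractions import Fraction

C_M_PER_S = 299_792_458         # exact, by the 1983 definition of the meter
CS_TICKS_PER_S = 9_192_631_770  # exact, by the 1967 definition of the second


def light_travel_distance_m(ticks):
    """Distance in meters that light covers in a vacuum during `ticks` cesium periods."""
    return Fraction(C_M_PER_S) * ticks / CS_TICKS_PER_S


print(light_travel_distance_m(CS_TICKS_PER_S))  # 299792458
print(float(light_travel_distance_m(1)))        # about 0.0326 m per tick
```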

But how can this be? How can the speed of some physical process be simply declared by fiat? Light travels so many meters per second. Isn't that a measurable quantity just like a major-league pitcher's fastball?