Morton Hunt

THE STORY OF

PSYCHOLOGY

Morton Hunt has been a freelance writer specializing in the behavioral sciences since 1949. His articles have appeared in many national magazines, including The New Yorker and The New York Times, and have won him numerous prizes including the Westinghouse A.A.A.S. Award for best science article of the year. He has written twenty-one books, the best known of which are The World of the Formerly Married (about the lives and psychology of separated and divorced people), The Universe Within (cognitive science), and the earlier edition of this present book. He lives in Gladwyne, Pennsylvania, with his wife, writer and psychotherapist Bernice Hunt.

ALSO BY MORTON HUNT

The Natural History of Love

Her Infinite Variety: The American Woman as Lover, Mate and Rival

Mental Hospital

The Talking Cure (with Rena Corman and Louis R. Ormont)

The Thinking Animal

The World of the Formerly Married

The Affair: A Portrait of Extra-Marital Love in Contemporary America

The Mugging

Sexual Behavior in the 1970s

Prime Time: A Guide to the Pleasures and Opportunities of the New Middle Age (with Bernice Hunt)

The Divorce Experience (with Bernice Hunt)

The Universe Within: A New Science Explores the Human Mind

Profiles of Social Research: The Scientific Study of Human Interactions

The Compassionate Beast: What Science Is Discovering About the Humane Side of Humankind

How Science Takes Stock: The Story of Meta-Analysis

The New Know-Nothings: The Political Foes of the Scientific Study of Human Nature

To Bernice,

for reasons beyond counting

READER

I here put into thy hands what has been the diversion of some of my idle and heavy hours; if it has the good luck to prove so of any of thine, and thou hast but half so much pleasure in reading as I had in writing it, thou wilt as little think thy money, as I do my pains, ill bestowed.

JOHN LOCKE, “The Epistle to the Reader,”

An Essay Concerning Human Understanding

CONTENTS

Prologue: Exploring the Universe Within

A Psychological Experiment in the Seventh Century B.C.

PART ONE: PRESCIENTIFIC PSYCHOLOGY

The Forerunners: Alcmaeon, Protagoras, Democritus, Hippocrates

The “Midwife of Thought”: Socrates

The Commentators: Theophrastus, the Hellenists, the Epicureans, the Skeptics, the Stoics

Roman Borrowers: Lucretius, Seneca, Epictetus, Galen, Plotinus

The Patrist Adapters: the Patrists, Tertullian, Saint Augustine

The Patrist Reconcilers: the Schoolmen, Saint Thomas Aquinas

The Rationalists: Descartes, the Cartesians, Spinoza

The Empiricists: Hobbes, Locke, Berkeley, Hume, the Empiricist-Associationist School

German Nativism: Leibniz, Kant

PART TWO: FOUNDERS OF A NEW SCIENCE

Just Noticeable Differences: Weber

Neural Physiology: von Helmholtz

The Making of the First Psychologist

The Curious Goings-on at Konvikt

6 The Psychologist Malgré Lui: William James

Ideas of the Pre-eminent Psychologizer

7 Explorer of the Depths: Sigmund Freud

The Invention of Psychoanalysis

Dynamic Psychology: Early Formulations

Dynamic Psychology: Extensions and Revisions

“Whenever You Can, Count”: Francis Galton

The Mental Age Approach: Alfred Binet

Two Discoverers of the Laws of Behaviorism: Thorndike and Pavlov

Mr. Behaviorism: John B. Watson

Two Great Neobehaviorists: Hull and Skinner

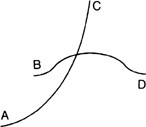

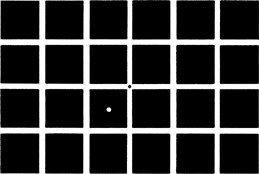

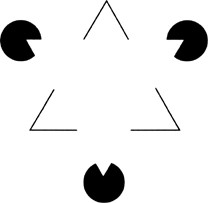

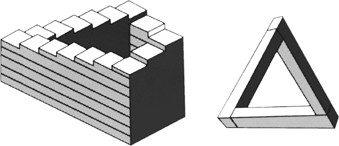

A Visual Illusion Gives Rise to a New Psychology

Out-of-Reach Bananas and Other Problems

PART THREE: SPECIALIZATION AND SYNTHESIS

Introduction: The Fissioning of Psychology—and the Fusion of the Psychological Sciences

11 The Personality Psychologists

“The Secrets of the Hearts of Other Men”

The Fundamental Units of Personality

Late Word from the Personality Front

“Great Oaks from Little Acorns Grow”

Closed Cases: Cognitive Dissonance, the Psychology of Imprisonment, Obedience, the Bystander Effect

Ongoing Inquiries: Conflict Resolution, Attribution, Others

The Value of Social Psychology

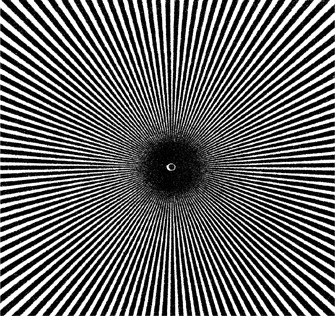

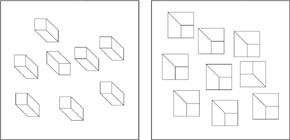

14 The Perception Psychologists

15 The Emotion and Motivation Psychologists

Is the Mind a Computer? Is a Computer a Mind?

Freud’s Offspring: The Dynamic Psychotherapists

The Patient as Laboratory Animal: Behavior Therapy

All in the Mind: Cognitive Therapy

18 Users and Misusers of Psychology

Improving the Human Use of the Human Equipment

Improving the Fit Between Humans and Their Jobs

Covert Persuasion: Advertising and Propaganda

PROLOGUE:

Exploring the Universe Within

A Psychological Experiment in the Seventh Century B.C.

A most unusual man, Psamtik I, King of Egypt. During his long reign, in the latter half of the seventh century B.C., he not only drove out the Assyrians, revived Egyptian art and architecture, and brought about general prosperity, but found time to conceive of and conduct history’s first recorded experiment in psychology.

The Egyptians had long believed that they were the most ancient race on earth, and Psamtik, driven by intellectual curiosity, wanted to prove that flattering belief. Like a good psychologist, he began with a hypothesis: If children had no opportunity to learn a language from older people around them, they would spontaneously speak the primal, inborn language of humankind—the natural language of its most ancient people—which, he expected to show, was Egyptian.

To test his hypothesis, Psamtik commandeered two infants of a lower-class mother and turned them over to a herdsman to bring up in a remote area. They were to be kept in a sequestered cottage, properly fed and cared for, but were never to hear anyone speak so much as a word. The Greek historian Herodotus, who tracked the story down and learned what he calls “the real facts” from priests of Hephaestus in Memphis, says that Psamtik’s goal “was to know, after the indistinct babblings of infancy were over, what word they would first articulate.”

The experiment, he tells us, worked. One day, when the children were two years old, they ran up to the herdsman as he opened the door of their cottage and cried out “Becos!” Since this meant nothing to him, he paid no attention, but when it happened repeatedly, he sent word to Psamtik, who at once ordered the children brought to him. When he too heard them say it, Psamtik made inquiries and learned that becos was the Phrygian word for bread. He concluded that, disappointingly, the Phrygians were an older race than the Egyptians.1

We today may smile condescendingly; we know from modern studies of children brought up under conditions of isolation that there is no innate language and that children who hear no speech never speak. Psamtik’s hypothesis rested on an invalid assumption, and he apparently mistook a babbled sound for an actual word. Yet we must admire him for trying to prove his hypothesis and for having had the highly original notion that thoughts arise in the mind through internal processes that can be investigated.

Messages from the Gods

For it had not occurred to anyone until then, nor would it for another several generations, that human beings could study, understand, and predict how their thoughts and feelings arose.

Many other complex natural phenomena had long engaged the interest of both primitive and civilized peoples, who had come more or less to understand and master them. For nearly 800,000 years human beings had known how to make and control fire;2 for 100,000 years they had been devising and using tools of many kinds; for eight thousand years some of them had understood how to plant and raise crops; and for over a thousand years, at least in Egypt, they had known some of the elements of human anatomy and possessed hundreds of remedies—some of which may even have worked—for a variety of diseases. But until a century after Psamtik’s time neither the Egyptians nor anyone else thought about or sought to understand—let alone influence—how their own minds functioned.

And no wonder. They took their thoughts and emotions to be the work of spirits and gods. We have direct and conclusive evidence of this in the form of the testimony of ancient peoples themselves. Mesopotamian cuneiform texts from about 2000 B.C., for instance, refer repeatedly to the “commands” of the gods—literally heard as utterances by the rulers of society—dictating where and how to plant crops, to whom to delegate authority, on whom to make war, and so on. A typical clay cone reads, in part:

Mesilin King of Kish at the command of his deity Kadi concerning the plantation of that field set up a stele [an inscribed stone column] in that place… Ningirsu, the hero of Enlil [another god], by his righteous command, upon Umma war made.3

A far more detailed portrait of how early people supposed their thoughts and feelings arose can be found in the Iliad, which records the beliefs of Homer in the ninth century B.C., and to some extent those of the eleventh-century Greeks and Trojans he wrote about. Professor Julian Jaynes of Princeton, who exhaustively analyzed the language of the Iliad that refers to mental and emotional functions, summed up his findings as follows:

There is in general no consciousness in the Iliad… and in general, therefore, no words for consciousness or mental acts. The words in the Iliad that in a later age come to mean mental things have different meanings, all of them more concrete. The word psyche, which later means soul or conscious mind, [signifies] in most instances life-substances, such as blood or breath: a dying warrior bleeds out his psyche onto the ground or breathes it out in his last gasp… Perhaps most important is the word noos which, spelled as nous in later Greek, comes to mean conscious mind. Its proper translation in the Iliad would be something like perception or recognition or field of vision. Zeus “holds Odysseus in his noos.” He keeps watch over him.4

The thoughts and feelings of the people in the Iliad are put directly into their minds by the gods. The opening lines of the epic make that plain. It begins when, after nine years of besieging Troy, the Greek army is being decimated by plague, and the thought occurs to the great Achilles that they should withdraw from those shores:

Achilles called the men to gather together, this having been put into his mind by the goddess of the white arms, Hera, who had pity on the Greeks when she saw them dying… and he said to them, “I believe that backwards we must make our way home if we are to escape death through fighting and the plague.”

Such explanations of both thought and emotion occur time and again, said Professor Jaynes.

When Agamemnon, king of men, robs Achilles of his mistress, it is a god that grasps Achilles by his yellow hair and warns him not to strike Agamemnon. It is a god… who leads the armies into battle, who speaks to each soldier at the turning points, who debates and teaches Hector what he must do.5

Other ancient peoples, even centuries later, similarly believed that their thoughts, visions, and dreams were messages from the gods. Herodotus tells us that Cyrus the Great, founder of the Persian Empire, crossed into the land of the hostile Massagetae in 529 B.C. and during his first night there dreamed that he saw Darius, the son of his follower Hystaspes, with wings on his shoulders, one shadowing Asia, the other Europe. When Cyrus awoke, he summoned Hystaspes and said, “Your son is discovered to be plotting against me and my crown. I will tell you how I know it so certainly. The gods watch over my safety, and warn me beforehand of every danger.” He recounted the dream and ordered Hystaspes to return to Persia and have the son ready to answer to Cyrus when he came back from defeating the Massagetae.6 (Cyrus, however, was killed by the Massagetae. Darius did later become king, but not by having plotted against him.)

The ancient Hebrews had comparable beliefs. Throughout the Old Testament, important thoughts are taken to be utterances of God, who appears in person in the earlier writings, or as the voice of God heard within oneself, in the later ones. Three instances:

After these things the word of the Lord came unto Abram in a vision, saying, Fear not, Abram: I am thy shield, and thy exceeding great reward. (Genesis, 15:1)

Now after the death of Moses the servant of the Lord it came to pass, that the Lord spake unto Joshua the son of Nun, Moses’ minister, saying, Moses my servant is dead; now therefore arise, go over this Jordan, thou, and all this people, unto the land which I do give to them, even to the children of Israel. (Joshua, 1:1–2)

Now the word of the Lord came unto Jonah the son of Amittai, saying, Arise, go to Nineveh, that great city, and cry against it; for their wickedness is come up before me. (Jonah, 1:1–2)

Disordered thoughts and madness were likewise interpreted as the work of God or of spirits sent by Him. Deuteronomy names insanity as one of the many curses that God will inflict on those who do not obey His commands:

The Lord shall smite thee with madness, and blindness, and astonishment of heart. (Deut., 28:28)

Saul’s psychotic fits, which David allayed by playing the harp, are attributed to an evil spirit sent by the Lord:

But the spirit of the Lord departed from Saul, and an evil spirit from the Lord troubled him… And it came to pass, when the evil spirit from God was upon Saul, that David took an harp, and played with his hand: so Saul was refreshed, and was well, and the evil spirit departed from him. (I Samuel, 16:14–23)

When David’s fame as a warrior exceeded Saul’s, though, the divinely caused madness raged out of all control:

And it came to pass on the morrow, that the evil spirit from God came upon Saul, and he prophesied in the midst of the house: and David played with his hand, as at other times: and there was a javelin in Saul’s hand. And Saul cast the javelin; for he said, I will smite David even to the wall with it… [but David] slipped away out of Saul’s presence, and he smote the javelin into the wall. (I Samuel, 18:10–11 and 19:10)

The Discovery of the Mind

But in the sixth century B.C. there appeared hints of a remarkable new development. In India, Buddha attributed human thoughts to our sensations and perceptions, which, he said, gradually and automatically combine into ideas. In China, Confucius stressed the power of thought and decision that lay within each person (“A man can command his principles; principles do not master the man,” “Learning, undigested by thought, is labor lost; thought unassisted by learning is perilous”).

The signs of change were even stronger in Greece, where poets and sages began to view their thoughts and emotions in wholly new terms.7 Sappho, for one, described the inner torment of jealousy in realistic terms rather than as an emotion inflicted on her by a god:

Peer of gods he seemeth to me, the blissful

Man who sits and gazes at thee before him,

Close beside thee sits, and in silence hears thee

Silverly speaking,

Laughing love’s low laughter. Oh, this, this only

Stirs the troubled heart in my breast to tremble!

For should I but see thee a little moment,

Straight is my voice hushed;

Yea, my tongue is broken, and through and through me

’Neath the flesh, impalpable fire runs tingling;

Nothing see mine eyes, and a voice of roaring

Waves in my ears sounds.

—“Ode to Atthis”

Solon, poet and lawgiver, used the word nous not in the Homeric sense but to mean something like rational mind. He declared that at about age forty “a man’s nous is trained in all things” and in the fifties he is “at his best in nous and tongue.” He or the philosopher Thales—sources differ—sounded a note totally different from that of Homeric times in one of Western civilization’s briefest and most famous pieces of advice, inscribed on the Temple of Apollo at Delphi: “Know thyself.”

Within a few decades there began a sudden and astonishing efflorescence of Greek thought, science, and art. George Sarton, the historian of science, once estimated that in the Hellenic era, human knowledge increased something like forty-fold in less than three centuries.8

One of the most notable aspects of this intellectual outburst was the abrupt appearance and burgeoning of a new area of knowledge, philosophy. In the Greek city-states of the fifth and fourth centuries B.C., a small number of reflective upper-class men, who had neither scientific equipment nor hard data but were driven by a passion to understand the world and themselves, managed by pure speculation and reasoning to conceive of, and offer answers to, many of the enduring questions of cosmogony, cosmology, physics, metaphysics, ethics, aesthetics, and psychology.

The philosophers themselves did not use the term “psychology” (which did not exist until A.D. 1520) or regard it as a distinct area of knowledge, and they were less interested in the subject than in more fundamental ones like the structure of matter and the nature of causality. Nonetheless, they identified and offered hypotheses about nearly all the significant problems of psychology that have concerned scholars and scientists ever since. Among them:

—Is there only one substance, or is “mind” something different from “matter”?

—Do we have souls? Do they exist after the body dies?

—How are mind and body connected? Is mind part of soul, and if so can it exist apart from the body?

—Is human nature the product of inborn tendencies or of experience and upbringing?

—How do we know what we know? Are our ideas built into our minds, or do we develop them from our perceptions and experiences?

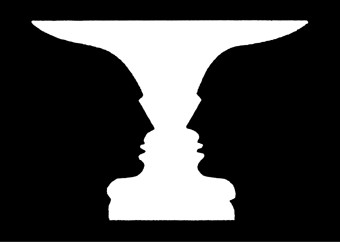

—How does perception work? Are our impressions of the world around us true representations of what is out there? How can we know whether they are or not?

—Which is the right road to true knowledge—pure reasoning or data gathered by observation?

—What are the principles of valid thinking?

—What are the causes of invalid thinking?

—Does the mind rule the emotions, or vice versa?

There is scarcely a major topic in today’s textbooks of introductory psychology that was not anticipated, at least in rudimentary form, by the Greek philosophers. What is even more impressive, their goal was the same as that of contemporary psychologists: to discover the true causes of human behavior—those unseen processes of the mind which take place in response to external events and other stimuli.

This quest launched the Greek philosophers on an intellectual voyage into the invisible world of the mind—the universe within, one might call it. From their day to ours, explorers of the mind have been pressing ever deeper into its terra incognita and uncharted wilderness. It has been and continues to be a voyage as challenging and enlightening as any expedition across unknown seas or lands, any space mission to far-off planets, any astronomical probe of the rim of the world and the border of time.

What kind of men (and, in recent decades, women) have felt compelled to find out what lies in the vast and invisible cosmos of the mind? All kinds, as we will see: solitary ascetics and convivial sybarites, feverish mystics and hardheaded realists, reactionaries and liberals, true believers and convinced atheists—the list of antinomies is endless. But they are alike in one way, these Magellans of the mind: All of them, in various ways, are interesting, impressive, even awesome human beings. Time and again I felt, after reading the biography and writings of one of these people, that I was fortunate to have come to know him, privileged to have lived with him vicariously, and greatly enriched by having shared his adventures.

The explorations of the interior world conducted by such people have surely been more important to human development than the explorations of the external one. Historians are wont to name technological advances as the great milestones of culture, among them the development of the plow, the discovery of smelting and metalworking, the invention of the clock, printing press, steam power, electric engine, lightbulb, semiconductor, and computer. But possibly even more transforming than any of these was the recognition by Greek philosophers and their intellectual descendants that human beings could examine, comprehend, and eventually even guide or control their own thought processes, emotions, and resulting behavior.

With that realization we became something new and different on earth: the only animal that, by examining its own cerebration and behavior, could alter them. This, surely, was a giant step in evolution. Although we are physically little different from the people of three thousand years ago, we are culturally a different species. We are the psychologizing animal.

This inward voyage of the past twenty-five hundred years, this search for the true causes of behavior, this most liberating of all human inquiries, is the subject of The Story of Psychology.

ONE

The Conjecturers

The Glory That Was Greece

“In all history,” the philosopher Bertrand Russell has said, “nothing is so surprising or so difficult to account for as the sudden rise of civilization in Greece.”1

Until the sixth century B.C., the Greeks borrowed much of their culture from Egypt, Mesopotamia, and neighboring countries, but from the sixth to the fourth centuries they generated a stupendous body of new and distinctive cultural materials.* Among other things, they created sophisticated new forms of literature, art, and architecture, wrote the first real histories (as opposed to mere annals), invented mathematics and science, developed schools and gymnasiums, and originated democratic government. Much of subsequent Western culture has been the lineal descendant of theirs; in particular, much of philosophy and science during the past twenty-five hundred years has been the outgrowth of the Greek philosophers’ attempts to understand the nature of the world. Above all, the story of psychology is the narrative of a continuing effort to answer the questions they first asked about the human mind.

It is mystifying that the Greek philosophers so suddenly began to theorize about human mental processes in psychological, or at least quasi-psychological, terms. For while the 150 or so Greek city-states around the Mediterranean had noble temples, elegant statues and fountains, and bustling marketplaces, living conditions in them were in many respects primitive and not, one would suppose, conducive to subtle psychological inquiry.

Few people could read or write; those who could had to scratch laboriously on wax tablets or, for permanent records, on strips of papyrus or parchment twenty to thirty feet long wrapped around a stick. Books—actually, hand-copied scrolls—were costly, rare, and awkward to use.

The Greeks, possessing neither clocks nor watches, had but a rudimentary sense of time. Sundials offered only approximations, were not transportable, and were of no help in cloudy weather; the water clocks used to limit oratory in court were merely bowls filled with water that emptied through a hole in about six minutes.

Lighting, such as it was, was provided by flickering oil lamps. A few of the well-to-do had bathrooms with running water, but most people, lacking water to wash with, cleansed themselves by rubbing their bodies with oil and then scraping it off with a crescent-shaped stick. (Fortunately, some three hundred days a year were sunny, and Athenians lived out of doors most of the time.) Few city streets were paved; most were dirt roads, dusty in dry weather and muddy in wet. Transport consisted of pack mules or springless, bone-bruising horse-drawn wagons. News was sometimes conveyed by fire beacons or carrier pigeons, but most often by human runners.

Illustrious Athens, the center of Greek culture, could not feed itself; the surrounding plains had poor soil, the hills and mountains were stony and infertile. The Athenians obtained much of their food through maritime commerce and conquest. (Athens established a number of colonies, and at times dominated the Aegean, receiving tribute from other city-states.) But while their ships had sails, the Athenians knew only how to rig them to be driven by a following wind; to proceed cross-wind or into the wind or in a calm, they forced slaves to strain hour after hour at banks of oars, driving the ships at most eight miles per hour. The armies thus borne to far shores to advance Athenian interests fought much like their primitive ancestors, with spears, swords, and bows and arrows.

Slaves also provided most of the power in Greek workshops and silver mines; human muscles, feeble as they are compared to modern machinery, were, aside from beasts of burden, the only source of kinetic energy. Slavery was, in fact, the economic foundation of the Greek city-states; men, women, and children captured abroad by Greek armies made up much of the population of many cities. Even in democratic Athens and the neighboring associated towns of Attica, at least 115,000 of the 315,000 inhabitants were slaves. Of the 200,000 free Athenians only the forty-three thousand men who had been born to two Athenian parents possessed all civil rights, including the right to vote.

All in all, it was not a way of life in which one would expect reflective and searching philosophy, or its subdiscipline, psychology, to flourish.

What, then, accounts for the Greeks’ astonishing intellectual accomplishments, and for those of the Athenians in particular? Some have half-seriously suggested the climate; Cicero said that Athens’ clear air contributed to the keenness of the Attic mind. Certain present-day analysts have hypothesized that the Athenians’ living outdoors much of the time, in constant conversation with one another, induced questioning and thinking. Others have argued that commerce and conquest, bringing Athenians and other Greeks into contact with many other cultures, made them curious about the origin of human differences. Still others have said that the mix of cultural influences in the Greek city-states gave Greek culture a kind of hybrid vigor. Finally, some have pragmatically suggested that when civilization had developed to the point where day-to-day survival did not take up every hour of the day, human beings for the first time had leisure in which to theorize about their motives and thoughts, and those of other people.

None of these explanations is really satisfactory, although perhaps all of them taken together, along with still others, are. Athens reached the zenith of its greatness, its Golden Age (480 to 399), after it and its allies defeated the Persians. Victory, wealth, and the need to rebuild the temples on the Acropolis that the Persian leader Xerxes had burned, in addition to the favorable influences mentioned above, may have produced a kind of cultural critical mass and an explosion of creativity.

The Forerunners

Along with their many other speculations, a number of the Greek philosophers of the sixth and early fifth centuries began proposing naturalistic explanations of human mental processes; these hypotheses and their derivatives have been at the core of Western psychology ever since.

What kinds of persons were they? What caused or at least enabled them to think about human cognition in this radically new fashion? We know their names—Thales, Alcmaeon, Empedocles, Anaxagoras, Hippocrates, Democritus, and others—but about many of them we know little else; about the others what we know consists largely of hagiography and legend.

We read, for instance, that Thales of Miletus (624–546), first of the philosophers, was an absentminded dreamer who, studying the nighttime heavens, could be so absorbed in glorious thoughts as to tumble ingloriously into a ditch. We read, too, that he paid no heed to money until, tired of being mocked for his poverty, he used his astrological expertise one winter to foretell a bumper crop of olives, cheaply leased all the oil presses in the area, and later, at harvest time, charged top prices for their use.

Gossipy chroniclers tell us that Empedocles (500?–430), of Acragas in southern Sicily, had such vast scientific knowledge that he could control the winds and once brought back to life a woman who had been dead for thirty days. Believing himself a god, in his old age he leaped into Etna in order to die without leaving a human trace; as some later poetaster jested, “Great Empedocles, that ardent soul / Leaped into Etna, and was roasted whole.” But Etna vomited his brazen slippers back onto the rim of the crater and thereby proclaimed him mortal.

Such details hardly help us fathom the psychophilosophers, if we may so call them. Nor did any of them set down an account—at least, none exists—of how or why they became interested in the workings of the mind. We can only suppose that in the dawn of philosophy, when thoughtful men began to ask all sorts of searching questions about the nature of the world and of humankind, it was natural that they would also ask how their own thoughts about such things arose and where their ideas came from.

One or two did actual research that touched on the physical equipment involved in psychological processes. Alcmaeon (fl. 520), a physician of Croton in southern Italy, performed dissections on animals (dissecting the human body was taboo) and discovered the optic nerve, connecting the eye to the brain. Most, however, were neither hands-on investigators nor experimentalists but men of leisure, who, starting with self-evident truths and their own observations of everyday phenomena, sought to deduce the nature of the world and of the mind.

The psychophilosophers most often carried on their reasoning while strolling or sitting with their students in the marketplaces of their cities or courtyards of their academies, endlessly debating the questions that intrigued them. And probably, like Thales gazing at the stars, they also spent periods alone in deep meditation. But little remains of the fruits of their labors; nearly all copies of their writings were lost or destroyed.

Most of what we know of their thinking comes from brief citations in the works of later writers. Yet even these bits and pieces indicate that they asked a number of the major questions—to which they offered some sensible and some outlandish answers—that have concerned psychologists ever since.

We can surmise from the few obscure and tantalizing allusions by later writers to the philosophers’ ideas that among the questions they asked themselves concerning nous (which they variously identified as soul, mind, or both) were what its nature is (what it is made of), and how so seemingly intangible an entity could be connected to and influence the body.

Thales pondered these matters, although a single sentence in Aristotle’s De Anima (On the Soul) is the only surviving record of those thoughts: “Judging from the anecdotes related of him [Thales], he conceived soul as a cause of motion, if it be true that he affirmed the lode-stone to possess soul because it moves iron.”2 Little as this is to go on, it indicates that Thales considered soul or mind the source of human behavior and its mode of action a kind of physical force inherent in it, a view radically unlike the earlier Greek belief that human behavior was directed by supernatural forces.

Within a century, some philosophers and the physician Alcmaeon suggested that the brain, rather than the heart or other organs, as earlier believed, was where nous existed and where thinking goes on. Some thought it was a kind of spirit, others that it was the very stuff of the brain itself, but in neither case did they say anything about how memory, reasoning, or other thought processes take place. They were preoccupied by the more elementary question of whence—since not from the gods—the mind obtains the raw materials of thought.

Alcmaeon

Their general answer was sense experience. Alcmaeon, for one, said that the sense organs send perceptions to the brain, where, by means of thinking, we interpret them and derive ideas from them. What intrigued him and others was how the perceptions get from the sense organs to the brain. Unaware of nerve impulses, even though he had discovered the optic nerve, and believing, on abstract metaphysical grounds, that air was the vital component of mind, he decided that perceptions must travel along air channels from the sense organs to the brain: No matter that he never saw any and that no such channels exist; reason told him it must be so. (Later Greek anatomists would refer to the air, pneuma, they thought was in the nerves and brain as “animal spirits,” and in one form or another this belief would dominate thinking about the nervous system until the eighteenth century.) Although Alcmaeon’s theory was wholly incorrect, his emphasis on perception as the source of knowledge was the beginning of epistemology—the study of how we acquire knowledge—and laid the ground for a debate about that topic that has gone on ever since.

Protagoras

Alcmaeon’s ideas were borne around the far-flung Greek cities by travelers; soon, philosophers in many places were devising their own explanations of how perception takes place, and a number of them asserted that it was the basis of all knowledge. But some saw the troubling implications of this view. Protagoras (485–411), best known of the Sophists (a term that then meant not fallacious reasoners but “teachers of wisdom”), unsettled his contemporaries and pupils by pointing out that, since perception was the only source of knowledge, there could be no absolute truth. His famous apothegm, “Man is the measure of all things,” meant, he explained, that any given thing is to me what it appears to me to be, and, if it appears different to you, is what it seems to you to be. Each perception is true—for each perceiver. Philosophers were willing to debate the point, but politicians considered it subversive. When Protagoras, visiting Athens, tactlessly applied his theory to religion, saying there was no way for him to know whether the gods exist or not, the outraged Assembly banished him and burned his writings. He fled and drowned at sea en route to Sicily.

Democritus

Others carried on that line of inquiry, devising explanations of how perception takes place and maintaining that, since knowledge is based on perception, all truths are relative and subjective. The most sophisticated of such musings were those of Democritus (460–362) of Abdera, Thrace, the most learned man of his time. Vastly amused by the follies of humankind, he was known as the “laughing philosopher.” His main claim to fame, actually, derives not from his psychological reflections but from his extraordinary guess that all matter is composed of invisible particles (atoms) of different shapes linked together in different combinations, a conclusion he came to, without any experimental evidence, by sheer reasoning. Unlike Alcmaeon’s air channels, this theory would eventually be proven absolutely correct.

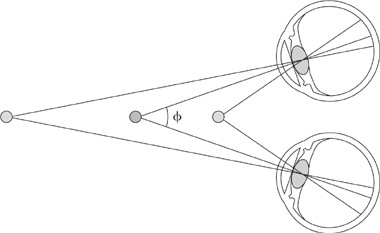

From his theory of atoms Democritus derived an explanation of perception. Every object gives off or imprints on the atoms of the air images of itself, which travel through the air, reach the eye of the beholder, and there interact with its atoms. The product of that interaction passes to the mind and, in turn, interacts with its atoms.3 He thus anticipated, albeit in largely incorrect detail, today’s theory of vision, which holds that photons of light, emanating from an object, travel to the eye, enter it, and stimulate the endings of the optic nerves, which send messages to the brain, where they act on the brain’s neurons.

All knowledge, according to Democritus, results from the interaction of the transmitted images with the mind. Like Protagoras, he concluded that this means we have no way of knowing whether our perceptions correctly represent what is outside or whether anyone else’s perception is identical with our own. As he put it, “We know nothing for certain, but only the changes produced in our body by the forces that impinge upon it.”4 That issue would vex philosophers and psychologists from then until now, driving many of them to devise elaborate theories in the effort to escape the solipsistic trap and to affirm that there is some way to know what is really true about the world.

Hippocrates

When the early philosopher-psychologists concluded that thought occurs in the mind, it was only natural that they would also wonder why our thoughts are sometimes clear and sometimes muddled, and why most of us are mentally healthy but others are mentally ill.

Unlike their ancestors, who had believed mental dysfunction to be the work of gods or demons, they sought naturalistic answers. The most widely accepted of these was that of Hippocrates (460–377), the Father of Medicine. The son of a physician, he was born on the Greek island of Cos off the coast of what is today Turkey. He studied and practiced there, treating many of the invalids and tourists who came for the island’s hot springs and achieving such renown that far-off rulers sought him out. In 430 Athens sent for him when a plague was ravaging the city; observing that blacksmiths seemed immune to it, he ordered fires built in all public squares and, legend says, brought the disease under control. Only a handful of the seventy-odd tracts bearing his name were actually written by him, but the rest, the work of his followers, embody his ideas, which are a mixture of the sound and the absurd. For instance, he stressed diet and exercise rather than drugs, but for many diseases recommended fasting on the grounds that the more we nourish unhealthy bodies, the more we injure them.

His greatest contribution was to divorce medicine from religion and superstition. He maintained that all diseases, rather than being the work of the gods, have natural causes. In this spirit, he taught that most of the physical and mental ills of his patients had a biochemical basis (though the term “biochemical” would have meant nothing to him).

He based this explanation of health and illness on the prevailing theory of matter. Philosophers had held that the primordial stuff of the world was water, fire, air, and so on, but Empedocles concocted a more intellectually satisfying theory, which dominated Greek and later thinking. All things, he said, are made up of four “elements”—earth, air, fire, and water—held together in varying proportions by a force he called “love” or kept asunder by its opposite, “strife.”5 Though the specifics were wholly wrong, many centuries later scientists would find that his core concept—that all matter is composed of elemental substances alone or in combination—was quite right.

Hippocrates borrowed Empedocles’ four-element theory and applied it to the body. Good health, he said, is the result of a proper balance of the four bodily fluids, or “humors,” which correspond to the four elements—blood corresponding to air, phlegm to water, black bile to earth, and yellow bile to fire. For the next two thousand years physicians would attribute many illnesses to humoral imbalances that they would try to cure by draining off an excess of a humor (as in bloodletting) or by administering medicines supplying one that was lacking. The harm this caused over the centuries, particularly through bloodletting, is incalculable.

Hippocrates used the same theory to explain mental health and illness. If the four humors were in proper balance, consciousness and thought would function well, but if any humor was either in excess or short supply, mental illness of one kind or another would result. As he wrote:

Men ought to know that from the brain, and the brain only, arise our pleasures, joys, laughter, and jests, as well as our sorrows, pains, grief, and tears… These things that we suffer all come from the brain when it is not healthy but becomes abnormally hot, cold, moist, or dry… Madness comes from its moistness. When the brain is abnormally moist, of necessity it moves, and when it moves, neither sight nor hearing is still, but we see or hear now one thing and now another, and the tongue speaks in accordance with the things seen and heard on any occasion. But when the brain is still, a man is intelligent.

The corruption of the brain is caused not only by phlegm but by bile. You may distinguish them thus: those who are mad through phlegm are quiet, and neither shout nor make a disturbance; those maddened through bile are noisy, evil-doing, and restless…The patient suffers from causeless distress and anguish when the brain is chilled and contracted contrary to custom; these effects are caused by phlegm, and it is these very effects that cause loss of memory.6

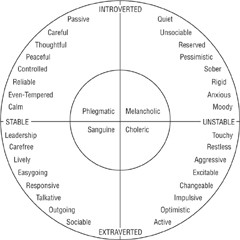

Later, followers of Hippocrates extended his humoral theory to account for differences in temperament. Galen, in the second century A.D., said that a phlegmatic person suffers from an excess of phlegm, a choleric one from an excess of yellow bile, a melancholic one from an excess of black bile, and a sanguine one from an excess of blood. That doctrine persisted in Western psychology until the eighteenth century and remains embedded in our daily speech—we call people “phlegmatic,” “bilious,” and so on—if not our psychology.

Although the humoral theory of personality and of mental illness now seems as benighted as the belief that the earth is the center of the universe, its premise—that there is a biological basis to, or at least a biological component in, personality traits and mental health or illness—has lately been confirmed beyond all question. A vast amount of recent research by cognitive neuroscientists has identified many of the substances produced by brain cells and shown how these enable thought processes to take place, and myriad other studies have shown that foreign substances such as drugs or toxins distort or interfere with those processes. Hippocrates was close to the mark after all.

One can only marvel at the psychological musings of Hippocrates and the pre-Socratic psychophilosophers. Quite without laboratories, methodology, or empirical evidence—indeed, without anything but open minds and intense curiosity—they recognized and enunciated a number of the salient issues and devised certain of the theories that have remained central in psychology from their time to our own.

The “Midwife of Thought”: Socrates

We now come upon a man unlike the shadowy figures we just met, a real and vivid person whose appearance, personal habits, and thoughts are thoroughly documented: Socrates (469–399), the leading philosopher of his time and the proponent of a theory of knowledge that directly contradicted perception-based theory. We know a good deal about him as a person because two of his pupils—Plato and the historian-soldier Xenophon—set down detailed recollections of him. Unfortunately, Socrates himself wrote nothing, and his ideas come to us chiefly through Plato’s dialogues, where much of what he says is probably Plato’s own thinking put in Socrates’ mouth for dramatic reasons. Nonetheless, Socrates’ contributions to psychology are clear.

He lived during the first half of Athens’ era of greatness (the span from its defeat of the Persians at Salamis in 480 to the death of Alexander in 323), when philosophy and the arts flourished as never before. The son of a sculptor and a midwife, he was fascinated by what he learned of philosophy in his youth from Protagoras, Zeno of Elea, and others. He early decided to make it his life work, but, unlike the Sophists, he took no fees for his teaching; he would talk to anyone who wanted to discuss ideas with him. He occasionally worked as a stonecutter and carver of statues but preferred the luxury of thought and discourse to the comforts money could buy. Content to be poor, he wore one simple shabby robe all year and went barefoot; once, looking about in the marketplace, he exclaimed with pleasure, “How many things there are that I do not want!”

Not that he was an ascetic; he liked good company, sometimes went to banquets given by the wealthy, and freely confessed to feeling a “flame” within him when he peered inside a youth’s garment. Uncommonly homely, with a considerable paunch, a bald head, broad snub nose, and thick lips, he looked like a satyr, his friend Alcibiades told him. Unlike a satyr, however, he was a model of moderation and self-control; he seldom drank wine, remained sober when he did, and was chaste even when in love. The beautiful and amoral Alcibiades, slipping into Socrates’ bed one night to seduce him, was astonished to be treated as if by a father. “I thought I had been disgraced,” he later said, according to Plato’s Symposium, “and yet I admired the way this man was made, and his temperance and courage.”

Socrates kept himself in good physical condition; he fought bravely during the Peloponnesian War, in which his ability to withstand cold and hunger amazed his fellow soldiers. After long years of instructing his pupils, he was tried and condemned for his teachings, which Athenian democrats said corrupted youth. The real problem was that he was contemptuous of their democracy and numbered many aristocrats, their political foes, among his followers. He accepted the verdict with equanimity and refused the opportunity to escape, preferring to die with dignity.

Although the Delphic Oracle once declared Socrates the wisest man in the world, he disputed that pronouncement; it was his style to claim that he knew nothing and was wiser than others only in knowing that he knew nothing. He claimed to be a “midwife of thought,” one who merely helped others give birth to their ideas. This, of course, was a pose; in reality he had a number of firmly held opinions about certain philosophic matters. But unlike many of his contemporaries, he was uninterested in cosmology, physics, or perception; as he says in Plato’s Apology, “I have nothing to do with physical speculations.” His concern, rather, was with ethics. His goal was to help others lead the virtuous life, which, he said, comes about through knowledge, since no man sins wittingly.

To help his students attain knowledge, Socrates relied not on lectures but on a wholly different educational method. He asked his students questions that seemingly led them step by step to discover the truth for themselves. This technique, known as dialectic, was first used by Zeno, from whom he may have learned it, but it was Socrates who developed and popularized it. In doing so, he promulgated a theory of knowledge that would be the major alternative to perception-based theories from then on.

According to that theory, knowledge is recollection; we learn not from experience but from reasoning, which leads us to discover knowledge that exists within us (“to educate” comes from the Latin meaning “to lead out”). Sometimes Socrates asks for definitions and then leads his partner into contradictions until the definition is reshaped. Sometimes he asks for or offers examples, from which his partner finally makes a generalization. Sometimes he leads him, step by step, to a conclusion that contradicts one he had previously stated, or to a conclusion he had not known was implicit in his beliefs.

Socrates cites geometry as the ideal model of this process. One starts with self-evident axioms and, by hypothesis and deduction, discovers other truths in what one already knew. In the Meno dialogue he questions a slave boy about geometrical problems, and the boy’s answers supposedly show that he must all along have known the conclusions to which Socrates leads him; he was unaware that he knew them until he recalled them through dialectical reasoning. Similarly, in many another dialogue Socrates, without presenting an argument or offering answers, asks a friend or pupil questions that lead him, inference by inference, to the discovery of some truth about ethics, politics, or epistemology—in each case, knowledge he supposedly had but was unaware of.

We who live in an era of empirical science know that Socratic dialectic, though it can expose fallacies or contradictions in belief systems or lead to new conclusions in such formal systems as mathematics, cannot discover new facts. Until Anton van Leeuwenhoek (1632–1723 A.D.) first saw red corpuscles and bacteria under his lens, no Socratic teacher could have led his pupils or himself to “remember” that such things existed; until astronomers saw evidence of the “red shift” in distant galaxies, no philosopher could, through logical searching, have discovered that he already knew the universe to be expanding at a measurable rate.

Yet Socrates’ teachings greatly affected the development of psychology. His view that knowledge exists within us and needs only to be recovered through correct reasoning became part of the psychological theories of persons as diverse as Plato, Saint Thomas Aquinas, Kant, and even, in a sense, those present-day psychologists who maintain that personality and behavior are largely determined by genetics, linguists who say that our minds come equipped with language-comprehending structures, and parapsychologists who believe that each of us has lived before and can be “regressed” to recall our previous lives.

The notion that we have lived before is related to Socrates’ other major impact on psychology. He held that the existence of innate knowledge, revealed by the dialectic method of instruction, proves that we possess an immortal soul, an entity that can exist apart from the brain and body. With this, the vague mythical notions of soul that had long existed in Greek and related cultures assumed a new significance and specificity. Soul is mind but is separable from the body; mind does not cease to be at death.

On this ground would be built Platonic and, later, Christian dualism: the division of the world into mind and matter, reality and appearance, ideas and objects, reason and sense perception, the first half of each pair regarded not only as more real than but as morally superior to the second. Although these distinctions may seem chiefly philosophic and religious, they would pervade and affect humankind’s search for self-understanding throughout the centuries.

The Idealist: Plato

He was named Aristocles, but the world knows him as Plato—in Greek, platon, or “broad”—the nickname he was given as a young wrestler because of the width of his shoulders. He was born in Athens in 427 to well-to-do aristocratic parents, and in his youth was an accomplished student, a handsome charmer of men and women, and a would-be poet. At twenty, about to submit a poetic drama in a competition, he listened to Socrates speaking in a public place, after which he burned his poetry and became the philosopher’s pupil. Perhaps it was the gamelike quality of Socrates’ dialectic that captivated the former wrestler; perhaps the subtlety of Socrates’ ideas entranced the serious student; perhaps the quiet and serenity of Socrates’ philosophy appealed to the son of ancient lineage in that era of political upheaval and betrayal, war and defeat, revolution and terror.

Plato studied with Socrates for eight years. He was a dedicated student and something of a sobersides; one ancient author says that he was never seen to laugh out loud. A few scraps of love poetry attributed to him exist, some of them addressed to men, some to women, all of doubtful authenticity, and there is almost no gossip about his love life and no evidence that he ever married. Still, from the wealth of detail in his dialogues, it is evident that he was an active participant in Athenian social life and a keen observer of behavior and the human condition.

In 404, an oligarchic political faction that included some of his own aristocratic relatives urged him to enter public life under its auspices. The young Plato wisely held back, waiting to see what the group’s policy would be, and was repelled by the violence and terror it used as its tools of government. But when democratic forces regained power, he was even more repelled by their trial and conviction of his revered teacher, whom he calls, in the Apology, “the wisest, the justest, and best of all men I have ever known.” After Socrates’ death, in 399, Plato fled Athens, wandered around the Mediterranean meeting and studying with other philosophers, returned to Athens to fight for his city, then again went wandering and studying.

At forty, conversing with Dionysius, the despot of Syracuse, he daringly condemned dictatorship. Dionysius, nettled, said, “Your words are those of an old dotard,” to which Plato replied, “Your language is that of a tyrant.” Dionysius ordered him seized and sold into slavery, which might have been the end of his philosophizing, but Anniceris, a wealthy admirer, ransomed him, and he returned to Athens. Friends raised three thousand drachmas to reimburse Anniceris, who refused the money. They thereupon used it to buy Plato a suburban estate, where in 387 he founded his Academy. This school of higher learning would be the intellectual center of Greece for nine centuries until, in A.D. 529, the Emperor Justinian, a zealous Christian, shut it down in the best interests of the true faith.

We have almost no details about Plato’s activities at the Academy, which he headed for forty-one years, until his death in 347 at eighty or eighty-one. It is believed, however, that he taught his students by a combination of Socratic dialectic and lectures, usually delivered as he and his auditors wandered endlessly to and fro in the garden. (A minor playwright, mocking this custom, has a character say, “I am at my wits’ end walking up and down like Plato, and yet have discovered no wise plan but only tired my legs.”7)

Plato’s thirty-five or so dialogues—the actual number is uncertain, because at least half a dozen are probably spurious—were not meant for his students’ use; they were a popularized, semidramatized version of his ideas, addressed to a larger audience. They deal with metaphysical, moral, and political matters and, here and there, certain aspects of psychology. His influence on philosophy was immense and on psychology, although it was not his main concern, far greater than that of anyone who preceded him and of anyone except Aristotle for the next two thousand years.

Despite the veneration in which Plato is generally held, from a scientific standpoint his effect on the development of psychology was more harmful than helpful. Its most negative aspect was his antipathy to the theory that perception is the source of knowledge; believing that data derived from the senses are shifting and unreliable, he held that true knowledge consists solely of concepts and abstractions arrived at through reasoning. In the Theaetetus, he mocks the perception-based theory of knowledge: If each man is the measure of all things, why are not pigs and baboons equally valid measures, since they too perceive? If each man’s perception of the world is truth, then any man is as wise as the gods, yet no wiser than a fool. And so on.

More seriously, Plato has Socrates point out that even if we agree that one man’s judgment is as true as another’s, the wise man’s judgment may have better consequences than the ignorant man’s. The doctor’s forecast of the course of the patient’s illness, for instance, is more likely to be correct than the patient’s; thus, the wise man is, after all, a better measure of things than the fool.

But how does one become wise? Through touch we perceive hard and soft, but it is not the sense organs that recognize them as opposites, he says; it is the mind that makes that judgment. Through sight we may judge two objects to be about equal in size, but we never see or experience absolute equality; such abstract qualities can be apprehended only by other means. We gain true knowledge—that is, the knowledge of concepts like absolute equality, similarity and difference, existence and nonexistence, honor and dishonor, goodness and badness—through reflection and reason, not through sense impressions.

Here Plato was on the trail of an important psychological function, the process by which the mind derives general principles, categories, and abstractions from particular observations. But his bias against sense data led him to offer a wholly unprovable metaphysical explanation of that process. Like his mentor, he held that conceptual knowledge comes to us by recollection; we inherently have such knowledge and discover it through rational thinking.8

But going further than Socrates, he argued that these concepts are more “real” than the objects of our perceptions. The “idea” of a chair—the abstract concept of chairness—is more enduring and real than this or that physical chair. The latter will decay and cease to be; the former will not. Any beautiful individual will eventually grow old and wrinkled, die, and cease to exist, but the concept of beauty is eternal.9 The idea of a right triangle is perfect and timeless, while any triangle drawn on wax or parchment is imperfect and will someday cease to be; indeed, over the door of the Academy was the inscription “Let no one without geometry enter here.”

This is the heart of Plato’s Theory of Ideas (or Forms), the metaphysical doctrine that reality consists of ideas or forms that exist eternally in the soul pervading the universe—God—while material objects are transient and illusory.10 Plato is thus an Idealist, not in the sense of one with high ideals but of one who advocates the superiority of ideas to material objects. Our souls partake of those eternal ideas; we bring them with us when we are born. When we see objects in the material world, we understand what they are and the relationships between them—larger or smaller, and so on—by remembering our ideas and using them as a guide to experience.

Or rather we do if we have been liberated from ignorance by philosophy; if not, we are deluded by our senses and live in error like the prisoners in Plato’s famous metaphorical cave. Imagine a cave, he says in the Republic, in which prisoners are so bound that they face an inner wall and see only shadows, cast on it by a fire outside, of themselves and of men passing behind them carrying all sorts of vessels, statues, and figures of animals. The prisoners, knowing nothing of what is behind them, take the shadows to be reality. At last one man escapes, sees the actual objects, and understands that he has been deceived. He is like a philosopher who recognizes that material objects are only shadows of reality and that reality is composed of ideal forms.11 It is his duty to go down into the cave and lead the prisoners up into the light of reality.

Plato may have been led to construct his otherworldly and metaphysical explanation of true knowledge by Socrates’ and his own reasoning. But perhaps the military and political chaos of his era made him seek something eternal, unshakable, and absolute in which to believe. Certainly his prescription for an ideal state, spelled out in the Republic, aims to achieve stability and permanence through a rigid class system and the totalitarian rule of a small elite of philosopher-kings.

In any case, in Plato’s epistemology that which is physical, particular, and mortal is considered illusion and error, while only what is conceptual, abstract, and eternal is real and true. His Theory of Ideas, greatly extending the dualism of Socrates, portrayed the senses as deceptive, the spiritual as the only path to truth; appearances and material things as illusory and transient, ideas as real and eternal; the body as corruptible and corrupting, the soul as incorruptible and pure; desires and hungers as the source of trouble and sin, the ascetic life of philosophy as the way to goodness. These dichotomies sound remarkably like anticipations of the fulminations of the early Fathers of the Church but are Plato’s own:

The body fills us full of loves and lusts and fears and fancies of all kinds…We are slaves to [the body’s] service. If we would have true knowledge of anything we must be quit of the body—the soul in herself must behold things in themselves; then we shall attain the wisdom we desire, be pure and have converse with the pure… And what is purification but the separation of the soul from the body?12

Soul, for Plato, is thus not only an incorporeal and immortal entity, as many Greeks had long believed: it is also mind. But he never explained how thinking can take place in an incorporeal essence. Since thinking requires effort and thus uses energy, whence would the energy come to enable the soul to think? Plato says that motion is the essence of the soul and that psychological activities are related to its inner motions, but he is silent about the source of the energy for such motion.

Yet he was a sensible man with wide experience of the world, and some of his psychological conjectures about the soul are down-to-earth and sound almost contemporary. In some of the middle and later dialogues—notably the Republic, the Phaedrus, and the Timaeus—he says that when the soul inhabits a body, it operates on three levels: thought or reason, spirit or will, and appetite or desire. Though he castigated the lusts of the body in the Phaedo, now he says that it is as bad for reason wholly to suppress appetite or spirit as for either of those to overpower reason; the Good is achieved when all three aspects of the soul function in harmony. Here too he resorts to metaphor to make his meaning clear: He likens the soul, in the Phaedrus, to a team of two steeds, one lively but obedient (spirit), the other violent and unruly (appetite), the two yoked together and driven by a charioteer (reason) who, with considerable effort, makes them cooperate and pull together. Plato came to this conclusion without conducting clinical studies or psychoanalyzing anyone, yet to a surprising extent it anticipates Freud’s analysis of character as composed of superego, ego, and id.

Plato also said, without any empirical evidence to go on, that the reason is located in the brain, the spirit in the chest, and the appetites in the abdomen; that they are linked by the marrow of the spine and brain; and that emotions are carried around the body by the blood vessels. These guesses are in part ludicrous, in part prescient of later discoveries. Considering that he was no anatomist, one can only wonder how he arrived at these judgments.

In the Republic Plato describes in remarkably modern terms what happens when appetite is ungoverned:

When the reasoning and taming and ruling power of the personality is asleep, the wild beast within us, gorged with meat and drink, starts up and having shaken off sleep goes forth to satisfy his desires; and there is no conceivable folly or crime—not excepting incest or parricide, or the eating of forbidden food—which at such a time, when he has parted company with all shame and sense, a man may not be ready to commit.13

And he portrays in almost contemporary terms the condition we call ambivalence, which for him is a conflict between spirit and appetite that reason fails to control. In the Republic Socrates offers this example:

I was once told a story, which I can quite believe, to the effect that Leontius, the son of Aglaion, as he was walking up from the Piraeus and approaching the northern wall from the outside, observed some dead bodies on the ground and the executioner standing by them. He immediately felt a desire to look at them, but at the same time loathing the thought he tried to divert himself from it. For some time he struggled with himself, and covered his eyes, till at length, over-mastered by the desire, he opened his eyes wide with his fingers, and running up to the bodies, exclaimed, “Look, ye wretches, take your fill of the fair sight!”14

Yet he also says—and it is the most important message of the charioteer-and-team metaphor—that appetite should not be eliminated but, rather, controlled. Attempting total repression of our desires would be like holding the steeds in check rather than driving them on toward reason’s goal.

Two other items of Plato’s psychology are worth our noting. One is his concept of Eros, the drive to be united with the loved one. It usually has a sexual or romantic connotation, but in Plato’s larger sense it refers to a desire to be united with the Idea or eternal Form that the other person exemplifies. Despite the metaphysical trapping of the concept, it contributed to psychology the idea that our most basic drive is for unity with an undying principle. As Robert I. Watson, a historian of psychology, puts it: “Eros is popularly translated as ‘love,’ but may often be more meaningfully called ‘life force.’ This is something akin to the biological will to live, the life energy.”15

Finally, Plato casually offered a thought about memory that would be used much later to counter his own theory of knowledge. Although he viewed recollection through reasoning as the most important kind of memory, he did admit that we learn and retain much from everyday experience. To explain why some of us remember more of that experience, or remember it more correctly, than others do, and why we often forget much of what we have learned, he resorted in the Theaetetus dialogue to a simile likening memory of experiences to writing on wax tablets; just as these surfaces may vary in size, hardness, moistness, and purity, so the minds of different persons vary in capacity, ability to learn, and retentiveness. Plato pursued the thought no further, but much later it would epitomize a theory of knowledge diametrically opposed to his. The seventeenth-century philosopher John Locke and the twentieth-century behaviorist John Watson would base their psychologies on the assumption that everything we know is what experience has written on the blank slate of the newborn mind.

The Realist: Aristotle

Plato’s most distinguished pupil, Aristotle, spent twenty years at the Academy, but after leaving it he contradicted so effectively much of what Plato had taught that he had as great an influence on philosophy as his master. More than that, through philosophy he left his mark on areas of knowledge as diverse as logic and astronomy, physics and ethics, religion and aesthetics, biology and rhetoric, politics and psychology. “He, perhaps more than any other thinker,” asserts one scholar, Anselm H. Amadio, “has characterized the orientation and content of all that is termed Western civilization.”16 And though psychology was far from Aristotle’s main concern, he gave “history’s first fully integrated and systematic account” of it, says the psychologist-scholar Daniel N. Robinson, adding, “Directly and indirectly, it has been among the most influential as well. Within the surviving works can be found theories of learning and memory, perception, motivation and emotion, socialization, personality.”17

One might expect such an intellectual giant to have been a strange person, but almost no peculiarities have been recorded of him. Busts show a handsome bearded man with refined and sensitive features; a malicious contemporary said he had small eyes and spindleshanks, but Aristotle offset these drawbacks with elegant dress and impeccable barbering. Nothing is known of his private life during his years at the Academy, but at thirty-seven he married for love. His wife died early, and in his will he asked that at his own death her bones be laid next to his. He remarried, lived with his second wife the rest of his life, and left her well provided for, “in recognition of the steady affection she has shown me.” He was usually kindly and warm, but when sorely tried could be tart. When a long-winded fellow asked him, “Have I bored you to death with my chatter?” he replied, “No, indeed—I wasn’t paying attention to you.”

Although affluent by birth, Aristotle was an extraordinarily hard worker all his life, sparing himself nothing in his quest for knowledge. When Plato read his Phaedo dialogue aloud, wearied auditors tiptoed out one by one, but Aristotle, and he alone, stayed to the end. On his honeymoon he devoted much of his time to collecting seashells, and he labored so assiduously at his research and writing that he completed 170 works in forty years.

Aristotle was born in 384 in Stagira, in northern Greece. His father was court physician to Amyntas II, King of Macedonia, whose son would become Philip II, father of Alexander the Great. Medical knowledge being traditionally passed down from father to son in Greece, Aristotle must have learned a good deal of biology and medicine; this may account for the scientific and empirical outlook that later made him the quintessential Realist, as opposed to Plato’s quintessential Idealist.

He came to Plato’s Academy at seventeen and stayed until he was thirty-seven; then he left, some say in anger, when Plato died and his nephew, rather than Aristotle, was appointed successor. He spent twelve years away from Athens, first as philosopher-adviser to Hermeas, tyrant of Assus in Asia Minor; then as head of a philosophic academy at Mytilene on Lesbos; then as tutor to the teenage Alexander at Pella, King Philip’s capital. All the while, he was intensely busy reading, observing animal and human behavior, studying the skies, collecting biological specimens, dissecting animals, and writing. Some of his works, cast in dialogue form, were said to have been literary masterpieces, but all of these are lost. The forty-seven that remain, though intellectually profound, are numbingly prosaic and pedantic; they were probably lecture notes and treatises meant only for school use.

At forty-nine, at the height of his powers, Aristotle returned to Athens. Although the presidency of the Academy again became vacant, he was again passed over. He then founded a rival institution, the Lyceum, just outside the city, and there assembled teachers and pupils, a library, and a collection of zoological specimens. He lectured both morning and afternoon while strolling up and down the peripatos, the covered walkway of the Lyceum (whence our word “peripatetic”), yet doubled his scholarly output by parceling out areas of research to students, much like today’s professors, and marshaling their findings in one work after another.

After thirteen years at the Lyceum, he left Athens when anti-Macedonian agitation broke out and he came under attack because of his Macedonian connections. His reason for moving away, he said, was to save the Athenians from sinning twice against philosophy (the first sin having been the conviction and execution of Socrates). He died the following year (322), at sixty-two or sixty-three, of a stomach illness.

None of this explains the immensity of his accomplishments. One can only suppose that, as with Shakespeare, Bach, and Einstein, Aristotle was a genius of the rarest sort who happened to live in a time and at a place that particularly favored his extraordinary abilities.

To be sure, many of his theories were later overturned or abandoned, and his scientific writings are riddled with myths, folklore, and outright errors. In his impressive Historia Animalium (History of Animals), for instance, he reported as fact that mice die if they drink in summertime, that eels are generated spontaneously, that human beings have only eight ribs, and that women have fewer teeth than men.

But unlike Plato he had the hunger for empirical data and the love of painstaking observation that have characterized science ever since. Despite the high value he placed on deductive reasoning and formal logic, he continually stressed the importance of inductive reasoning—the derivation of generalizations from observed cases or examples, a fundamental part of scientific method and a way of arriving at knowledge exactly contrary to that advocated by Plato.

For far from regarding sense perceptions as illusory and untrustworthy, Aristotle considered them the essential raw material of knowledge.18 Extraordinary for one who had studied with Plato, he had, says one Aristotle scholar, “an intense interest in the concrete facts”;19 he regarded the direct observation of real things, except in abstract domains such as mathematics, as the foundation of understanding. In De Generatione Animalium, for instance, after admitting that he does not know how bees procreate, he says:

The facts have not yet been sufficiently established. If ever they are, then credit must be given to observation rather than to theories, and to theories only insofar as they are confirmed by the observed facts.20

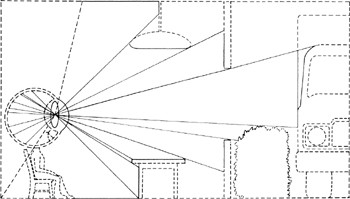

Like earlier philosophers, he sought to understand how perception takes place, but having no way to gather hard data on the matter—testing and experimentation were unknown, the dissection of human bodies impermissible—he relied on metaphysical explanations. He theorized that we do not perceive objects as such but their qualities, such as whiteness and roundness, which are nonmaterial “forms” that inhere in matter. When we see them, they are re-created within the eye, and the sensations they arouse are transmitted through the blood vessels to the mind—which, he thought, must be in the heart, since people often recover from injuries to the head while wounds to the heart are invariably fatal. (The brain’s function, he thought, was to cool the blood when it became overly warm.) He also discussed the possible existence of an interior sense, the “common” sense, by means of which we recognize that various sensations arriving from different sense organs—say, white and round, warm and soft—come from a single object (in this case, a ball of wool).

If we ignore these absurdities, Aristotle’s explanation of how perceptions become knowledge is commonsensical and convincing, and complementary to the perception-based epistemologies of Protagoras and Democritus. Our minds, Aristotle says, recognize the similarities in a series of objects—this is the essence of inductive reasoning—and from those common traits form a “universal,” a word or concept signifying not an actual thing but a sort of thing or a general principle; this is the route to higher levels of knowledge and wisdom. Reason or intellect thus acts upon sense data; it is an active, organizing force.

Having spent so many years examining biological specimens, Aristotle was of no mind to regard the objects of perception as mere illusions, or to rank generalized concepts as more real than the individual things they summarize. Where Plato said that abstract ideas exist eternally, apart from material things, and are more real than they, his realistic pupil said they were only attributes that could be “predicated” of specific subjects. Though he never totally abandoned the metaphysical trappings of Greek thought, he came close to saying that universals have no existence except in the thinking mind. He thus synthesized the two main streams of Greek thinking about knowledge: the extreme emphasis on sense perception of Protagoras and Democritus and the extreme rationalism of Socrates and Plato.

About the relation of mind to body, at times he is hopelessly opaque, at other times crystal clear. The opacity concerns the nature of “soul,” which, waxing metaphysical, he calls the “form” of the body—not its shape but its “essence,” its individuality, or perhaps its capacity to live. This muddy concept was to roil the waters of psychology for many centuries.

On the other hand, his comments about that part of the soul where thinking takes place are lucid and sensible. “Certain writers,” he says in De Anima, “have happily called the soul the place of ideas, but this description applies not to the soul as a whole but merely to the power of thought.”21 Most of the time he calls the part of the soul where thinking takes place the psyche, although sometimes he uses that term to mean the entire soul; despite the inconsistency, he is consistent in saying that the thinking part of the soul is a place where ideas are formed, not a place in which they exist before the soul inhabits the body.

Nor is soul, or psyche, an entity that can exist apart from the body. “It is clear,” he says, “that the soul is not separable from the body, and the same holds good of particular parts of the soul.”22 He rejects the Platonic doctrine of the imprisoned soul whose highest goal is to escape from the bonds of matter; in contrast to Plato’s dualism, his system is essentially monistic. (But this is his mature view. Because his views changed during his lifetime, Christian theologians would be able to find ample material in his early writings to justify their dualism.)

Once he has these matters out of the way, Aristotle gets to his real interest: how the mind uses both deduction and induction to arrive at knowledge. His description constitutes, according to Robert Watson, “the first functional view of mental processes…[For him] psyche is a process; psyche is what psyche does.”23 Psyche isn’t an immaterial essence, nor is it the heart or blood (nor, even if he had located psyche in the brain, would it have been the brain); it is the steps taken in thinking—the functionalist concept that today underlies cognitive science, information theory, and artificial intelligence. No wonder those who know Aristotle’s psychology stand in awe of him.

His description of thought processes sounds as if he based it on laboratory findings. He had none, of course, but being so diligent a collector of biological specimens, he may well have done something analogous, that is, scrutinized his own experiences and those of others, treating them as the specimens on which he based his generalizations.

The most important of these is that the thinking mind, whether functioning deductively or inductively, uses sense perceptions or remembered perceptions to arrive at general truths. Sensation brings us perceptions of the world, memory permits us to store those perceptions, imagination enables us to re-create from memory mental images corresponding to perceptions, and from accumulated images we derive general ideas. Radically differing with his mentor, Plato, Aristotle did not believe that the soul is born with knowledge. According to Daniel Robinson, he believed that

human beings have a cognitive capacity by which the (perceptual) registration of externals leads to their storage in memory, this giving rise to experience, and from this—“or from the whole universal that has come to rest in the soul”—a veritable principle of understanding arises (Post. Anal. 100 1–10).24*

It is an extraordinary vision of what scientific psychology would document twenty-three centuries later.