Webbots, Spiders, and Screen Scrapers

Table of Contents

Old-School Client-Server Technology

The Problem with Browsers

What to Expect from This Book

Learn from My Mistakes

Master Webbot Techniques

Leverage Existing Scripts

About the Website

About the Code

Requirements

Hardware

Software

Internet Access

A Disclaimer (This Is Important)

I. FUNDAMENTAL CONCEPTS AND TECHNIQUES

1. WHAT'S IN IT FOR YOU?

Uncovering the Internet's True Potential

What's in It for Developers?

Webbot Developers Are in Demand

Webbots Are Fun to Write

Webbots Facilitate "Constructive Hacking"

What's in It for Business Leaders?

Customize the Internet for Your Business

Capitalize on the Public's Inexperience with Webbots

Accomplish a Lot with a Small Investment

Final Thoughts

2. IDEAS FOR WEBBOT PROJECTS

Inspiration from Browser Limitations

Webbots That Aggregate and Filter Information for Relevance

Webbots That Interpret What They Find Online

Webbots That Act on Your Behalf

A Few Crazy Ideas to Get You Started

Help Out a Busy Executive

Save Money by Automating Tasks

Protect Intellectual Property

Monitor Opportunities

Verify Access Rights on a Website

Create an Online Clipping Service

Plot Unauthorized Wi-Fi Networks

Track Web Technologies

Allow Incompatible Systems to Communicate

Final Thoughts

3. DOWNLOADING WEB PAGES

Think About Files, Not Web Pages

Downloading Files with PHP's Built-in Functions

Downloading Files with fopen() and fgets()

Downloading Files with file()

Introducing PHP/CURL

Multiple Transfer Protocols

Form Submission

Basic Authentication

Cookies

Redirection

Agent Name Spoofing

Referer Management

Socket Management

Installing PHP/CURL

LIB_http

Familiarizing Yourself with the Default Values

Using LIB_http

Learning More About HTTP Headers

Examining LIB_http's Source Code

Final Thoughts

4. PARSING TECHNIQUES

Parsing Poorly Written HTML

Standard Parse Routines

Using LIB_parse

Splitting a String at a Delimiter: split_string()

Parsing Text Between Delimiters: return_between()

Parsing a Data Set into an Array: parse_array()

Parsing Attribute Values: get_attribute()

Removing Unwanted Text: remove()

Useful PHP Functions

Detecting Whether a String Is Within Another String

Replacing a Portion of a String with Another String

Parsing Unformatted Text

Measuring the Similarity of Strings

Final Thoughts

Don't Trust a Poorly Coded Web Page

Parse in Small Steps

Don't Render Parsed Text While Debugging

Use Regular Expressions Sparingly

5. AUTOMATING FORM SUBMISSION

Reverse Engineering Form Interfaces

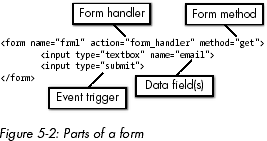

Form Handlers, Data Fields, Methods, and Event Triggers

Form Handlers

Data Fields

Methods

Event Triggers

Unpredictable Forms

JavaScript Can Change a Form Just Before Submission

Form HTML Is Often Unreadable by Humans

Cookies Aren't Included in the Form, but Can Affect Operation

Analyzing a Form

Final Thoughts

Don't Blow Your Cover

Correctly Emulate Browsers

Avoid Form Errors

6. MANAGING LARGE AMOUNTS OF DATA

Organizing Data

Naming Conventions

Storing Data in Structured Files

Storing Text in a Database

Storing Images in a Database

Database or File?

Making Data Smaller

Storing References to Image Files

Compressing Data

Removing Formatting

Thumbnailing Images

Final Thoughts

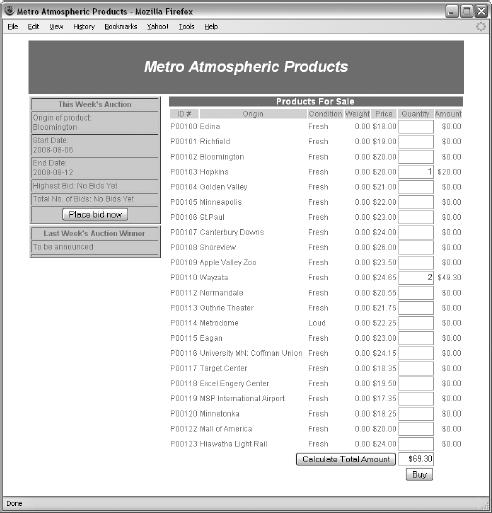

7. PRICE-MONITORING WEBBOTS

The Target

Designing the Parsing Script

Initialization and Downloading the Target

Further Exploration

8. IMAGE-CAPTURING WEBBOTS

Example Image-Capturing Webbot

Creating the Image-Capturing Webbot

Binary-Safe Download Routine

Directory Structure

The Main Script

Further Exploration

Final Thoughts

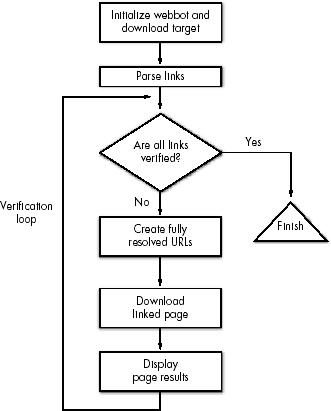

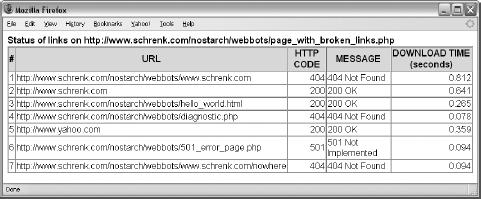

9. LINK-VERIFICATION WEBBOTS

Creating the Link-Verification Webbot

Initializing the Webbot and Downloading the Target

Setting the Page Base

Parsing the Links

Running a Verification Loop

Generating Fully Resolved URLs

Downloading the Linked Page

Displaying the Page Status

Running the Webbot

LIB_http_codes

LIB_resolve_addresses

Further Exploration

10. ANONYMOUS BROWSING WEBBOTS

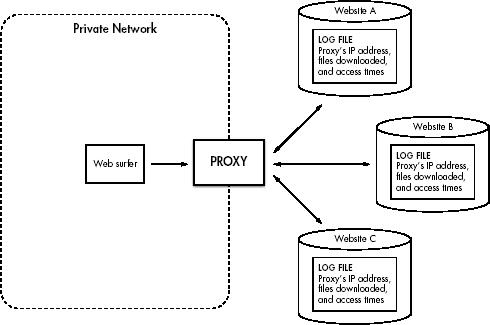

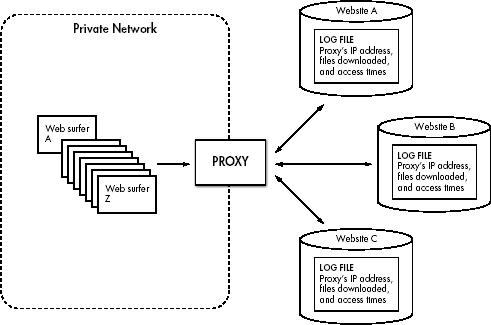

Anonymity with Proxies

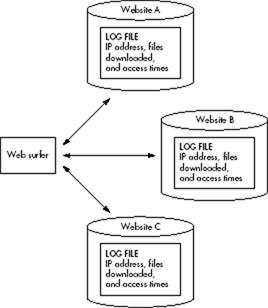

Non-proxied Environments

Your Online Exposure

Proxied Environments

The Anonymizer Project

Writing the Anonymizer

Final Thoughts

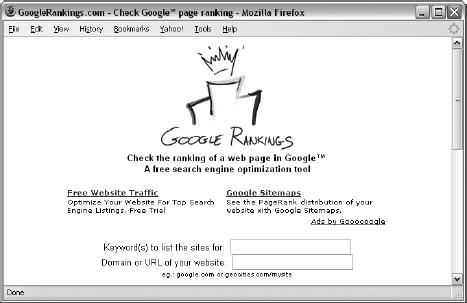

11. SEARCH-RANKING WEBBOTS

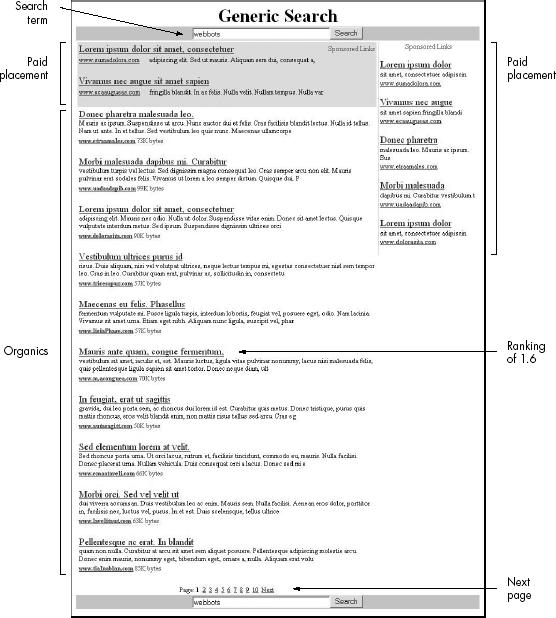

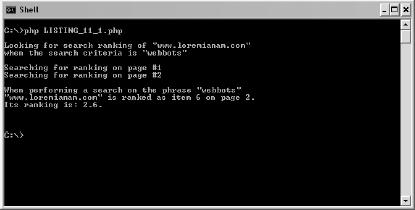

Description of a Search Result Page

What the Search-Ranking Webbot Does

Running the Search-Ranking Webbot

How the Search-Ranking Webbot Works

The Search-Ranking Webbot Script

Initializing Variables

Starting the Loop

Fetching the Search Results

Parsing the Search Results

Final Thoughts

Be Kind to Your Sources

Search Sites May Treat Webbots Differently Than Browsers

Spidering Search Engines Is a Bad Idea

Familiarize Yourself with the Google API

Further Exploration

12. AGGREGATION WEBBOTS

Choosing Data Sources for Webbots

Example Aggregation Webbot

Familiarizing Yourself with RSS Feeds

Writing the Aggregation Webbot

Adding Filtering to Your Aggregation Webbot

Further Exploration

13. FTP WEBBOTS

Example FTP Webbot

PHP and FTP

Further Exploration

14. NNTP NEWS WEBBOTS

NNTP Use and History

Webbots and Newsgroups

Identifying News Servers

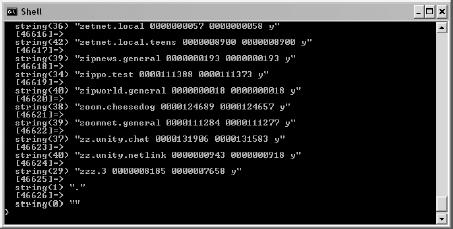

Identifying Newsgroups

Finding Articles in Newsgroups

Reading an Article from a Newsgroup

Further Exploration

15. WEBBOTS THAT READ EMAIL

The POP3 Protocol

Logging into a POP3 Mail Server

Reading Mail from a POP3 Mail Server

Executing POP3 Commands with a Webbot

Further Exploration

Email-Controlled Webbots

Email Interfaces

16. WEBBOTS THAT SEND EMAIL

Email, Webbots, and Spam

Sending Mail with SMTP and PHP

Configuring PHP to Send Mail

Sending an Email with mail()

Writing a Webbot That Sends Email Notifications

Keeping Legitimate Mail out of Spam Filters

Sending HTML-Formatted Email

Further Exploration

Using Returned Emails to Prune Access Lists

Using Email as Notification That Your Webbot Ran

Leveraging Wireless Technologies

Writing Webbots That Send Text Messages

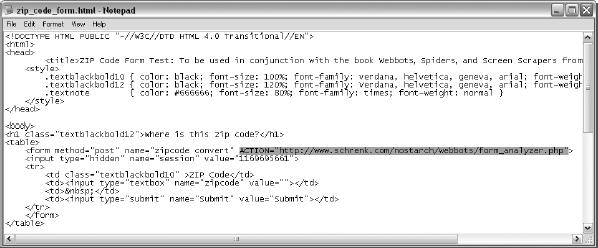

17. CONVERTING A WEBSITE INTO A FUNCTION

Writing a Function Interface

Defining the Interface

Analyzing the Target Web Page

Using describe_zipcode()

Final Thoughts

Distributing Resources

Using Standard Interfaces

Designing a Custom Lightweight "Web Service"

III. ADVANCED TECHNICAL CONSIDERATIONS

18. SPIDERS

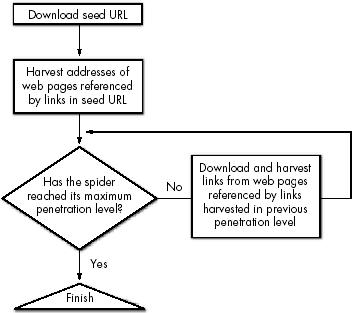

How Spiders Work

Example Spider

LIB_simple_spider

harvest_links()

archive_links()

get_domain()

exclude_link()

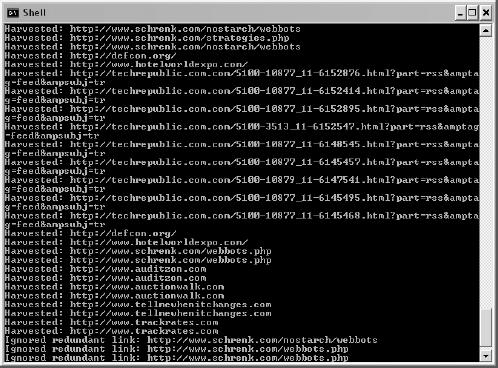

Experimenting with the Spider

Adding the Payload

Further Exploration

Save Links in a Database

Separate the Harvest and Payload

Distribute Tasks Across Multiple Computers

Regulate Page Requests

19. PROCUREMENT WEBBOTS AND SNIPERS

Procurement Webbot Theory

Get Purchase Criteria

Authenticate Buyer

Verify Item

Evaluate Purchase Triggers

Make Purchase

Evaluate Results

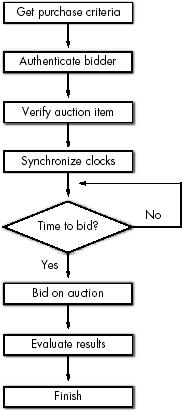

Sniper Theory

Get Purchase Criteria

Authenticate Buyer

Verify Item

Synchronize Clocks

Time to Bid?

Submit Bid

Evaluate Results

Testing Your Own Webbots and Snipers

Further Exploration

Final Thoughts

20. WEBBOTS AND CRYPTOGRAPHY

Designing Webbots That Use Encryption

SSL and PHP Built-in Functions

Encryption and PHP/CURL

A Quick Overview of Web Encryption

Local Certificates

Final Thoughts

21. AUTHENTICATION

What Is Authentication?

Types of Online Authentication

Strengthening Authentication by Combining Techniques

Authentication and Webbots

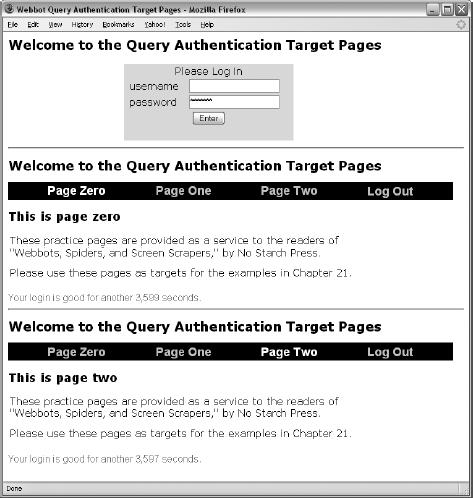

Example Scripts and Practice Pages

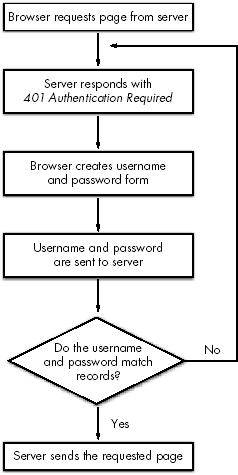

Basic Authentication

Session Authentication

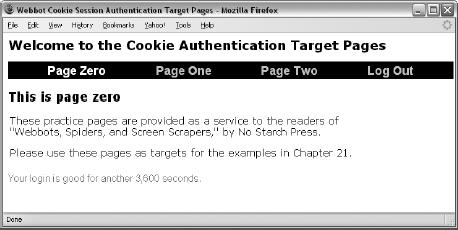

Authentication with Cookie Sessions

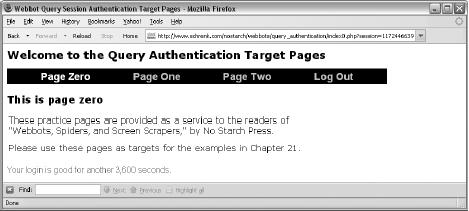

Authentication with Query Sessions

Final Thoughts

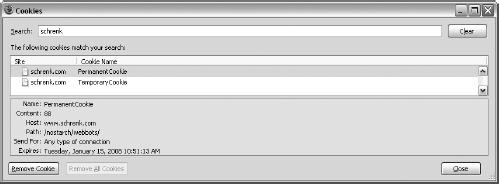

22. ADVANCED COOKIE MANAGEMENT

How Cookies Work

PHP/CURL and Cookies

How Cookies Challenge Webbot Design

Purging Temporary Cookies

Managing Multiple Users' Cookies

Further Exploration

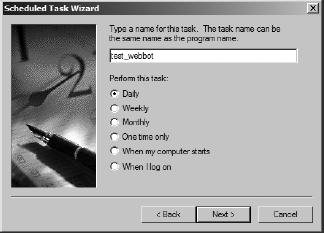

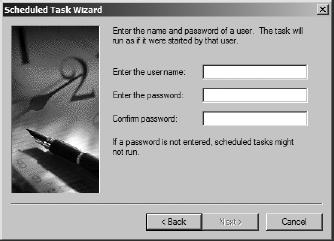

23. SCHEDULING WEBBOTS AND SPIDERS

The Windows Task Scheduler

Preparing Your Webbots to Run as Scheduled Tasks

Scheduling a Webbot to Run Daily

Complex Schedules

Non-Calendar-Based Triggers

Final Thoughts

Determine the Webbot's Best Periodicity

Avoid Single Points of Failure

Add Variety to Your Schedule

24. DESIGNING STEALTHY WEBBOTS AND SPIDERS

Why Design a Stealthy Webbot?

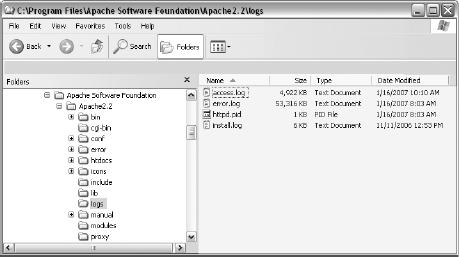

Log Files

Log-Monitoring Software

Stealth Means Simulating Human Patterns

Be Kind to Your Resources

Run Your Webbot During Busy Hours

Don't Run Your Webbot at the Same Time Each Day

Don't Run Your Webbot on Holidays and Weekends

Use Random, Intra-fetch Delays

Final Thoughts

25. WRITING FAULT-TOLERANT WEBBOTS

Types of Webbot Fault Tolerance

Adapting to Changes in URLs

Adapting to Changes in Page Content

Adapting to Changes in Forms

Adapting to Changes in Cookie Management

Adapting to Network Outages and Network Congestion

Error Handlers

26. DESIGNING WEBBOT-FRIENDLY WEBSITES

Optimizing Web Pages for Search Engine Spiders

Well-Defined Links

Google Bombs and Spam Indexing

Title Tags

Meta Tags

Header Tags

Image alt Attributes

Web Design Techniques That Hinder Search Engine Spiders

JavaScript

Non-ASCII Content

Designing Data-Only Interfaces

XML

Lightweight Data Exchange

SOAP

27. KILLING SPIDERS

Asking Nicely

Create a Terms of Service Agreement

Use the robots.txt File

Use the Robots Meta Tag

Building Speed Bumps

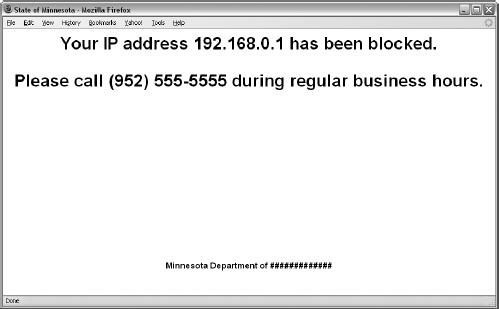

Selectively Allow Access to Specific Web Agents

Use Obfuscation

Use Cookies, Encryption, JavaScript, and Redirection

Authenticate Users

Update Your Site Often

Embed Text in Other Media

Setting Traps

Create a Spider Trap

Fun Things to Do with Unwanted Spiders

Final Thoughts

28. KEEPING WEBBOTS OUT OF TROUBLE

It's All About Respect

Copyright

Do Consult Resources

Don't Be an Armchair Lawyer

Trespass to Chattels

Internet Law

Final Thoughts

A. PHP/CURL REFERENCE

Creating a Minimal PHP/CURL Session

Initiating PHP/CURL Sessions

Setting PHP/CURL Options

CURLOPT_URL

CURLOPT_RETURNTRANSFER

CURLOPT_REFERER

CURLOPT_FOLLOWLOCATION and CURLOPT_MAXREDIRS

CURLOPT_USERAGENT

CURLOPT_NOBODY and CURLOPT_HEADER

CURLOPT_TIMEOUT

CURLOPT_COOKIEFILE and CURLOPT_COOKIEJAR

CURLOPT_HTTPHEADER

CURLOPT_SSL_VERIFYPEER

CURLOPT_USERPWD and CURLOPT_UNRESTRICTED_AUTH

CURLOPT_POST and CURLOPT_POSTFIELDS

CURLOPT_VERBOSE

CURLOPT_PORT

Executing the PHP/CURL Command

Retrieving PHP/CURL Session Information

Viewing PHP/CURL Errors

Closing PHP/CURL Sessions

B. STATUS CODES

HTTP Codes

NNTP Codes

C. SMS EMAIL ADDRESSES

Webbots, Spiders, and Screen Scrapers

Michael Schrenk

Editor

William Pollock

Copyright © 2009

No Starch Press

Dedication

In loving memory

Charlotte Schrenk

1897–1982

WEBBOTS, SPIDERS, AND SCREEN SCRAPERS. Copyright © 2007 by Michael Schrenk.

All rights reserved. No part of this work may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage or retrieval system, without the prior written permission of the copyright owner and the publisher.

Printed on recycled paper in the United States of America

Printed on recycled paper in the United States of America

11 10 09 08 07 1 2 3 4 5 6 7 8 9

ISBN-10: 1-59327-120-4

ISBN-13: 978-1-59327-120-6

Publisher: William Pollock

Production Editor: Christina Samuell

Cover and Interior Design: Octopod Studios

Developmental Editors: Tyler Ortman and William Pollock

Technical Reviewer: Peter MacIntyre

Copyeditor: Megan Dunchak

Compositors: Megan Dunchak, Riley Hoffman, and Christina Samuell

Proofreader: Stephanie Provines

Indexer: Nancy Guenther

For information on book distributors or translations, please contact No Starch Press, Inc. directly:

No Starch Press, Inc.

555 De Haro Street, Suite 250, San Francisco, CA 94107

phone: 415.863.9900; fax: 415.863.9950; [email protected]; www.nostarch.com

Library of Congress Cataloging-in-Publication Data

Schrenk, Michael.

Webbots, spiders, and screen scrapers : a guide to developing internet agents

with PHP/CURL / Michael Schrenk.

p. cm.

Includes index.

ISBN-13: 978-1-59327-120-6

ISBN-10: 1-59327-120-4

1. Web search engines. 2. Internet programming. 3. Internet searching. 4.

Intelligent agents (Computer software) I. Title.

TK5105.884.S37 2007

025.04--dc22

2006026680

No Starch Press and the No Starch Press logo are registered trademarks of No Starch Press, Inc. Other product and company names mentioned herein may be the trademarks of their respective owners. Rather than use a trademark symbol with every occurrence of a trademarked name, we are using the names only in an editorial fashion and to the benefit of the trademark owner, with no intention of infringement of the trademark.

The information in this book is distributed on an "As Is" basis, without warranty. While every precaution has been taken in the preparation of this work, neither the author nor No Starch Press, Inc. shall have any liability to any person or entity with respect to any loss or damage caused or alleged to be caused directly or indirectly by the information contained in it.

ACKNOWLEDGMENTS

I needed support and inspiration from family, friends, and colleagues to write this book. Unfortunately, I did not always acknowledge their contributions when they offered them. Here is a delayed thanks to all of those who helped me.

Thanks to Donna, my wife, who convinced me that I could actually do this, and to my kids, Ava and Gordon, who have always supported my crazy schemes, even though they know it means fewer coffees and chess matches together.

Andy King encouraged me to find a publisher for this project, and Daniel Stenberg, founder of the cURL project, helped me organize my thoughts when this book was barely an outline.

No Starch Press exhibited saint-like patience while I split my time between writing webbots and writing about webbots. Special thanks to Bill, who trusted the concept, Tyler, who edited most of the manuscript, and Christina, who kept me on task. Peter MacIntyre was instrumental in checking for technical errors, and Megan's copyediting improved the book throughout.

Anamika Mishra assisted with the book's website and consistently covered for me when I was busy writing or too tired to code.

Laurie Curtis helped me explore what it might be like to finish a book.

Finally, a tip of the hat goes to Mark, Randy, Megan, Karen, Terri, Susan, Dennis, Dan, and Matt, who were thoughtful enough to ask about my book's progress before inquiring about the status of their projects.

Introduction

My introduction to the World Wide Web was also the beginning of my relationship with the browser. The first browser I used was Mosaic, pioneered by Eric Bina and Marc Andreessen. Andreessen later co-founded Netscape.

Shortly after I discovered the World Wide Web, I began to associate the wonders of the Internet with the simplicity of the browser. By just clicking a hyperlink, I could enjoy the art treasures of the Louvre; if I followed another link, I could peruse a fan site for The Brady Bunch.[1] The browser was more than a software application that facilitated use of the World Wide Web: It was the World Wide Web. It was the new television. And just as television tamed distant video signals with simple channel and volume knobs, browsers demystified the complexities of the Internet with hyperlinks, bookmarks, and back buttons.

Old-School Client-Server Technology

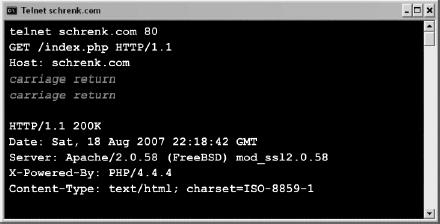

My big moment of discovery came when I learned that I didn't need a browser to view web pages. I realized that Telnet, a program used since the early '80s to communicate with networked computers, could also download web pages, as shown in Figure 2.

Figure 1. Viewing a web page with Telnet

Suddenly, the World Wide Web was something I could understand without a browser. It was a familiar client-server architecture where simple clients worked on tasks found on remote servers. The difference here was that the clients were browsers and the servers dished up web pages.

The only revolutionary thing was that, unlike previous client-server client applications, browsers were easy for anyone to use and soon gained mass acceptance. The Internet's audience shifted from physicists and computer programmers to the public. Unfortunately, the general public didn't understand client-server technology, so the dependency on browsers spread further. They didn't understand that there were other ways to use the World Wide Web.

As a programmer, I realized that if I could use Telnet to download web pages, I could also write programs to do the same. I could write my own browser if I desired, or I could write automated agents (webbots, spiders, and screen scrapers) to solve problems that browsers couldn't.

[1] I stumbled across a fan site for The Brady Bunch during my first World Wide Web experience.

The Problem with Browsers

The basic problem with browsers is that they're manual tools. Your browser only downloads and renders websites: You still need to decide if the web page is relevant, if you've already seen the information it contains, or if you need to follow a link to another web page. What's worse, your browser can't think for itself. It can't notify you when something important happens online, and it certainly won't anticipate your actions, automatically complete forms, make purchases, or download files for you. To do these things, you'll need the automation and intelligence only available with a webbot, or a web robot.

What to Expect from This Book

This book identifies the limitations of typical web browsers and explores how you can use webbots to capitalize on these limitations. You'll learn how to design and write webbots through sample scripts and example projects. Moreover, you'll find answers to larger design questions like these:

- Where do ideas for webbot projects come from?

- How can I have fun with webbots and stay out of trouble?

- Is it possible to write stealthy webbots that run without detection?

- What is the trick to writing robust, fault-tolerant webbots that won't break as Internet content changes?

Learn from My Mistakes

I've written webbots, spiders, and screen scrapers for nearly 10 years, and in the process I've made most of the mistakes someone can make. Because webbots are capable of making unconventional demands on websites, system administrators can confuse webbots' requests with attempts to hack into their systems. Thankfully, none of my mistakes has ever led to a courtroom, but they have resulted in intimidating phone calls, scary emails, and very awkward moments. Happily, I can say that I've learned from these situations, and it's been a very long time since I've been across the desk from an angry system administrator. You can spare yourself a lot of grief by reading my stories and learning from my mistakes.

Master Webbot Techniques

You will learn about the technology needed to write a wide assortment of webbots. Some technical skills you'll master include these:

- Programmatically downloading websites

- Decoding encrypted websites

- Unlocking authenticated web pages

- Managing cookies

- Parsing data

- Writing spiders

- Managing the large amounts of data that webbots generate

Leverage Existing Scripts

This book uses several code libraries that make it easy for you to write webbots, spiders, and screen scrapers. The functions and declarations in these libraries provide the basis for most of the example scripts used in this book. You'll save time by using these libraries because they do the underlying work, leaving the upper-level planning and development to you. All of these libraries are available for download at this book's website.

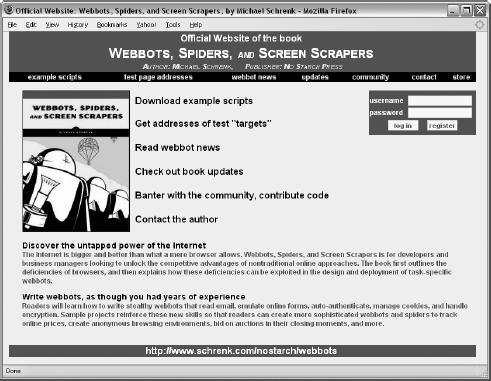

About the Website

This book's website (http://www.schrenk.com/nostarch/webbots) is an additional resource for you to use. To the extent that it's possible, all the example projects in this book use web pages on the companion site as targets, or resources for your webbots to download and take action on. These targets provided a consistent (unchanging) environment for you to hone your webbot writing skills. A controlled learning environment is important because, regardless of our best efforts, webbots can fail when their target websites change. Knowing that your targets are unchanging makes the task of debugging a little easier.

The companion website also has links to other sites of interest, white papers, book updates, and an area where you can communicate with other webbot developers (see Figure 2). From the website, you will also be able to access all of the example code libraries used in this book.

Figure 2. The official website of Webbots, Spiders, and Screen Scrapers

About the Code

Most of the scripts in this book are straight PHP. However, sometimes PHP and HTML are intermixed in the same script—and in many cases, on the same line. In those situations, a bold typeface differentiates PHP scripts from HTML, as shown in Listing 1.

You may use any of the scripts in this book for your own personal use, as long as you agree not to redistribute them. If you use any script in this book, you also consent to bear full responsibility for its use and execution and agree not to sell or create derivative products, under any circumstances. However, if you do improve any of these scripts or develop entirely new (related) scripts, you are encouraged to share them with the webbot community via the book's website.

<h1>Coding Conventions for Embedded PHP</h1>

<table border="0" cellpadding="1" cellspacing="0">

<tr>

<th>Name</th>

<th>Address</th>

</tr>

<? for ($x=0; $x<sizeof($person_array); $x++)

{ ?>

<tr>

<td><? echo person_array[$x]['NAME']?></td>

<td><? echo person_array[$x]['ADDRESS']?></td>

</tr>

<? } ?>

</table>

Listing 1-1: Bold typeface differentiates PHP from HTML script

The other thing you should know about the example scripts is that they are teaching aids. The scripts may not reflect the most efficient programming method, because their primary goal is readability.

Note

The code libraries used by this book are governed by the W3C Software Notice and License (http://www.w3.org/Consortium/Legal/2002/copyright-software-20021231) and are available for download from the book's website. The website is also where the software is maintained. If you make meaningful contributions to this code, please go to the website to see how your improvements may be part of the next distribution. The software examples depicted in this book are protected by this book's copyright.

Requirements

Knowing HTML and the basics of how the Internet works will be necessary for using this book. If you are a beginning programmer with even nominal computer network experience, you'll be fine. It is important to recognize, however, that this book will not teach you how to program or how TCP/IP, the protocol of the Internet, works.

Hardware

You don't need elaborate hardware to start writing webbots. If you have a secondhand 33 MHz Pentium computer, you have the minimum requirement to play with all the examples in this book. Any of the following hardware is appropriate for using the examples and information in this book:

- A personal computer that uses a Windows 95, Windows XP, or Windows Vista operating system

- Any reasonably modern Linux-, Unix-, or FreeBSD-based computer

- A Macintosh running OS X (or later)

It will also prove useful to have ample storage. This is particularly true if your plan is to write spiders, self-directed webbots, which can consume all available resources (especially hard drives) if they are allowed to download too many files.

Software

In an effort to be as relevant as possible, the software examples in this book use PHP,[2] cURL,[3] and MySQL.[4] All of these software technologies are available as free downloads from their respective websites. In addition to being free, these software packages are wonderfully portable and function well on a variety of computers and operating systems.

Note

If you're going to follow the script examples in this book, you will need a basic knowledge of PHP. This book assumes you know how to program.

Internet Access

A connection to the Internet is very handy, but not entirely necessary. If you lack a network connection, you can create your own local intranet (one or more webservers on a private network) by loading Apache[5] onto your computer, and if that's not possible, you can design programs that use local files as targets. However, neither of these options is as fun as writing webbots that use a live Internet connection. In addition, if you lack an Internet connection, you will not have access to the online resources, which add a lot of value to your learning experience.

[2] See http://www.php.net.

[3] See http://curl.haxx.se.

[4] See http://www.mysql.com.

[5] See http://www.apache.org.

A Disclaimer (This Is Important)

As with anything you develop, you must take responsibility for your own actions. From a technology standpoint, there is little to distinguish a beneficial webbot from one that does destructive things. The main difference is the intent of the developer (and how well you debug your scripts). Therefore, it's up to you to do constructive things with the information in this book and not violate copyright law, disrupt networks, or do anything else that would be troublesome or illegal. And if you do, don't call me.

Please reference Chapter 28 for insight into how to write webbots ethically. Chapter 28 will help you do this, but it won't provide legal advice. If you have questions, talk to a lawyer before you experiment.

Part I. FUNDAMENTAL CONCEPTS AND TECHNIQUES

While most web development books explain how to create websites, this book teaches developers how to combine, adapt, and automate existing websites to fit their specific needs. Part I introduces the concept of web automation and explores elementary techniques to harness the resources of the Web.

This chapter explores why it is fun to write webbots and why webbot development is a rewarding career with expanding possibilities.

We've been led to believe that the only way to use a website is with a browser. If, however, you examine what you want to do, as opposed to what a browser allows you to do, you'll look at your favorite web resources in a whole new way. This chapter discusses existing as well as potential webbots.

This chapter introduces PHP/CURL, the free library that makes it easy to download web pages—even when the targeted web pages use advanced techniques like forwarding, encryption, authentication, and cookies.

Downloaded web pages aren't of any use until your webbot can separate the data you need from the data you don't need.

To truly automate web agents, your application needs the ability to automatically upload data to online forms.

Spiders in particular can generate huge amounts of data. That's why it's important for you to know how to effectively store and reduce the size of web pages, text, and images.

You may already have experience from other areas of computer science that you can apply to these activities. However, even if these concepts are familiar to you, developing webbots may force you to view these skills in a different context, so the following chapters are still worth reading. If you don't already have experience in these areas, the next six chapters will provide the basics for designing and developing webbots. You'll use this groundwork in the other projects and advanced considerations discussed later.

Chapter 1. WHAT'S IN IT FOR YOU?

Whether you're a software developer looking for new skills or a business leader looking for a competitive advantage, this chapter is where you will discover how webbots create opportunities.

Uncovering the Internet's True Potential

Webbots present a virtually untapped resource for software developers and business leaders. This is because the public has yet to realize that most of the Internet's potential lies outside the capability of the existing browser/website paradigm. For example, in today's world, people are satisfied with pointing a browser at a website and using whatever information or services they find there. With webbots, the focus of the Internet will shift from what's available on individual websites toward what people actually want to accomplish. To this end, webbots will use as many online resources as required to satisfy their individual needs.

To be successful with webbots, you need to stop thinking like other Internet users. Namely, you need to stop thinking about the Internet in terms of a browser viewing one website at a time. This will be difficult, because we've all become dependent on browsers. While you can do a wide variety of things with a browser, you also pay a price for that versatility—browsers need to be sufficiently generic to be useful in a wide variety of circumstances. As a result, browsers can do general things well, but they lack the ability to do specific things exceptionally well.[6] Webbots, on the other hand, can be programmed for specific tasks and can perform those tasks with perfection. Additionally, webbots have the ability to automate anything you do online or notify you when something needs to be done.

[6] For example, they can't act on your behalf, filter content for relevance, or perform tasks automatically.

What's in It for Developers?

Your ability to write a webbot can distinguish you from a pack of lesser developers. Web developers—who've gone from designing the new economy of the late 1990s to falling victim to it during the dot-com crash of 2001—know that today's job market is very competitive. Even today's most talented developers can have trouble finding meaningful work. Knowing how to write webbots will expand your ability as a developer and make you more valuable to your employer or potential employers.

A webbot writer differentiates his or her skill set from that of someone whose knowledge of Internet technology extends only to creating websites. By designing webbots, you demonstrate that you have a thorough understanding of network technology and a variety of network protocols, as well as the ability to use existing technology in new and creative ways.

Webbot Developers Are in Demand

There are many growth opportunities for webbot developers. You can demonstrate this for yourself by looking at your website's file access logs and recording all the non-browsers that have visited your website. If you compare current server logs to those from a year ago, you should notice a healthy increase in traffic from nontraditional web clients or webbots. Someone has to write these automated agents, and as the demand for webbots increases, so does the demand for webbot developers.

Hard statistics on the growth of webbot use are hard to come by, since many webbots defy detection and masquerade as traditional web browsers. In fact, the value that webbots bring to businesses forces most webbot projects underground. I can't talk about most of the webbots I've developed because they create competitive advantages for clients, and they'd rather keep those techniques secret. Regardless of the actual numbers, it's a fact that webbots and spiders comprise a large amount of today's Internet traffic and that many developers are required to both maintain existing webbots and develop new ones.

Webbots Are Fun to Write

In addition to solving serious business problems, webbots are also fun to write. This should be welcome news to seasoned developers who no longer experience the thrill of solving a problem or using a technology for the first time. Without a little fun, it's easy for developers to get bored and conclude that software is simply a sequence of instructions that do the same thing every time a program runs. While predictability makes software dependable, it also makes it tiresome to write. This is especially true for computer programmers who specialize in a specific industry and lack diversity in tasks. At some point in their careers, nearly all of the programmers I know have become very tired of what they do, in spite of the fact that they still like to write computer programs.

Webbots, however, are almost like games, in that they can pleasantly surprise their developers with their unpredictability. This is because webbots operate on data that changes frequently, and they respond slightly differently every time they run. As a result, webbots become impulsive and lifelike. Unlike other software, webbots feel organic! Once you write a webbot that does something wonderfully unexpected, you'll have a hard time describing the experience to those writing traditional software applications.

Webbots Facilitate "Constructive Hacking"

By its strict definition, hacking is the process of creatively using technology for a purpose other than the one originally intended. By using web pages, news groups, email, or other online technology in unintended ways, you join the ranks of innovators that combine and alter existing technology to create totally new and useful tools. You'll also broaden the possibilities for using the Internet.

Unfortunately, hacking also has a dark side, popularized by stories of people breaking into systems, stealing private data, and rendering online services unusable. While some people do write destructive webbots, I don't condone that type of behavior here. In fact, Chapter 28 is dedicated to this very subject.

What's in It for Business Leaders?

Few businesses gain a competitive advantage simply by using the Internet. Today, businesses need a unique online strategy to gain a competitive advantage. Unfortunately, most businesses limit their online strategy to a website—which, barring some visual design differences, essentially functions like all the other websites within the industry.

Customize the Internet for Your Business

Most of the webbot projects I've developed are for business leaders who've become frustrated with the Internet as it is. They want added automation and decision-making capability on the websites they use to run their businesses. Essentially, they want webbots that customize other people's websites (and the data those sites contain) for the specific way they do business. Progressive businesses use webbots to improve their online experience, optimizing how they buy things, how they gather facts, how they're notified when things change, and how to enforce business rules when making online purchases.

Businesses that use webbots aren't limited to envisioning the Internet as a set of websites that are accessed by browsers. Instead, they see the Internet as a stockpile of varied resources that they can customize (using webbots) to serve their specific needs.

There has always been a lag between when people figure out how to do something manually and when they figure out how to automate the process. Just as chainsaws replaced axes and as sewing machines superseded needles and thimbles, it is only natural to assume that new (automated) methods for interacting with the Internet will follow the methods we use today. The companies that develop these processes will be the first to enjoy the competitive advantage created by their vision.

Capitalize on the Public's Inexperience with Webbots

Most people have very little experience using the Internet with anything other than a browser, and even if people have used other Internet clients like email or news readers, they have never thought about how their online experience could be improved through automation. For most, it just hasn't been an issue.

For businesspeople, blind allegiance to browsers is a double-edged sword. In one respect, it's good that people aren't familiar with the benefits that webbots provide—this provides opportunities for you to develop webbot projects that offer competitive advantages. On the other hand, if your supervisors are used to the Internet as seen through a browser alone, you may have a hard time selling your webbot projects to management.

Accomplish a Lot with a Small Investment

Webbots can achieve amazing results without elaborate setups. I've used obsolete computers with slow, dial-up connections to run webbots that create completely new revenue channels for businesses. Webbots can even be designed to work with existing office equipment like phones, fax machines, and printers.

Final Thoughts

One of the nice things about webbots is that you can create a large effect without making something difficult for customers to use. In fact, customers don't even need to know that a webbot is involved. For example, your webbots can deliver services through traditional-looking websites. While you know that you're doing something radically innovative, the end users don't realize what's going on behind the scenes—and they don't really need to know about the hordes of hidden webbots and spiders combing the Internet for the data and services they need. All they know is that they are getting an improved Internet experience. And in the end, that's all that matters.

Chapter 2. IDEAS FOR WEBBOT PROJECTS

It's often more difficult to find applications for new technology than it is to learn the technology itself. Therefore, this chapter focuses on encouraging you to generate ideas for things that you can do with webbots. We'll explore how webbots capitalize on browser limitations, and we'll see a few examples of what people are currently doing with webbots. We'll wrap up by throwing out some wild ideas that might help you expand your expectations of what can be done online.

Inspiration from Browser Limitations

A useful method for generating ideas for webbot projects is to study what cannot be done by simply pointing a browser at a typical website. You know that browsers, used in traditional ways, cannot automate your Internet experience. For example, they have these limitations:

- Browsers cannot aggregate and filter information for relevance

- Browsers cannot interpret what they find online

- Browsers cannot act on your behalf

However, a browser may leverage the power of a webbot to do many things that it could not do alone. Let's look at some real-life examples of how browser limitations were leveraged into actual webbot projects.

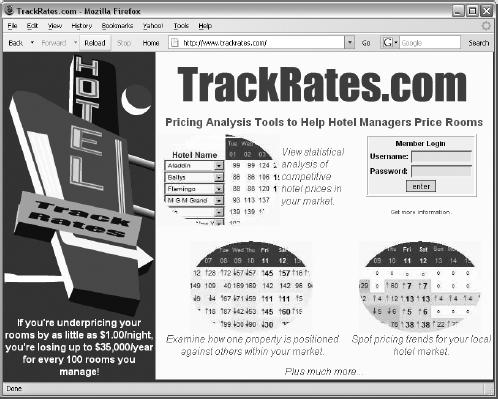

Webbots That Aggregate and Filter Information for Relevance

TrackRates.com (http://www.trackrates.com, shown in Figure 2-1) is a website that deploys an army of webbots to aggregate and filter hotel room prices from travel websites. By identifying room prices for specific hotels for specific dates, it determines the actual market value for rooms up to three months into the future. This information helps hotel managers intelligently price rooms by specifically knowing what the competition is charging for similar rooms. TrackRates.com also reveals market trends by performing statistical analysis on room prices, and it tries to determine periods of high demand by indicating dates on which hotels have booked all of their rooms.

Figure 2-1. TrackRates.com

I wrote TrackRates.com to help hotel managers analyze local markets and provide facts for setting room prices. Without the TrackRates.com webbot, hotel managers either need to guess what their rooms are worth, rely on less current information about their local hotel market, or go through the arduous task of manually collecting this data.

Webbots That Interpret What They Find Online

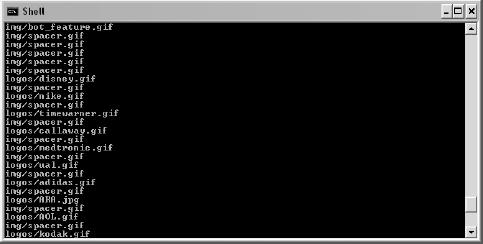

WebSiteOptimization.com (http://www.websiteoptimization.com) uses a webbot to help web developers create websites that use resources effectively. This webbot accepts a web page's URL (as shown in Figure 2-1) and analyzes how each graphic, CSS, and JavaScript file is used by the web page. In the interest of full disclosure, I should mention that I wrote the back end for this web page analyzer.

Figure 2-2. A website-analyzing webbot

The WebSiteOptimization.com webbot analyzes the data it collects and offers suggestions for optimizing website performance. Without this tool, developers would have to manually parse through their HTML code to determine which files are required by web pages, how much bandwidth they are using, and how the organization of the web page affects its performance.

Webbots That Act on Your Behalf

Pokerbots, webbots that play online poker, are a response to the recent growth in online gambling sites, particularly gaming sites with live poker rooms. While the action in these pokers sites is live, not all the players are. Some online poker players are webbots, like Poker Robot, shown in Figure 2-3.

Webbots designed to play online poker not only know the rules of Texas hold 'em but use predetermined business rules to expertly read how others play. They use this information to hold, fold, or bet appropriately. Reportedly, these automated players can very effectively pick the pockets of new and inexperienced poker players. Some collusion webbots even allow one virtual player to play multiple hands at the same table, while making it look like a separate person is playing each hand. Imagine playing against a group of people who not only know each other's cards, but hold, fold, and bet against you as a team!

Obviously, such webbots that play expert poker (and cheat) provide a tremendous advantage. Nobody knows exactly how prevalent pokerbots are, but they have created a market for anti-pokerbot software like Poker BodyGuard, distributed by StopPokerCheaters.com.

Figure 2-3. An example pokerbot

A Few Crazy Ideas to Get You Started

One of the goals of this book is to encourage you to write new and experimental webbots of your own design. A way to jumpstart this process is to brainstorm and generate some ideas for potential projects. I've taken this opportunity to list a few ideas to get you started. These ideas are not here necessarily because they have commercial value. Instead, they should act as inspiration for your own webbots and what you want to accomplish online.

When designing a webbot, remember that the more specifically you can define the task, the more useful your webbot will be. What can you do with a webbot? Let's look at a few scenarios.

Help Out a Busy Executive

Suppose you're a busy executive type and you like to start your day reading your online industry publication. Time is limited, however, and you only let yourself read industry news until you've finished your first cup of coffee. Therefore, you don't want to be bothered with stories that you've read before or that you know are not relevant to your business. You ask your developer to create a specialized webbot that consolidates articles from your favorite industry news sources and only displays links to stories that it has not shown you before.

The webbot could ignore articles that contain certain key phrases you previously entered in an exclusion list[7] and highlight articles that contain references to you or your competitors. With such an application, you could quickly scan what's happening in your industry and only spend time reading relevant articles. You might even have more time to enjoy your coffee.

Save Money by Automating Tasks

It's possible to design a webbot that automatically buys inventory for a store, given a predetermined set of buying criteria. For example, assume you own a store that sells used travel gear. Some of your sources for inventory are online auction websites.[8] Say you are interested in bidding on under-priced Tumi suitcases during the closing minute of their auctions. If you don't use a webbot of some sort, you will have to use a web browser to check each auction site periodically.

Without a webbot, it can be expensive to use the Internet in a business setting, because repetitive tasks (like procuring inventory) are time consuming without automation. Additionally, the more mundane the task, the greater the opportunity for human error. Checking online auctions for products to resell could easily consume one or two hours a day—up to 25 percent of a 40-hour work week. At that rate, someone with an annual salary of $80,000 would cost a company $20,000 a year to procure inventory (without a webbot). That cost does not include the cost of opportunities lost while the employee manually surfs auction sites. In scenarios like this, it's easy to see how product acquisition with a webbot saves a lot of money—even for a small business with small requirements. Additionally, a webbot may uncover bargains missed by someone manually searching the auction site.

Protect Intellectual Property

You can write a webbot to protect your online intellectual property. For example, suppose you spent many hours writing a JavaScript program. It has commercial value, and you license the script for others to use for a fee. You've been selling the program for a few months and have learned that some people are downloading and using your program without paying for it. You write a webbot to find websites that are using your JavaScript program without your permission. This webbot searches the Internet and makes a list of URLs that reference your JavaScript file. In a separate step, the webbot does a whois lookup on the domain to determine the owner from the domain registrar.[9] If the domain is not one of your registered users, the webbot compiles contact information from the domain registrar so you can contact the parties who are using unlicensed copies of your code.

Monitor Opportunities

You can also write webbots that alert you when particular opportunities arise. For example, let's say that you have an interest in acquiring a Jack Russell Terrier.[10] Instead of devoting part of each day to searching for your new dog, you decide to write a webbot to search for you and notify you when it finds a dog meeting your requirements. Your webbot performs a daily search of the websites of local animal shelters and dog rescue organizations. It parses the contents of the sites, looking for your dog. When the webbot finds a Jack Russell Terrier, it sends you an email notification describing the dog and its location. The webbot also records this specific dog in its database, so it doesn't send additional notifications for the same dog in the future. This is a fairly common webbot task, which could be modified to automatically discover job listings, sports scores, or any other timely information.

Verify Access Rights on a Website

Webbots may prevent the potentially nightmarish situation that exists for any web developer who mistakenly gives one user access to another user's data. To avoid this situation, you could commission a webbot to verify that all users receive the correct access to your site. This webbot logs in to the site with every viable username and password. While acting on each user's behalf, the webbot accesses every available page and compares those pages to a list of appropriate pages for each user. If the webbot finds a user is inadvertently able to access something he or she shouldn't, that account is temporarily suspended until the problem is fixed. Every morning before you arrive at your office, the webbot emails a report of any irregularities it found the night before.

Create an Online Clipping Service

Suppose you're very vain, and you'd like a webbot to send an email to your mother every time a major news service mentions your name. However, since you're not vain enough to check all the main news websites on a regular basis, you write a webbot that accomplishes the task for you. This webbot accesses a collection of websites, including CNN, Forbes, and Fortune. You design your webbot to look only for articles that mention your name, and you employ an exclusion list to ignore all articles that contain words or phrases like shakedown, corruption, or money laundering. When the webbot finds an appropriate article, it automatically sends your mother an email with a link to the article. Your webbot also blind copies you on all emails it sends so you know what she's talking about when she calls.

Plot Unauthorized Wi-Fi Networks

You could write a webbot that aids in maintaining network security on a large corporate campus. For example, suppose that you recently discovered that you have a problem with employees attaching unauthorized wireless access points to your network. Since these unauthorized access points occur inside your firewalls and proxies, you recognize that these unauthorized Wi-Fi networks pose a security risk that you need to control. Therefore, in addition to a new security policy, you decide to create a webbot that automatically finds and records the location of all wireless networks on your corporate campus.

You notice that your mail room uses a small metal cart to deliver mail. Because this cart reaches every corner of the corporate campus on a daily basis, you seek and obtain permission to attach a small laptop computer with a webbot and Global Positioning System (GPS) card to the cart. As your webbot hitches a ride through the campus, it looks for open wireless network connections. When it finds a wireless network, it uses the open network to send its GPS location to a special website. This website logs the GPS coordinates, IP address, and date of uplink in a database. If you did your homework correctly, in a few days your webbot should create a map of all open Wi-Fi networks, authorized and unauthorized, in your entire corporate campus.

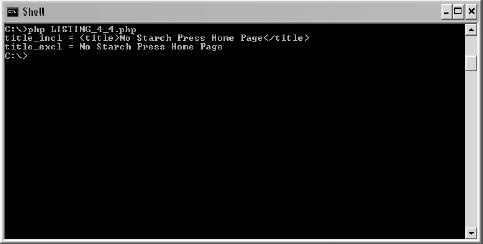

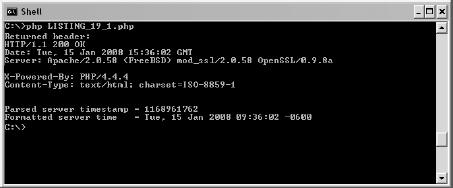

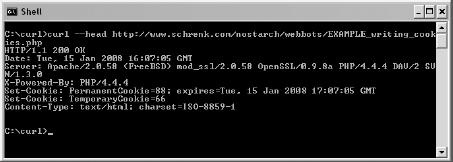

Track Web Technologies

You could write webbots that use web page headers, the information that servers send to browsers so they may correctly render websites, to maintain a list of web technologies used by major corporations. Headers typically indicate the type of webserver (and often the operating system) that websites use, as shown in Figure 2-4.

Figure 2-4. A web page header showing server technology

Your webbot starts by accessing the headers of each website from a list that you keep in a database. It then parses web technology information from the header. Finally, the webbot stores that information in a database that is used by a graphing program to plot how server technology choices change over time.

Allow Incompatible Systems to Communicate

In addition to creating human-readable output, you could design a webbot that only talks to other computers. For example, let's say that you want to synchronize two databases, one on a local private network and one that's behind a public website. In this case, synchronization (ensuring that both databases contain the same information) is difficult because the systems use different technologies with incompatible synchronization techniques. Given the circumstances, you could write a webbot that runs on your private network and, for example, analyzes the public database through a password-protected web service every morning. The webbot uses the Internet as a common protocol between these databases, analyzes data on both systems, and exchanges the appropriate data to synchronize the two databases.

[7] An exclusion list is a list of keywords or phrases that are ignored by a webbot.

[8] Some online auctions actually provide tools to help you write webbots that manage auctions. If you're interested in automating online auctions, check out eBay's Developers Program (http://developer.ebay.com).

[9] whois is a service that returns information about the owner of a website. You can do the equivalent of a whois from a shell script or from an online service.

[10] I actually met my dog online.

Final Thoughts

Studying browser limitations is one way to uncover ideas for new webbot designs. You've seen some real-world examples of webbots in use and read some descriptions of conceptual webbot designs. But, enough with theory—let's head to the lab!

The next four chapters describe the basics of webbot development: downloading pages, parsing data, emulating form submission, and managing large amounts of data. Once you master these concepts, you can move on to actual webbot projects.

Chapter 3. DOWNLOADING WEB PAGES

The most important thing a webbot does is move web pages from the Internet to your computer. Once the web page is on your computer, your webbot can parse and manipulate it.

This chapter will show you how to write simple PHP scripts that download web pages. More importantly, you'll learn PHP's limitations and how to overcome them with PHP/CURL, a special binding of the cURL library that facilitates many advanced network features. cURL is used widely by many computer languages as a means to access network files with a number of protocols and options.

Note

While web pages are the most common targets for webbots and spiders, the Web is not the only source of information for your webbots. Later chapters will explore methods for extracting data from newsgroups, email, and FTP servers, as well.

Prior to discovering PHP, I wrote webbots in a variety of languages, including Visual Basic, Java, and Tcl/Tk. But due to its simple syntax, in-depth string parsing capabilities, networking functions, and portability, PHP proved ideal for webbot development. However, PHP is primarily a server language, and its chief purpose is to help webservers interpret incoming requests and send the appropriate web pages in response. Since webbots don't serve pages (they request them), this book supplements PHP built-in functions with PHP/CURL and a variety of libraries, developed specifically to help you learn to write webbots and spiders.

Think About Files, Not Web Pages

To most people, the Web appears as a collection of web pages. But in reality, the Web is collection of files that form those web pages. These files may exist on servers anywhere in the world, and they only create web pages when they are viewed together. Because browsers simplify the process of downloading and rendering the individual files that make up web pages, you need to know the nuts and bolts of how web pages are put together before you write your first webbot.

When your browser requests a file, as shown in Figure 3-1, the webserver that fields the request sends your browser a default or index file, which maps the location of all the files that the web page needs and tells how to render the text and images that comprise that web page.

Figure 3-1. When a browser requests a web page, it first receives an index file.

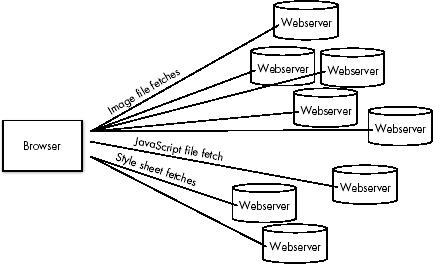

As a rule, this index file also contains references to the other files required to render the complete web page,[11] as shown in Figure 3-2. These may include images, JavaScript, style sheets, or complex media files like Flash, QuickTime, or Windows Media files. The browser downloads each file separately, as it is referenced by the index file.

Figure 3-2. Downloading files, as they are referenced by the index file

For example, if you request a web page with references to eight items your single web page actually executes nine separate file downloads (one for the web page and one for each file referenced by the web page). Usually, each file resides on the same server, but they could just as easily exist on separate domains, as shown in Figure 3-2.

[11] Some very simple websites consist of only one file.

Downloading Files with PHP's Built-in Functions

Before you can appreciate PHP/CURL, you'll need to familiarize yourself with PHP's built-in functions for downloading files from the Internet.

Downloading Files with fopen() and fgets()

PHP includes two simple built-in functions for downloading files from a network—fopen() and fgets(). The fopen() function does two things. First, it creates a network socket, which represents the link between your webbot and the network resource you want to retrieve. Second, it implements the HTTP protocol, which defines how data is transferred. With those tasks completed, fgets() leverages the networking ability of your computer's operating system to pull the file from the Internet.

Creating Your First Webbot Script

Let's use PHP's built-in functions to create your first webbot, which downloads a "Hello, world!" web page from this book's companion website. The short script is shown in Listing 3-1.

# Define the file you want to download

$target = "http://www.schrenk.com/nostarch/webbots/hello_world.html";

$file_handle = fopen($target, "r");

# Fetch the file

while (!feof($file_handle))

echo fgets($file_handle, 4096);

fclose($file_handle);

Listing 3-1: Downloading a file from the Web with fopen() and fgets()

As shown in Listing 3-1, fopen() establishes a network connection to the target, or file you want to download. It references this connection with a file handle, or network link called $file_handle. The script then uses fopen() to fetch and echo the file in 4,096-byte chunks until it has downloaded and displayed the entire file. Finally, the script executes an fclose() to tell PHP that it's finished with the network handle.

Before we can execute the example in Listing 3-1, we need to examine the two ways to execute a webbot: You can run a webbot either in a browser or in a command shell.[12]

Executing Webbots in Command Shells

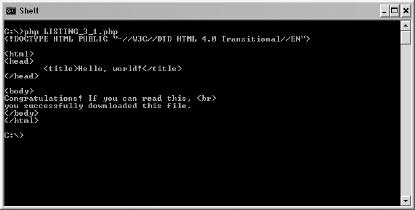

If you have a choice, it is usually better to execute webbots from a shell or command line. Webbots generally don't care about web page formatting, so they will display exactly what is returned from a webserver. Browsers, in contrast, will interpret HTML tags as instructions for rendering the web page. For example, Figure 3-3 shows what Listing 3-1 looks like when executed in a shell.

Figure 3-3. Running a webbot script in a shell

Executing Webbots in Browsers

To run a webbot script in a browser, simply load the script on a webserver and execute it by loading its URL into the browser's location bar as you would any other web page. Contrast Figure 3-3 with Figure 3-4, where the same script is run within a browser. The HTML tags are gone, as well as all of the structure of the returned file; the only things displayed are two lines of text. Running a webbot in a browser only shows a partial picture and often hides important information that a webbot needs.

Note

To display HTML tags within a browser, surround the output with <xmp> and </xmp> tags.

Figure 3-4. Browser "rendering" the output of a webbot

Browser buffering is another complication you might run into if you try to execute a webbot in a browser. Buffering is useful when you're viewing web pages because it allows a browser to wait until it has collected enough of a web page before it starts rendering or displaying the web page. However, browser buffering is troublesome for webbots because they frequently run for extended periods of time—much longer than it would take to download a typical web page. During prolonged webbot execution, status messages written by the webbot may not be displayed by the browser while it is buffering the display.

I have one webbot that runs continuously; in fact, it once ran for seven months before stopping during a power outage. This webbot could never run effectively in a browser because browsers are designed to render web pages with files of finite length. Browsers assume short download periods and may buffer an entire web page before displaying anything—therefore, never displaying the output of your webbot.

Note

Browsers can still be very useful for creating interfaces that set up or control the actions of a webbot. They can also be useful for displaying the results of a webbot's work.

Downloading Files with file()

An alternative to fopen() and fgets() is the function file(), which downloads formatted files and places them into an array. This function differs from fopen() in two important ways: One way is that, unlike fopen(), it does not require you to create a file handle, because it creates all the network preparations for you. The other difference is that it returns the downloaded file as an array, with each line of the downloaded file in a separate array element. The script in Listing 3-2 downloads the same web page used in Listing 3-1, but it uses the file() command.

<?

// Download the target file

$target = "http://www.schrenk.com/nostarch/webbots/hello_world.html";

$downloaded_page_array = file($target);

// Echo contents of file

for($xx=0; $xx<count($downloaded_page_array); $xx++)

echo $downloaded_page_array[$xx];

?>

Listing 3-2: Downloading files with file()

The file() function is particularly useful for downloading comma-separated value (CSV) files, in which each line of text represents a row of data with columnar formatting (as in an Excel spreadsheet). Loading files line-by-line into an array, however, is not particularly useful when downloading HTML files because the data in a web page is not defined by rows or columns; in a CSV file, however, rows and columns have specific meaning.

[12] See Chapter 23 for more information on executing webbots as scheduled events.

Introducing PHP/CURL

While PHP is capable when it comes to simple file downloads, most real-life applications require additional functionality to handle advanced issues such as form submission, authentication, redirection, and so on. These functions are difficult to facilitate with PHP's built-in functions alone. Therefore, most of this book's examples use PHP/CURL to download files.

The open source cURL project is the product of Swedish developer Daniel Stenberg and a team of developers. The cURL library is available for use with nearly any computer language you can think of. When cURL is used with PHP, it's known as PHP/CURL.

The name cURL is either a blend of the words client and URL or an acronym for the words client URL Request Library—you decide. cURL does everything that PHP's built-in networking functions do and a lot more. Appendix A expands on cURL's features, but here's a quick overview of the things PHP/CURL can do for you, a webbot developer.

Multiple Transfer Protocols

Unlike the built-in PHP network functions, cURL supports multiple transfer protocols, including FTP, FTPS, HTTP, HTTPS, Gopher, Telnet, and LDAP. Of these protocols, the most important is probably HTTPS, which allows webbots to download from encrypted websites that employ the Secure Sockets Layer (SSL) protocol.

Form Submission

cURL provides easy ways for a webbot to emulate browser form submission to a server. cURL supports all of the standard methods, or form submission protocols, as you'll learn in Chapter 5.

Basic Authentication

cURL allows webbots to enter password-protected websites that use basic authentication. You've encountered authentication if you've seen this familiar gray box, shown in Figure 3-5, asking for your username and password. PHP/CURL makes it easy to write webbots that enter and use password-protected websites.

Figure 3-5. A basic authentication prompt

Cookies

Without cURL, it is difficult for webbots to read and write cookies, those small bits of data that websites use to create session variables that track your movement. Websites also use cookies to manage shopping carts and authenticate users. cURL makes it easy for your webbot to interpret the cookies that webservers send it; it also simplifies the process of showing webservers all the cookies your webbot has written. Chapter 21 and Chapter 22 have much more to say on the subject of webbots and cookies.

Redirection

Redirection occurs when a web browser looks for a file in one place, but the server tells it that the file has moved and that it should download it from another location. For example, the website www.company.com may use redirection to force browsers to go to www.company.com/spring_sale when a seasonal promotion is in place. Browsers handle redirections automatically, and cURL allows webbots to have the same functionality.

Agent Name Spoofing

Every time a webserver receives a file request, it stores the requesting agent's name in a log file called an access log file. This log file stores the time of access, the IP address of the requester, and the agent name, which identifies the type of program that requested the file. Generally, agent names identify the browser that the web surfer was using to view the website.

Some agent names that a server log file may record are shown in Listing 3-3. The first four names are browsers; the last is the Google spider.

Mozilla/5.0 (Windows; U; Windows NT 5.1; rv:1.7.6) Gecko/20050225 Firefox/1.0.1

Mozilla/4.0 (compatible; MSIE 5.0; Windows 2000) Opera 6.03 [en]

Mozilla/5.0 (compatible; Konqueror/3.1-rc3; i686 Linux; 20020515)

Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.1)

Googlebot/2.1 (+http://www.google.com/bot.html)

Listing 3-3: Agent names as seen in a file access log

A webbot using cURL can assume any appropriate (or inappropriate) agent name. For example, sometimes it is advantageous to identify your webbots, as Google does. Other times, it is better to make your webbot look like a browser. If you write webbots that use the LIB_http library (described later), your webbot's agent name will be Test Webbot. If you download a file from a webserver with PHP's fopen() or file() functions, your agent name will be the version of PHP installed on your computer.

Referer Management

cURL allows webbot developers to change the referer, which is the reference that servers use to detect which link the web surfer clicked. Sometimes webservers use the referer to verify that file requests are coming from the correct place. For example, a website might enforce a rule that prevents downloading of images unless the referring web page is also on the same webserver. This prohibits people from bandwidth stealing, or writing web pages using images on someone else's server. cURL allows a webbot to set the referer to an arbitrary value.

Socket Management

cURL also gives webbots the ability to recognize when a webserver isn't going to respond to a file request. This ability is vital because, without it, your webbot might hang (forever) waiting for a server response that will never happen. With cURL, you can specify how long a webbot will wait for a response from a server before it gives up and moves on.

Installing PHP/CURL

Since PHP/CURL is tightly integrated with PHP, installation should be unnecessary, or at worst, easy. You probably already have PHP/CURL on your computer; you just need to enable it in php.ini, the PHP configuration file. If you're using Linux, FreeBSD, OS X, or another Unix-based operating system, you may have to recompile your copy of Apache/PHP to enjoy the benefits of PHP/CURL. Installing PHP/CURL is similar to installing any other PHP library. If you need help, you should reference the PHP website (http://www.php.net) for the instructions for your particular operating system and PHP version.

LIB_http

Since PHP/CURL is very flexible and has many configurations, it is often handy to use it within a wrapper function, which simplifies the complexities of a code library into something easier to understand. For your convenience, this book uses a library called LIB_http, which provides wrapper functions to the PHP/CURL features you'll use most. The remainder of this chapter describes the basic functions of the LIB_http library.

LIB_http is a collection of PHP/CURL routines that simplify downloading files. It contains defaults and abstractions that facilitate downloading files, managing cookies, and completing online forms. The name of the library refers to the HTTP protocol used by the library. Some of the reasons for using this library will not be evident until we cover its more advanced features. Even simple file downloads, however, are made easier and more robust with LIB_http because of PHP/CURL. The most recent version of LIB_http is available at this book's website.

Familiarizing Yourself with the Default Values

To simplify its use, LIB_http sets a series of default conditions for you, as described below:

- Your webbot's agent name is Test Webbot.

- Your webbot will time out if a file transfer doesn't complete within 25 seconds.

- Your webbot will store cookies in the file c:\ cookie.txt.

- Your webbot will automatically follow a maximum of four redirections, as directed by servers in HTTP headers.

- Your webbot will, if asked, tell the remote server that you do not have a local authentication certificate. (This is only important if you access a website employing SSL encryption, which is used to protect confidential information on e-commerce websites.)

These defaults are set at the beginning of the file. Feel free to change any of these settings to meet your specific needs.

Using LIB_http

The LIB_http library provides a set of wrapper functions that simplify complicated PHP/CURL interfaces. Each of these interfaces calls a common routine, http(), which performs the specified task, using the values passed to it by the wrapper interfaces. All functions in LIB_http share a similar format: A target and referring URL are passed, and an array is returned, containing the contents of the requested file, transfer status, and error conditions.

While LIB_http has many functions, we'll restrict our discussion to simply fetching files from the Internet using HTTP. The remaining features are described as needed throughout the book.

http_get()

The function http_get() downloads files with the GET method; it has many advantages over PHP's built-in functions for downloading files from the Internet. Not only is the interface simple, but this function offers all the previously described advantages of using PHP/CURL. The script in Listing 3-4 shows how files are downloaded with http_get().

# Usage: http_get()

array http_get (string target_url, string referring_url)

Listing 3-4: Using http_get()

These are the inputs for the script in Listing 3-4:

| target_url is the fully formed URL of the desired file |

| referring_url is the fully formed URL of the referer |

These are the outputs for the script in Listing 3-4:

| $array['FILE'] contains the contents of the requested file |

| $array['STATUS'] contains status information regarding the file transfer |

| $array['ERROR'] contains a textual description of any errors |

http_get_withheader()

When a web agent requests a file from the Web, the server returns the file contents, as discussed in the previous section, along with the HTTP header, which describes various properties related to a web page. Browsers and webbots rely on the HTTP header to determine what to do with the contents of the downloaded file.

The data that is included in the HTTP header varies from application to application, but it may define cookies, the size of the downloaded file, redirections, encryption details, or authentication directives. Since the information in the HTTP header is critical to properly using a network file, LIB_http configures cURL to automatically handle the more common header directives. Listing 3-5 shows how this function is used.

# Usage: http_get_withheader()

array http_get_withheader (string target_url, string referring_url)

Listing 3-5: Using http_get()

These are the inputs for the script in Listing 3-5:

| target_url is the fully formed URL of the desired file |

| referring_url is the fully formed URL of the referer |

These are the outputs for the script in Listing 3-5:

| $array['FILE'] contains the contents of the requested file, including the HTTP header |

| $array['STATUS'] contains status information about the file transfer |

| $array['ERROR'] contains a textual description of any errors |

The example in Listing 3-6 uses the http_get_withheader() function to download a file and display the contents of the returned array.

# Include http library

include("LIB_http.php");

# Define the target and referer web pages

$target = "http://www.schrenk.com/publications.php";

$ref = "http://www.schrenk.com";

# Request the header

$return_array = http_get_withheader($target, $ref);

# Display the header

echo "FILE CONTENTS \n";

var_dump($return_array['FILE']);

echo "ERRORS \n";

var_dump($return_array['ERROR']);

echo "STATUS \n";

var_dump($return_array['STATUS']);

Listing 3-6: Using http_get_withheader()

The script in Listing 3-6 downloads the page and displays the requested page, any errors, and a variety of status information related to the fetch and download.

Listing 3-7 shows what is produced when the script in Listing 3-6 is executed, with the array that includes the page header, error conditions, and status. Notice that the contents of the returned file are limited to only the HTTP header, because we requested only the header and not the entire page. Also, notice that the first line in a HTTP header is the HTTP code, which indicates the status of the request. An HTTP code of 200 tells us that the request was successful. The HTTP code also appears in the status array element.[13]

FILE CONTENTS

string(215) "HTTP/1.1 200 OK

Date: Sat, 08 Oct 2008 16:38:51 GMT

Server: Apache/2.0.53 (FreeBSD) mod_ssl/2.0.53 OpenSSL/0.9.7g PHP/4.4.0

X-Powered-By: PHP/4.4.0

Content-Type: text/html; charset=ISO-8859-1

"

ERRORS

string(0) ""

STATUS

array(20) {

["url"]=>

string(39) "http://www.schrenk.com/publications.php"

["content_type"]=>

string(29) "text/html; charset=ISO-8859-1"

["http_code"]=>

int(200)

["header_size"]=>

int(215)

["request_size"]=>

int(200)

["filetime"]=>

int(-1)

["ssl_verify_result"]=>

int(0)

["redirect_count"]=>

int(0)

["total_time"]=>

float(0.683)

["namelookup_time"]=>

float(0.005)

["connect_time"]=>

float(0.101)

["pretransfer_time"]=>

float(0.101)

["size_upload"]=>

float(0)

["size_download"]=>

float(0)

["speed_download"]=>

float(0)

["speed_upload"]=>

float(0)

["download_content_length"]=>

float(0)

["upload_content_length"]=>

float(0)

["starttransfer_time"]=>

float(0.683)

["redirect_time"]=>

float(0)

}

Listing 3-7: File contents, errors, and the download status array returned by LIB_http

The information returned in $array['STATUS'] is extraordinarily useful for learning how the fetch was conducted. Included in this array are values for download speed, access times, and file sizes—all valuable when writing diagnostic webbots that monitor the performance of a website.

Learning More About HTTP Headers

When a Content-Type line appears in an HTTP header, it defines the MIME, or the media type of file sent from the server. The MIME type tells the web agent what to do with the file. For example, the Content-Type in the previous example was text/html, which indicates that the file is a web page. Knowing if the file they just downloaded was an image or an HTML file helps browsers know if they should display the file as text or render an image. For example, the HTTP header information for a JPEG image is shown in Listing 3-8.

HTTP/1.1 200 OK

Date: Mon, 23 Mar 2009 00:06:13 GMT

Server: Apache/1.3.12 (Unix) mod_throttle/3.1.2 tomcat/1.0 PHP/4.0.3pl1

Last-Modified: Wed, 23 Jul 2008 18:03:29 GMT

ETag: "74db-9063-3d3eebf1"

Accept-Ranges: bytes

Content-Length: 36963

Content-Type: image/jpeg

Listing 3-8: An HTTP header for an image file request

Examining LIB_http's Source Code

Most webbots in this book will use the library LIB_http to download pages from the Internet. If you plan to explore any of the webbot examples that appear later in this book, you should obtain a copy of this library; the latest version is available for download at this book's website. We'll explore some of the defaults and functions of LIB_http here.

LIB_http Defaults

At the very beginning of the library is a set of defaults, as shown in Listing 3-9.

define("WEBBOT_NAME", "Test Webbot"); # How your webbot will appear in server

logs

define("CURL_TIMEOUT", 25); # Time (seconds) to wait for network

response

define("COOKIE_FILE", "c:\cookie.txt"); # Location of cookie file

Listing 3-9: LIB_http defaults

LIB_http Functions

The functions shown in Listing 3-10 are available within LIB_http. All of these functions return the array defined earlier, containing downloaded files, error messages, and the status of the file transfer.

http_get($target, $ref) # Simple get request (w/o header)

http_get_withheader($target, $ref) # Simple get request (w/ header)

http_get_form($target, $ref, $data_array) # Form (method ="GET", w/o

header)

http_get_form_withheader($target, $ref, $data_array) # Form (method ="GET", w/ header)

http_post_form($target, $ref, $data_array) # Form (method ="POST", w/o

header)

http_post_withheader($target, $ref, $data_array) # Form (method ="POST", w/

header)